A critical and challenging requirement for many organizations is meeting audit and compliance obligations. The goal of compliance is to secure business processes and sensitive data, and to monitor for unauthorized activity or breaches.

AWS CloudTrail Lake now enables customers to record user activity from any source — application, infrastructure, and platform including virtual machines and containers — into CloudTrail Lake, making this a single source for immutable storage and query of audit logs (AWS News Blog). CloudTrail Lake records events in a standardized schema making it easier for end users to consume the data and quickly respond to any security incident or audit request.

By providing North-South security, Kong Enterprise is the bridge between backend applications and the outside world. Because of this role, it is vital that Kong support the compliance efforts that directly affect business security. Now, with the launch of partner integrations for AWS CloudTrail Lake, Kong Enterprise audit logs can be published, stored, and queried together with AWS and non-AWS activity events within the AWS console.

How does the integration work?

Audit logging is a Kong Enterprise feature. When enabled on the Global Control Plane, both Request Audits and Database Audits are accessible through the Kong Admin API. Request Audits provide audit log entries for each HTTP request made to the Admin API. Database Audits provide audit log entries for each database creation, update, or deletion. More detailed information can be found in the Kong Enterprise Admin API Audit Log documentation.

For the Kong-CloudTrail integration, you first create a channel for Kong to deliver events to your event store. The channel should be located in the same AWS Region where the Kong Global Control Plane resides. See the documentation, Create an integration with an event source outside of AWS, for more information.

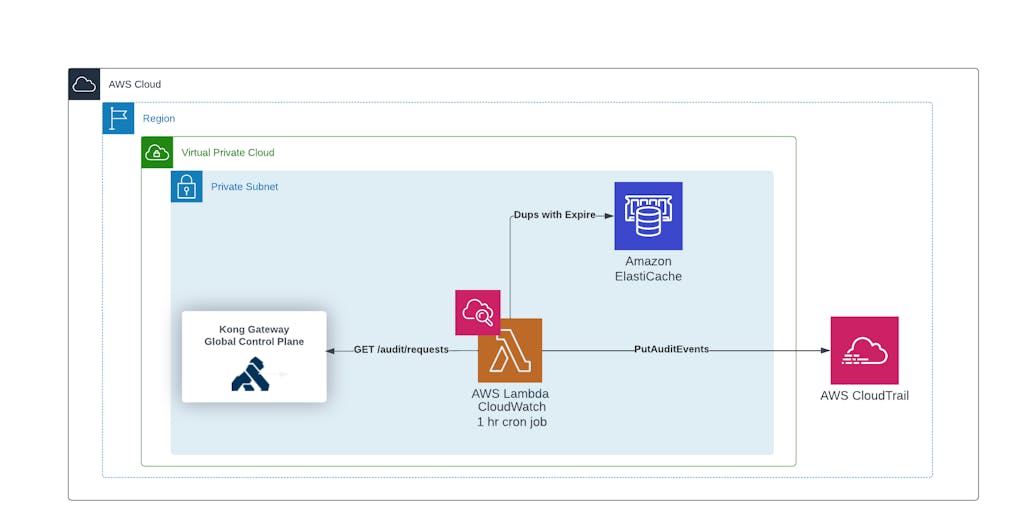

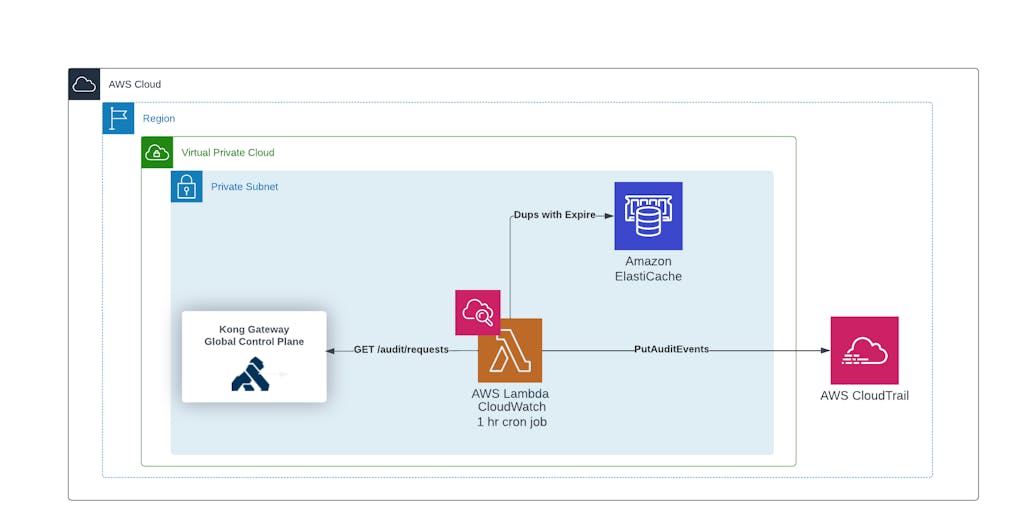

Once the channel has been created, the next step is to set up the additional infrastructure. This deploys a Lambda function and ElastiCache for Redis into the existing VPC where the Kong Global Control Plane resides. The Lambda function calls the /audit/requests endpoint to retrieve Request Audit Log entries. Duplicates are then removed by checking each audit log entry against the keys already recorded in Redis, and only the new entries are submitted to CloudTrail Lake. Each audit log entry in Kong has a defined TTL. When the TTL is reached, the entry is deleted from Kong and its key likewise expires in Redis. Finally, AWS CloudWatch is used to schedule the Lambda function so that it processes audit logs hourly.
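The de-duplication step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the integration's actual code: the `SeenKeys` class is a hypothetical in-memory stand-in for Redis, and the `request_id` and `ttl` field names are assumptions about the shape of an audit log entry.

```python
import time
from typing import Any, Callable, Dict, Iterable, List


class SeenKeys:
    """Hypothetical in-memory stand-in for Redis: remembers a key until its TTL expires."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        self._expires_at: Dict[str, float] = {}

    def add_if_new(self, key: str, ttl_seconds: float) -> bool:
        """Store the key and return True if it is unseen or expired; otherwise return False."""
        now = self._clock()
        expiry = self._expires_at.get(key)
        if expiry is not None and expiry > now:
            return False  # still remembered, so this entry is a duplicate
        self._expires_at[key] = now + ttl_seconds
        return True


def filter_new_entries(entries: Iterable[Dict[str, Any]], seen: SeenKeys) -> List[Dict[str, Any]]:
    """Keep only the audit log entries whose request_id has not been recorded yet."""
    fresh = []
    for entry in entries:
        # Assumed fields: "request_id" identifies the entry, "ttl" is its remaining lifetime in seconds.
        if seen.add_if_new(entry["request_id"], entry["ttl"]):
            fresh.append(entry)
    return fresh
```

Because each key is stored with the same TTL as its audit log entry, the record of what has been seen shrinks at the same pace as the entries disappear from Kong, so the store does not grow without bound.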

Kong CloudTrail Lake integration deployment

At a high level, the requirements for kicking off this integration are:

- Create a channel for Kong that delivers to your event store, and note its ARN. The channel should be created in the same AWS Region where the Kong Global Control Plane exists. For more information, refer to the documentation, Create an integration with an event source outside of AWS.

- Enable and configure audit logs on the Kong Enterprise Control Plane.

- Be able to build out the additional AWS infrastructure with the Terraform script provided by Kong.

Enable and configure audit logging on the Kong Global Control Plane

Audit logging is disabled by default. It is configurable via the Kong configuration (e.g. kong.conf):

```bash
audit_log = on # audit logging is enabled
```

This can generate more audit log entries than you are interested in, so it may be desirable to ignore certain requests. To this end, Kong provides the audit_log_ignore_methods and audit_log_ignore_paths configuration options.

For more information on audit log configuration, refer to the documentation, Kong Gateway Admin API Audit Logging.

Deploy Kong CloudTrail Lake infrastructure

With the channel created and audit logging enabled on Kong Enterprise, you are ready to deploy the additional AWS components to complete the integration.

Set up the Terraform tfvars

Here is a brief overview of the required and optional parameters for the Terraform tfvars file. A more detailed description can be found in the Kong CloudTrail Integration GitHub documentation.

existing_vpc = "vpc-uzcrqlyml0mdejmduvy"

existing_subnet_ids = ["subnet-zmuavkc6xnatd7cd1bm", "subnet-7n4ae9ua3uhjw5dhgzx", "subnet-kan0csgez5lwh5ancl0"]

security_group = "kong-ct-sg"

lambda_env = {

KONG_ADMIN_API = "https://ec2-5-531-26-7.compute-1.amazonaws.com:8444"

KONG_SUPERADMIN = true

KONG_ADMIN_TOKEN = "test"

KONG_ROOT_CA = "-----BEGIN CERTIFICATE-----content-----END CERTIFICATE-----"

REDIS_DB = 0

CHANNEL_ARN = "arn:aws:cloudtrail:us-east-1:123456789651:channel/07441ab6-c4a1-4c8a-943d-a2f0c50c8a76"

}

channel_arn = "arn:aws:cloudtrail:us-east-1:123456789651:channel/07441ab6-c4a1-4c8a-943d-a2f0c50c8a76"

image = "kong/cloudtrails-integration:1.0.0"

resource_name = "kong-ct-integration"

- existing_vpc and existing_subnet_ids: required to deploy ElastiCache and the Lambda function.

- security_group: the name of the security group that will be created. It allows the Lambda function to reach Kong, Redis, and CloudTrail Lake.

- lambda_env: configurable environment variables on the Lambda function. Many are optional. For more details, review the documentation in GitHub.

- image: the kong/cloudtrails-integration image is publicly available in DockerHub.

- resource_name: the name applied to all provisioned resources.

With the tfvars file ready, the Terraform execution plan can be created and applied.

Deployment

Step 1 - Export AWS variables:

```bash
export AWS_ACCESS_KEY_ID=
export AWS_SECRET_ACCESS_KEY=
```

Step 2 - Navigate to terraform/ in this repo and spin up the additional infrastructure:

Verify the integration was successful

Below is a quick review of how to validate that the infrastructure was installed successfully and how to view the Kong audit logs in CloudTrail Lake.

AWS Infrastructure

- AWS Lambda - navigate to the AWS Lambda console, validate that a “kong-ct-integration” Lambda function exists, and review its environment variables.

- AWS CloudWatch - navigate to the AWS CloudWatch (or AWS EventBridge) console, open Rules, and validate that a “kong-ct-integration” rule exists. This rule schedules the Lambda function.

- AWS ElastiCache - navigate to the AWS ElastiCache console, open Redis Clusters, and validate that a “kong-ct-integration” cluster exists.

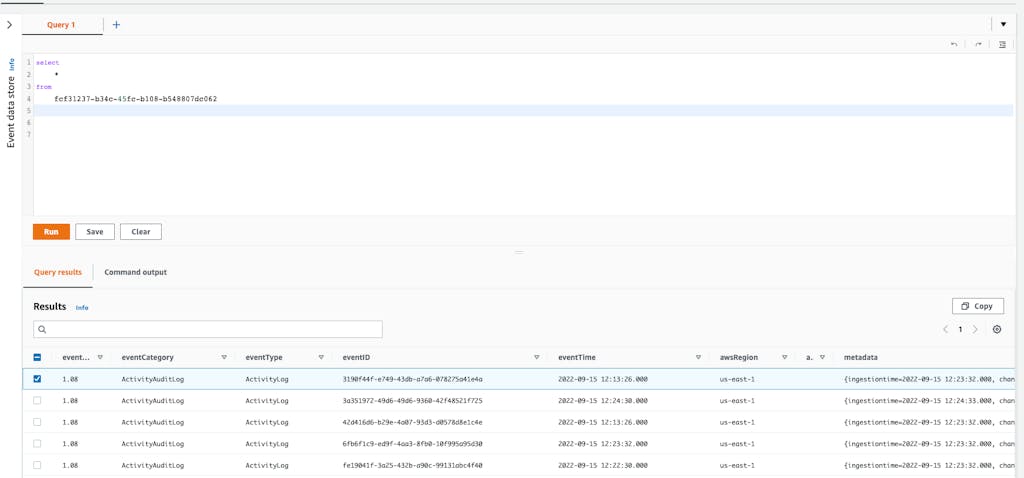

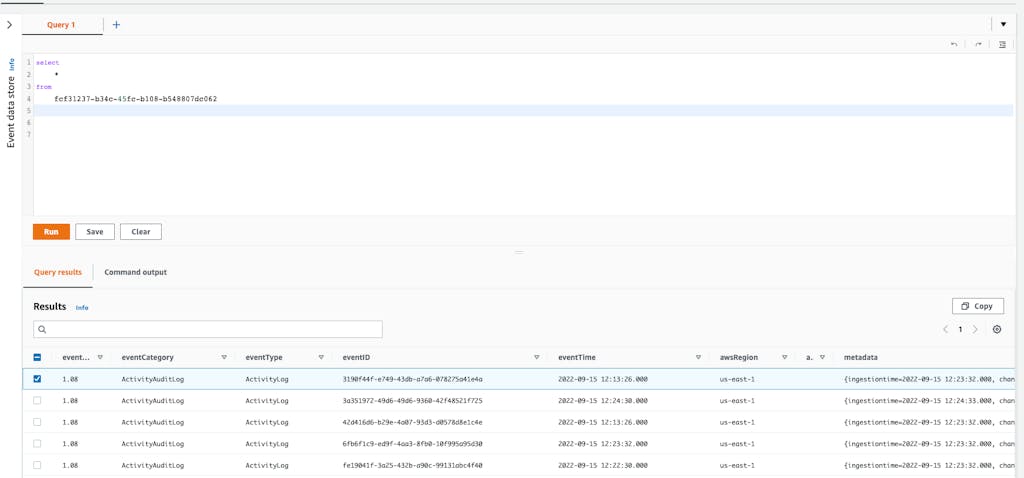

AWS CloudTrail Lake logs

With the infrastructure validated, the Lambda function will be triggered hourly by CloudWatch. Once the Lambda function has run, logs should be present in the CloudTrail event store. To view them, navigate to CloudTrail → Lake in the AWS console and use the Editor tab to query the logs in the event data store.

What data are we submitting to CloudTrail Lake?

Let's review the mapping of an audit log entry to eventData in the data store.

The Kong request API audit log entries provide the following information:

In the event store, the eventData field is populated with the data retrieved from Kong. Below is a sample of the Kong audit log transformed into the event data object. The important point is that nothing from the original audit log entry is lost: all of it is mapped to the eventData object.
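As a rough illustration of this kind of lossless mapping, the Python sketch below wraps an audit log entry in an event object and keeps the full original entry alongside the derived fields. It is not the integration's actual mapping. The entry field names (`request_id`, `request_timestamp`, `method`, `path`, `client_ip`, `rbac_user_id`) are assumptions about the audit log shape, the values `"kong-admin"` and `"kong-admin-api"` are hypothetical, and the top-level event field names are assumed to follow the CloudTrail Lake integration event schema, only a subset of which is filled in here. Check them against the AWS documentation before relying on them.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict


def to_event_data(entry: Dict[str, Any], account_id: str) -> str:
    """Build a JSON event string from one audit log entry without dropping any field."""
    # Assumed: request_timestamp is a Unix timestamp in seconds.
    event_time = datetime.fromtimestamp(entry["request_timestamp"], tz=timezone.utc)
    event = {
        "userIdentity": {
            "type": "kong-admin",  # hypothetical identity type
            "principalId": entry.get("rbac_user_id") or "unknown",
        },
        "eventSource": "kong-admin-api",  # hypothetical source label
        "eventName": f'{entry["method"]} {entry["path"]}',
        "eventTime": event_time.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "UID": entry["request_id"],
        "sourceIPAddress": entry.get("client_ip"),
        "recipientAccountId": account_id,
        # The complete original entry travels along, so nothing is lost.
        "additionalEventData": dict(entry),
    }
    return json.dumps(event)


# Usage with a made-up entry and a placeholder account ID:
sample = {
    "request_id": "req-1",
    "request_timestamp": 0,
    "method": "GET",
    "path": "/status",
    "client_ip": "127.0.0.1",
    "status": 200,
    "ttl": 3600,
}
print(to_event_data(sample, "123456789012"))
```

Copying the whole entry into one nested field is a simple way to guarantee that fields without an obvious top-level counterpart, such as the status code or TTL, still reach the event store.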

Conclusion

The objective of the Kong CloudTrail Lake integration is to simplify compliance efforts by hosting the Kong Gateway audit logs in AWS, alongside the rest of your AWS infrastructure and event activity. You can learn more about this integration at the GitHub repo, Kong CloudTrail Integration, and in the AWS CloudTrail documentation.

References