Developers will remember times when they were trying to figure out why something they were working on wasn’t behaving as expected. Hours of frustration, too much (or perhaps never enough) caffeine consumed, and sotto voce curses uttered. And then — as if by fate — the issue is narrowed down to a simple oversight that makes perfect sense upon discovery. Problem solved!

One of my favorite examples of this was with a colleague at my first job. We carefully reviewed our code over and over, and everything looked fine. But it wasn’t behaving as expected. We then noticed that none of our changes were taking effect, no matter what they were. It turned out we were forgetting to compile our code once we shipped it to the server for execution. Oops! “We’re geniuses,” he said. We had a good laugh.

Kong Gateway, the world's most popular API gateway, is sure to frustrate some developers here and there. It’s software, and software is never perfect. In this guide, we’ll go through some methods I use when helping others figure out what went wrong.

1. Look at the headers, and use the Kong-Debug: 1 header when appropriate

When you make a call to Kong, it gives you information about your request. Let’s assume you made one, but it is not giving the expected result. In this example, we have httpie installed.

We are getting a 200 OK, but the output is not what we expected. We see a latency of 2 ms for Kong and 6 ms for the upstream, so Kong is able to proxy the request. If the ‘X-Kong-Upstream-Latency’ header were zero or missing, we would know the request never made it to the upstream.
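The latency check above can be sketched as a small helper. This is only an illustration: it assumes you already have the response headers in a dictionary, and it assumes the 2 ms figure comes from the X-Kong-Proxy-Latency header, which the text does not name. The function names are made up for this example.

```python
from typing import Mapping, Optional


def header_ms(headers: Mapping[str, str], name: str) -> Optional[int]:
    """Return a latency header as whole milliseconds, or None if absent or malformed."""
    wanted = name.lower()
    for key, value in headers.items():
        # HTTP header names are case-insensitive, so compare in lowercase.
        if key.lower() == wanted:
            try:
                return int(value.strip())
            except ValueError:
                return None
    return None


def reached_upstream(headers: Mapping[str, str]) -> bool:
    """Apply the rule from the text: a zero or missing upstream latency
    means the request never made it to the upstream."""
    upstream = header_ms(headers, "X-Kong-Upstream-Latency")
    return upstream is not None and upstream > 0
```

For the response described above, `reached_upstream({"X-Kong-Proxy-Latency": "2", "X-Kong-Upstream-Latency": "6"})` returns `True`.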

Let’s get more details. Are we going to the right upstream?

We have more information. We now know the upstream service, and the route. Hmm . . . the route and service aren’t correct. Why’s that? Let’s see:
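If you do this check often, it is easy to script. The sketch below assumes the debug response carries headers named Kong-Route-Id, Kong-Route-Name, Kong-Service-Id and Kong-Service-Name. That is my understanding of what Kong-Debug: 1 returns, so verify it against your version. The route and service names in the usage note are hypothetical.

```python
from typing import Dict, Mapping

# Assumed names of the headers added to a response when Kong-Debug: 1 is sent.
DEBUG_HEADERS = (
    "Kong-Route-Id",
    "Kong-Route-Name",
    "Kong-Service-Id",
    "Kong-Service-Name",
)


def matched_entities(headers: Mapping[str, str]) -> Dict[str, str]:
    """Pick the debug headers out of a response, ignoring header-name case."""
    lowered = {key.lower(): value for key, value in headers.items()}
    return {
        name: lowered[name.lower()]
        for name in DEBUG_HEADERS
        if name.lower() in lowered
    }


def is_expected(headers: Mapping[str, str], route: str, service: str) -> bool:
    """True when the request was matched by the route and service we intended."""
    found = matched_entities(headers)
    return (
        found.get("Kong-Route-Name") == route
        and found.get("Kong-Service-Name") == service
    )
```

Calling `is_expected(response_headers, "products-route", "products-service")` with those made-up names gives a quick yes or no before you start reading configuration.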

Aha! One of our fellow developers is having a little fun. They created a route that will catch anything in the request path that begins with the letter ‘p’.

They did exactly what the documentation warned about. Fortunately, we figured this out thanks to the Kong-Debug: 1 header. This header will likely be disabled in production environments (as it should be) via a global response transformer plugin.
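To spot overly greedy routes like this one before they bite, you can list every route whose path prefix would catch a given request path. The sketch below is not Kong’s router: it ignores regex paths, hosts, methods and priorities, and only does plain prefix matching on route objects shaped like the ones the Admin API returns. The route names and paths in the usage note are hypothetical.

```python
from typing import Dict, List, Tuple


def competing_routes(routes: List[Dict], request_path: str) -> List[Tuple[str, str]]:
    """Return (route name, matching prefix) pairs, longest prefix first.

    Simplified on purpose: plain string prefixes only, no regex, hosts or methods.
    """
    hits = []
    for route in routes:
        for prefix in route.get("paths") or []:
            if request_path.startswith(prefix):
                hits.append((route["name"], prefix))
    # Longest prefix first, so the most specific candidate is at the top.
    return sorted(hits, key=lambda hit: len(hit[1]), reverse=True)
```

With routes on `/products` and `/p`, asking about `/payments/1` returns only the `/p` route, which is a strong hint that a short prefix is swallowing traffic it was never meant to see.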

2. Whose plugin is it, anyway?

Well, this is strange. All of a sudden a route that has been working fine is giving us a 503.

Let's get more detail with the debug header.

Both the service and route are correct. But why is there no upstream latency? Is Kong terminating or failing on the request? Let's check.

And there are also no plugins on the route! Is there a global plugin perhaps? Kong Manager lets us find them from the UI on the plugins tab. Kong Manager is available in Kong Free Mode as well as Kong Enterprise. But we can also use the Admin API from the shell in a pinch. We use jq in this command.

Aha! Someone created a global request termination plugin. We’re being sabotaged! No, not really. Perhaps it was an honest mistake.
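The same filter is easy to write in a script. The sketch below assumes you have saved the body of a GET /plugins call to the Admin API, and it treats a plugin as global when its route, service and consumer fields are all null. Newer versions may have extra scoping fields (consumer groups, for example), so take this as a starting point. The function name is made up for this example.

```python
import json
from typing import Dict, List


def global_plugins(plugins_body: str) -> List[Dict]:
    """Return the plugins from a GET /plugins response body that are not
    scoped to any route, service or consumer."""
    plugins = json.loads(plugins_body).get("data", [])
    return [
        plugin
        for plugin in plugins
        if plugin.get("route") is None
        and plugin.get("service") is None
        and plugin.get("consumer") is None
    ]
```

Printing the `name` and `enabled` fields of each result is usually enough to spot an unexpected request-termination plugin.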

Not only should we pay attention to what plugins are running, but also what order they run in. Plugins follow an execution order, and do work in different phases. This concept is covered in depth here. It’s possible to see a visualization of each route and the plugins that run on it. KongMap is a nifty tool for this purpose. Also note that Kong allows us to change the order of execution of the plugins, so be sure to check if this has been altered as well.
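As a rough mental model of the default ordering, each plugin has a priority, and within a phase the higher priority runs first. The sketch below just sorts by that number. The plugin names and priority values in the usage note are placeholders, not Kong’s real ones, and it does not account for any custom ordering you may have configured.

```python
from typing import Dict, List


def execution_order(priorities: Dict[str, int]) -> List[str]:
    """Sort plugin names so the highest priority comes first,
    which is the default order within a phase."""
    return sorted(priorities, key=lambda name: priorities[name], reverse=True)
```

For example, `execution_order({"plugin-a": 10, "plugin-b": 1000, "plugin-c": 500})` puts `plugin-b` first and `plugin-a` last. Look up the real priorities for your version in the plugin documentation before drawing conclusions.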

3. Get a higher view

Sometimes, after double- and triple-checking all settings, we’re still not seeing the expected behavior. We’ve tailed both access.log and error.log for hints and found nothing. At that point, it helps to get a view of the entire environment.

Here’s a fun example I was involved in not too long ago. We configured the mTLS plugin, but no matter what we did, we weren’t able to get our consumer identified and validated. Here’s a visualization of what we were trying to do.

It’s never this simple, is it? Kong was running in the cloud, which means we needed a load balancer to expose it externally. And sure enough, it was an application load balancer, which does the TLS termination.

Of course, Kong wasn’t getting the client's certificate. The ALB didn’t pass it along.

If you get stuck, try to draw an image of the components you’re working with. Draw it in your mind, on paper, or using your favorite application. You might find that you uncover areas that need further checking.

4. Is that feature there?

Here’s a small one: you read the docs, and you swear that you configured the feature properly. No matter what you do, the behavior isn’t what you expected! Let’s back up just a little. What version of Kong are you running?

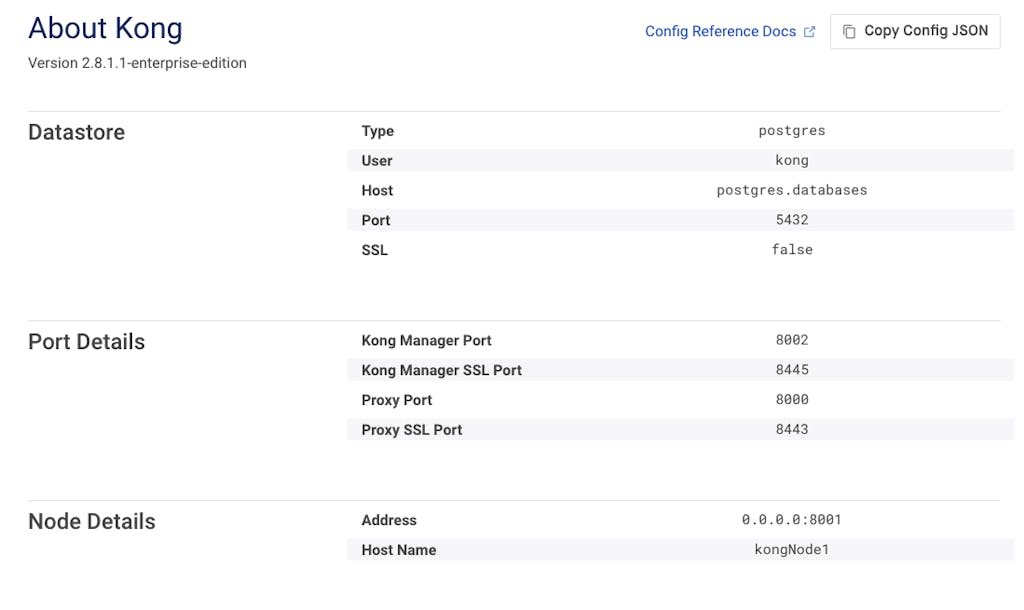

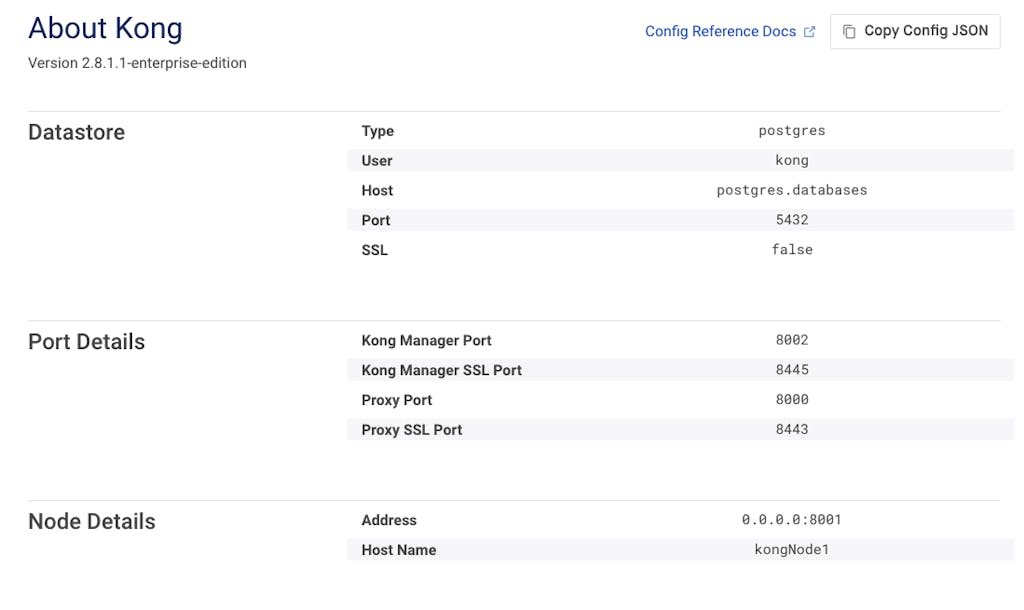

In Kong Manager, mentioned earlier, go to http://managerhost:port/info and you’ll get a screen like this:

As you can see in the Release Notes, Kong is an active project with many releases introducing new features. Be sure you’re running the latest version to get access to all the features you see in the docs.
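You can also check the version from a script. The root endpoint of the Admin API returns a JSON document with a version field, and the sketch below compares that against the minimum version a feature needs. The helper names are made up for this example, and the version strings in the usage note are only illustrations.

```python
import json
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '3.4.1' or '3.4.1.0-enterprise-edition' into a tuple of integers."""
    core = version.split("-", 1)[0]  # drop any edition suffix
    return tuple(int(part) for part in core.split("."))


def supports(admin_root_body: str, minimum: str) -> bool:
    """True when the version reported by the Admin API root is at least `minimum`."""
    running = parse_version(json.loads(admin_root_body)["version"])
    needed = parse_version(minimum)
    # Pad with zeros so that '3.4' and '3.4.0.0' compare as equal.
    width = max(len(running), len(needed))
    running += (0,) * (width - len(running))
    needed += (0,) * (width - len(needed))
    return running >= needed
```

`supports('{"version": "2.8.1"}', "3.0")` returns `False`, which would explain why a feature from the 3.x docs seems to do nothing.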

Also, make sure to familiarize yourself with any breaking changes. These are rare, and may or may not apply to you, so be sure to scan a changelog to know if you need to make any alterations. It may save you hours of troubleshooting later.

5. Get granular

Kong is blazing fast and scalable. But somehow we’re getting a 70 ms response time! The application teams aren’t happy with us. We aren’t getting any alerts that Kong has saturated the hardware we give it. So what’s going on here?

It’s time to turn on granular tracing to find out. Once enabled, we can generate detailed traces about a particular request. Let’s add these settings to our deployment.

Note: This feature is deprecated, but it will be available at least until Kong 4.x. If you’re reading this article and the feature is no longer available, please use OpenTelemetry (OTEL) instead.

We now pass a header, gimmieDatTrace, as part of a debug request. Then we have a look at the /tmp/trace.log file. Aha! The trace section shows a Lambda plugin taking 68 ms. Of course, this isn’t within the Gateway's latency. The route in question is a serviceless one. The Lambda function is being called synchronously.
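Once you have read the trace, the arithmetic is simple enough to script. The sketch below does not parse Kong’s trace file. It assumes you have already copied the span names and durations out of /tmp/trace.log by hand, and it reports the slowest span and how much of the total is left over. Apart from the 68 ms Lambda call and the 70 ms total from the text, the span names and numbers in the usage note are hypothetical.

```python
from typing import List, Tuple


def slowest_span(spans: List[Tuple[str, int]], total_ms: int) -> Tuple[str, int, int]:
    """Return (name, duration in ms, time left over) for the slowest span.

    `spans` holds (name, milliseconds) pairs transcribed from the trace by hand.
    """
    name, duration = max(spans, key=lambda span: span[1])
    return name, duration, total_ms - duration
```

`slowest_span([("router", 1), ("lambda plugin", 68), ("everything else", 1)], 70)` returns `("lambda plugin", 68, 2)`: almost all of the response time is spent waiting on the Lambda function.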

So the time is being spent in the Lambda call, not in Kong. We can point the application team to the third suggestion in this article: get a higher view.

OTEL is another option, though it needs an OTEL destination to send traces to. It has a further advantage: it lets us write our own tracers if we need to. This is a more complex approach and is documented here.

6. There’s a Knowledge Center?

Yes, there is a Knowledge Center.

Let’s see if it works. There are over 650 articles as of the time this was published. We want to log additional fields into our ELK stack. Let’s search for ‘logging’. And it’s the first hit. (It’s a good day already.)

As per the article, we can log requests, responses, custom headers, and fields. In fact, someone already used this feature with a logging filter:

https://github.com/StuAtKong/kong-plugin-log-filter

7. Get help!

Kong is an active project with a large and supportive community. Kong Nation is a good place to search for issues and ask questions.

Kong Academy is another good resource and has some courses that cover quite a few areas. Best of all, it is free.

If you’re using Kong Enterprise, you have access to Kong's support team, who in turn have access to Kong's engineering teams.

Conclusion

We sometimes wonder if software is deterministic. Given that it’s digital, it must be, right? If a behavior is repeatable under certain conditions, then it’s fixable. It’s only a matter of getting the necessary details.

We hope this short guide helps you in your Kong projects and helps you reclaim some time solving puzzles — fun as they may be.