Security best practices remain a top priority for enterprises, especially as high-profile hacks and cybersecurity breaches raise the stakes. According to the 2022 Morgan Stanley CIO survey, growth in IT spending is expected to reach 4.4%, with cloud computing and security software as the leading spending categories. This rapid digital transformation across sectors presents organizations with opportunities, along with some new challenges.

One notable trend in digital transformation is the shift from a monolithic architecture to microservices.

Microservice-based architecture provides several benefits, but it also presents unique security challenges. Traditional cybersecurity models that focus on perimeter defense don't translate well to microservices, whose many independently deployed containers widen the attack surface. Once an attacker compromises one microservice inside the perimeter, a perimeter-focused model does little to protect the other services on the network. Instead, enterprises need to embrace defense in depth strategies to secure their applications.

In this article, we'll learn what defense in depth is, and we'll see how it applies to microservices. Along the way, we'll also introduce some tools to help. This guide will focus specifically on containerized microservices running on cloud-hosted Kubernetes clusters.

What is defense in depth (DiD)?

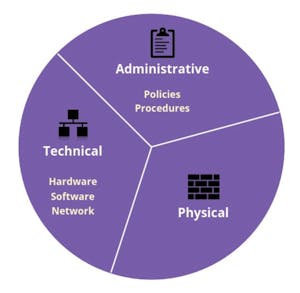

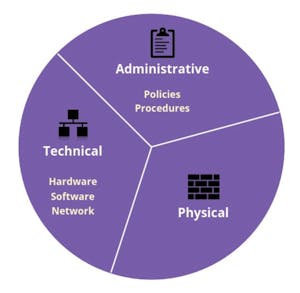

Defense in depth is a cybersecurity strategy, adapted from military doctrine and promoted by the National Security Agency (NSA), that layers multiple levels of protection. The premise is that deploying several independent controls, each forming its own layer, reduces the chance that an attack succeeds: an attacker who gets past one control still has to defeat the others. Defense in depth controls fall into three key categories.
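A quick back-of-the-envelope calculation shows why independent layers matter. The Python sketch below assumes that each layer is bypassed independently with a known probability. The layer names and numbers are hypothetical, chosen only for illustration. Under that assumption, an attacker must defeat every layer, so the overall breach probability is the product of the per-layer bypass probabilities.

```python
from math import prod


def breach_probability(bypass_probabilities: list[float]) -> float:
    """Chance that an attacker gets through every layer.

    Assumes the layers fail independently, which real controls often do not.
    """
    for p in bypass_probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"not a probability: {p}")
    return prod(bypass_probabilities)


# Hypothetical per-layer bypass probabilities (illustrative, not measured).
layers = {"firewall": 0.10, "authentication": 0.20, "host IDS": 0.50}

for name, p in layers.items():
    print(f"{name} alone: {p:.0%} of attacks get through")
print(f"all layers: {breach_probability(list(layers.values())):.1%} get through")
```

With these made-up rates, no single layer stops more than 90% of attempts, yet together the layers let only about 1% through. Independence is the weak point of this model. If a single stolen credential bypasses several layers at once, the real figure is much closer to that of one layer.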

1. Physical

This category focuses on securing physical access to IT systems. It includes preventive measures such as fences and security guards around sensitive data centers, as well as environmental safeguards such as temperature controls and uninterruptible power supplies (UPS). Most enterprises running their systems in the cloud can offload this responsibility to cloud providers or FISMA-compliant data centers.

2. Administrative

Administrative controls focus on policies and procedures such as:

- The principle of least privilege

- Role-based access control (RBAC)

- Attribute-based access control (ABAC)

- Identity and access management (IAM)

- Strong password policies
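The sketch below shows the core of role-based access control combined with the principle of least privilege. Permissions attach to roles, users are bound to roles, and anything not explicitly granted is denied. The roles, users, and resources are hypothetical. This is plain Python illustrating the idea, not the Kubernetes RBAC API or any cloud provider's IAM API.

```python
# Hypothetical roles, each granting only the (verb, resource) pairs it needs.
ROLE_PERMISSIONS: dict[str, frozenset[tuple[str, str]]] = {
    "viewer": frozenset({("get", "orders"), ("list", "orders")}),
    "editor": frozenset({("get", "orders"), ("list", "orders"), ("update", "orders")}),
}

# Hypothetical bindings of users to roles.
USER_ROLES: dict[str, frozenset[str]] = {
    "alice": frozenset({"viewer"}),
    "bob": frozenset({"editor"}),
}


def is_allowed(user: str, verb: str, resource: str) -> bool:
    """Default deny: allow only if a role bound to the user grants the action."""
    return any(
        (verb, resource) in ROLE_PERMISSIONS.get(role, frozenset())
        for role in USER_ROLES.get(user, frozenset())
    )


print(is_allowed("alice", "get", "orders"))     # True
print(is_allowed("alice", "update", "orders"))  # False: not granted to viewer
print(is_allowed("mallory", "get", "orders"))   # False: no role binding
```

Attribute-based access control follows the same shape. It replaces the fixed role lookup with a check on attributes of the user, resource, or request.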

Strong security teams also run threat modeling exercises to identify vulnerable areas.

3. Technical

Technical controls cover both hardware and software systems. This includes the use of:

- Encryption, using hardware security modules (HSMs) to protect keys or Transport Layer Security (TLS) to protect data in transit

- Firewalls, such as web application firewalls (WAF)

- Network and host intrusion detection and prevention systems (IDS/IPS)

- API gateways

Technical controls can also include higher-level activities, such as threat detection using machine learning, as well as logging and monitoring practices.
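As a small example of the encryption control listed above, the following Python sketch uses the standard library's ssl module to build a client-side TLS context. The context verifies server certificates and hostnames and refuses protocol versions older than TLS 1.2. The TLS 1.2 floor is an assumed baseline; your own policy may require TLS 1.3.

```python
import socket
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """TLS client context with certificate and hostname verification."""
    # create_default_context() loads the system trust store and enables
    # certificate verification and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse legacy protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def fetch_peer_certificate_subject(host: str, port: int = 443) -> tuple:
    """Open a verified TLS connection and return the server certificate's subject."""
    context = make_client_tls_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        # server_hostname enables SNI and the hostname check.
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["subject"]
```

If the server's certificate is untrusted or does not match the hostname, wrap_socket raises an ssl.SSLCertVerificationError instead of silently continuing.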

All three categories are important for a robust defense in depth strategy.

In this article, we’ll focus mainly on technical controls for microservices running on Kubernetes-based architecture in the cloud.

Defense in depth with microservices

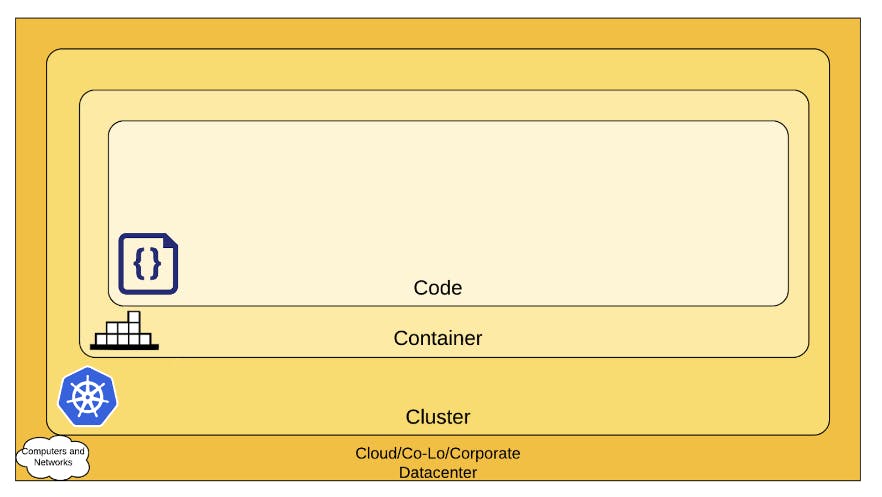

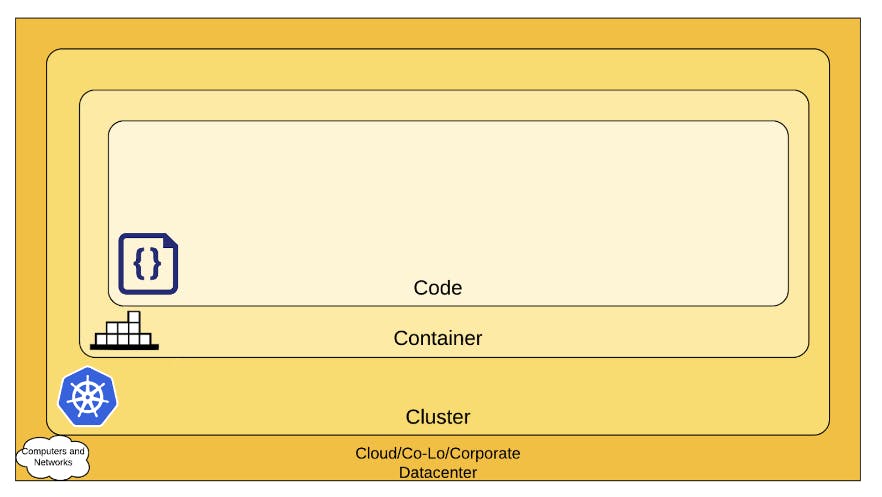

Compared with traditional monolithic applications running in company-managed data centers, microservices running on Kubernetes in the cloud have a far larger attack surface. A common way to reason about that attack surface is the 4C's of Cloud Native Security (Cloud, Cluster, Container, and Code). This layered model is described in the Kubernetes documentation, and in it each layer builds on the security of the layer outside it.

1. Cloud

This outermost layer protects the cloud account and the services running in it, reducing the risk that either is compromised. Protection at this level includes administrative controls such as:

- Splitting cloud accounts based on environment, project, or role

- Configuring IAM policies following the principle of least privilege

Secure each account with multi-factor authentication (MFA), preferably using FIDO2-compliant authenticators, which resist phishing attacks. Securing each cloud service may involve setting up load balancers, network controls (such as network ACLs and firewalls), and encryption backed by a key management service or HSM.
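The Python sketch below uses AWS as one example provider. It builds an IAM policy document that applies both ideas from this section: least privilege (read-only access to objects in a single bucket) and a requirement that the caller authenticated with MFA. The bucket name is hypothetical. Other clouds express the same intent in their own policy languages.

```python
import json


def least_privilege_read_policy(bucket: str) -> dict:
    """IAM policy: read objects in one bucket, only for MFA-authenticated callers."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOnlyObjectsWithMFA",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],                  # read only, no write/delete
                "Resource": f"arn:aws:s3:::{bucket}/*",      # objects in this bucket only
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


print(json.dumps(least_privilege_read_policy("example-reports-bucket"), indent=2))
```

Requests signed with long-term access keys do not carry the aws:MultiFactorAuthPresent key. As a result, the Allow statement does not match them, and those requests fall through to the implicit deny.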

2. Cluster

Once you've secured the cloud account, the next priority is securing the Kubernetes cluster. Most cloud providers offer managed Kubernetes services in which the provider secures the control plane. Even so, cluster administrators should harden the cluster according to the security guidelines in the CIS Kubernetes Benchmark. To start, enable encryption at rest for Secrets and audit logging, which are disabled by default on most Kubernetes distributions.
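On a self-managed control plane, encryption at rest is configured with an EncryptionConfiguration file, which is passed to the API server through the --encryption-provider-config flag. Managed services usually expose the same capability as a KMS-based setting instead. The Python sketch below generates such a file for Secrets, using the secretbox provider with a freshly generated 32-byte key. The key name is an assumption. In practice, store the key securely (or prefer a KMS provider) rather than printing it.

```python
import base64
import json
import os


def encryption_config(key_name: str = "key1") -> dict:
    """EncryptionConfiguration that encrypts Secrets at rest with secretbox.

    The first provider encrypts new writes. "identity" (no encryption) stays
    last so Secrets written before encryption was enabled remain readable.
    """
    key = base64.b64encode(os.urandom(32)).decode("ascii")  # secretbox needs 32 bytes
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    {"secretbox": {"keys": [{"name": key_name, "secret": key}]}},
                    {"identity": {}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # JSON is valid YAML; save with restrictive permissions, since it holds the key.
    print(json.dumps(encryption_config(), indent=2))
```

Existing Secrets stay unencrypted until they are rewritten, for example by re-applying them after the API server picks up the new configuration.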

Beyond the initial setup, Kubernetes administrators must also configure network policies and admission controllers, which limit the risk of unauthorized communication and privilege escalation. Open-source tools are available to simplify this setup. For example, some tools can inject default security contexts into pods and block exec access to running pods, either per namespace or cluster-wide, to prevent privilege escalation.
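A common starting point for network policies is a per-namespace default-deny policy. Narrower policies then allow only the traffic each service needs. The Python sketch below emits such a NetworkPolicy as JSON, which kubectl apply -f accepts just as it accepts YAML. The namespace name is hypothetical. The policy is enforced only if the cluster's network plugin supports NetworkPolicy.

```python
import json


def default_deny_policy(namespace: str) -> dict:
    """NetworkPolicy selecting every pod in the namespace and allowing no traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},                     # empty selector: all pods
            "policyTypes": ["Ingress", "Egress"],  # no rules listed: deny both
        },
    }


print(json.dumps(default_deny_policy("payments"), indent=2))
```

Denying all egress also blocks DNS lookups. In practice, you would pair this with a policy that allows traffic to the cluster DNS service.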

Finally, you need to protect the operating system of the underlying nodes. To do this, choose a minimal, container-optimized OS (such as Bottlerocket on AWS or Container-Optimized OS on GKE) instead of a general-purpose Linux distribution with a larger attack surface.

3. Container

After securing the cloud and cluster, the next layer is the container. Use multi-stage builds so the final image includes only the components the application needs at runtime, which reduces both image size and attack surface.

If you're using a distro-based container image, use the corresponding CIS Benchmark to harden the image. Alternatively, if you want to include only the necessary binaries, use a distroless or scratch image. To further secure the container, you can apply Linux kernel security features (such as SELinux, AppArmor, or seccomp) through the securityContext section of the pod definition.
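The Python sketch below pairs a hardened container securityContext with an admission-style check that flags pods missing those settings. The field names follow the Kubernetes Pod API. The checks are loosely modeled on the Pod Security Standards "restricted" profile and are not a reimplementation of it. The pod name and image are hypothetical.

```python
# Hardened container-level securityContext (Kubernetes Pod API field names).
HARDENED_SECURITY_CONTEXT = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
    "capabilities": {"drop": ["ALL"]},
    "seccompProfile": {"type": "RuntimeDefault"},  # container runtime's default filter
}


def pod_manifest(name: str, image: str) -> dict:
    """Pod manifest whose single container uses the hardened securityContext."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "securityContext": dict(HARDENED_SECURITY_CONTEXT),
                }
            ]
        },
    }


def violations(pod: dict) -> list[str]:
    """Admission-style check over container-level settings only.

    Simplification: Kubernetes also accepts runAsNonRoot and seccompProfile
    at the pod level, which this sketch ignores.
    """
    problems = []
    for c in pod["spec"].get("containers", []):
        sc = c.get("securityContext", {})
        name = c["name"]
        if sc.get("privileged"):
            problems.append(f"{name}: privileged containers are not allowed")
        if sc.get("allowPrivilegeEscalation") is not False:
            problems.append(f"{name}: allowPrivilegeEscalation must be false")
        if sc.get("runAsNonRoot") is not True:
            problems.append(f"{name}: runAsNonRoot must be true")
        if sc.get("seccompProfile", {}).get("type") not in ("RuntimeDefault", "Localhost"):
            problems.append(f"{name}: a seccomp profile is required")
    return problems


print(violations(pod_manifest("orders", "registry.example.com/orders:1.0")))  # []
```

A read-only root filesystem can break applications that write to local disk. Mounting an emptyDir volume at the paths they need is the usual workaround.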

At runtime, you can use host intrusion detection systems that monitor Linux syscalls and alert you when they detect a threat. Some of these systems also offer enforcement capabilities that go beyond alerting to help contain compromised workloads.
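The sketch below illustrates the kind of rule such systems evaluate. It matches simplified syscall events against two hypothetical rules: a shell starting inside a container, and a process opening a sensitive file. The event format and rules are assumptions made for this example. Real tools collect events from the kernel (for example, with eBPF) and use their own rule languages.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SyscallEvent:
    """Hypothetical, simplified event as a runtime sensor might report it."""
    container_id: str
    syscall: str    # e.g. "execve", "openat"
    process: str    # name of the executable involved
    path: str = ""  # file path, for open-style syscalls


SHELLS = frozenset({"sh", "bash", "dash", "zsh"})
SENSITIVE_PATHS = ("/etc/shadow", "/root/.ssh/")


def detect(event: SyscallEvent) -> Optional[str]:
    """Return an alert message if the event matches a rule, otherwise None."""
    if event.syscall == "execve" and event.process in SHELLS:
        return f"shell {event.process!r} started in container {event.container_id}"
    if event.syscall in ("open", "openat") and event.path.startswith(SENSITIVE_PATHS):
        return f"{event.process!r} opened {event.path} in container {event.container_id}"
    return None


events = [
    SyscallEvent("c1", "execve", "python"),
    SyscallEvent("c1", "execve", "bash"),
    SyscallEvent("c2", "openat", "python", "/etc/shadow"),
]
for e in events:
    alert = detect(e)
    if alert:
        print("ALERT:", alert)
```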

4. Code

Finally, you need to ensure the code itself is free of vulnerabilities. This includes:

- Scanning the code for security vulnerabilities

- Securing the supply chain of tools and dependencies used to build containers from the application code

Protecting your software supply chain helps ensure that nothing tampers with your application code during the build process. Many popular code scanning tools are available, including open-source tools that audit the metadata of your software supply chain.
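One concrete supply chain control is to pin each downloaded dependency or build tool to the SHA-256 digest recorded when it was reviewed, and to fail the build if that digest ever changes. The Python sketch below shows that check. The function names are hypothetical. Digest pinning detects tampering after review, but it does not replace signature or provenance verification.

```python
import hashlib
from pathlib import Path
from typing import Iterable, Iterator


def read_chunks(path: Path, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield a file's contents in chunks so large artifacts need not fit in memory."""
    with path.open("rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def sha256_hex(chunks: Iterable[bytes]) -> str:
    """Hex SHA-256 digest of the concatenated chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def check_digest(name: str, actual: str, pinned: str) -> None:
    """Raise if an artifact's digest differs from the one pinned at review time."""
    if actual.lower() != pinned.lower():
        raise ValueError(f"{name}: expected sha256 {pinned}, got {actual}; refusing to build")


def verify_artifact(path: Path, pinned_sha256: str) -> None:
    """Hash the file on disk and compare it with its pinned digest."""
    check_digest(str(path), sha256_hex(read_chunks(path)), pinned_sha256)
```

The same idea applies to container images. Referencing a base image by digest (image@sha256:...) rather than by a mutable tag ensures that the build uses exactly the image you reviewed.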

At this point, you have a hardened Kubernetes cluster running in the cloud, along with your containerized code. How can you extend the defense in depth model to secure incoming requests at runtime?

Securing microservices with an API gateway and a service mesh

For containerized microservices running on Kubernetes, an API gateway and a service mesh add security checkpoints to your overall system architecture. They also let cluster administrators and application developers offload many of their security responsibilities.

The API gateway

An API gateway is API management software that receives requests and routes them to the appropriate backend services. It sits in front of all your upstream applications as the single entry point for ingress traffic, so internal application endpoints are never exposed directly.
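The sketch below shows the routing side of an API gateway. A hypothetical route table maps public path prefixes to internal upstream services, and the longest matching prefix wins. Any path without a route is rejected rather than forwarded. The prefixes and .internal hostnames are made up for illustration; a real gateway would load its routes from configuration.

```python
from typing import Optional

# Hypothetical route table: public path prefix -> internal upstream.
# Only the gateway is reachable from outside; these addresses are not.
ROUTES = {
    "/api/orders": "http://orders.internal:8080",
    "/api/orders/admin": "http://orders-admin.internal:8080",
    "/api/users": "http://users.internal:8080",
}


def resolve_upstream(path: str) -> Optional[str]:
    """Return the upstream for the longest matching prefix, or None (reject)."""
    best = None
    for prefix in ROUTES:
        # Match whole path segments so "/api/ordersX" does not hit "/api/orders".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    return ROUTES[best] if best else None


print(resolve_upstream("/api/orders/42"))     # http://orders.internal:8080
print(resolve_upstream("/internal/metrics"))  # None: no route, so never forwarded
```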

This centralization also means the API gateway can enforce cross-cutting security measures such as authentication and authorization, input validation, and rate limiting. Application developers can then focus on the business logic in their code and offload ingress security to the API gateway.
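As an example of one cross-cutting control, the Python sketch below implements per-client rate limiting with a token bucket. The token bucket is a common algorithm for this job, but the choice here is an assumption, not a description of any particular gateway. The sketch keeps state in memory for a single gateway instance. A gateway running on several nodes would need shared state.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Keeps one token bucket per client key, such as an API key or consumer ID."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client: str) -> bool:
        bucket = self.buckets.get(client)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, self.clock)
            self.buckets[client] = bucket
        return bucket.allow()
```

A gateway would call limiter.allow(client) before forwarding each request and would answer with HTTP 429 Too Many Requests when it returns False. Because each client has its own bucket, one noisy consumer cannot exhaust another's allowance.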

Cloud-hosted API gateways typically sit behind a load balancer equipped with a WAF, and many also provide automatic service discovery, response caching, and load balancing.

The service mesh

While an API gateway secures ingress into the cluster, a service mesh secures communication between services inside the cluster.

A service mesh is a network of proxies that handles communication between microservices. Instead of building common service-to-service features (such as service discovery, encryption, and retries) into the application code, you can offload them to a proxy, called a "sidecar", that runs alongside each application container. The mesh then becomes responsible for securing communication between services, typically by encrypting and authenticating traffic with mutual TLS (mTLS). This makes applications easier to write and lets you implement a zero-trust architecture centrally.
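One common way a mesh secures service-to-service traffic is mutual TLS, in which the server also verifies a certificate presented by the client. The Python sketch below builds a server-side context that rejects clients without a certificate signed by a given CA. The certificate and CA file paths are hypothetical parameters. This shows the TLS mechanics only, not how any particular mesh implements them.

```python
import ssl
from typing import Optional


def make_mtls_server_context(
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server context accepting only clients whose certificate the given CA signed."""
    # PROTOCOL_TLS_SERVER loads no default CAs, so only the CA loaded below is trusted.
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    context.verify_mode = ssl.CERT_REQUIRED  # handshake fails without a client cert
    if certfile:
        context.load_cert_chain(certfile, keyfile)  # this service's own identity
    if client_ca_file:
        context.load_verify_locations(cafile=client_ca_file)  # trust only the mesh CA
    return context
```

In a mesh, the sidecar proxies perform this handshake on both ends of each connection, and the mesh's control plane issues and rotates the certificates. Application code therefore needs none of this logic.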

The API gateway and service mesh are complementary technologies. A Kubernetes cluster can use an API gateway to control ingress requests and use a service mesh internally to control service-to-service communication. You can also use these for cluster-to-cluster connections for multi-region or multi-cluster use cases.

Conclusion

As the number of microservices in your application grows, the risk of a security breach increases as well.

In this article, we've learned about defense in depth, the practice of layering different security measures for redundancy. We covered the three categories of control for defense in depth (physical, administrative, and technical) and the 4C's of cloud-native security for protecting microservices running in the cloud. Finally, we looked at how an API gateway and a service mesh let engineers offload security responsibilities to dedicated software components that form a scalable abstraction layer.

The Kong API gateway (available self-managed as Kong Enterprise or as a SaaS solution with Kong Konnect) and Kong Mesh are enterprise-ready security solutions for cloud-native workloads. Kong Enterprise and Kong Konnect provide API security with a robust suite of pre-configured plugins, from authentication to traffic control. Kong Mesh is a service mesh built on Kuma that spans multi-cloud and multi-cluster Kubernetes environments as well as VMs. Together, Kong Konnect or Kong Enterprise and Kong Mesh can help your organization move toward a fully featured defense in depth security solution. Get a personalized demo today!