Continuous integration and continuous deployment—known colloquially as CI/CD—are essential strategies for building modern software applications. The goal of these processes is to foster a culture of continuous updates.

CI is the process by which an external machine (not your local development environment) fetches your app and dependencies and then runs a test suite to ensure everything in your application builds and runs correctly.

After your CI passes and your changes are merged into the code base, CD takes over to ensure that your application is distributed to production environments—whether that's a single server, a fleet of servers or even a mobile app store.

If your application is architected as a collection of microservices, a CI/CD pipeline for each microservice can vastly simplify the software development lifecycle. Each CI/CD step runs on individual repositories, ensuring that every microservice is tested and deployed individually. Kong Gateway simplifies the management of these microservices.

But what should you do when Kong Gateway itself needs to be tested and updated?

Since it's a good practice to continuously test and deploy your microservices, those same habits should also apply to your orchestration. In this post, we'll take a step-by-step look at how to iterate, test and deploy Kong Gateway using CI/CD. You'll learn how to anticipate and approach changes at the microservice level and how your gateway tests should handle updates—both expected and not.

Setting Up Your Development Environment

We will write some code in this blog post, but you can download the entire codebase for our walk-through here.

To get started, ensure that you have a recent version (12.0 or later) of Node installed on your computer. You will also need a Postgres database running on your machine. Finally, you should also have Kong Gateway installed.

Clone the repository above, then navigate to the new directory via the command line:

Install all the Node dependencies with npm install. Then, run node server.js to start the local server. In a browser window, navigate to http://localhost:3000. You should see a greeting message.

By default, Kong expects to use a Postgres database to store information. Open the Postgres interactive terminal by typing psql, then create both a user and a database named kong:
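As a rough sketch, the same two statements can also be run non-interactively from the shell. This assumes psql can connect as a superuser with its default settings; the function name create_kong_database is ours, not part of the repository.

```shell
# Hypothetical helper: create the kong role and a database owned by it.
# Each --command runs as its own statement, which matters because
# CREATE DATABASE cannot run inside a multi-statement transaction.
create_kong_database() {
  psql --command "CREATE USER kong;" \
       --command "CREATE DATABASE kong OWNER kong;"
}
```

Call create_kong_database once on a fresh machine; running it a second time will report that the role already exists.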

If you have a fresh Kong installation, you'll need to set up your database schema through migrations. To do so, enter the following command in the terminal:

You can start the Kong service next:
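The migration and startup steps can be sketched together as follows. This assumes the kong CLI is on your PATH and that Kong's default configuration can reach the kong database; the wrapper name start_kong is only for illustration.

```shell
# Hypothetical helper: prepare the schema, then start the gateway.
# Kong is only started if the migrations command succeeds.
start_kong() {
  kong migrations bootstrap && kong start
}
```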

For our final configuration step, we'll place the Kong Gateway in front of our Node server. We need to create a service and then map a route from Kong to that service.

To create a service, we can use the Kong API. Run the following curl query in the terminal to set up a service named node-server that maps to our localhost:3000 server:
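A minimal sketch of that request is shown here. It assumes the Admin API is listening on Kong's default port, 8001; the KONG_ADMIN_URL variable and the create_service name are illustrative rather than taken from the repository.

```shell
# Assumption: the Admin API is reachable on Kong's default admin port.
KONG_ADMIN_URL="${KONG_ADMIN_URL:-http://localhost:8001}"

# Register a service named node-server that points at the local Node server.
create_service() {
  curl -i -X POST "$KONG_ADMIN_URL/services" \
    --data "name=node-server" \
    --data "url=http://localhost:3000"
}
```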

Next, create a route from Kong to that service, at a path called /api:
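The route request might look like the sketch below. As before, the Admin API address (Kong's default port 8001) is an assumption, and create_route is an illustrative name.

```shell
# Assumption: the Admin API is reachable on Kong's default admin port.
KONG_ADMIN_URL="${KONG_ADMIN_URL:-http://localhost:8001}"

# Attach a route with the path /api to the node-server service.
create_route() {
  curl -i -X POST "$KONG_ADMIN_URL/services/node-server/routes" \
    --data "paths[]=/api"
}
```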

To verify that this all works, navigate to http://localhost:8000/api in your browser. You should see the same greeting message as before.

Adding a CI Pipeline

Our repository comes with a little test script that makes a curl request to our Kong route and verifies that everything is working. You can run the test script with the command bash test.sh. An error message will be printed out if there's a failure, as we'll see later in this tutorial.

Now, let's add a CI pipeline to this existing test. For quite some time now, GitHub has been the de facto hosting service for software repositories. It also offers a CI/CD platform called GitHub Actions, which allows you to define a sequence of steps to build, test and deploy your code. This makes GitHub a convenient platform for establishing a CI/CD pipeline for our Kong Gateway.

A walk-through of the full capabilities of GitHub Actions is beyond the scope of this blog post, but we'll highlight some of the most important aspects. Open up the file called .github/workflows/ci.yml. This file contains the mappings which GitHub Actions uses to prepare and execute our tests. Let's take a look at some of the more relevant key/value pairs.

Setting Up Environment Variables

Like on our local machine, we need to set up our Kong database and username, then specify that we want to use Postgres. We do this by setting up a Postgres service and passing along the relevant environment variable and startup options:

Setting Up the Test State

The first few steps of our CI process check out our repository and install Node:

Installing and Configuring Dependencies

Next, we install Kong:

After that, we install our Node dependencies and run our database migrations. These steps should look familiar since they're the same processes you went through when setting up your local development environment:

Like we did on our local machine, we need to create a service and route for Kong. That way, Kong understands how to pass requests to our Node server:

Running the Test

Last but certainly not least, we run our test suite:

Let's take a quick look at this test.sh file:

This test leans heavily on the fact that curl returns a non-zero exit code if it can't communicate with a server. The idea here is that we run our Node server in the background, and then we attempt to make a curl request to the Kong Gateway. If that request succeeds, the test passes; if not, the exit code is printed, and the test is marked as failing.
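The idea can be sketched roughly as follows. This is not the repository's test.sh verbatim: the function name, the KONG_ROUTE_URL and SERVER_WAIT_SECONDS variables, and the two-second wait are all assumptions made for illustration.

```shell
# Assumptions: Kong proxies on port 8000 and the route lives at /api.
KONG_ROUTE_URL="${KONG_ROUTE_URL:-http://localhost:8000/api}"

run_smoke_test() {
  # Start the Node server in the background and remember its PID.
  node server.js &
  local server_pid=$!
  # Crude wait so the server has time to start listening.
  sleep "${SERVER_WAIT_SECONDS:-2}"

  # --fail makes curl exit non-zero on HTTP errors (4xx/5xx) as well as
  # on connection failures, so the exit code alone tells us the result.
  local status=0
  curl --fail --silent --show-error "$KONG_ROUTE_URL" || status=$?

  # Clean up the background server whether or not the request worked.
  kill "$server_pid" 2>/dev/null || true

  if [ "$status" -ne 0 ]; then
    echo "Test failed: curl exited with code $status" >&2
  fi
  return "$status"
}
```

Because the function returns curl's exit code, a CI step that calls run_smoke_test fails whenever the gateway cannot be reached at the expected address.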

Before we get into validating that this test suite works, let's see it in action in its current state. Create an empty commit, and then push it up to your repository. This, in turn, will trigger the GitHub CI action to run:

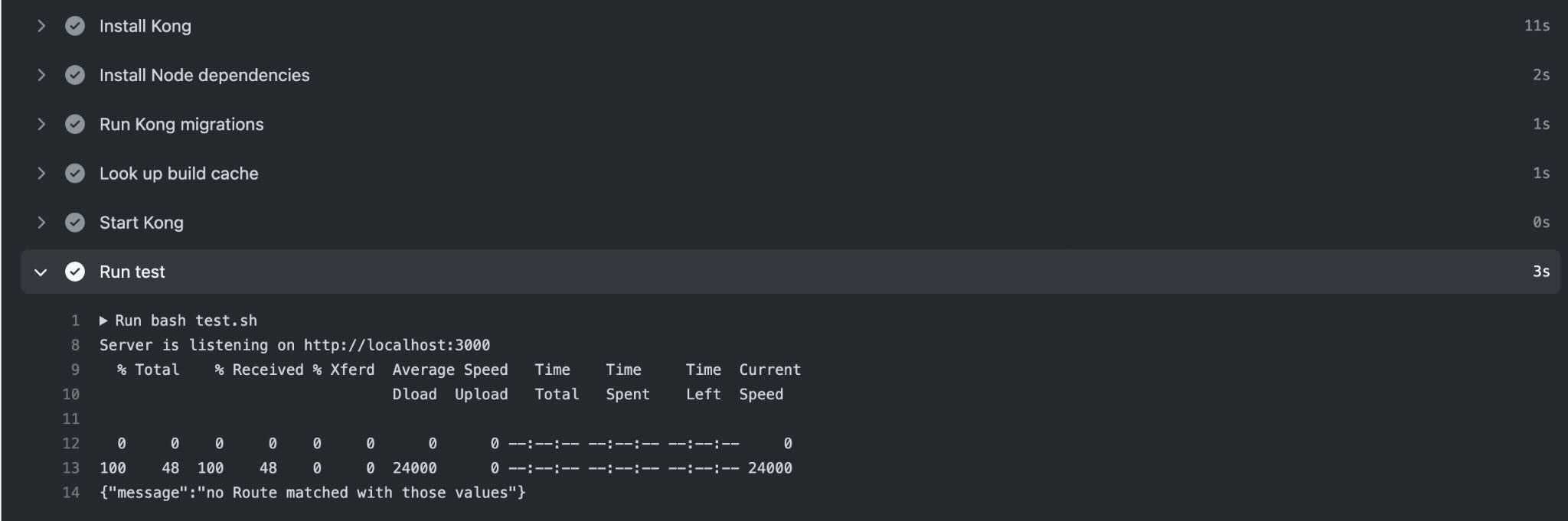

If you navigate to the GitHub Actions tab on your repository, you should see a response from the route, which indicates that the test passed!

Now, let's see what happens when this test fails. The Kong Gateway comes with a configuration file. This file controls many aspects of how Kong runs, including:

- Plugins to load

- Ports to listen on

- Authentication certificates to use

- Logging destinations

Our test uses a custom configuration file that changes a few of the default settings:

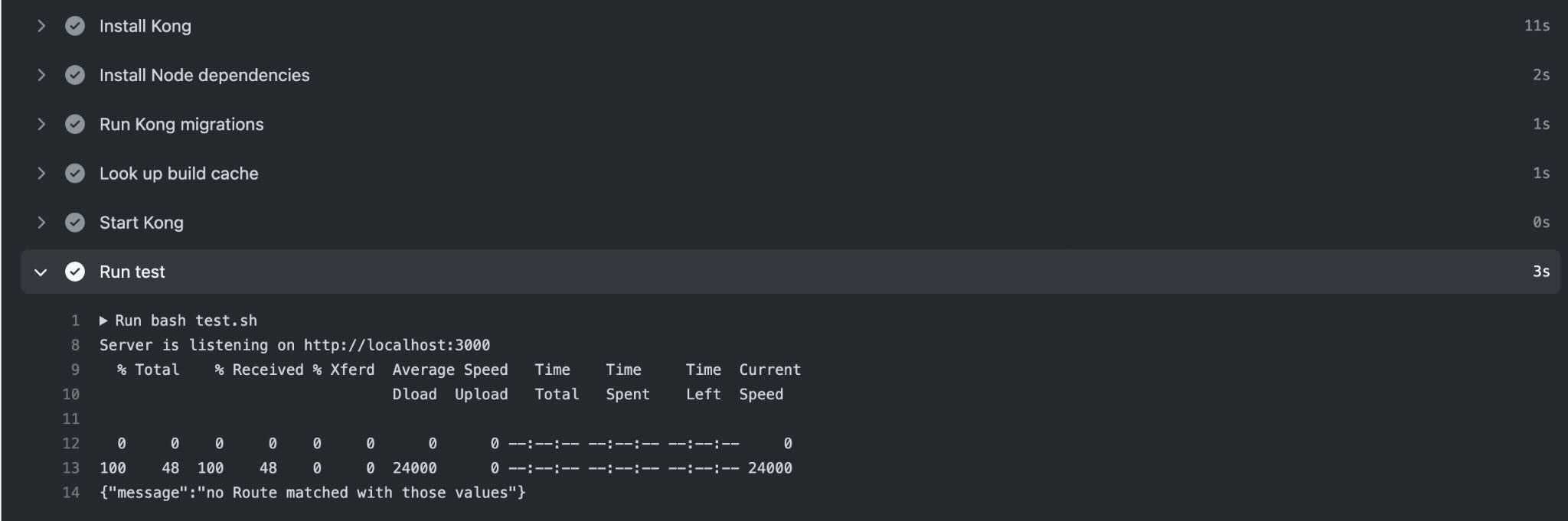

proxy_listen changes Kong's HTTP port to 9001, instead of using the default of 8000. Let's remove the comment (#) to enable this configuration change. Commit this diff and push it up to your repository. Your GitHub Action test should now be failing. Can you guess why?

In test.sh, curl makes a request to http://localhost:8000/api, but we've just changed the Kong proxy port to 9001. Because the Kong Gateway configuration is no longer in sync with the test's expectations, the request fails. This demonstrates two things: 1) that our Kong Gateway is being tested and 2) that our CI can fail. Go ahead and change the port number in test.sh to 9001, commit the change, and push it again. Your CI pipeline should succeed once more!

Adding a CD Pipeline

The process for adding a continuous deployment pipeline to this project is similar to the one we just went through for CI. The only major difference is that getting the project onto a specific hosting platform is unique to each provider—AWS, Google Cloud, Microsoft Azure or something else.

Aside from differences in hosting providers, how we deploy our Kong updates depends on whether or not the app is containerized and/or managed by Kubernetes. For this example, we'll assume the following:

- DigitalOcean hosts both the Kong Gateway and an existing microservice.

- The infrastructure is not containerized.

- Some sort of process manager, like pm2, handles recovering from errors.

While these steps may not precisely match your infrastructure, we're merely practicing how to craft a GitHub Action that performs the deployment. Let's take a look at our GitHub Action workflow in deploy.yaml:

We can break down the deployment into two separate steps. First, we indicate that we only want this workflow to run on pushes to the main branch. This ensures that other pull requests and commits don't inadvertently cause a deployment to production.

Second (and more importantly), we're taking advantage of a feature in GitHub Actions: encrypted secrets. Secrets are a safe place to store the credentials needed to interact with servers, and a workflow can read them much as it would read environment variables. Our actual deployment occurs using ssh-action. We provide ssh-action with our DigitalOcean server's host, username and key, and ssh-action uses this information to log into the host machine. Next, we navigate to the location of our project on disk and fetch the latest release as a tarball archive. Finally, we stop Kong, and then we restart it with our config file.
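The commands that run on the host after logging in might look roughly like this sketch. The project directory, the tarball URL and the config file name are hypothetical placeholders, and deploy_kong is an illustrative name rather than the script used in deploy.yaml.

```shell
# All three values are hypothetical placeholders; substitute your own.
PROJECT_DIR="${PROJECT_DIR:-/srv/kong-project}"
RELEASE_TARBALL_URL="${RELEASE_TARBALL_URL:-https://example.com/latest.tar.gz}"
KONG_CONF="${KONG_CONF:-kong.conf}"

deploy_kong() {
  # Move to the project's location on disk.
  cd "$PROJECT_DIR" || return 1

  # Download the latest release and unpack it over the current files,
  # dropping the archive's top-level directory.
  curl --fail --silent --show-error --location "$RELEASE_TARBALL_URL" \
    | tar -xz --strip-components=1 || return 1

  # Stop Kong (ignoring the error if it was not running), then start it
  # again with the freshly deployed configuration file.
  kong stop || true
  kong start -c "$KONG_CONF"
}
```

Stopping and then starting Kong causes a brief interruption; that trade-off is acceptable for this walk-through, where a process manager handles recovery.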

In this way, our deployments always occur after a push to main, and a push to main is only possible after all of our Kong Gateway tests pass.

Conclusion

Continuous integration and deployment are important practices that enable teams to iterate quickly and confidently. CI/CD reduces the risk of shipping software that only works on a single developer's machine, and it takes much of the human error out of the deployment process.

CI/CD applies to more than application code such as microservices. It can (and should!) be used for your other configurations as well, like the Kong Gateway.

By doing so, you expand your Kong Gateway usage iteratively. For example, you can see the effect of adding a new plugin on your infrastructure in testing and development before promoting the change to production. Consistent and repeatable deployments of configuration changes mean fewer surprises, and that lets you focus on adding new features instead of tracking down production issues.
