As an application developer, have you ever had to troubleshoot an issue that only happens in production? Bugs can surface once your application is released into the wild, and they can be extremely difficult to debug when you cannot reproduce them without production data. In this blog post, I am going to show you how to safely send your production data to development applications deployed using a service mesh, helping you debug more effectively and build production-proof releases. For this how-to, we will use the Kuma service mesh and a Kubernetes cluster.

To begin, let’s install the prerequisite software. First, connect to your Kubernetes cluster and install Kuma. Then, clone the following Git repository, which contains the necessary files for this tutorial, and change to the project directory.

Next, create and label namespaces for the applications and install Kuma:
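A minimal sketch of this step follows, under a few assumptions: the application namespace is `myblog` (inferred from the `mockbin_myblog_svc_3000` service name used later in this post), sidecar injection is enabled with Kuma's `kuma.io/sidecar-injection` namespace label, and Kuma is downloaded with its installer script. The function names are hypothetical, and any other namespace you need (for example, one for Kong) can be rendered the same way.

```shell
VERSION="${VERSION:-2.0.0}"   # Kuma release mentioned in this post
NAMESPACE="myblog"            # assumption: inferred from mockbin_myblog_svc_3000

# Render a Namespace manifest labeled for automatic sidecar injection.
namespace_manifest() {
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: $1
  labels:
    kuma.io/sidecar-injection: enabled
EOF
}

# Download kumactl, create the labeled namespace, and install the control plane.
install_kuma() {
  # The installer unpacks into ./kuma-$VERSION
  curl -L https://kuma.io/installer.sh | VERSION="$VERSION" sh -
  namespace_manifest "$NAMESPACE" | kubectl apply -f -
  "kuma-$VERSION/bin/kumactl" install control-plane | kubectl apply -f -
}
```

Calling `install_kuma` runs the whole step; `namespace_manifest myblog` on its own only prints the manifest, which is handy for reviewing it before applying.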

Note: At the time of this writing, Kuma is at version 2.0.0, so you may want to change VERSION to reflect the latest version.

Let’s verify that Kuma installed successfully by port forwarding and sending a request to Kuma’s HTTP API. In a separate terminal window, execute the following command.
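As a sketch of this check, the helpers below forward the control plane's API port and request the API root. They assume a default installation, where the control plane is exposed as the `kuma-control-plane` service in the `kuma-system` namespace and serves its HTTP API on port 5681; the function names are hypothetical.

```shell
# Local port for the control plane API; 5681 is Kuma's default HTTP API port.
KUMA_API_PORT=5681

# Build a URL for the forwarded Kuma HTTP API, e.g. kuma_api_url /meshes
kuma_api_url() {
  printf 'http://localhost:%s%s\n' "$KUMA_API_PORT" "${1:-/}"
}

# Terminal 1: forward the control plane service (blocks until interrupted).
forward_kuma_api() {
  kubectl port-forward svc/kuma-control-plane -n kuma-system "${KUMA_API_PORT}:5681"
}

# Terminal 2: request the API root; a JSON response means the control plane is up.
check_kuma_api() {
  curl --silent --fail "$(kuma_api_url /)"
}
```

Run `forward_kuma_api` in one terminal and `check_kuma_api` in another.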

Now let’s install all of the applications we are going to use. We are going to use Kong Gateway for ingress traffic into the mesh and mockbin.org as the application:

Now verify Kong Gateway is a service on the mesh:

Next, we will install two versions of mockbin. The first version is “production”, and the second version will be our development release candidate:

Verify both versions of the mockbin service are part of the mesh:
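One way to run this check, sketched under assumptions: on Kubernetes, Kuma names a service `<service>_<namespace>_svc_<port>`, which is where names such as `mockbin_myblog_svc_3000` come from. The Kubernetes Service names `mockbin` and `mockbin-v2` and the `myblog` namespace are inferred from the names used later in this post, and the helper names are hypothetical.

```shell
# Kuma's Kubernetes naming convention for the kuma.io/service tag:
# <service name>_<namespace>_svc_<port>
kuma_service_name() {
  printf '%s_%s_svc_%s\n' "$1" "$2" "$3"
}

# List the Dataplane resources (one per meshed pod) in a namespace.
list_dataplanes() {
  kubectl get dataplanes -n "$1"
}

kuma_service_name mockbin myblog 3000      # prints mockbin_myblog_svc_3000
kuma_service_name mockbin-v2 myblog 3000   # prints mockbin-v2_myblog_svc_3000
```

Running `list_dataplanes myblog` should then show one Dataplane per mockbin pod if both versions joined the mesh.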

After our applications are installed, we need a way to observe traffic, so let’s install the built-in Kuma observability stack:
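A sketch of this step, assuming `kumactl` is on your `PATH` and that the stack lands in the `mesh-observability` namespace, which is where `kumactl install observability` places it by default. The function names are hypothetical.

```shell
# Install Kuma's reference observability stack.
install_observability() {
  kumactl install observability | kubectl apply -f -
}

# Wait until every pod of the stack reports Ready (default timeout: 300s).
wait_for_observability() {
  kubectl wait --for=condition=Ready pods --all \
    -n mesh-observability --timeout="${1:-300s}"
}
```

Calling `install_observability` followed by `wait_for_observability` gives the stack time to start before you open Grafana.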

After the reference observability stack is installed, we will configure the mesh metrics backend and review Kuma’s built-in Grafana dashboards.

Run the below command to configure the metrics backend on the default mesh:

In order to use metrics in Kuma, you must first enable a metrics “backend”. Setting the metrics backend to “prometheus” instructs Kuma to expose metrics from every proxy inside the mesh. The below snippet shows you the default mesh configuration:
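For illustration, a Mesh resource with a Prometheus metrics backend could look like the sketch below. The backend name `prometheus-1` is an arbitrary label chosen for this example, and the helper names are hypothetical.

```shell
# Render the default Mesh with a Prometheus metrics backend enabled.
mesh_metrics_manifest() {
  cat <<'EOF'
apiVersion: kuma.io/v1alpha1
kind: Mesh
metadata:
  name: default
spec:
  metrics:
    enabledBackend: prometheus-1   # must match one of the backend names below
    backends:
      - name: prometheus-1
        type: prometheus
EOF
}

# Apply the manifest to the cluster.
enable_mesh_metrics() {
  mesh_metrics_manifest | kubectl apply -f -
}
```

`enabledBackend` selects which of the listed backends is active, so the two names have to agree.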

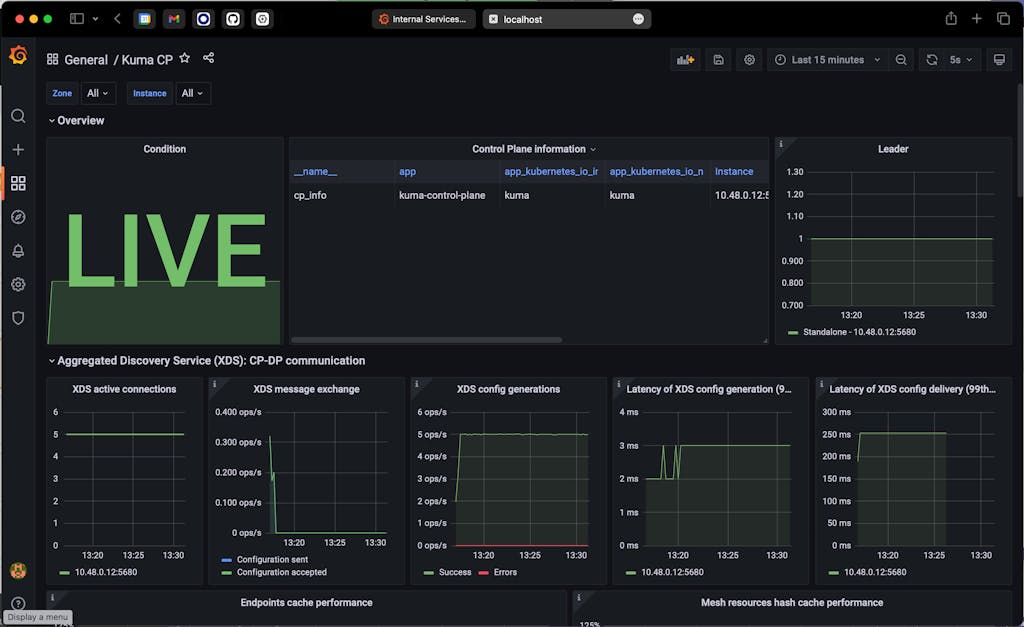

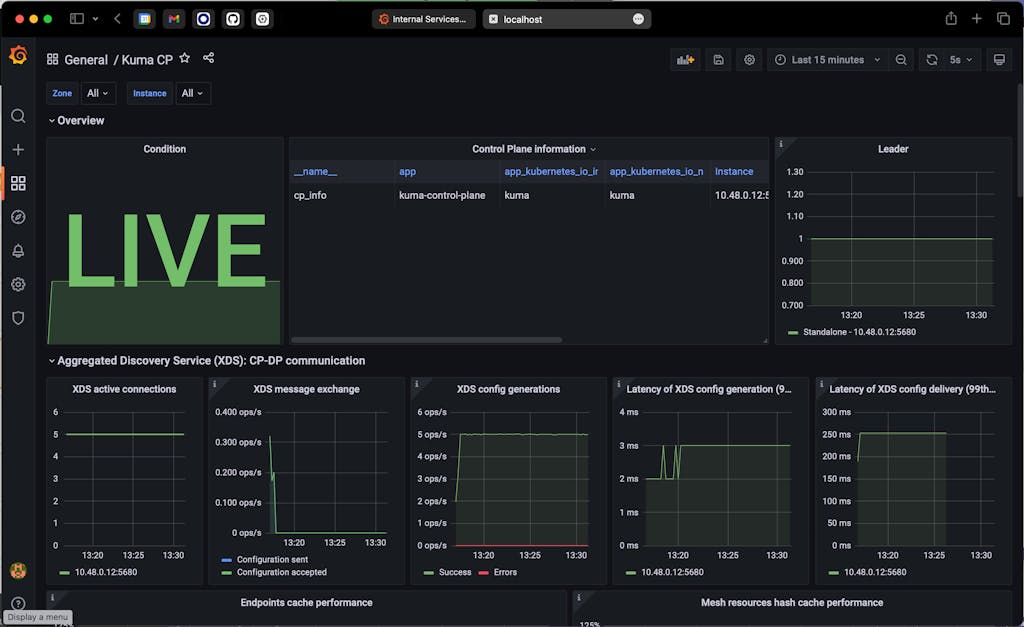

Click on Grafana and log in using the default credentials (admin/admin). After you are logged in to the admin console, find the Kuma CP dashboard, and you should see something similar to the below screenshot.

Next, create an Ingress for the mockbin application so that it can be called through an external address:

Get the external address of the mockbin service:

Verify the mockbin service works:

Let’s generate some traffic so our Grafana dashboard has some data:
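A simple loop is enough to generate traffic. The sketch below assumes the external address from the previous step is stored in a hypothetical `PROXY_IP` variable and that requests go to the `/requests` path referenced by the mirroring policy later in this post; `generate_traffic` is a hypothetical helper.

```shell
# Send COUNT requests to URL, pausing DELAY seconds between them.
generate_traffic() {
  url="$1"
  count="${2:-100}"
  delay="${3:-1}"
  i=0
  while [ "$i" -lt "$count" ]; do
    # Discard the body; print only the HTTP status code of each request.
    curl --silent --output /dev/null --write-out '%{http_code}\n' "$url"
    i=$((i + 1))
    sleep "$delay"
  done
}
```

For example, `generate_traffic "http://$PROXY_IP/requests" 200` sends 200 requests, one per second.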

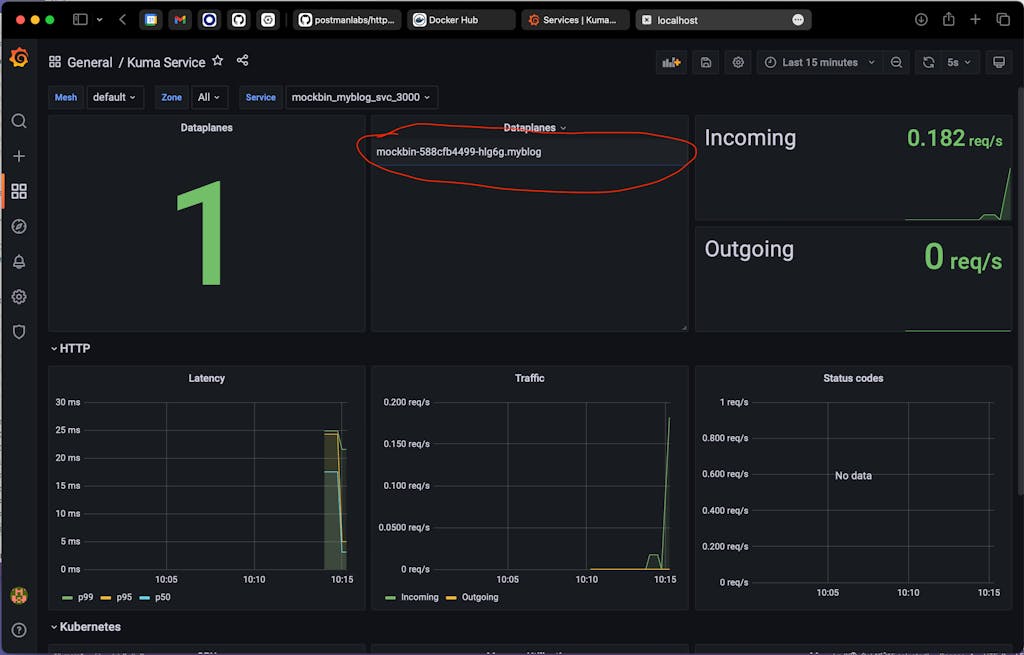

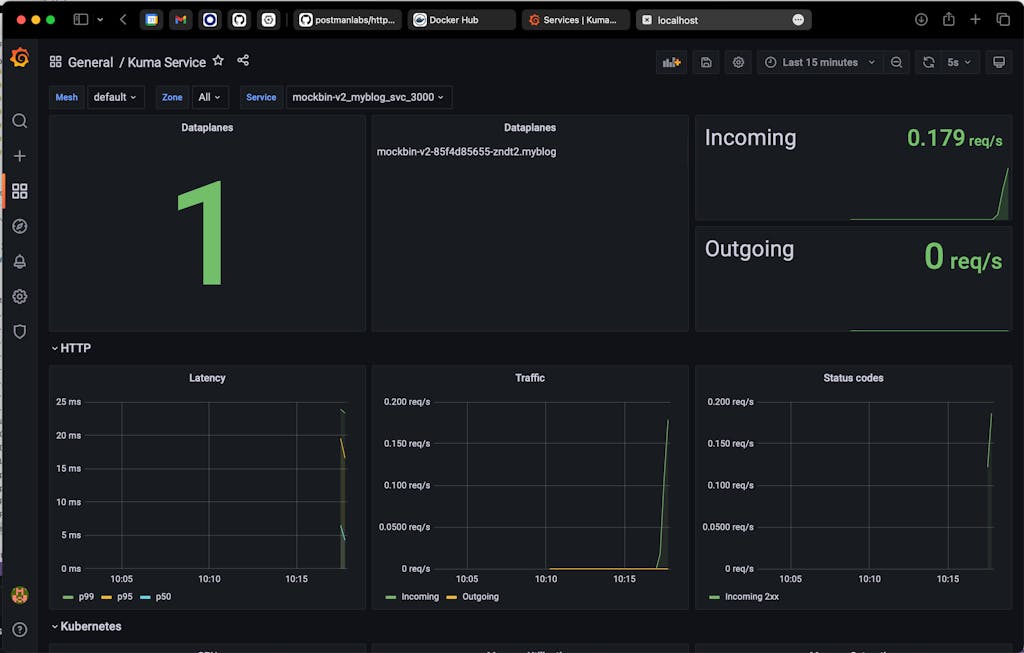

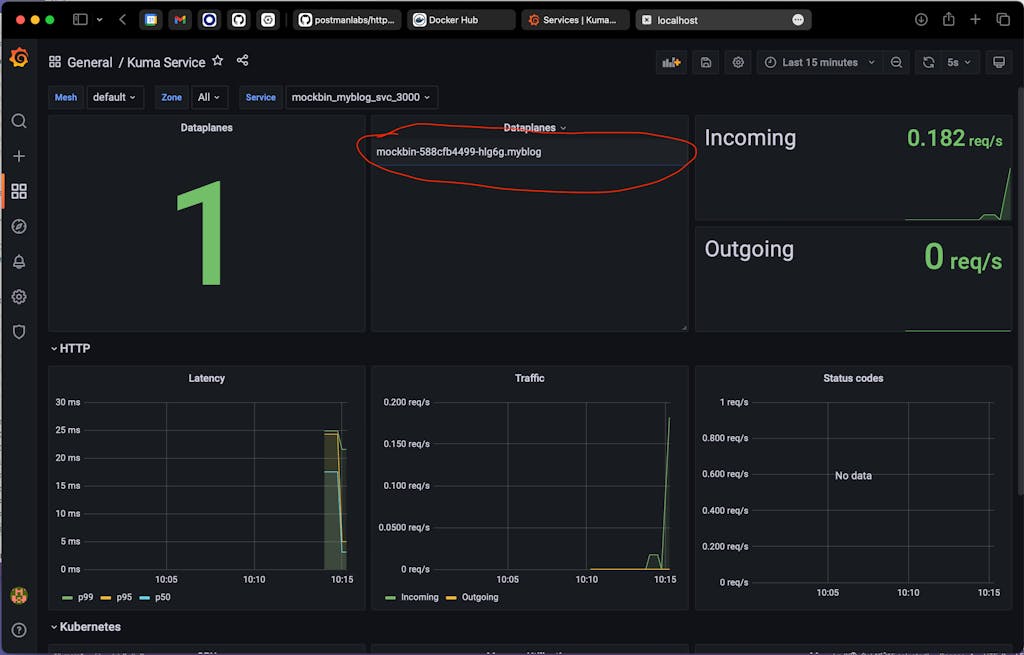

After the above command executes for a bit, find the Kuma Service dashboard in Grafana and select mockbin_myblog_svc_3000 from the “Service” dropdown. You should see data in the Incoming and Outgoing panels:

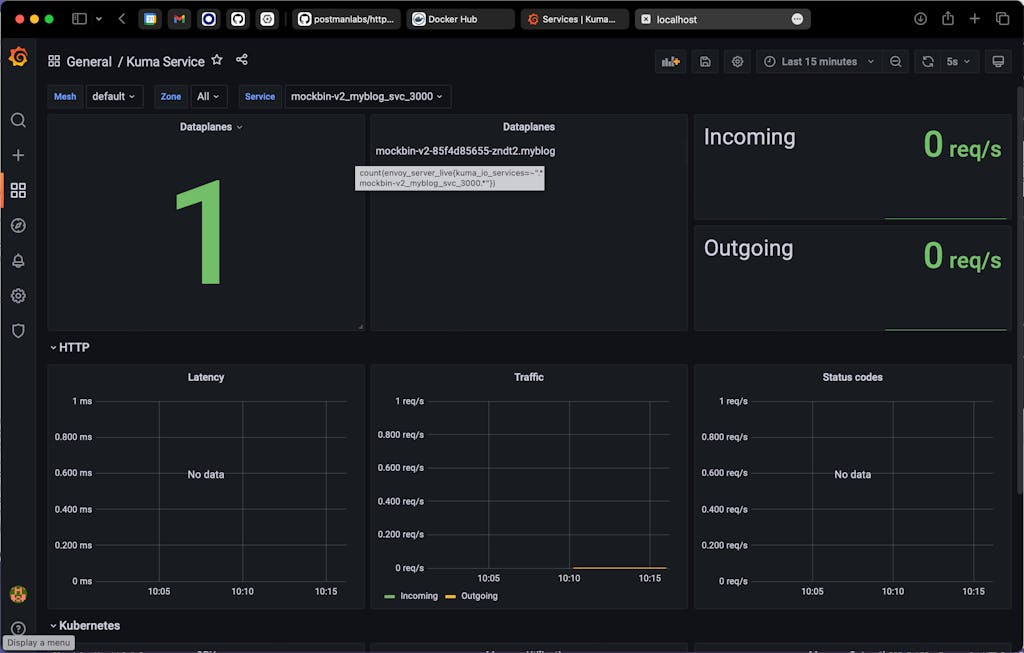

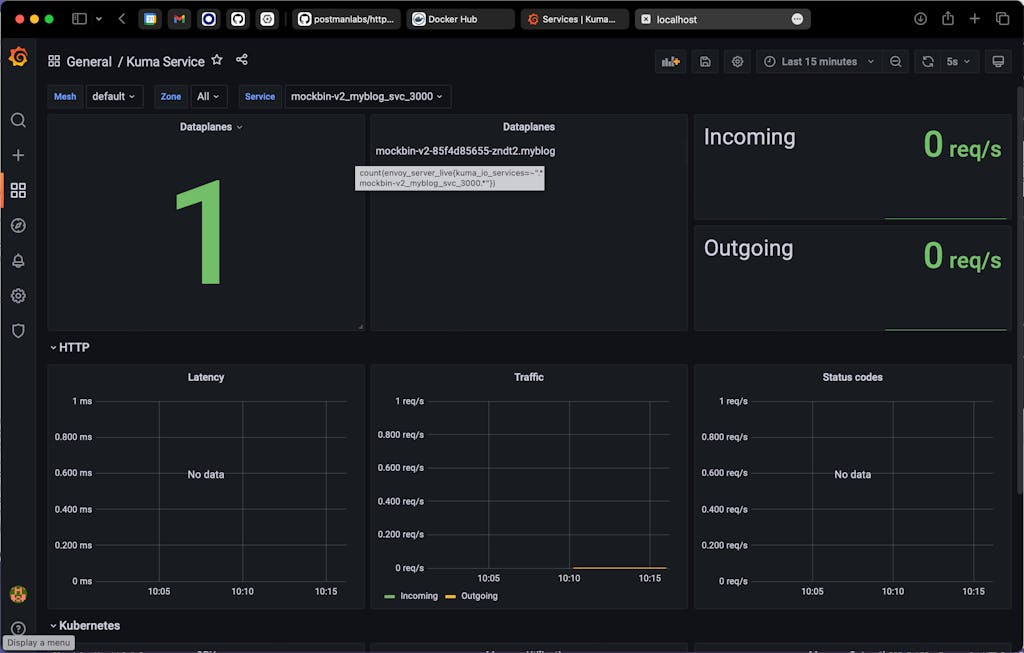

Next, let’s check version 2 of the mockbin service and make sure no traffic is flowing to that service. Select mockbin-v2_myblog_svc_3000 from the “Service” dropdown:

Now that we have determined no traffic is flowing to version 2, let’s mirror the traffic there:

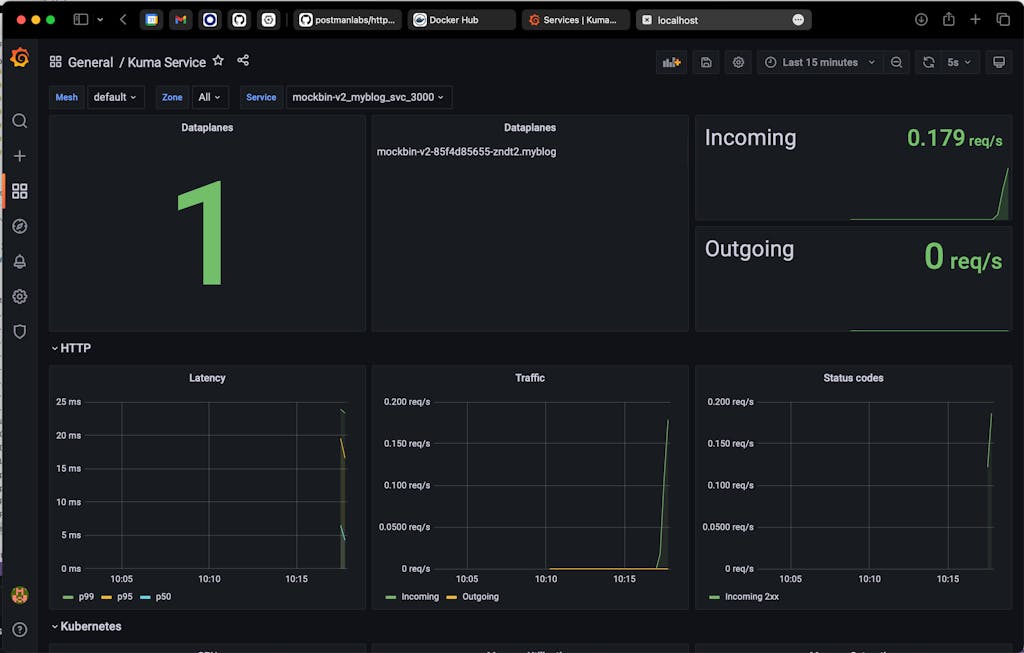

Wait for a bit and you should start seeing inbound traffic flowing on the mockbin-v2_myblog_svc_3000 service:

Let’s look at the Kuma policy we just applied. It is of type “ProxyTemplate”, which allows custom configuration to be applied directly to Envoy. This is a powerful feature of Kuma because it lets the user send Envoy configuration directly from Kuma. The policy’s selector applies the ProxyTemplate to the “mockbin_myblog_svc_3000” service.

The policy patches the Envoy HTTP Connection Manager by matching on certain criteria. In this case, the criteria are an inbound network filter of type “envoy.filters.network.http_connection_manager” tagged with kuma.io/service: mockbin_myblog_svc_3000.

Once Kuma finds the matching network filter, it applies a value that creates a route in Envoy. The route defines a virtual host that sends traffic for the domain “mockbin.myblog.svc.3000.mesh” with path “/requests” to the local Envoy “cluster”, i.e. localhost:3000. For more information on route matching, see the Envoy documentation.

In order to send traffic to version 2 of the mockbin service, we need to set an option on the Envoy route called “request_mirror_policies”. The request_mirror_policies option tells Envoy to send a configurable amount of traffic to the “mockbin-v2_myblog_svc_3000” cluster. In this example, we send 100% of the traffic. Envoy does not return the response from version 2 to the calling service, which allows us to safely run development code in production.

The complete policy is located in the traffic-mirror.yaml file.
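To make the pieces described above concrete, here is a sketch of how such a policy might be assembled. It is not the contents of `traffic-mirror.yaml`. The policy name, the virtual host name, the use of a prefix match on `/requests`, and the output file name are all assumptions made for illustration; the service names, domain, and clusters come from the description above. Inside `value`, the Envoy fields are written in camelCase (`requestMirrorPolicies` corresponds to `request_mirror_policies`).

```shell
# Write the sketch to its own file so the repository's traffic-mirror.yaml
# is left untouched.
cat > traffic-mirror-sketch.yaml <<'EOF'
apiVersion: kuma.io/v1alpha1
kind: ProxyTemplate
mesh: default
metadata:
  name: traffic-mirror            # hypothetical policy name
spec:
  selectors:
    - match:
        kuma.io/service: mockbin_myblog_svc_3000   # proxies the policy applies to
  conf:
    imports:
      - default-proxy             # start from Kuma's default proxy configuration
    modifications:
      # Patch the inbound HTTP Connection Manager of the selected service.
      - networkFilter:
          operation: patch
          match:
            name: envoy.filters.network.http_connection_manager
            origin: inbound
            listenerTags:
              kuma.io/service: mockbin_myblog_svc_3000
          value: |
            name: envoy.filters.network.http_connection_manager
            typedConfig:
              '@type': type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              routeConfig:
                virtualHosts:
                  - name: mockbin
                    domains:
                      - mockbin.myblog.svc.3000.mesh
                    routes:
                      - match:
                          prefix: /requests
                        route:
                          cluster: localhost:3000
                          requestMirrorPolicies:
                            - cluster: mockbin-v2_myblog_svc_3000
                              runtimeFraction:
                                defaultValue:
                                  numerator: 100
EOF
```

The `numerator: 100` setting mirrors 100% of matching requests, because the fraction's denominator defaults to one hundred. A smaller numerator would mirror only a sample of the traffic.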

Now that we have production traffic flowing to a development version of the application, we can troubleshoot problems that are difficult to reproduce without production data.

Let’s see this in action. First, get the pod names:
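The helpers below sketch this step and the log inspection that follows. They assume the pods run in the `myblog` namespace (inferred from the service names); the pod and container names are placeholders you supply, and the function names are hypothetical.

```shell
NAMESPACE="${NAMESPACE:-myblog}"   # assumption: inferred from the service names

# List the pods so you can copy the names of the two mockbin versions.
list_pods() {
  kubectl get pods -n "$NAMESPACE"
}

# Print the logs of one container in a pod. Pods in the mesh also run the
# injected kuma-sidecar container, so name the application container explicitly.
pod_logs() {
  kubectl logs "$1" -n "$NAMESPACE" -c "$2"
}
```

After `list_pods`, call `pod_logs <pod-name> <container-name>` once for the version 1 pod and once for the version 2 pod.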

Let’s inspect the logs for version 1 of our mockbin service:

Now, let’s inspect the logs for version 2 of our mockbin service. In version 2, we have inserted additional logging, which, in conjunction with production data, allows us to reproduce and identify the source of the problem:

Congratulations, you have successfully and safely mirrored production application traffic into a development version of the application, helping you debug more effectively and build production-proof releases.