As more companies are undergoing digital transformation (resulting in a huge explosion of APIs and microservices), it's of paramount importance to get all the necessary data points and feedback to provide the best experience for both users and developers.

Kong Gateway is a lightweight API gateway that is built to be open and versatile. Regardless of the technology stack involved, Kong supports monitoring and logging requirements through its extensive ecosystem of plugins.

In this post, we'll explore how customers can use Kong plugins and open technology to parse their API logs and forward them to a cloud-managed service for further analysis.

Background

Recently I worked with a customer who wanted to forward their API logs to Azure Log Analytics. This required some tinkering as there are no native solutions that will ship API logs to Azure Log Analytics directly.

Overview

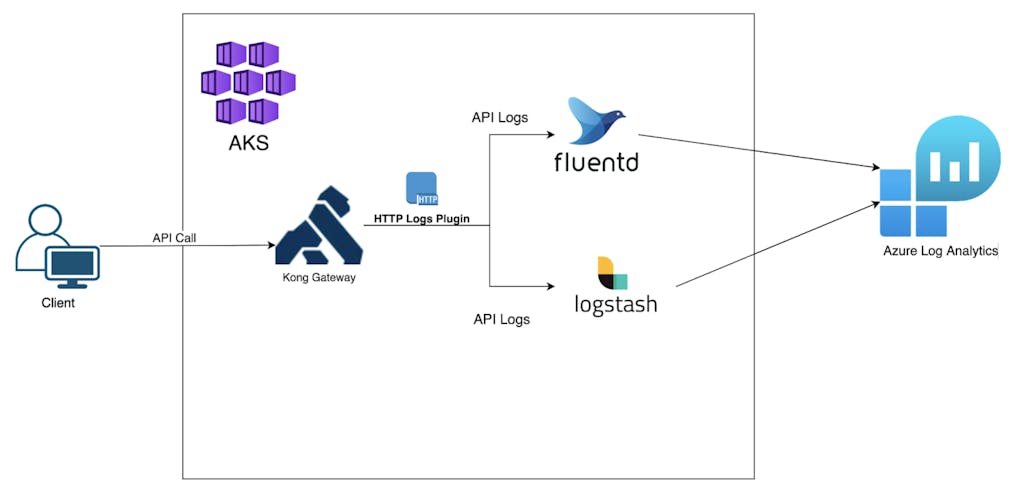

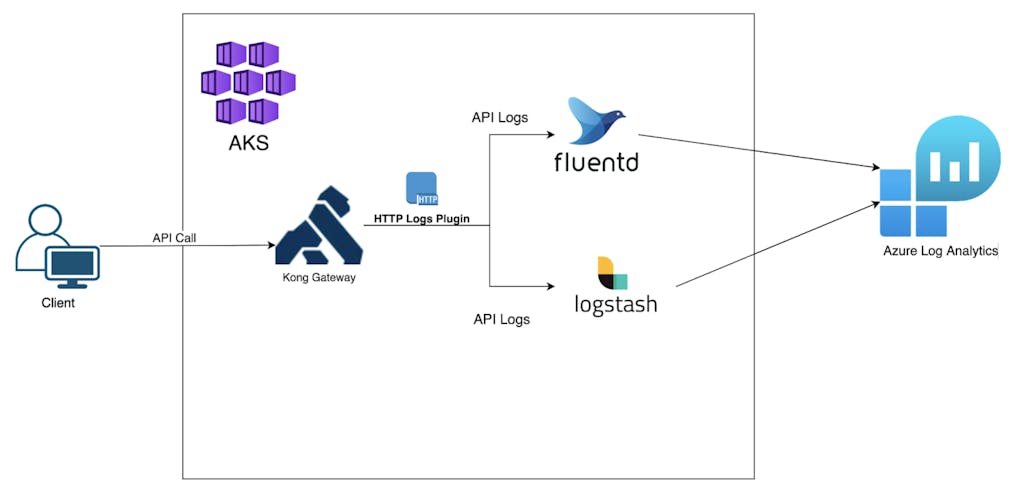

Kong is deployed in Azure Kubernetes Service (AKS). For this experiment, we'll capture API logs with the HTTP Log plugin and have them ingested by either Logstash or FluentD. To forward the logs to Azure Log Analytics, we need to install third-party plugins for both Logstash and FluentD.

Technical Steps

Preparing the Plugins

As neither FluentD nor Logstash can output directly to Azure Log Analytics, we need to enable third-party plugins that allow them to do so.

Logstash Plugin

The recommended way to install plugins into Logstash container images is to create a custom image.

First, we re-bake the official image with the plugin installed. The Dockerfile starts from the official Logstash image and runs the logstash-plugin install command for the Azure Log Analytics output plugin.

To ensure that the image runs on Linux (amd64) nodes, we add the --platform linux/amd64 flag during the build process.

Lastly, push the image to a registry.

FluentD Plugin

For FluentD, we just have to specify the additional plugins in the configuration. More details are in the FluentD Configuration section below.

Setting up Kong in Azure Kubernetes

Kong supports many installation modes, all of which are listed in its installation documentation. For our experiment, we'll use Helm to configure and install Kong Gateway in Kubernetes.

Configuring Logstash and FluentD

We'll also use Helm to set up both Logstash and FluentD in AKS.

Logstash Helm Chart Configuration

If you've yet to create a Log Analytics Workspace, do so now. Go to the workspace and select Agent Management. Retrieve the Workspace ID and the Primary or Secondary Key, and add them to the configuration. As a good security practice, consider mounting the configuration from a Kubernetes Secret rather than keeping the key in plain text.

FluentD Configuration

Remember to include the additional plugin for Azure Log Analytics.

Enable Kong HTTP Log Plugin

For our experiment, we'll use the Kong HTTP Log plugin, which sends API request and response logs to an HTTP ingestion point. In this case, the ingestion points are Logstash and FluentD.
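To give a sense of what Logstash and FluentD receive, here is a minimal Python sketch that pulls a few fields out of one log entry. The field names (request, response, latencies, client_ip) follow the JSON layout that Kong's logging plugins emit; the sample values and the summarize_entry helper are made up for illustration and are not part of the original setup.

```python
import json


def summarize_entry(raw: str) -> dict:
    """Extract a few commonly charted fields from one Kong log entry (JSON string)."""
    entry = json.loads(raw)
    return {
        "method": entry["request"]["method"],
        "uri": entry["request"]["uri"],
        "status": entry["response"]["status"],
        # Total time for the request as seen by Kong, in milliseconds.
        "latency_ms": entry["latencies"]["request"],
        "client_ip": entry.get("client_ip"),
    }


# Hypothetical, heavily trimmed sample entry; real entries carry many more fields.
SAMPLE_ENTRY = json.dumps(
    {
        "request": {"method": "GET", "uri": "/logstash"},
        "response": {"status": 200},
        "latencies": {"request": 515, "kong": 58, "proxy": 457},
        "client_ip": "10.0.0.1",
    }
)

summary = summarize_entry(SAMPLE_ENTRY)
```

These are the same fields that later show up as columns in the custom tables, so they are a reasonable starting point for queries and charts.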

Replace {{Host}} with the DNS name or IP address where you access the Kong Admin API.

1. Create a sample Service for Logstash.

2. Enable the plugin on the Service, and point it to the internal hostname of the Logstash instance we just installed.

The endpoint should follow this format: http://{{service-name}}.{{namespace}}.svc.cluster.local:8080

3. Create another sample Service for FluentD.

4. Enable the plugin on the Service created above, and point it to the internal hostname for FluentD.
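The four steps above can be sketched in Python as Kong Admin API requests. This is a rough sketch, not the exact commands used in the experiment: the Service name, the upstream URL, and the helper functions are hypothetical, while the /services and /services/{service}/plugins endpoints, the http-log plugin name, and its config.http_endpoint field come from Kong's Admin API. The helpers only build the requests; nothing is sent until you pass a request to urllib.request.urlopen.

```python
import json
import urllib.request


def cluster_local_url(service_name: str, namespace: str, port: int = 8080) -> str:
    """Internal Kubernetes hostname in the format described above."""
    return f"http://{service_name}.{namespace}.svc.cluster.local:{port}"


def _post(url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def create_service_request(admin_url: str, name: str, upstream_url: str) -> urllib.request.Request:
    """Steps 1 and 3: register a sample Service with Kong."""
    return _post(f"{admin_url}/services", {"name": name, "url": upstream_url})


def enable_http_log_request(admin_url: str, service: str, endpoint: str) -> urllib.request.Request:
    """Steps 2 and 4: enable the HTTP Log plugin on a Service."""
    payload = {"name": "http-log", "config": {"http_endpoint": endpoint}}
    return _post(f"{admin_url}/services/{service}/plugins", payload)


# Hypothetical values: substitute your own Admin API address and names.
ADMIN_URL = "http://localhost:8001"
service_req = create_service_request(ADMIN_URL, "logstash-service", "http://example.com")
plugin_req = enable_http_log_request(
    ADMIN_URL, "logstash-service", cluster_local_url("logstash", "default")
)
# To actually send a request: urllib.request.urlopen(plugin_req)
```

The same two calls apply to FluentD, using its own Service name and internal hostname.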

Generating API Logs

Let's try generating some API logs by accessing the services. Before that, we need to create the corresponding Routes for both services.

Generate some sample requests to capture API logs.
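As a rough Python sketch of this step, the snippet below builds the Admin API request that adds a Route to a Service and then lists the proxy URLs to call. The Service name, path, and addresses are hypothetical, and the query parameter is only there to make each sample request distinguishable in the logs. Nothing is sent until you call send_samples or pass the route request to urllib.request.urlopen.

```python
import json
import urllib.request


def create_route_request(admin_url: str, service: str, path: str) -> urllib.request.Request:
    """Build (but do not send) the request that adds a Route to a Service."""
    payload = {"name": f"{service}-route", "paths": [path]}
    return urllib.request.Request(
        f"{admin_url}/services/{service}/routes",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def sample_urls(proxy_url: str, path: str, count: int) -> list:
    """Proxy URLs to call so that Kong emits one log entry per request."""
    return [f"{proxy_url}{path}?sample={i}" for i in range(count)]


def send_samples(urls: list) -> list:
    """Call each URL through the Kong proxy and return the HTTP status codes."""
    statuses = []
    for url in urls:
        with urllib.request.urlopen(url) as response:
            statuses.append(response.status)
    return statuses


# Hypothetical values: substitute your own Admin API and proxy addresses.
route_req = create_route_request("http://localhost:8001", "logstash-service", "/logstash")
urls = sample_urls("http://localhost:8000", "/logstash", 3)
# To actually generate traffic: send_samples(urls)
```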

Azure Log Analytics

Next, we'll check whether the logs have successfully landed in Azure Log Analytics.

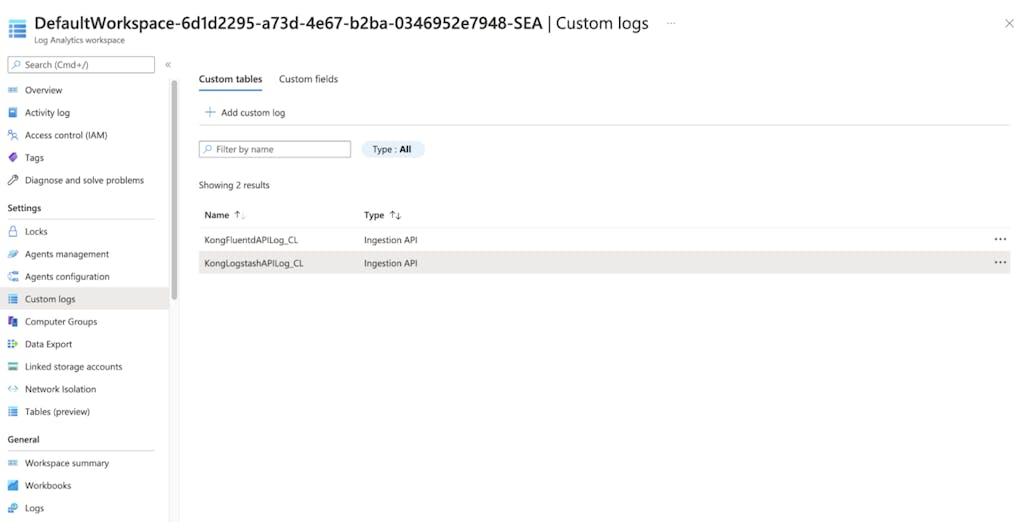

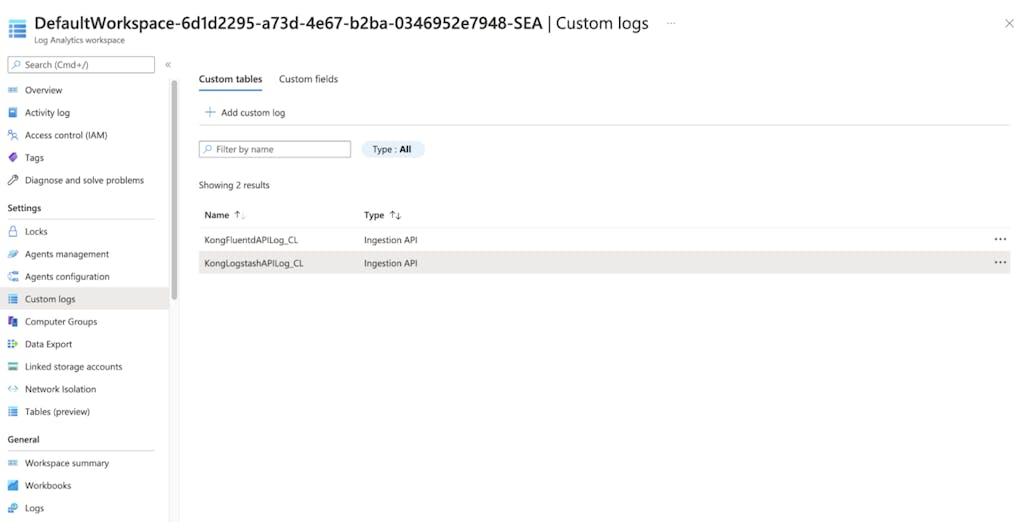

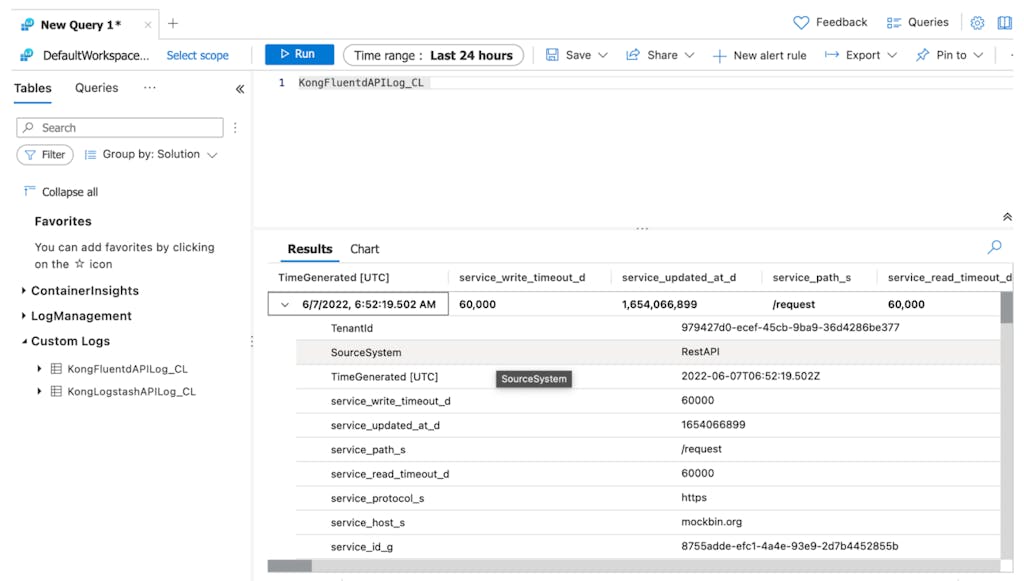

Go to the Azure portal, search for Log Analytics Workspace, and open the workspace that you created previously. Click on Custom logs, and you should see the two custom tables that we configured in the Logstash and FluentD configurations.

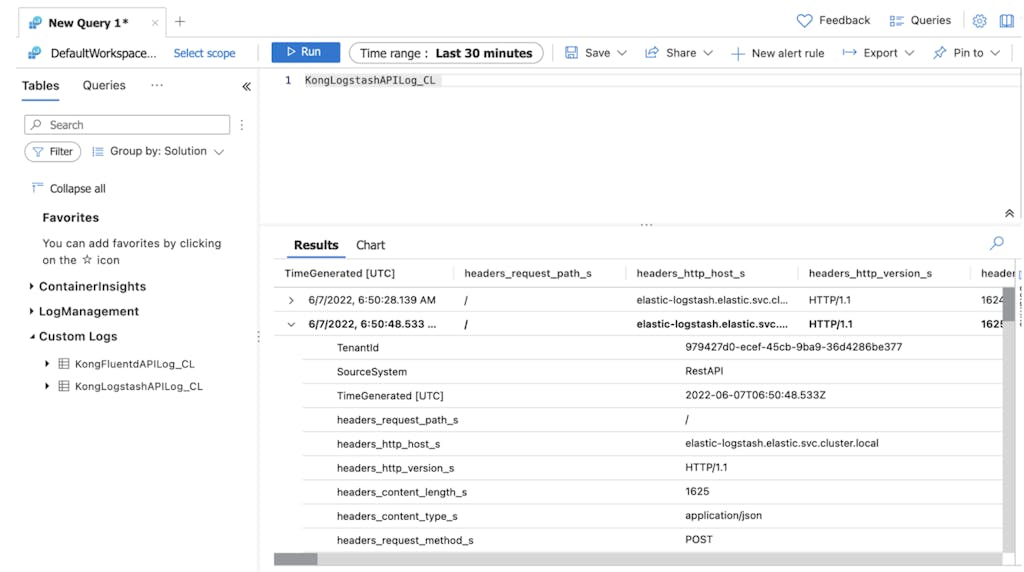

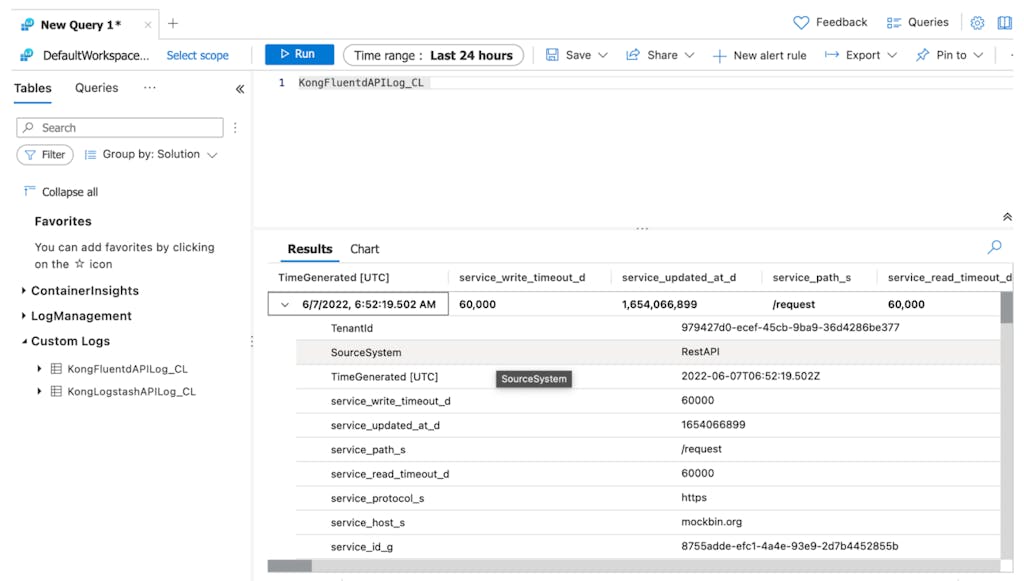

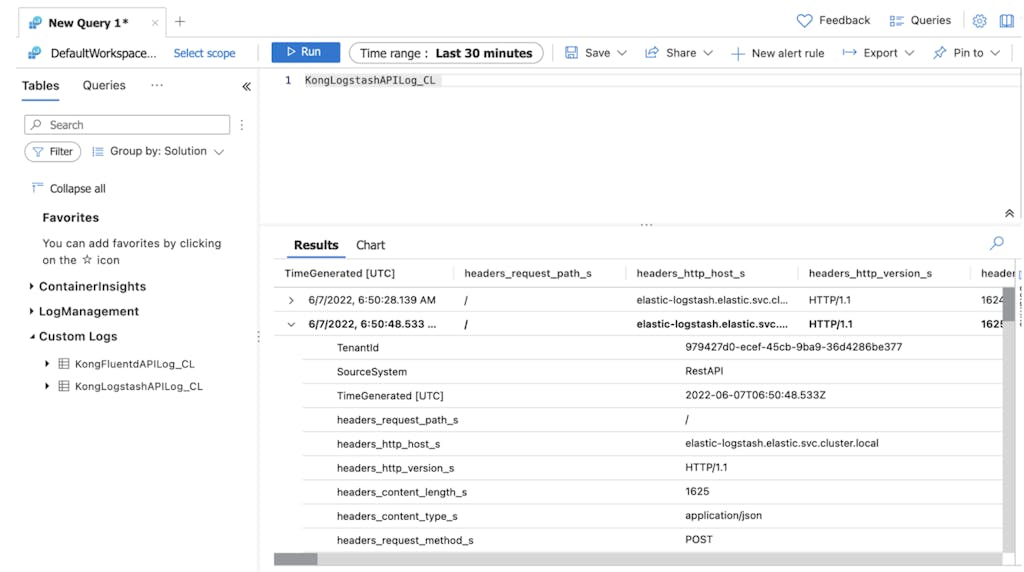

Running a few queries confirms that the API logs are being piped to Azure Log Analytics.

Logs forwarded by Logstash

Logs forwarded by FluentD

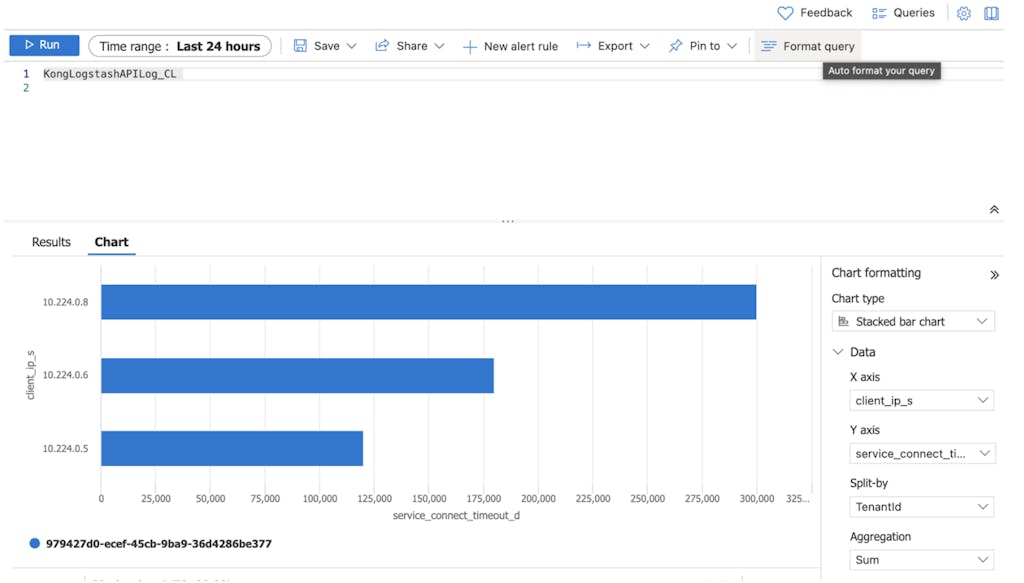

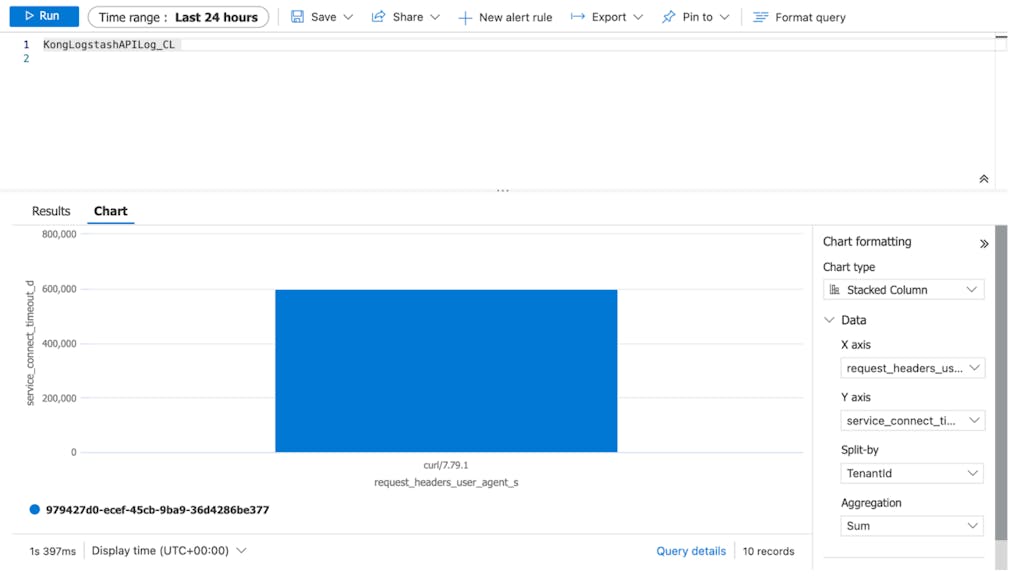

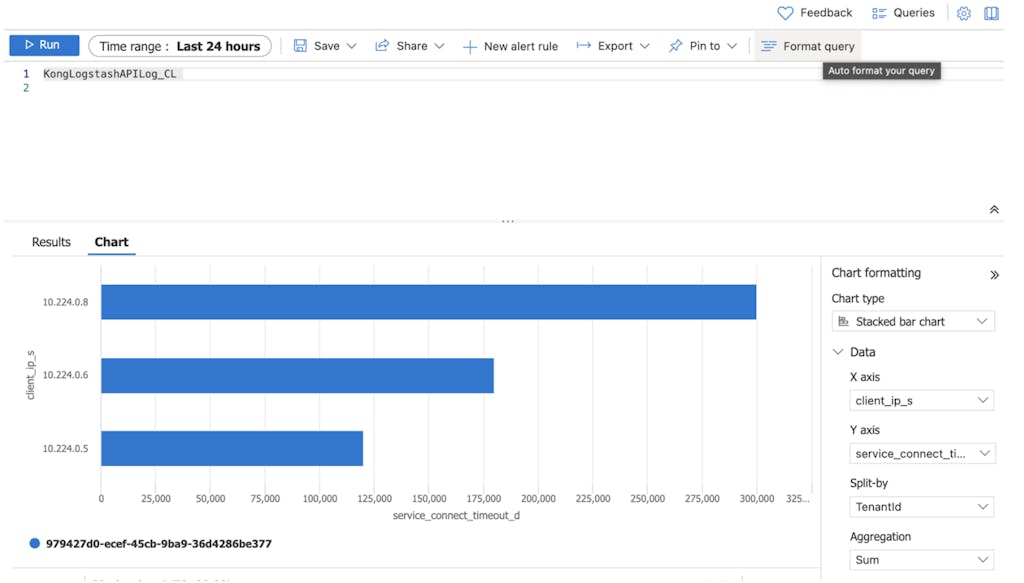

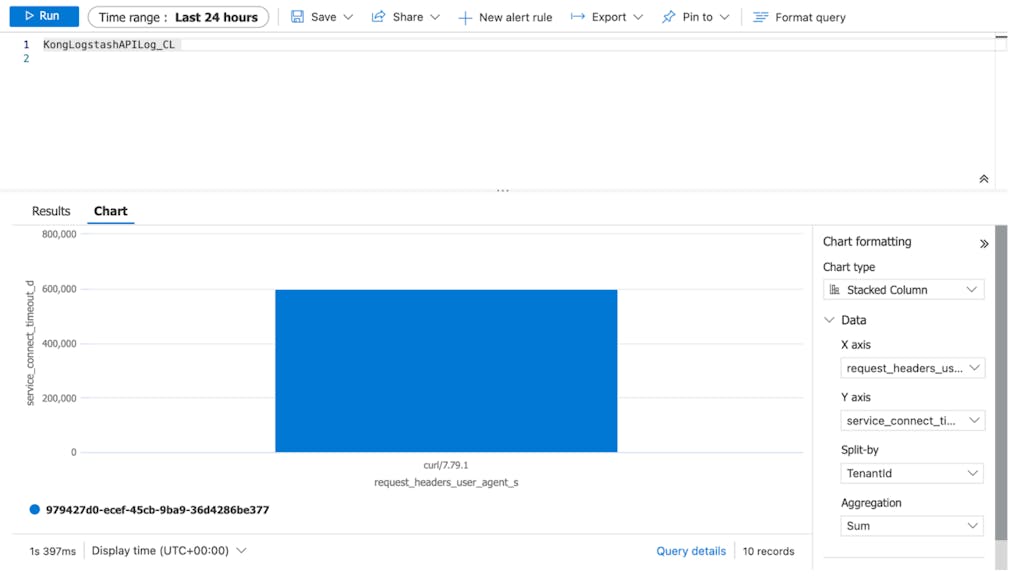

Basic Charts

We can configure some basic charts to analyze and observe the API logs.

Conclusion

This experiment was set up to address our customer's need to leverage their existing Azure cloud service for API logging. As the customer develops more microservices as part of their digital transformation, being able to analyze how those services behave is essential for day-to-day operations.

We demonstrated how Kong's plug-and-play nature lets it integrate with other solutions, and how seamless the setup can be.

Kong's plugins make things simple by abstracting away the integration complexity. Kong's ever-growing plugin ecosystem opens up more possibilities for our customers to create even more value from their technology stack.

Try out the steps above by yourself with a Kong installation. Got questions? Contact us!
