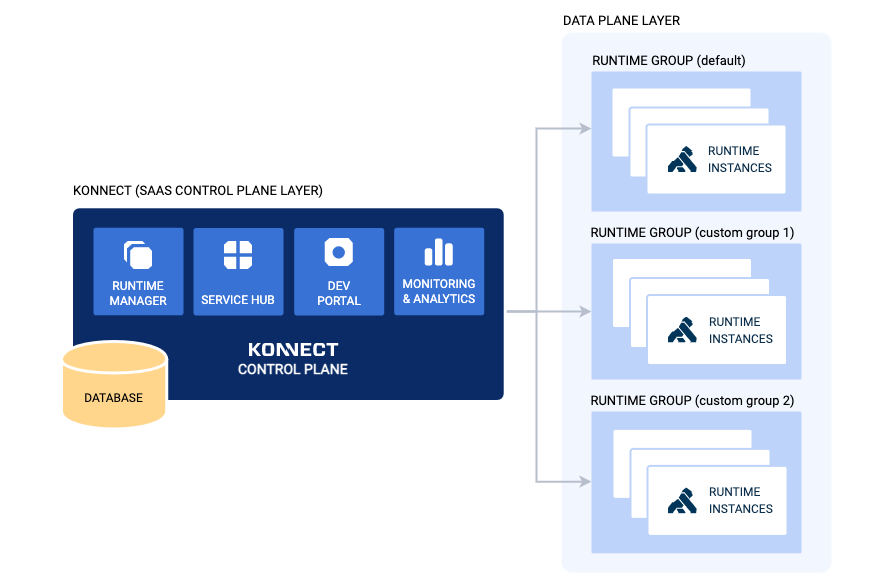

Application modernization projects often need workloads to run on multiple platforms, which calls for a hybrid deployment model.

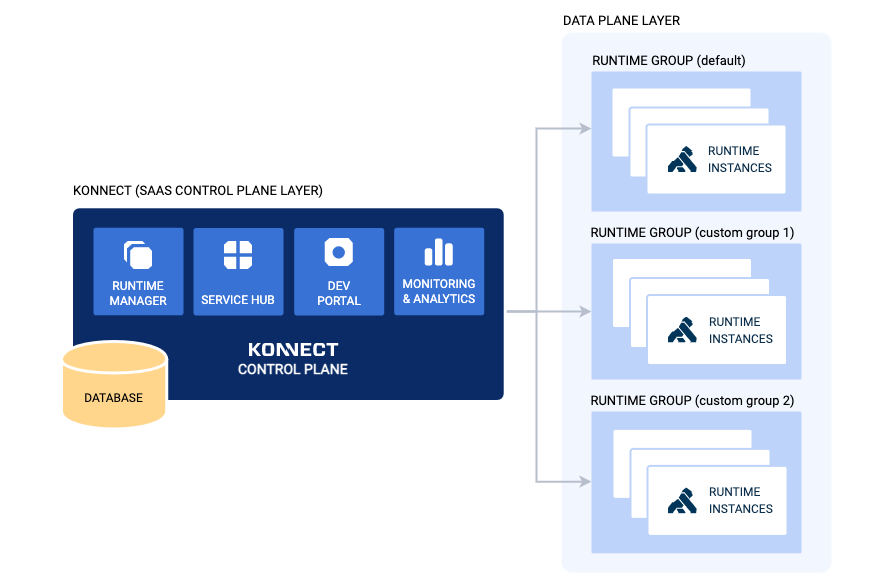

Kong Konnect is an API lifecycle management platform delivered as a service. The Control Plane, responsible for admin tasks, is hosted in the cloud by Kong. The Data Plane, built entirely on Kong Gateway, can be deployed across multiple environments, including VMs, Kubernetes, and Docker.

The Konnect Control Plane lets administrators carry out typical API lifecycle activities such as creating API routes, defining services, and applying policies. Data Plane runtime instances connect to the Control Plane using mutual TLS (mTLS), receive configuration updates from it, and handle customer-facing API traffic.

This blog post describes the Data Plane Runtime Instance installation process for Amazon Elastic Container Service (ECS). The blog post assumes you have already deployed Amazon ECS clusters and are familiar with its fundamental components and constructs like Services, Tasks, Container Instances, etc. Please check the official Amazon ECS documentation if you need to learn more about it.

Prepare the ECS installation VM

The installation process described in this post runs from a Linux-based EC2 VM. Make sure you have installed the tools used by the commands below:

- AWS CLI (aws)

- Amazon ECS CLI (ecs-cli)

- jq

- HTTPie (http)

To learn more about the ECS CLI, go to its GitHub repo.

Create the ECS Cluster

For the purposes of this blog post, we are going to create a fundamental and simple ECS Cluster. The main point here is to explain how the Konnect Data Plane instance can be deployed on this platform. For a production-ready deployment, an ECS Cluster should consider several other capabilities including Auto Scaling, security, among others.

ECS Profile and Cluster configuration

After installing the CLIs, we need to configure:

- The ECS Profile, with the AWS Access and Secret Keys

- The ECS Cluster, with settings such as the launch type (EC2 or Fargate), the region where it will be deployed, and the cluster name

Use the following command to configure the ECS Profile:

# ecs-cli configure profile --profile-name kong-profile --access-key <your_aws_access_key> --secret-key <your_aws_secret_key>

Use the next command to define the ECS Cluster configuration, with launch type EC2 in region us-west-2:

# ecs-cli configure --cluster kong-ecs --region us-west-2 --config-name kong-config --default-launch-type EC2

ECS Cluster Creation

With the configuration in place, we can create the actual ECS Cluster. The next command assumes you have already created an AWS Key Pair; check the AWS documentation to learn how to create one. We open port 8000 on the ECS Cluster because it is the Data Plane's default proxy port.

# ecs-cli up --keypair <your_key_pair> \
  --capability-iam \
  --cluster-config kong-config \
  --instance-type t3.2xlarge \
  --tags project=kong \
  --port 8000 \
  --region us-west-2

In case you want to destroy it, run the following command (we are skipping this for now):

# ecs-cli down --cluster-config kong-config --region us-west-2

You can check the ECS Cluster state with the command:

# aws ecs describe-clusters --clusters kong-ecs --region us-west-2

{

"clusters": [

{

"clusterArn": "arn:aws:ecs:us-west-2:1234567890:cluster/kong-ecs",

"clusterName": "kong-ecs",

"status": "ACTIVE",

"registeredContainerInstancesCount": 1,

"runningTasksCount": 0,

"pendingTasksCount": 0,

"activeServicesCount": 0,

"statistics": [],

"tags": [],

"settings": [],

"capacityProviders": [],

"defaultCapacityProviderStrategy": []

}

],

"failures": []

}

ECS Cluster Container Instance Public IP

The Cluster we just created has a single Container Instance where the Konnect Data Plane Runtime instance will be deployed. In order to send requests to the Data Plane once it is deployed, we need to get the Public IP provisioned for the ECS Container Instance.

To check the Container Instance Public IP we need to get the Container Instance ID first. The following command returns the Container Instance Amazon Resource Name (ARN) where you can take the ID from:

# aws ecs list-container-instances --cluster kong-ecs --region us-west-2

{

"containerInstanceArns": [

"arn:aws:ecs:us-west-2:1234567890:container-instance/kong-ecs/96f9f3f692da4be9a7b3e3392dc7d05b"

]

}
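The ID is the last path segment of the ARN. As a small sketch (the helper name arn_to_id is ours, not part of the AWS CLI), plain shell parameter expansion can pull it out so it does not have to be copied by hand:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last "/"-separated segment of an ARN.
arn_to_id() {
  printf '%s\n' "${1##*/}"
}

# ARN taken from the sample output above.
arn="arn:aws:ecs:us-west-2:1234567890:container-instance/kong-ecs/96f9f3f692da4be9a7b3e3392dc7d05b"
container_instance_id="$(arn_to_id "$arn")"
echo "$container_instance_id"
```

The resulting variable can then be passed to the describe-container-instances call.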

Use the Container Instance ID to get the EC2 Instance ID related to it:

# aws ecs describe-container-instances --container-instances 96f9f3f692da4be9a7b3e3392dc7d05b --cluster kong-ecs --region us-west-2 | jq -r '.containerInstances[0].ec2InstanceId'

i-0d8c29d043a37410f

Now you can look up the EC2 instance's public IP address and, if you like, its DNS name:

# aws ec2 describe-instances --instance-ids i-0d8c29d043a37410f --region us-west-2 | jq -r '.Reservations[].Instances[].PublicIpAddress'

34.214.132.0

# aws ec2 describe-instances --instance-ids i-0d8c29d043a37410f --region us-west-2 | jq -r '.Reservations[].Instances[].PublicDnsName'

ec2-34-214-132-0.us-west-2.compute.amazonaws.com
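The three lookups above can also be chained. The sketch below is one possible way to do it, assuming the cluster has at least one registered Container Instance. The function name container_instance_public_ip is ours, and it uses the AWS CLI's global --query and --output options in place of jq.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the public IP of the first Container Instance
# registered in an ECS cluster. Arguments: cluster name, AWS region.
container_instance_public_ip() {
  local cluster="$1" region="$2" arn ec2_id

  # 1. ARN of the first Container Instance in the cluster.
  arn="$(aws ecs list-container-instances --cluster "$cluster" --region "$region" \
    --query 'containerInstanceArns[0]' --output text)" || return 1

  # 2. EC2 instance ID behind that Container Instance
  #    (the Container Instance ID is the last path segment of the ARN).
  ec2_id="$(aws ecs describe-container-instances --cluster "$cluster" --region "$region" \
    --container-instances "${arn##*/}" \
    --query 'containerInstances[0].ec2InstanceId' --output text)" || return 1

  # 3. Public IP address of that EC2 instance.
  aws ec2 describe-instances --instance-ids "$ec2_id" --region "$region" \
    --query 'Reservations[0].Instances[0].PublicIpAddress' --output text
}
```

For example, DP_IP="$(container_instance_public_ip kong-ecs us-west-2)" would capture the address for use in later requests.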

Konnect Data Plane

With the ECS Cluster up and running, it's time to deploy the Konnect Data Plane.

The main tasks of the installation process are:

- Register to Kong Konnect

- Store the Data Plane Digital Certificate and Private Key pair as AWS Systems Manager parameters. The pair is used by the Data Plane to connect to the Konnect Runtime Group defined in the Control Plane.

- Create new AWS Identity and Access Management (IAM) Roles and Policies

- Create ECS Task Definition and Parameters files

- Deploy Konnect Data Plane

Register to Kong Konnect

You will need a Konnect subscription. Go to the Konnect registration page to get a 14-day free trial of a Konnect Plus subscription. After 14 days, you can choose to downgrade to the free version or continue with a paid Plus subscription.

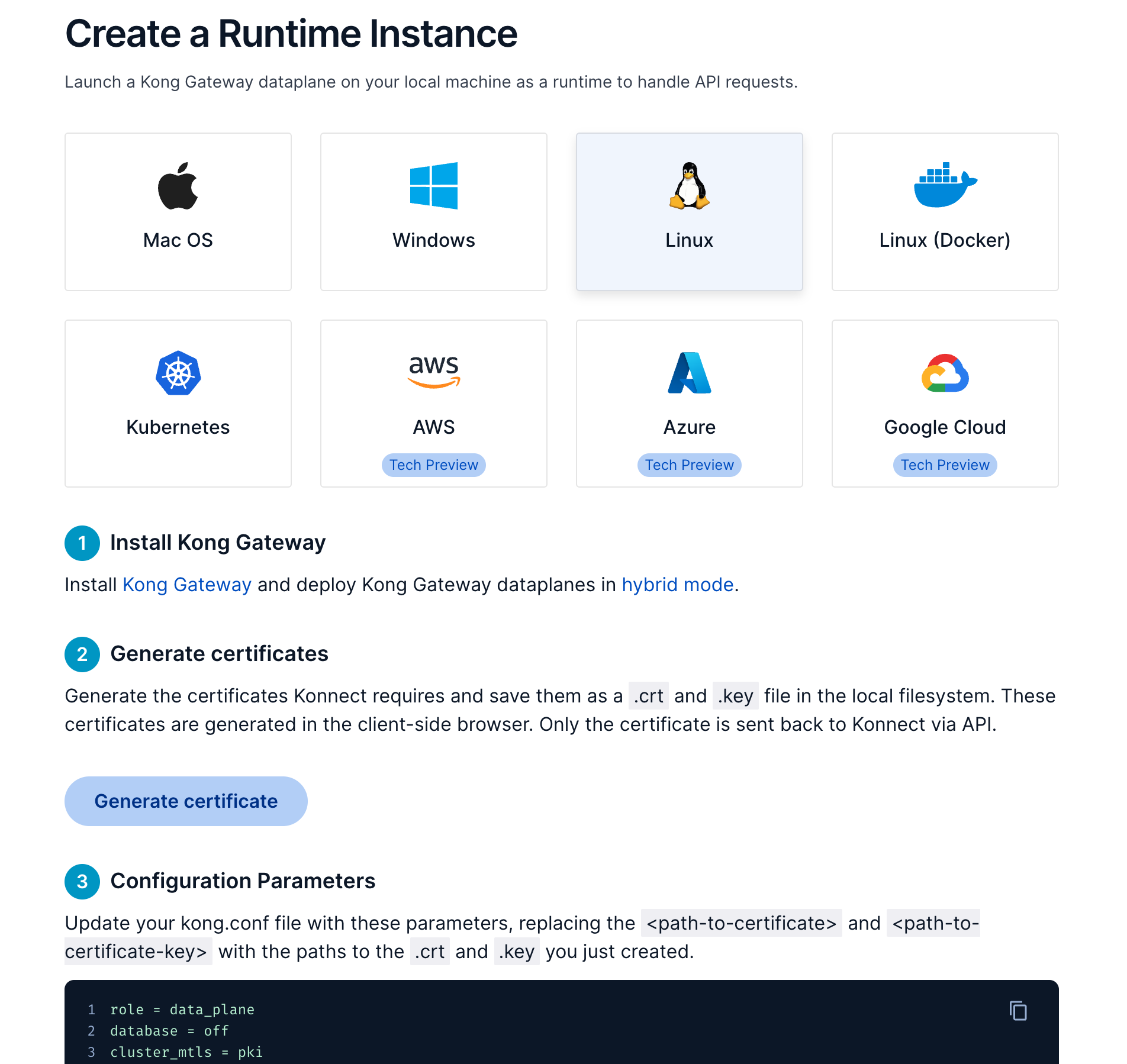

Get and Store the Data Plane Digital Certificate and Private Key pair

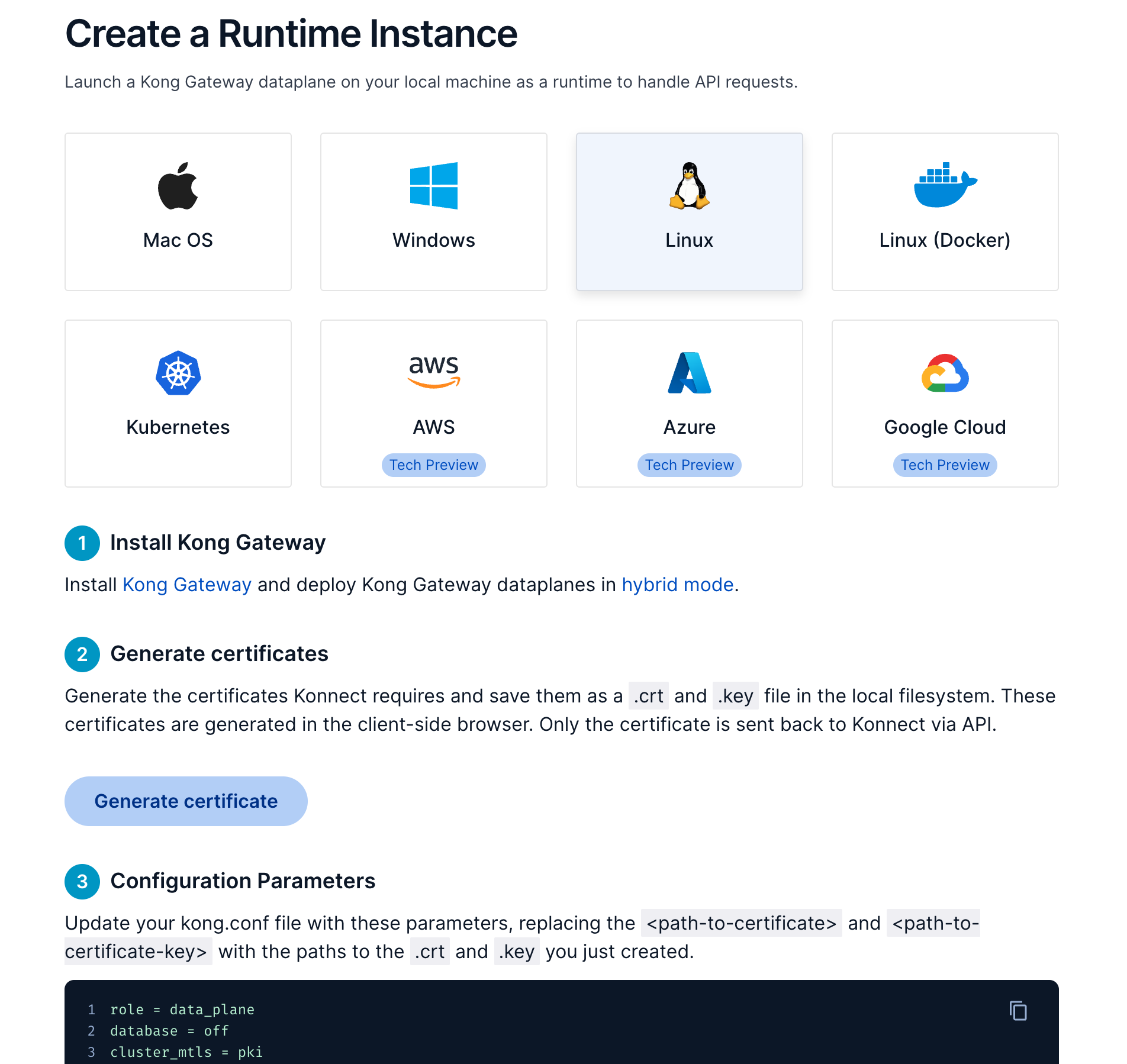

After registering, log in to your Konnect Control Plane and click the Runtime Manager menu option. Choose the default Runtime Group (or define a new one if you prefer). Click + New Runtime Instance. You'll see all the platforms where you can create a new Runtime Instance.

Konnect provides instructions for every platform option, including the Digital Certificate and Private Key pair. You can use any of them, for example the instructions for Linux.

Inside the Configuration Parameters box, you'll see some settings. Copy and save the following:

- cluster_control_plane = <your_control_plane_name>:443

- cluster_server_name = <your_control_plane_name>

- cluster_telemetry_endpoint = <your_telemetry_server_name>:443

- cluster_telemetry_server_name = <your_telemetry_server_name>

We are going to use them along with the other ones later when deploying the Kong Data Plane instance on ECS.

Click the Generate certificate button to get your Digital Certificate and Private Key pair. For each one, click the copy button and paste the value into the following commands to store them as AWS Systems Manager parameters.

Digital Certificate

aws ssm put-parameter \
  --name kong_cert \
  --region us-west-2 \
  --type String \
  --value "-----BEGIN CERTIFICATE-----
MIIDlDCCAn6gAwIBAgIBATALBgkqhkiG9w0BAQ0wNDEyMAkGA1UEBhMCVVMwJQYD
VQQDHh4AawBvAG4AbgBlAGMAdAAtAGQAZQBmAGEAdQBsAHQwHhcNMjMwMzAyMTMx
………
-----END CERTIFICATE-----"

Private Key

aws ssm put-parameter \
  --name kong_key \
  --region us-west-2 \
  --type String \
  --value "-----BEGIN PRIVATE KEY-----
MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQDIFDaI++h2JY7K
gAxJ29Vq9i8aMeIa8pkapuKewYso/KNPSyNmyPIyDb3D8KET3gxk4vALG4J+dL9Q
………
-----END PRIVATE KEY-----"
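Pasting PEM text into a command line is easy to get wrong. As an alternative sketch, assuming you saved the two values to local files (tls.crt and tls.key are hypothetical file names), the AWS CLI can read a parameter value from a file with the file:// prefix. The helper below is ours. It also takes the parameter type as an argument, so the private key could be stored as a SecureString instead of a String; that is a suggestion rather than what this post does, and depending on the KMS key used, the task execution role may then also need kms:Decrypt permission.

```shell
#!/usr/bin/env bash
# Hypothetical helper: store a PEM file as an SSM parameter.
# Arguments: parameter name, path to PEM file, parameter type (default: String).
store_pem_parameter() {
  local name="$1" pem_file="$2" type="${3:-String}"

  # Refuse to upload something that does not look like PEM.
  if ! grep -q -e '-----BEGIN ' "$pem_file"; then
    echo "error: $pem_file does not look like a PEM file" >&2
    return 1
  fi

  # file:// tells the AWS CLI to read the value from the file.
  aws ssm put-parameter \
    --name "$name" \
    --region us-west-2 \
    --type "$type" \
    --value "file://$pem_file"
}
```

With the files in place, store_pem_parameter kong_cert tls.crt and store_pem_parameter kong_key tls.key would create the same two parameters as the commands above.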

Create new AWS IAM Roles and Policies

Amazon ECS provides, natively, a policy named AmazonECSTaskExecutionRolePolicy which contains the permissions required by ECS to run its fundamental tasks.

If you go to AWS IAM console you'll see the policy defined as follows:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ecr:GetAuthorizationToken",

"ecr:BatchCheckLayerAvailability",

"ecr:GetDownloadUrlForLayer",

"ecr:BatchGetImage",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

"Resource": "*"

}

]

}

Create IAM Role

We are going to create a new Role named ecsTaskExecutionRole to be used by our Kong Data Plane deployment. Besides the AmazonECSTaskExecutionRolePolicy policy, the Role will have a second policy attached that allows ECS to access the AWS Systems Manager parameters we created previously.

Create a file named ecs-tasks-trust-policy.json containing the trust policy for the IAM Role we are going to create:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "",

"Effect": "Allow",

"Principal": {

"Service": "ecs-tasks.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

Create the new IAM Role named ecsTaskExecutionRole using the trust policy created previously:

# aws iam create-role --role-name ecsTaskExecutionRole --assume-role-policy-document file://ecs-tasks-trust-policy.json

Attach the existing AmazonECSTaskExecutionRolePolicy policy to the new Role:

# aws iam attach-role-policy \
  --role-name ecsTaskExecutionRole \
  --policy-arn arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy

Create a Systems Manager policy

Create another file named ecs-ssm-policy.json to allow ECS to access the AWS Systems Manager parameters we created before. Replace <your_aws_account> with your account ID.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ssm:GetParameters"

],

"Resource": [

"arn:aws:ssm:us-west-2:<your_aws_account>:parameter/kong_key",

"arn:aws:ssm:us-west-2:<your_aws_account>:parameter/kong_cert"

]

}

]

}

Create the new Policy with:

aws iam create-policy --policy-name ecsKongSSMPolicy --policy-document file://ecs-ssm-policy.json

Attach the new Policy to the same ecsTaskExecutionRole Role:

aws iam attach-role-policy \
  --role-name ecsTaskExecutionRole \
  --policy-arn arn:aws:iam::<your_aws_account>:policy/ecsKongSSMPolicy
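To avoid editing the account ID by hand, the policy file can be generated. The following is a minimal sketch: render_ssm_policy is a hypothetical helper of ours that prints the same policy document shown above for a given account ID and region.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SSM read policy for the two Kong parameters.
# Arguments: AWS account ID, AWS region (default: us-west-2).
render_ssm_policy() {
  local account_id="$1" region="${2:-us-west-2}"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["ssm:GetParameters"],
      "Resource": [
        "arn:aws:ssm:${region}:${account_id}:parameter/kong_key",
        "arn:aws:ssm:${region}:${account_id}:parameter/kong_cert"
      ]
    }
  ]
}
EOF
}
```

For example, render_ssm_policy "$(aws sts get-caller-identity --query Account --output text)" > ecs-ssm-policy.json writes the file for the account behind your current credentials.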

ECS Task Definition and Parameters Files

The latest version of the Amazon ECS CLI supports Docker Compose file syntax versions 1, 2, and 3 for the Task Definition specification.

Besides the Docker Compose based file, we can specify ECS related parameters in a second file.

docker-compose-konnect.yml

Create a file named docker-compose-konnect.yml to define our ECS Task Definition. Note that, as stated before, the file follows the Docker Compose file syntax.

version: "3"
services:
  konnect-data-plane:
    image: kong/kong-gateway:3.2.1.0-amazonlinux-2022
    container_name: konnect-data-plane
    restart: always
    ports:
      - 8000:8000
      - 8443:8443
    environment:
      - KONG_ROLE=data_plane
      - KONG_DATABASE=off
      - KONG_KONNECT_MODE=on
      - KONG_VITALS=off
      - KONG_CLUSTER_MTLS=pki
      - KONG_CLUSTER_CONTROL_PLANE=<your_control_plane_name>:443
      - KONG_CLUSTER_SERVER_NAME=<your_control_plane_name>
      - KONG_CLUSTER_TELEMETRY_ENDPOINT=<your_telemetry_server_name>:443
      - KONG_CLUSTER_TELEMETRY_SERVER_NAME=<your_telemetry_server_name>
      - KONG_LUA_SSL_TRUSTED_CERTIFICATE=system
    logging:
      driver: awslogs
      options:
        awslogs-group: log-group1
        awslogs-region: us-west-2
        awslogs-stream-prefix: konnect-demo

The main settings are:

- image: kong/kong-gateway:3.2.1.0-amazonlinux-2022. Kong provides images for several OSes. Check Kong's Docker Hub page to see all versions available.

- ports: these are the default ports for the HTTP and HTTPS protocols.

- environment:

  - KONG_ROLE=data_plane - specifies that this instance will play the Data Plane role only.

  - KONG_DATABASE=off - this is a DB-less instance.

  - KONG_KONNECT_MODE=on - the instance is connected to the Konnect Control Plane.

  - KONG_CLUSTER_MTLS=pki - the instance uses its own Digital Certificate and Private Key pair. The pair is specified in the ecs-params.yml file described next.

  - KONG_CLUSTER_* - these are the fields you copied from the Konnect Control Plane.

- logging: it leverages Amazon CloudWatch as the Konnect Data Plane log infrastructure.

ecs-params.yml

Since there are certain fields in an ECS Task Definition that do not correspond to fields in a Docker Compose file, you specify those values in another specific file.

Create a file named ecs-params.yml to include the specific ECS settings we need for our task definition:

version: 1
task_definition:
  task_size:
    cpu_limit: 256
    mem_limit: 1024
  task_execution_role: arn:aws:iam::<your_aws_account>:role/ecsTaskExecutionRole
  services:
    konnect-data-plane:
      essential: true
      secrets:
        - value_from: kong_key
          name: KONG_CLUSTER_CERT_KEY
        - value_from: kong_cert
          name: KONG_CLUSTER_CERT

The main settings are:

- secrets: these settings refer to the AWS Systems Manager parameters we created with the Digital Certificate and Private Key we got from the Konnect Control Plane.

- task_execution_role: that is the ARN of the AWS IAM Role we created in the previous section.
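Both files still contain placeholders such as <your_control_plane_name> and <your_aws_account>. Before deploying, a quick check like the sketch below (check_placeholders is a hypothetical helper of ours) can catch any that were left unreplaced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if any given file still contains a "<your_...>" placeholder.
check_placeholders() {
  local status=0 file
  for file in "$@"; do
    # -n prints line numbers so leftovers are easy to find.
    if grep -n '<your_[a-z_]*>' "$file"; then
      echo "error: unreplaced placeholder(s) in $file" >&2
      status=1
    fi
  done
  return "$status"
}
```

Running check_placeholders docker-compose-konnect.yml ecs-params.yml should print nothing and return 0 once every value has been filled in.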

Start the Konnect Data Plane Runtime Instance ECS Service

For a quick recap, we have, up to this point, created the following artifacts:

- Two AWS Systems Manager parameters with the Digital Certificate and Private Key pair generated by the Konnect Control Plane

- A new AWS IAM Role with policies attached so the ECS Task can access all dependencies needed.

- ECS Task Definition and Parameters files.

Now we are ready to bring the Data Plane instance up. We pass the two files to the ECS CLI as parameters:

# ecs-cli compose --project-name kong-project \
  --file docker-compose-konnect.yml \
  --ecs-params ./ecs-params.yml \
  --debug service up \
  --region us-west-2 \
  --ecs-profile kong-profile \
  --cluster-config kong-config \
  --create-log-groups

After starting the ECS Task, you should see it running with a command like this:

# ecs-cli ps

Name State Ports TaskDefinition Health

kong-ecs/99e5f433a5d74750a60c589b152dfb8e/konnect-data-plane RUNNING 34.214.132.0:8000->8000/tcp, 34.214.132.0:8443->8443/tcp kong-project:48 UNKNOWN

Check the Konnect Data Plane Runtime Instance

If we send a request to the ECS Container Instance on the port we specified for the Data Plane, we should get a response from it:

# http 34.214.132.0:8000

HTTP/1.1 404 Not Found

Connection: keep-alive

Content-Length: 48

Content-Type: application/json; charset=utf-8

Date: Fri, 03 Mar 2023 20:13:11 GMT

Server: kong/3.2.1.0-enterprise-edition

X-Kong-Response-Latency: 1

{

"message": "no Route matched with those values"

}

Since we haven't defined any API at this point, the Runtime Instance replies with the message above.
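The same check can be scripted. The sketch below uses curl rather than HTTPie (our substitution) and two hypothetical helpers, http_status and expect_status. A 404 from Kong at this stage means the Data Plane is up but has no Routes yet.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the HTTP status code returned for a URL.
http_status() {
  # -s: silent, -o /dev/null: discard the body, -w: print the status code.
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Hypothetical helper: succeed only if the URL answers with the expected status.
expect_status() {
  local url="$1" expected="$2" actual
  actual="$(http_status "$url")"
  if [ "$actual" != "$expected" ]; then
    echo "expected $expected from $url, got $actual" >&2
    return 1
  fi
}
```

For example, expect_status http://34.214.132.0:8000 404 should succeed for the instance deployed above.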

Check the Konnect Control Plane

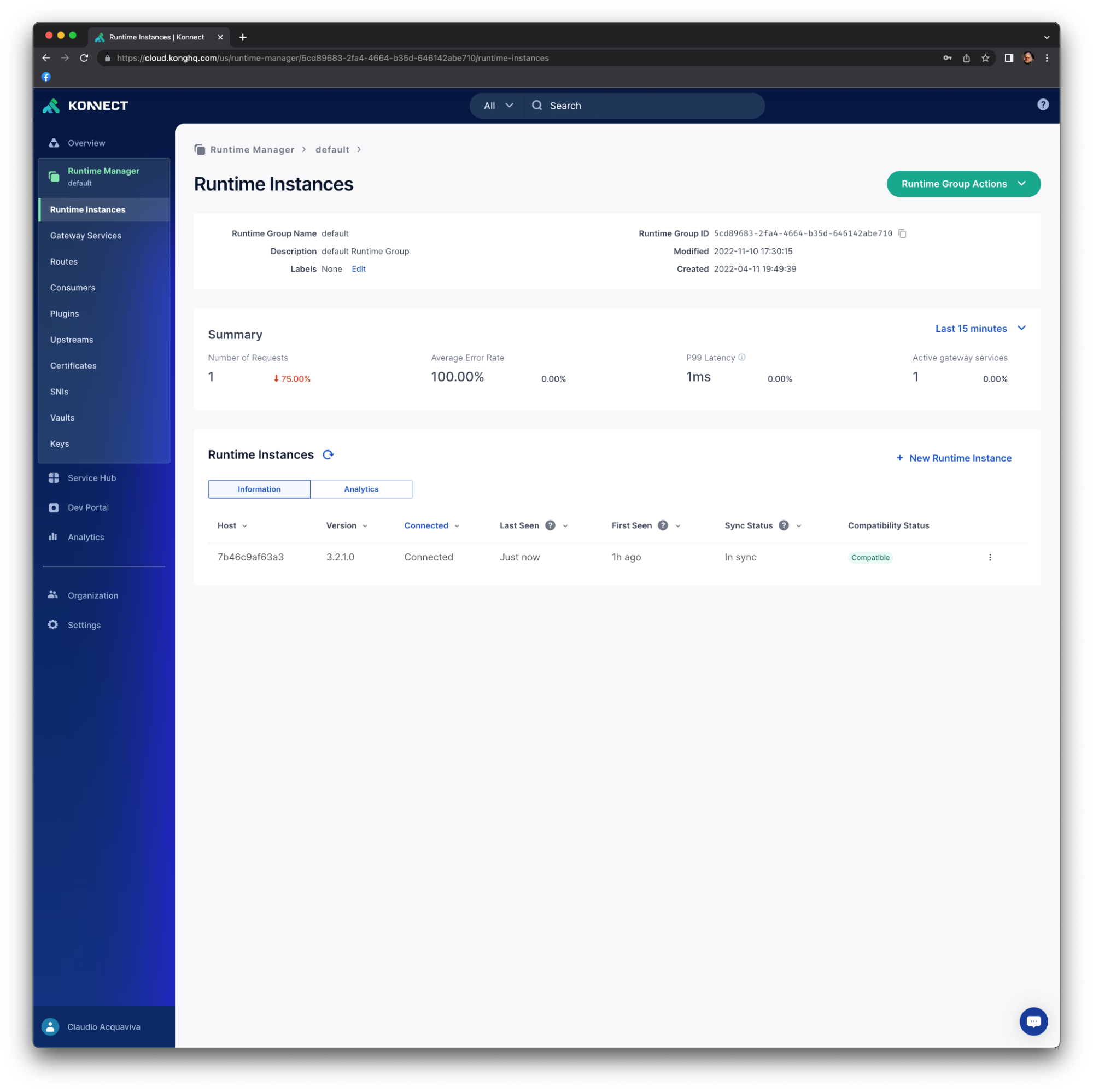

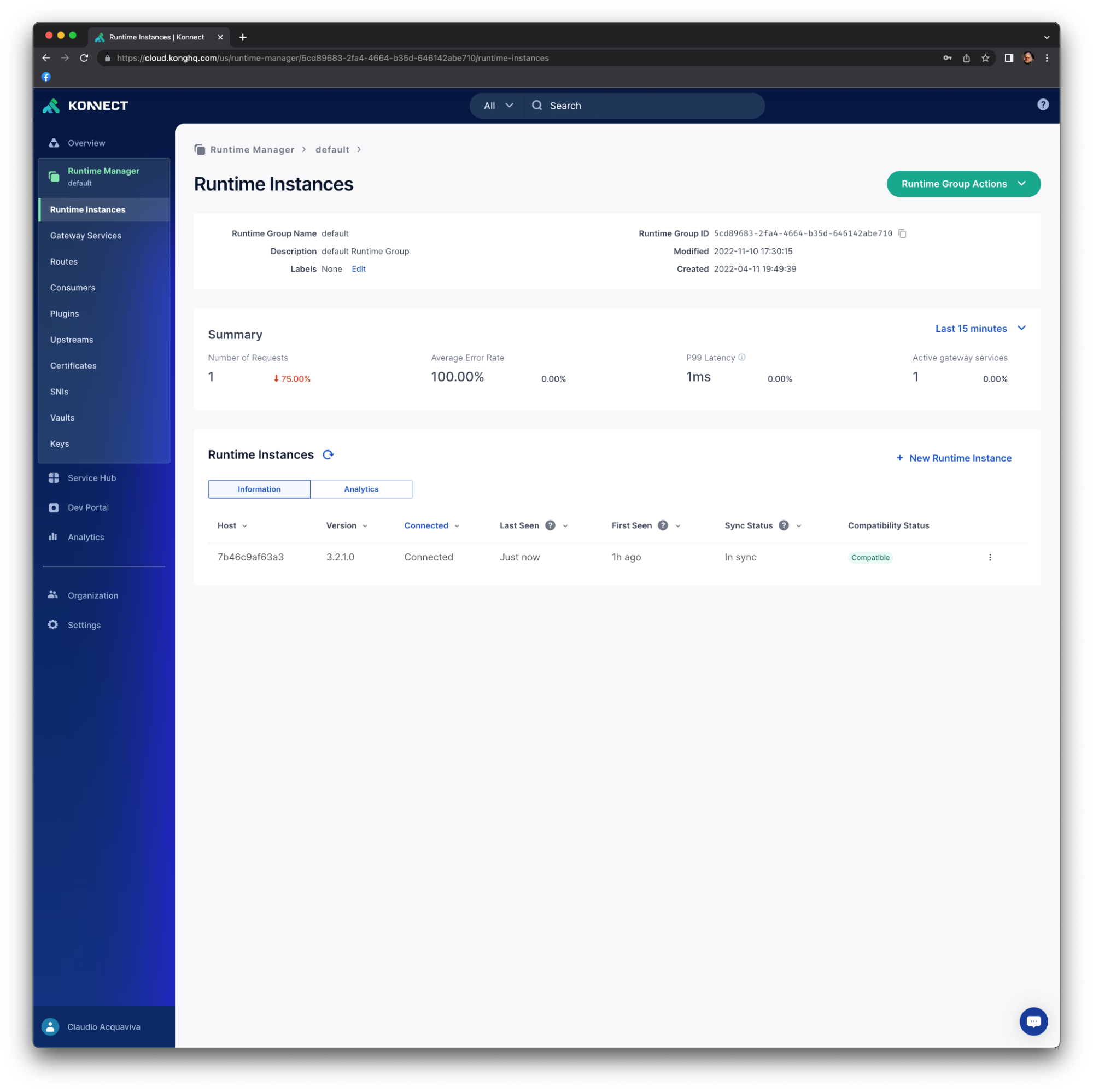

Similarly, we can check the Runtime Instance in the Control Plane. Go back to the Konnect Control Plane, click the Runtime Manager menu option, and choose the default Runtime Group. You should see your Runtime Instance running.

Konnect API Creation

Create a Konnect Service and Route

Now that we have a fully operational ECS-based Konnect Data Plane Runtime Instance running, let's create our first Konnect Service and Route:

Create a Service

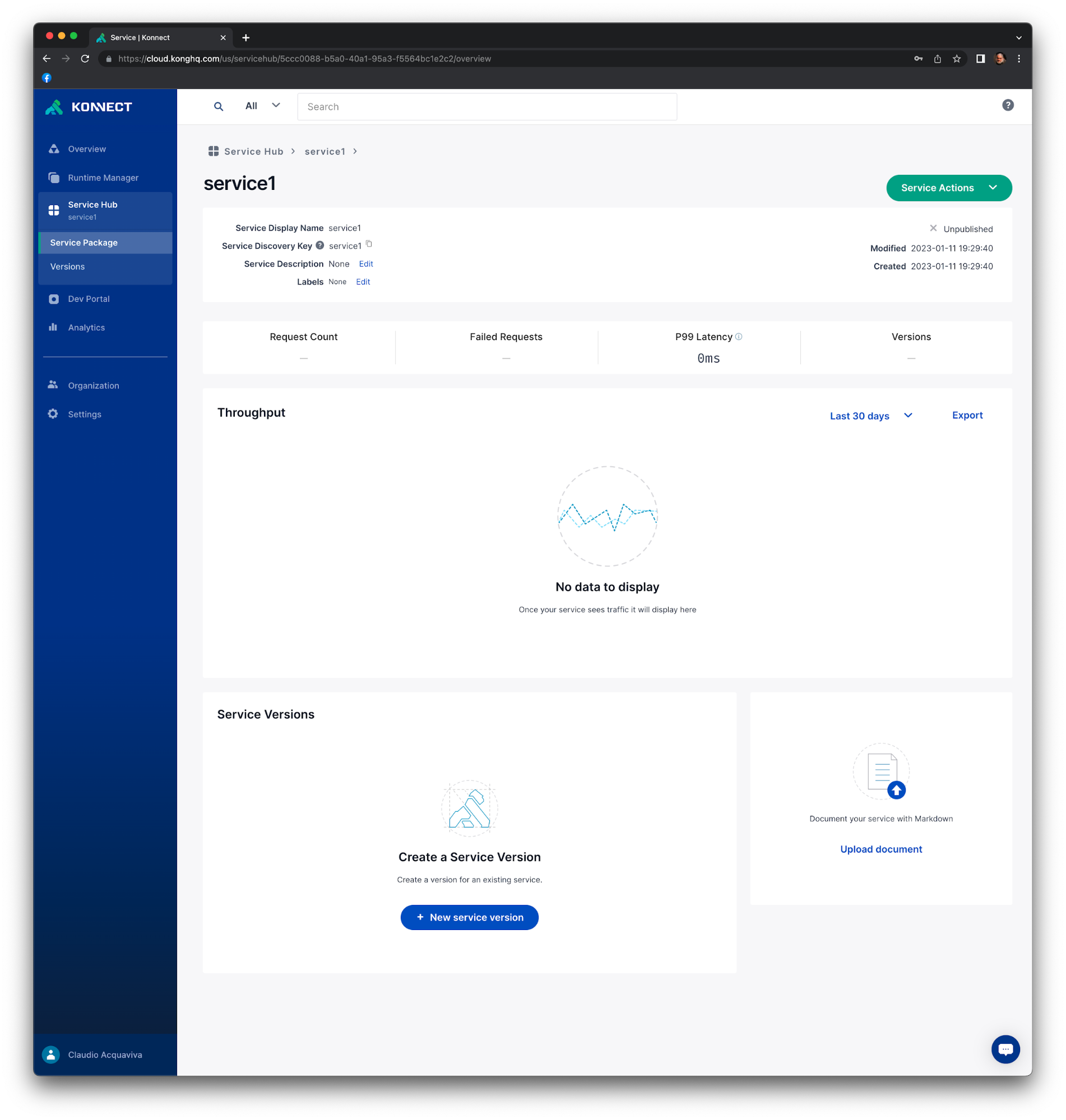

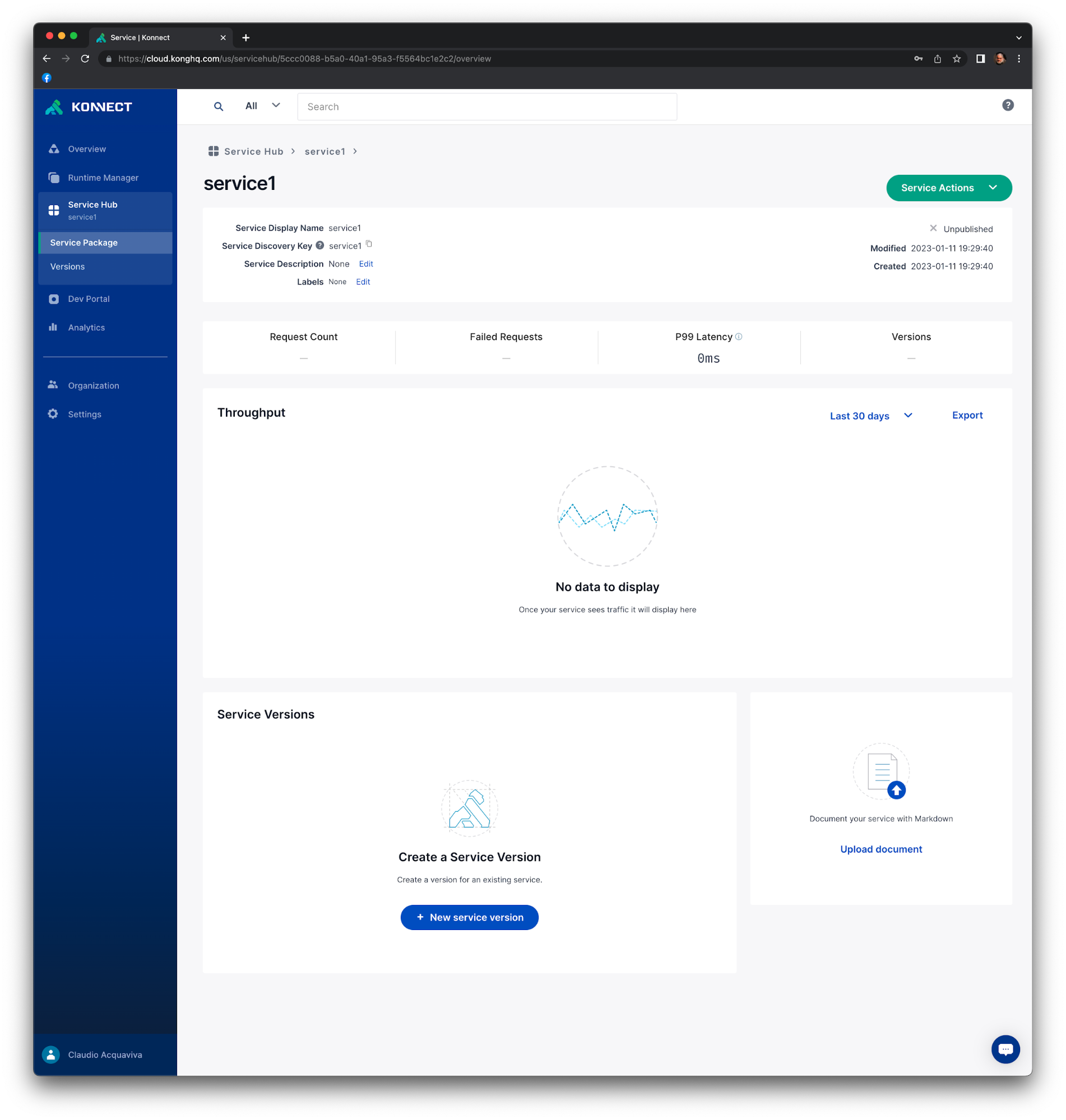

Go to Konnect Admin GUI and, from the left navigation menu, click Service Hub. Click "+ New service".

For Display Name type service1 and click Save. You should see your new service’s overview page.

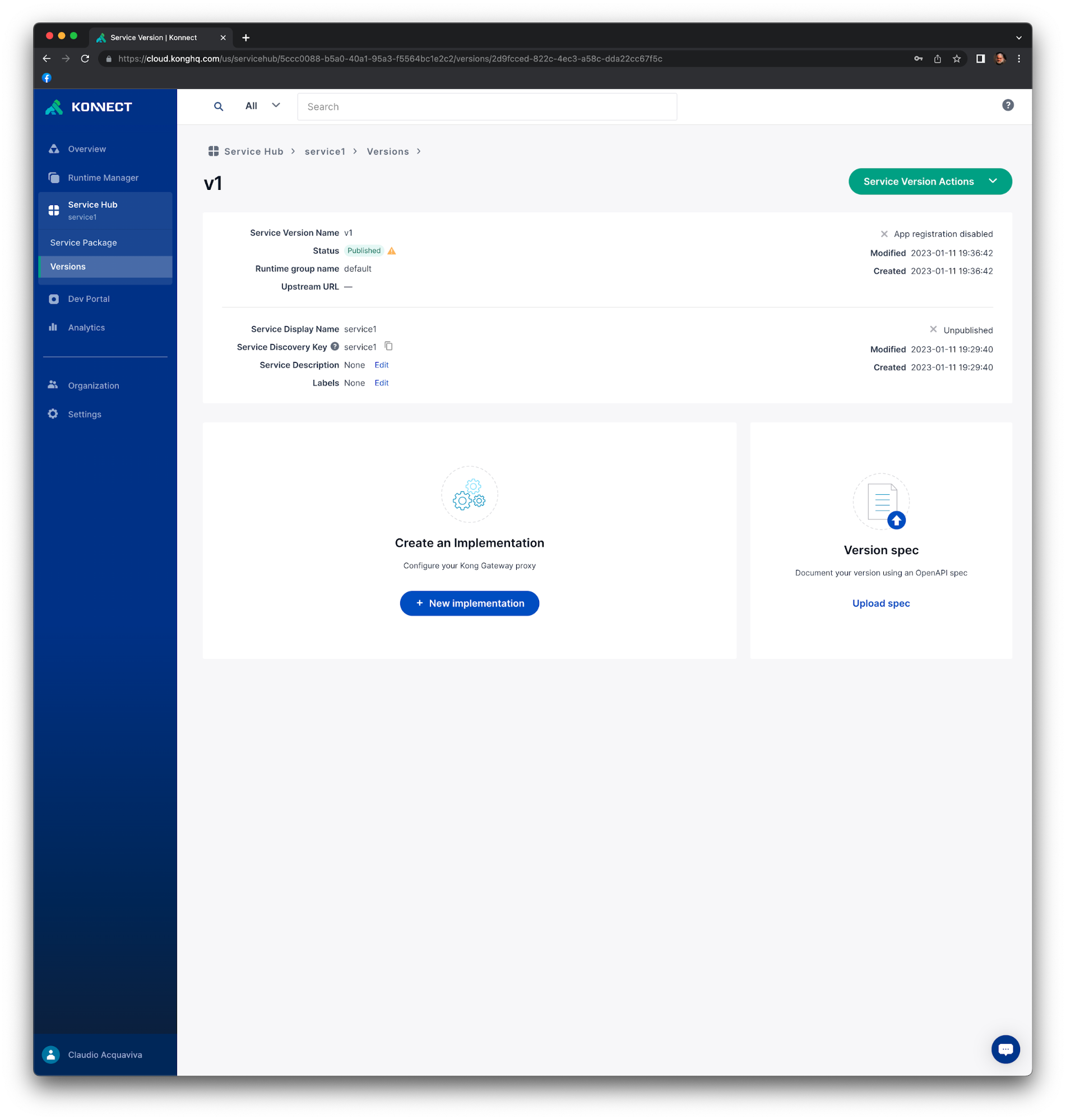

Create a Service Version

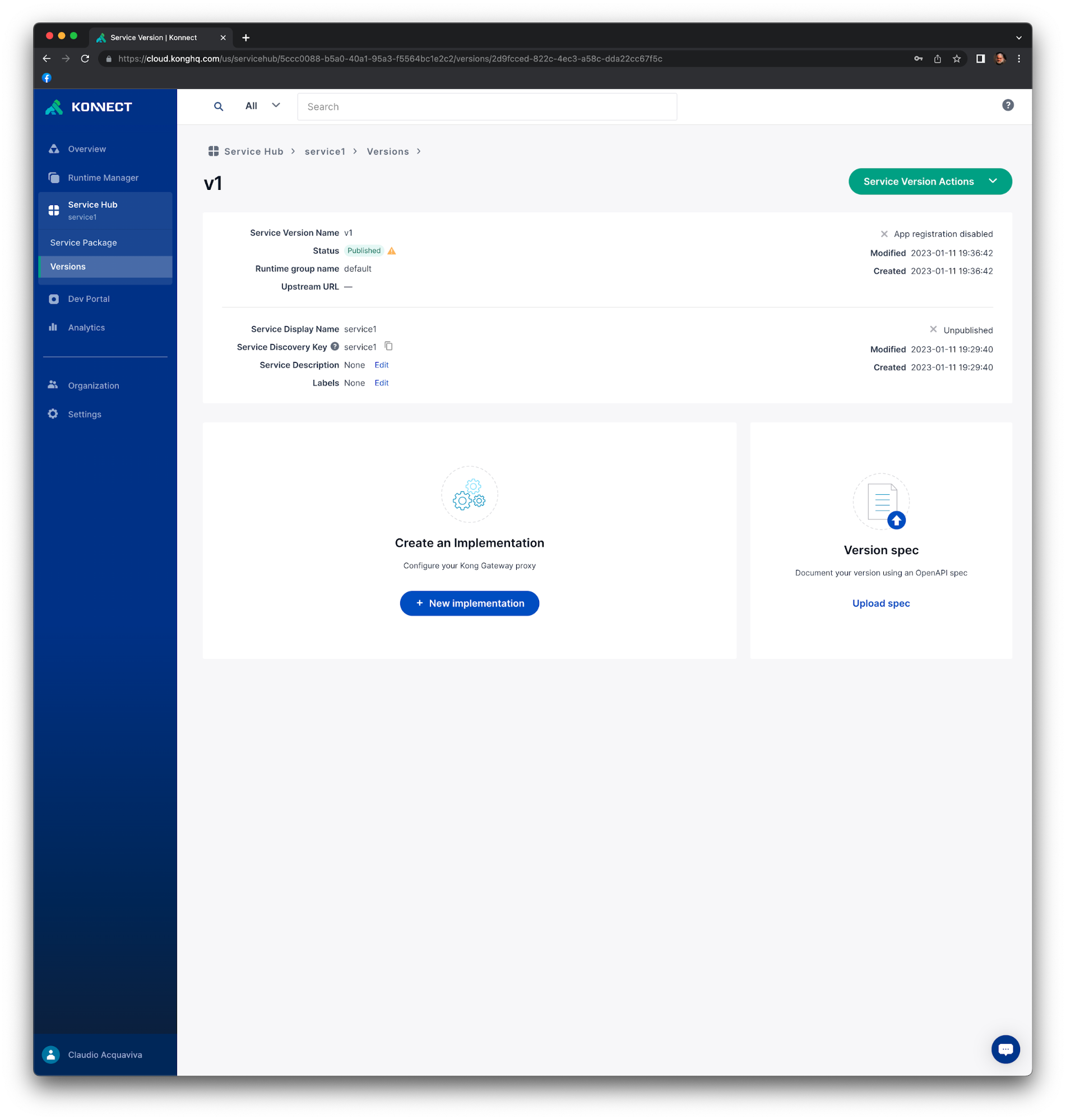

Now that you have a service set up, you can start filling out details about your API.

Click on Service Actions > New Version.

For Version Name type v1.

Select a Runtime Group. In our case, we have the default Runtime Group only. Click Create. You should see the v1 version of your service.

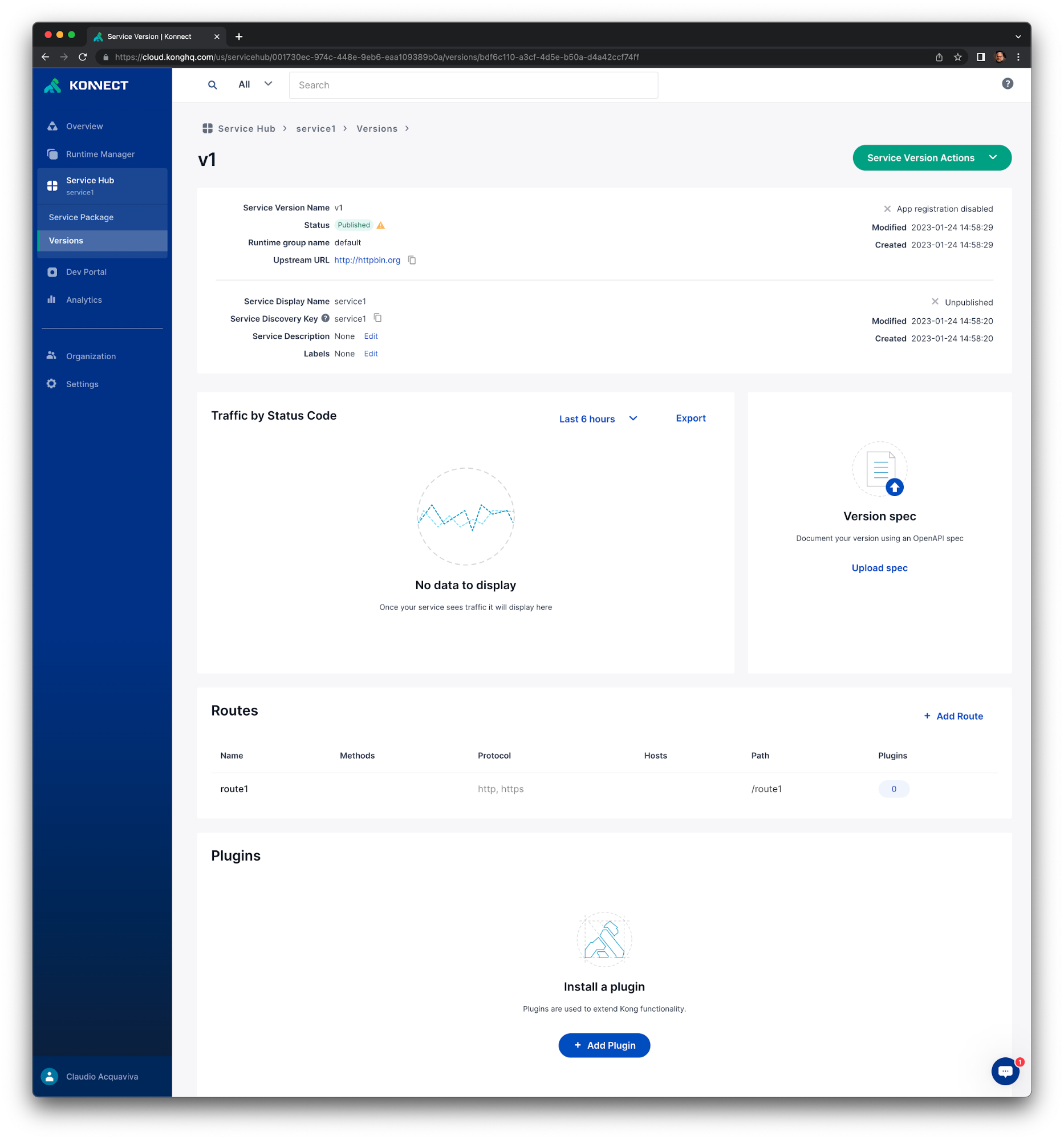

Create a Service Implementation and a Kong Route

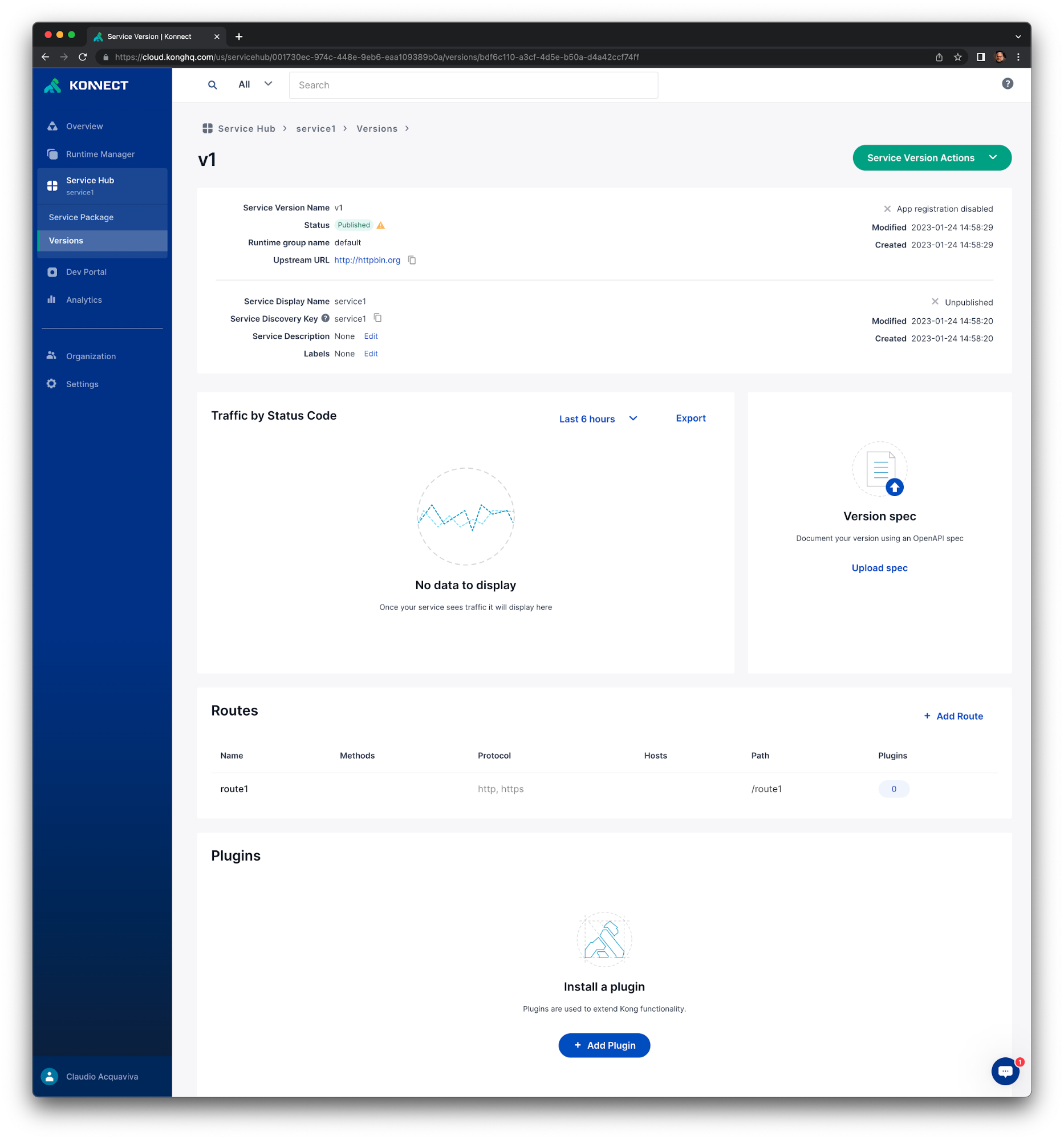

When you create a Service Implementation, you also specify the route to it. This route, combined with the proxy URL for the service, will lead to the endpoint specified in the service implementation.

Click New Implementation.

In step 1 of the Create an Implementation dialog, create a new service implementation to associate with your service version.

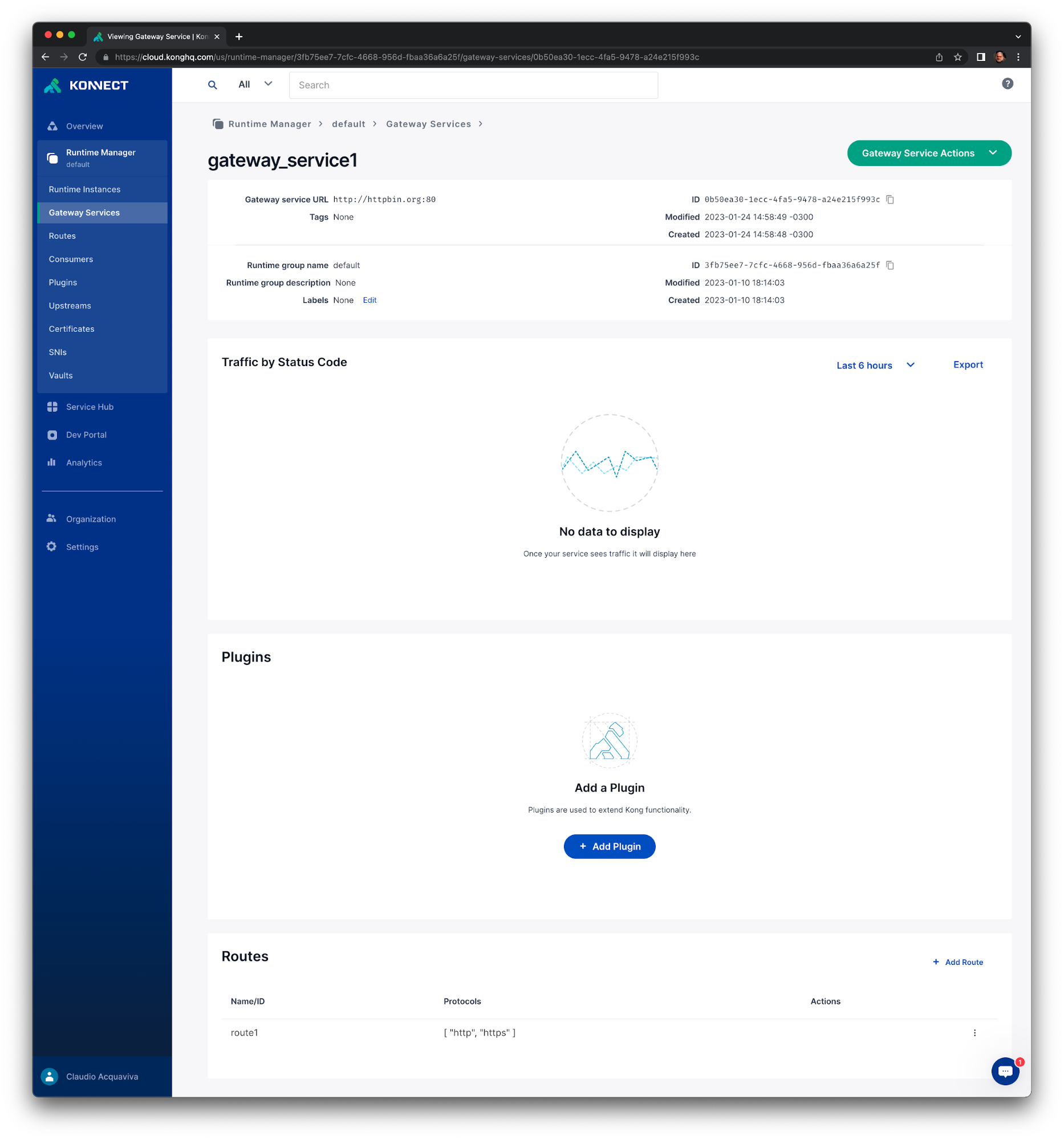

Enter a unique name for the Gateway service. For the purpose of this example, enter gateway_service1.

In the URL field, enter http://httpbin.org. Click Next.

In step 2, add a route to your service implementation. Enter the following settings:

Name: route1

Path(s): /route1

Click Create. You should see the new service implementation with its route. Notice that the Upstream URL refers to the Gateway Service you used, http://httpbin.org.

If you want to view the configuration, edit or delete the implementation, or delete the version, click the Version actions menu.

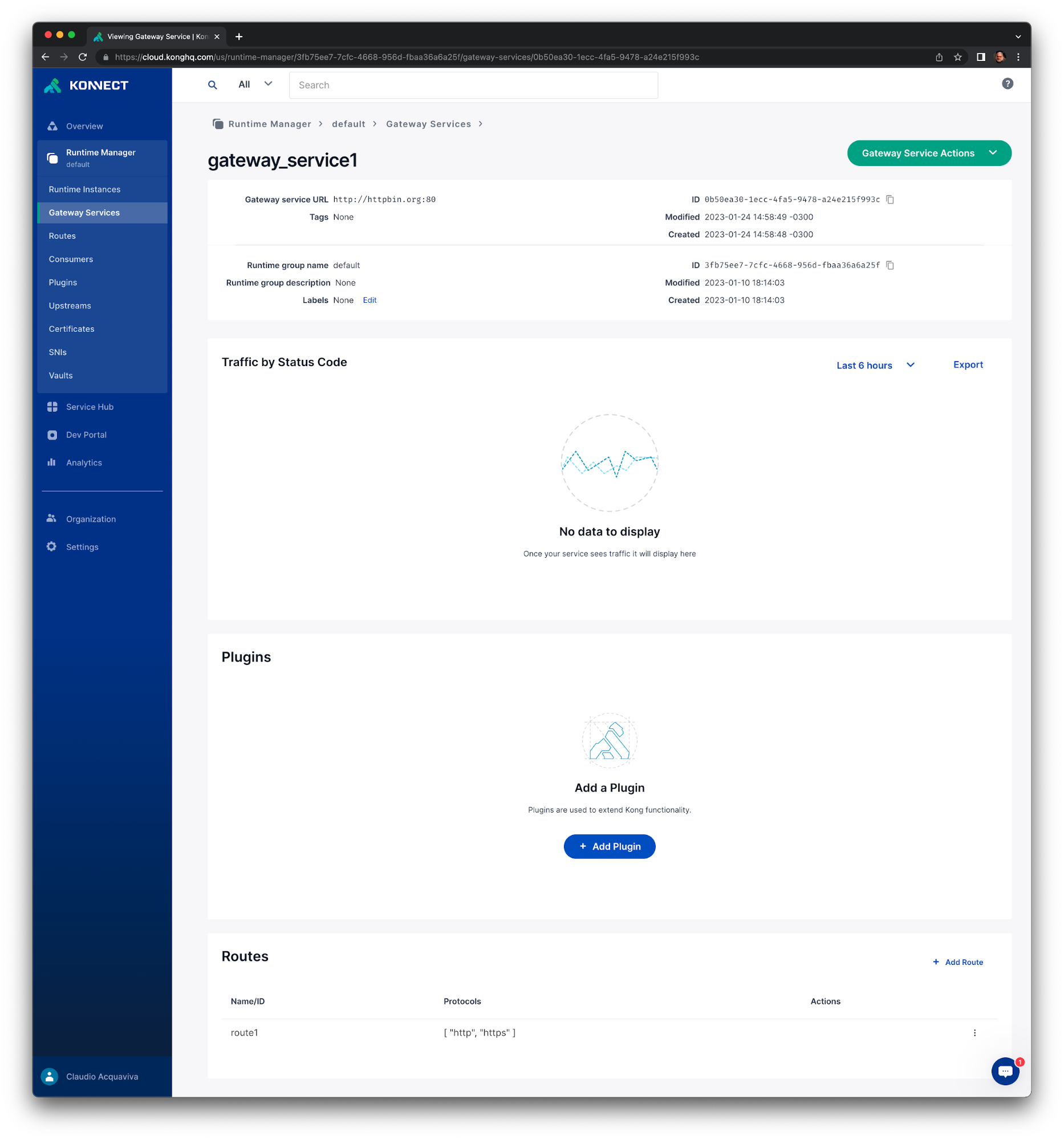

Every time you create a service implementation, Konnect creates or connects to a parallel Kong Gateway Service to proxy requests based on your configuration. Let’s check out the service you just created.

From the main menu, open Runtime Manager and click on the default Runtime Group.

Open Gateway Services from the sub-menu, then click on the gateway_service1 we created before.

You can manage your Gateway Service from here, or from the Service Hub, through the service implementation. All changes will be reflected in both locations.

Use the same ECS Container Instance Public IP to send another request to the Data Plane:

# http 34.214.132.0:8000/route1/get

HTTP/1.1 200 OK

Access-Control-Allow-Credentials: true

Access-Control-Allow-Origin: *

Connection: keep-alive

Content-Length: 430

Content-Type: application/json

Date: Fri, 03 Mar 2023 21:09:56 GMT

Server: gunicorn/19.9.0

Via: kong/3.2.1.0-enterprise-edition

X-Kong-Proxy-Latency: 177

X-Kong-Upstream-Latency: 130

{

"args": {},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Host": "httpbin.org",

"User-Agent": "HTTPie/2.6.0",

"X-Amzn-Trace-Id": "Root=1-64026224-468bd94b1b4396f911942682",

"X-Forwarded-Host": "34.214.132.0",

"X-Forwarded-Path": "/route1/get",

"X-Forwarded-Prefix": "/route1"

},

"origin": "52.41.185.161, 34.214.132.0",

"url": "http://34.214.132.0/get"

}

Applying a Plugin

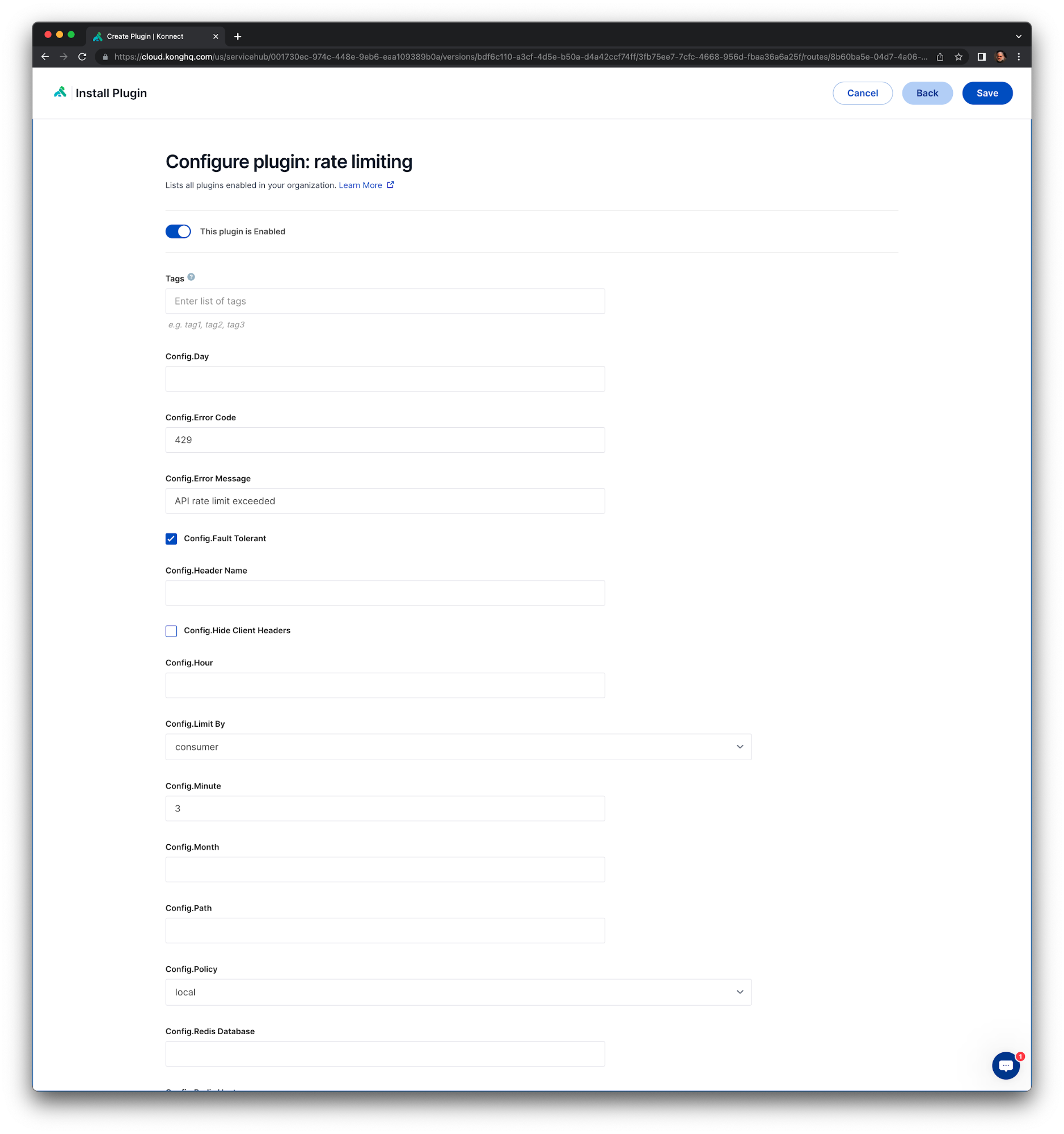

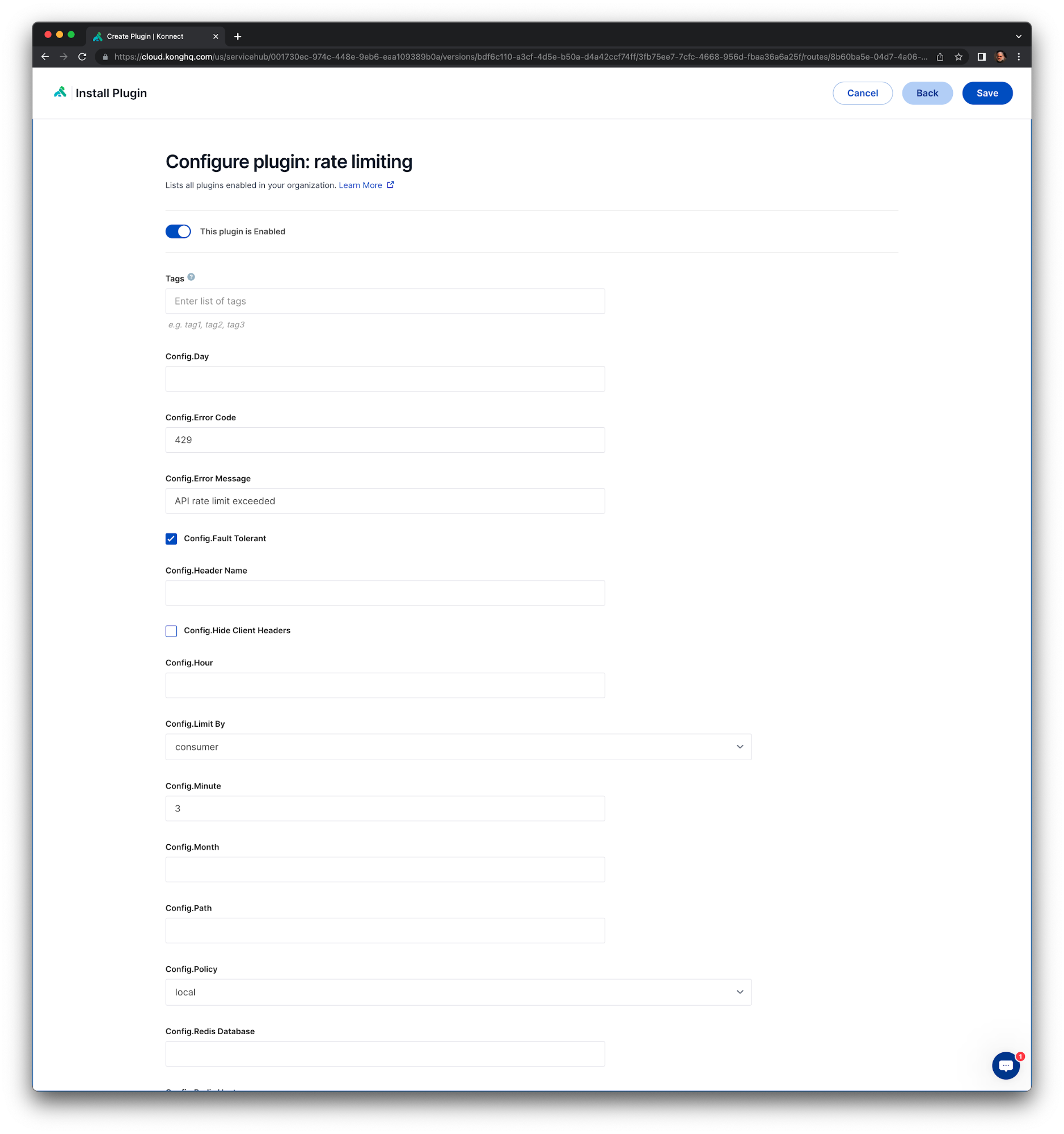

Now we are going to define a Rate Limiting policy for our Service by enabling the Rate Limiting plugin on the Kong Route.

Go back to Service Hub again and choose the same v1 version of the service1. Click the route1 Route. Under the Plugins tab click +Add Plugin. The Rate Limiting plugin is inside the Traffic control plugin section.

In the Configure plugin: rate limiting page, set Config.Minute to 3, which means the Route can be consumed at most 3 times per minute.

Click Save.

If you consume the service again, you'll see new headers describing the status of current rate limiting policy:

# http 34.214.132.0:8000/route1/get

HTTP/1.1 200 OK

Access-Control-Allow-Credentials: true

Access-Control-Allow-Origin: *

Connection: keep-alive

Content-Length: 430

Content-Type: application/json

Date: Fri, 03 Mar 2023 21:22:01 GMT

RateLimit-Limit: 3

RateLimit-Remaining: 2

RateLimit-Reset: 60

Server: gunicorn/19.9.0

Via: kong/3.2.1.0-enterprise-edition

X-Kong-Proxy-Latency: 54

X-Kong-Upstream-Latency: 124

X-RateLimit-Limit-Minute: 3

X-RateLimit-Remaining-Minute: 2

{

"args": {},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Host": "httpbin.org",

"User-Agent": "HTTPie/2.6.0",

"X-Amzn-Trace-Id": "Root=1-640264f9-3fde5d2606b14fff17f778ec",

"X-Forwarded-Host": "34.214.132.0",

"X-Forwarded-Path": "/route1/get",

"X-Forwarded-Prefix": "/route1"

},

"origin": "52.41.185.161, 34.214.132.0",

"url": "http://34.214.132.0/get"

}

If you keep sending requests to the Runtime Instance, you will eventually get a 429 status code, meaning you have exceeded the rate limit for this Route.

# http 34.214.132.0:8000/route1/get

HTTP/1.1 429 Too Many Requests

Connection: keep-alive

Content-Length: 41

Content-Type: application/json; charset=utf-8

Date: Fri, 03 Mar 2023 21:22:07 GMT

RateLimit-Limit: 3

RateLimit-Remaining: 0

RateLimit-Reset: 53

Retry-After: 53

Server: kong/3.2.1.0-enterprise-edition

X-Kong-Response-Latency: 1

X-RateLimit-Limit-Minute: 3

X-RateLimit-Remaining-Minute: 0

{

"message": "API rate limit exceeded"

}
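To watch the limit kick in, you can send a short burst of requests and print each status code. This is a sketch using curl (our substitution for HTTPie); request_burst is a hypothetical helper. With the limit of 3 per minute configured above, the later requests in a burst of 5 should come back as 429, though the exact split depends on where the requests fall within the rate-limiting window.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send N requests to a URL and print one status code per line.
# Arguments: URL, number of requests (default: 5).
request_burst() {
  local url="$1" count="${2:-5}" i=1
  while [ "$i" -le "$count" ]; do
    # -s: silent, -o /dev/null: discard the body, -w: print the status code.
    curl -s -o /dev/null -w '%{http_code}\n' "$url"
    i=$((i + 1))
  done
}
```

For example, request_burst http://34.214.132.0:8000/route1/get 5 sends five requests to the Route created earlier.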

Conclusion

The Konnect "Architecture Freedom" principle provides a flexible environment to deploy multiple Data Planes running on several platforms available today. Konnect fully supports ECS, one of the main services provided by AWS to run enterprise class applications and workloads.

This blog post described how to deploy Kong Konnect Data Plane using AWS and ECS CLIs. Kong and AWS also provide Cloud Development Kit (CDK) Constructs to deploy Kong Data Planes on multiple AWS platforms including not just ECS but EKS (Elastic Kubernetes Service) Clusters as well.

The AWS CDK brings a higher level of abstraction to Infrastructure as Code (IaC). Its main benefit is the ability to define deployment logic in general-purpose programming languages.

You can learn more about the products showcased in this blog through the official documentation: Amazon Elastic Container Service and Kong Konnect.

Feel free to experiment with API policies like caching with Amazon ElastiCache for Redis, log processing with Amazon OpenSearch Service, OIDC-based authentication with Amazon Cognito, canary releases, GraphQL integration, and more with the extensive list of plugins provided by Kong Konnect.