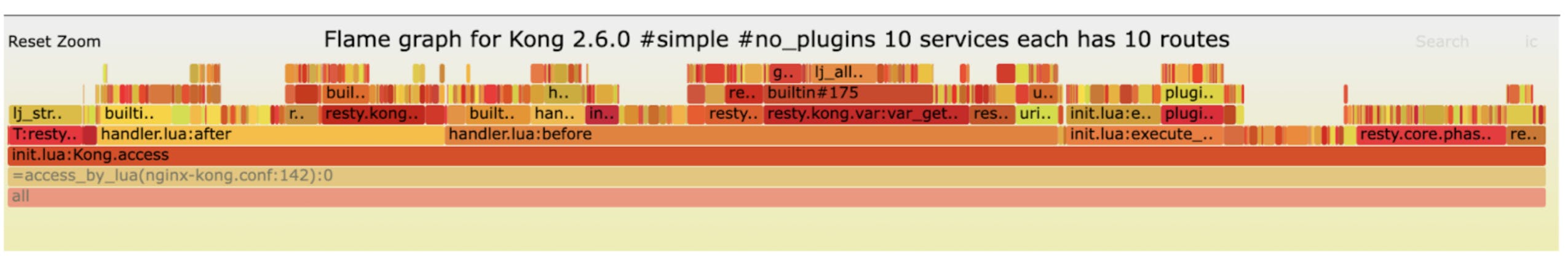

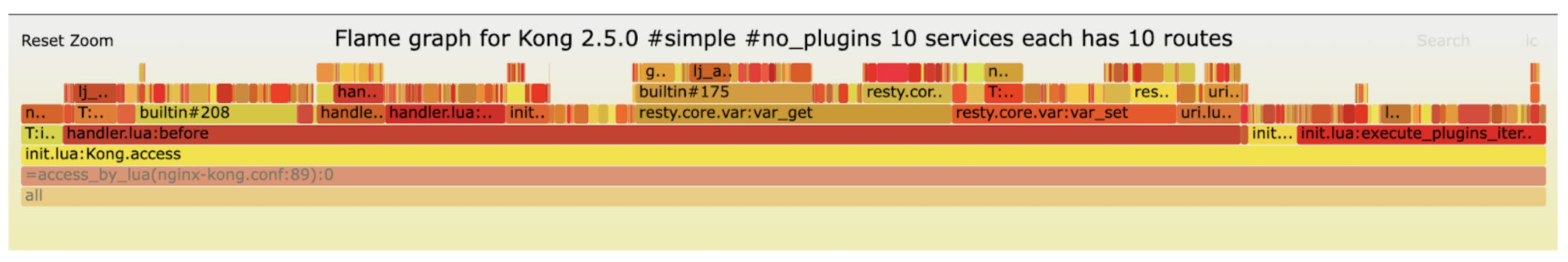

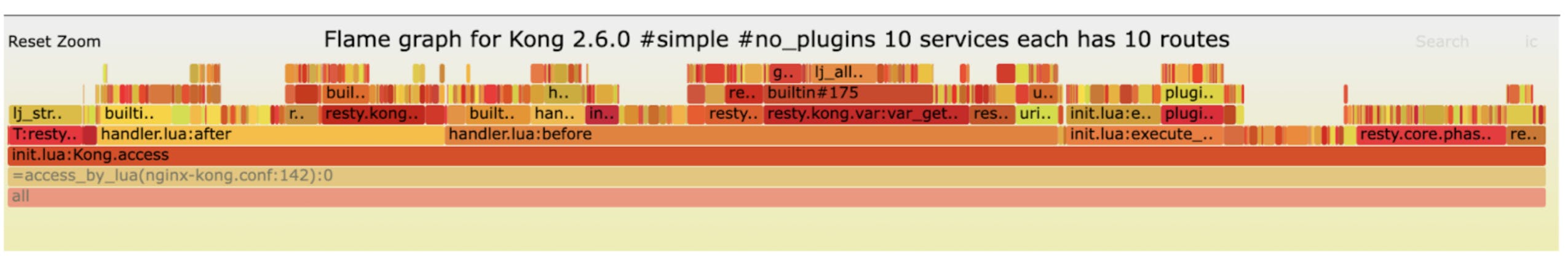

At Kong, we run performance tests in CI on every commit or pull request that may affect performance, as well as on each release. Thanks to our performance testing framework and its integration with GitHub Actions, we can easily collect basic metrics like RPS and latency, along with flame graphs that pinpoint the code paths dragging down performance.

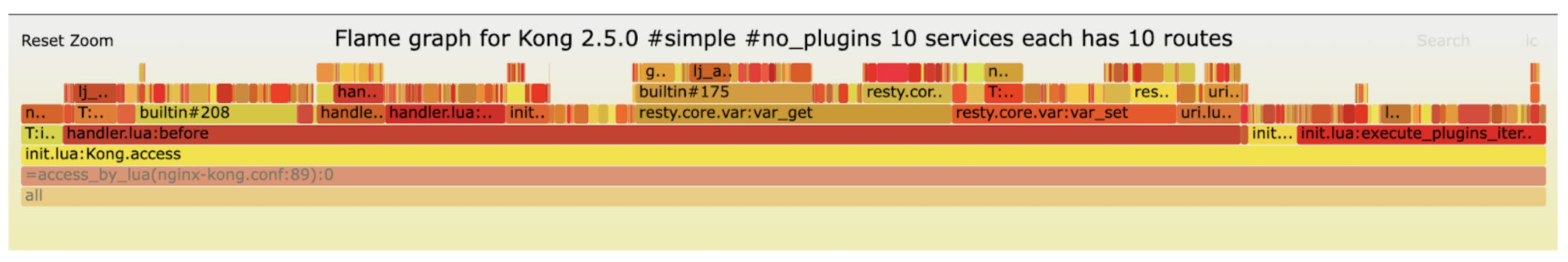

With that workflow in place, we found that Nginx variable access is one of the most significant costs in Kong's hot path.

The flame graph above is generated by sampling call stacks while the test runs. The wider a bar appears, the more CPU time is spent in that function. In the flame graph above, resty.core.var:var_get and resty.core.var:var_set, the APIs that access Nginx variables, together account for 21% of the samples in Kong's access phase.

Nginx Variable

An Nginx variable starts with a "$" in the Nginx configuration, and variables are used in almost every Nginx module. We usually rely on Nginx variables to read the current request's state (like $host for the current host) or to set some state for the current request (like $args to rewrite the query string). Nginx variables are also how modules interact with each other: they let OpenResty read a module's state (by reading a variable) or change its behavior (by writing one).

If you are already familiar with Kong, you may have noticed that variables are used in many places, especially when Kong routes requests. Our team spotted Nginx variable access as a potential bottleneck because it took up a large share of the flame graph.

To solve the bottleneck, let's first look at how Nginx variables are accessed in OpenResty.

Nginx Variable in OpenResty

To access variables in OpenResty, we use the ngx.var API, which supports both getting and setting variables. The Lua side of ngx.var, implemented in lua-resty-core, uses a Lua metatable to turn ordinary Lua table reads and writes into variable get and set operations.

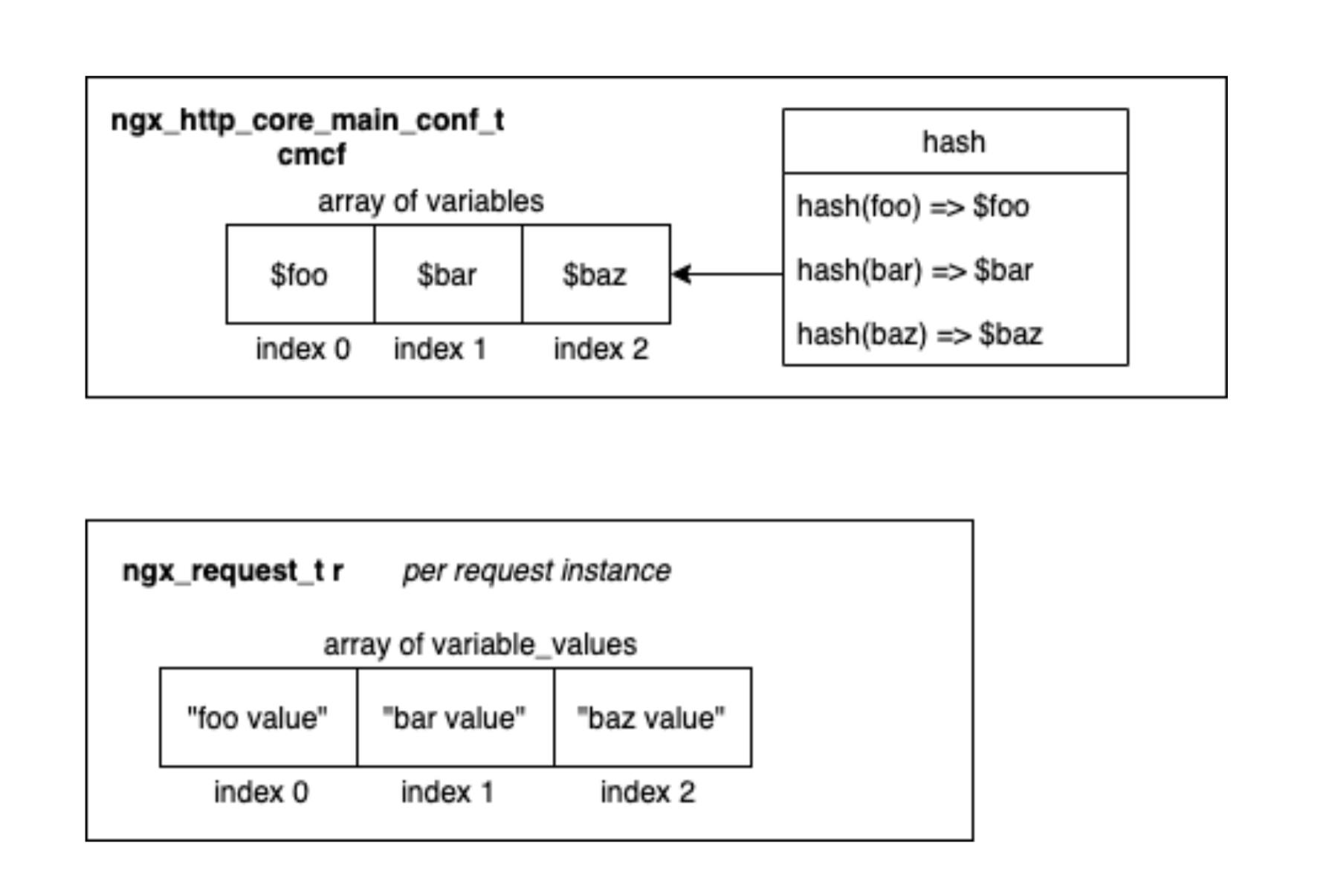

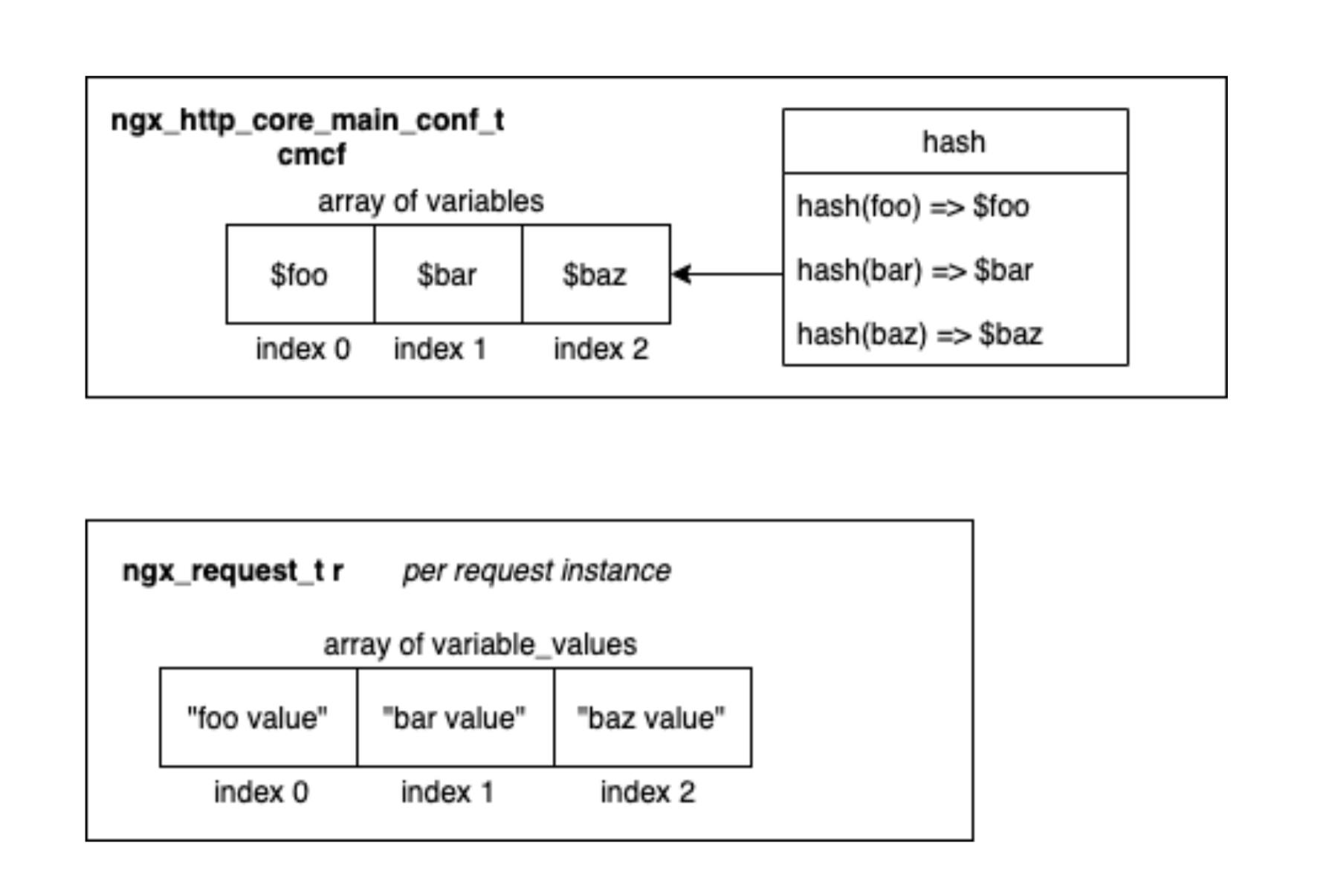

The core logic uses ngx_hash_strlow, which calculates the hash of the accessed variable's name and stores the lower-case form of name_data in a buffer. Then, with that hash, ngx_http_get_variable performs a hash table lookup and returns the variable's value for the current request. Note that the variables themselves are declared when Nginx parses its configuration, but their values only become available in the ngx_http_request_t instance at run time.

Let's pause the analysis here and look at how Nginx modules themselves access variables, using ngx_http_memcached_module as an example. The module expects a special variable, $memcached_key, to exist and uses it as the key for caching. Two parts of the module relate to this variable. The first is in the function ngx_http_memcached_pass.

This function is the handler for the memcached_pass directive, so it is called during the configuration phase, i.e., when Nginx loads its configuration. Note the call to ngx_http_get_variable_index, which takes the current configuration context cf and returns the variable's numeric index; that index is stored in the index member of the module's configuration, mlcf.

The second part runs while a request is being handled: there, ngx_http_get_indexed_variable fetches the variable's value by passing the index previously stored in mlcf.

What Are the Differences

Now we can see the difference between the two ways Nginx variables are accessed. If we search the source code of Nginx's official modules for ngx_http_get_variable versus ngx_http_get_indexed_variable, most modules use the latter; there are two exceptions, the perl module and the ssi module.

You may have already noticed that modules using ngx_http_get_indexed_variable always know at configuration time which variables they will access, whereas ngx_http_get_variable is used when the variable name only appears in a dynamically interpreted script. This is also why OpenResty uses the hash-based API to access variables: it's nearly impossible to know which variables will be used before actually running the Lua code.

Secondly, we introduce a new FFI function to let Lua code pull all known indexes and their corresponding names from Nginx's variable index array.

We use the returned indexes and names on the Lua side to build a Lua table that maps names to numeric indexes.

Finally, we introduce a new FFI function to get and set variables by index. For get, ngx_http_get_indexed_variable is sufficient to return the variable's value; for set, we use the index to find the variable and either invoke its set_handler or set the value directly. On the Lua side, we first look up the numeric index in the Lua table and fall back to the original unindexed path only if the index is not found.

These operations are a drop-in replacement for the current ngx.var API. They're all implemented in our lua-kong-nginx-module, so end users don't (and can't) manage or modify indexes when accessing variables; this matters because a wrong index number could lead to undefined behavior. To gain the performance benefit, users only need to add the lua_kong_load_var_index directive for the variables they want cached. As a result, existing code automatically takes advantage of indexed variable access through the existing, safe OpenResty Lua API.

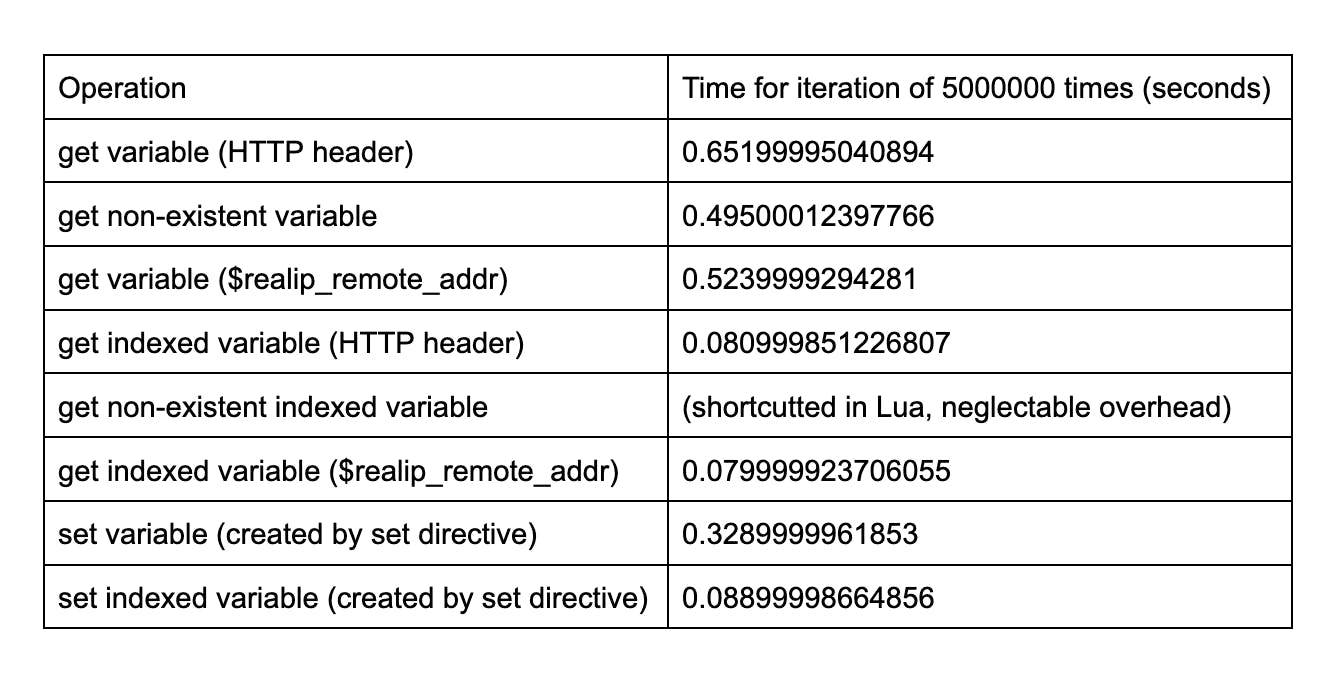

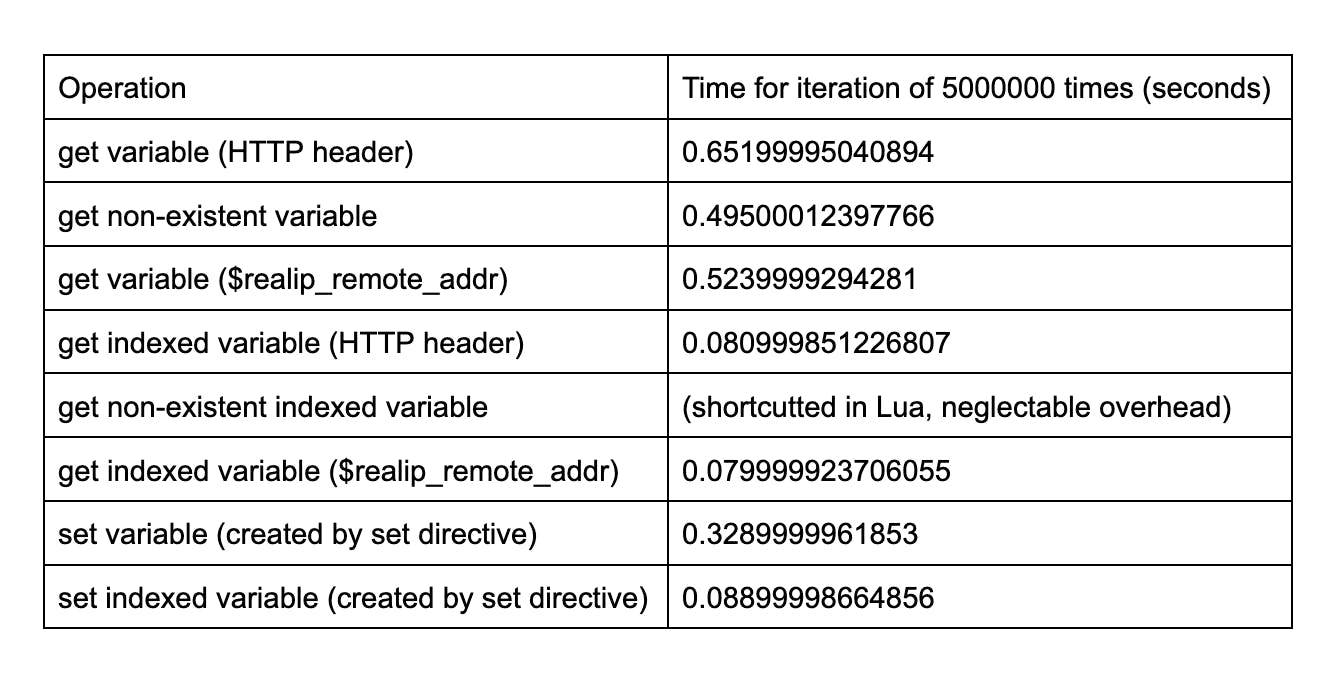

We ran a ballpark test of accessing variables with and without an index. It showed that our approach improved speed by 73% for set and 85% for get.

In our baseline Kong benchmark, we also observed a 12% increase in requests per second (RPS) and a 37% drop in latency.

In the flame graph with our improvements, variable access shrinks to less than 10% of the samples in the access phase.

Future Improvements

Performance optimization doesn't end here, and that includes our work on Nginx variables. One way to improve further is to add a function-style API, such as kong.var.get(key) and kong.var.set(key, value), that invokes the FFI functions directly without the need for metatable magic.
