By now, when we hear the words "service mesh" we typically know what to expect: service discovery, load balancing, traffic management and routing, security, observability, and resilience. So, why Kong Mesh? What does Kong Mesh offer that would be more difficult to obtain with other solutions? Why is Kong Mesh with Red Hat OpenShift a great pairing?

We're happy to announce that the Kong Mesh 2.2.0 UBI images (built on Red Hat's Universal Base Image) are now available in the Red Hat Ecosystem Catalog.

In this post, we'll review some Kong Mesh use cases and highlight the problems Kong Mesh can solve. Then, to help you start evaluating Kong Mesh on your own, we'll end with a quick tutorial on installing and experimenting with Kong Mesh on Red Hat OpenShift.

Why Kong Mesh

Kong Mesh democratizes the service mesh by making it accessible across heterogeneous environments at the same time: VMs as well as cloud-native platforms such as Red Hat OpenShift.

Quite often, the number one struggle large enterprises face is too much of a good thing: too many clouds, clusters, and data centers, all added to meet customer demand. The resulting network complexity, and the cognitive strain it puts on engineering organizations, can be tough to manage.

Kong Mesh natively provides the networking flexibility to bring these platforms together so they behave as a single service mesh under one centralized control plane.

Not only do you get a cohesive hybrid cloud service mesh, but all the cloud-native capabilities you were waiting to use when you moved to a cloud platform (e.g., observability, traffic management, and zero-trust security) are equally available to your legacy workloads.

It's also possible to support multiple business units in isolation. Kong Mesh supports multiple meshes (multi-tenancy), so isolating business units that span a hybrid cloud, and managing them all from the same Kong Mesh control plane, isn't a problem.
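To make multi-tenancy concrete, here is a minimal Python sketch that generates one `Mesh` resource per business unit, each with its own builtin certificate authority for mTLS. The business-unit names are hypothetical. The `Mesh` fields follow the upstream Kuma `Mesh` resource (`kuma.io/v1alpha1`) that Kong Mesh builds on, so check them against the Kong Mesh version you run. The output is JSON because JSON is also valid YAML, which keeps the example free of third-party dependencies.

```python
import json


def mesh_manifest(name: str) -> dict:
    """Return a Mesh resource for one business unit, with builtin-CA mTLS enabled."""
    return {
        "apiVersion": "kuma.io/v1alpha1",
        "kind": "Mesh",
        "metadata": {"name": name},
        "spec": {
            "mtls": {
                "enabledBackend": "ca-1",
                "backends": [{"name": "ca-1", "type": "builtin"}],
            }
        },
    }


def to_yaml_stream(manifests: list) -> str:
    """Join manifests as '---'-separated JSON documents (JSON is valid YAML 1.2)."""
    return "\n".join("---\n" + json.dumps(m, indent=2) for m in manifests)


# Hypothetical business units, each isolated in its own mesh.
BUSINESS_UNITS = ["payments", "inventory"]
MESHES = [mesh_manifest(bu) for bu in BUSINESS_UNITS]

if __name__ == "__main__":
    # Pipe the output into `oc apply -f -` to create both meshes.
    print(to_yaml_stream(MESHES))
```

Because each business unit has its own mesh, each one gets separate mTLS identities and policies. All of them are still managed from the same control plane.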

Why Kong Mesh with Red Hat OpenShift

Red Hat OpenShift is the industry's leading hybrid cloud application platform, powered by Kubernetes, intended to run anywhere: on-prem or in the cloud. It provides the same experience irrespective of where that platform is running.

As your Red Hat OpenShift footprint expands, you need a mesh that can expand alongside your platform needs. Those needs may include cross-cluster communication or easy-to-orchestrate failover scenarios. Kong Mesh excels on all these fronts.

To illustrate the architectural freedom that Kong Mesh on Red Hat OpenShift offers, we'll step through several use cases that build on each other incrementally.

Kong Mesh on Red Hat OpenShift use cases

The use cases we'll cover, from simplest to most complex, are:

- Hybrid cloud on OpenShift and on-prem with multi-zone deployment

- Modernization and migration of workloads to the cloud

- Global HA resiliency

Multi-zone support offering true hybrid cloud and multi-cluster infrastructure

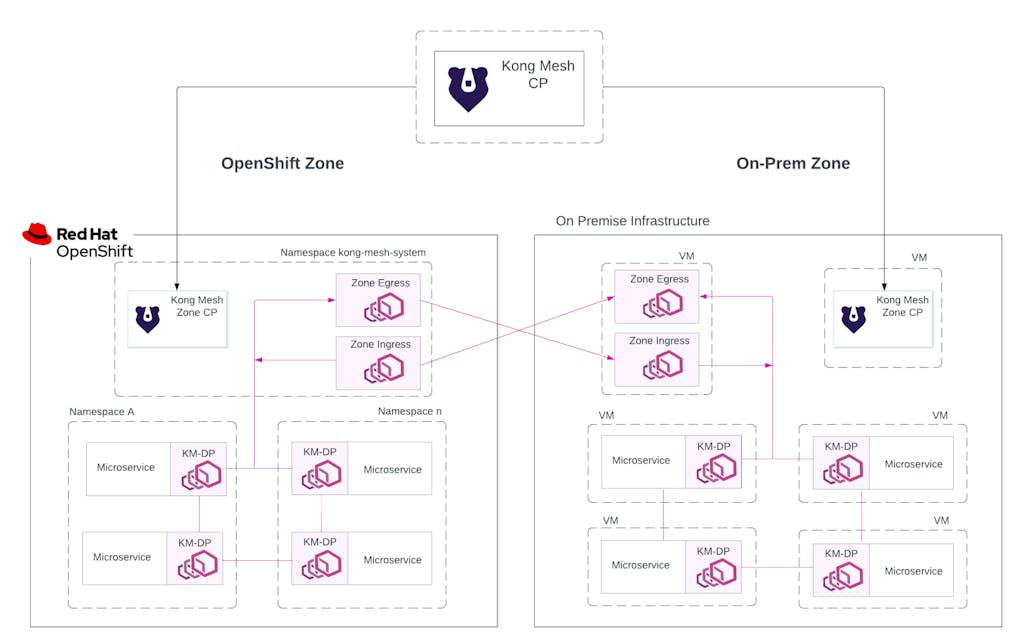

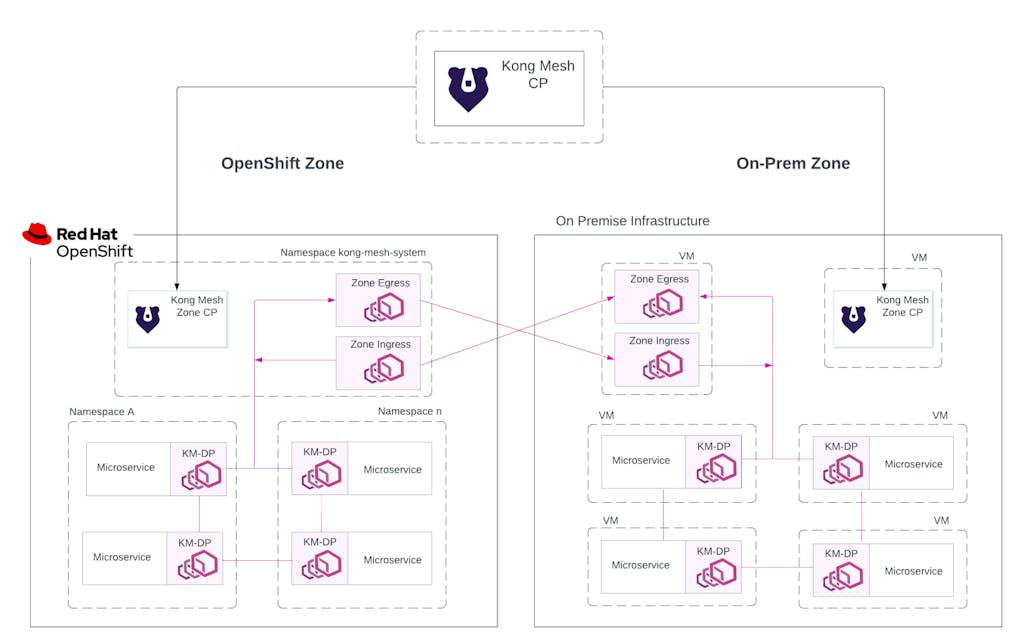

Kong Mesh's deployment strategy is built around the concept of zones. A zone is any platform supported by Kong Mesh. OpenShift clusters, clusters running on different clouds, and VM-based infrastructure (such as VMs running in an on-prem data center or a VPC) can each be represented as a zone in the service mesh.

Here's how it works. The Kong Mesh global control plane runs independently, either on a VM or on Red Hat OpenShift. On a VM, it requires a PostgreSQL datastore. On OpenShift, it uses etcd (through the Kubernetes API).

As stated above, any platform unit can be represented as a zone. In the example in Figure 1, a Red Hat OpenShift cluster on the left is one zone, and an on-prem data center on the right is another. Each zone runs its own zone control plane.

The global control plane pushes configuration down to the zone control planes. Each zone control plane, in turn, distributes that configuration to the data planes running in its zone.

Cross-zone communication is abstracted away: microservices on the mesh don't know when they are calling a microservice in another zone. Instead, the zone ingress and zone egress proxies manage and route requests between zones.

Figure 1 — Hybrid cloud example

In a nutshell, with Kong Mesh you can build a multi-tenant hybrid cloud service mesh that abstracts network connectivity away from your microservices and brings them all together.
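On the OpenShift side of Figure 1, a zone control plane is typically installed with Helm and pointed at the global control plane. The Python sketch below builds that `helm install` command. It assumes the Kong Mesh chart's convention of nesting upstream Kuma values under `kuma.*` and the default global KDS port 5685. The zone name and global address are hypothetical. The on-prem zone would run its control plane in Universal mode instead, which isn't shown here. Verify the keys against the multi-zone documentation for your version.

```python
import shlex


def zone_install_args(zone: str, global_kds_host: str,
                      namespace: str = "kong-mesh-system") -> list:
    """Helm arguments for a zone control plane that connects to the global CP over KDS."""
    settings = {
        "kuma.controlPlane.mode": "zone",
        "kuma.controlPlane.zone": zone,
        # 5685 is the default KDS port exposed by the global control plane.
        "kuma.controlPlane.kdsGlobalAddress": f"grpcs://{global_kds_host}:5685",
        # Zone ingress and egress proxy the traffic that crosses zone boundaries.
        "kuma.ingress.enabled": "true",
        "kuma.egress.enabled": "true",
    }
    args = ["helm", "install", "kong-mesh", "kong-mesh/kong-mesh",
            "--namespace", namespace, "--create-namespace"]
    for key, value in settings.items():
        args += ["--set", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Hypothetical zone name and global KDS host.
    print(shlex.join(zone_install_args("openshift-east", "global-cp.example.com")))
```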

Modernize and migrate workloads to the cloud

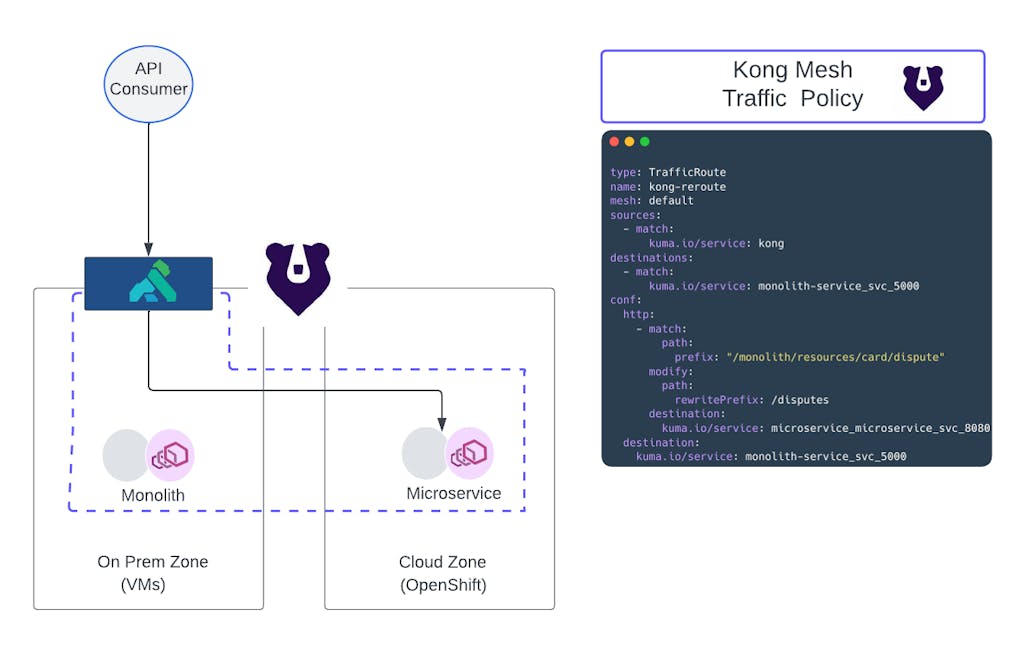

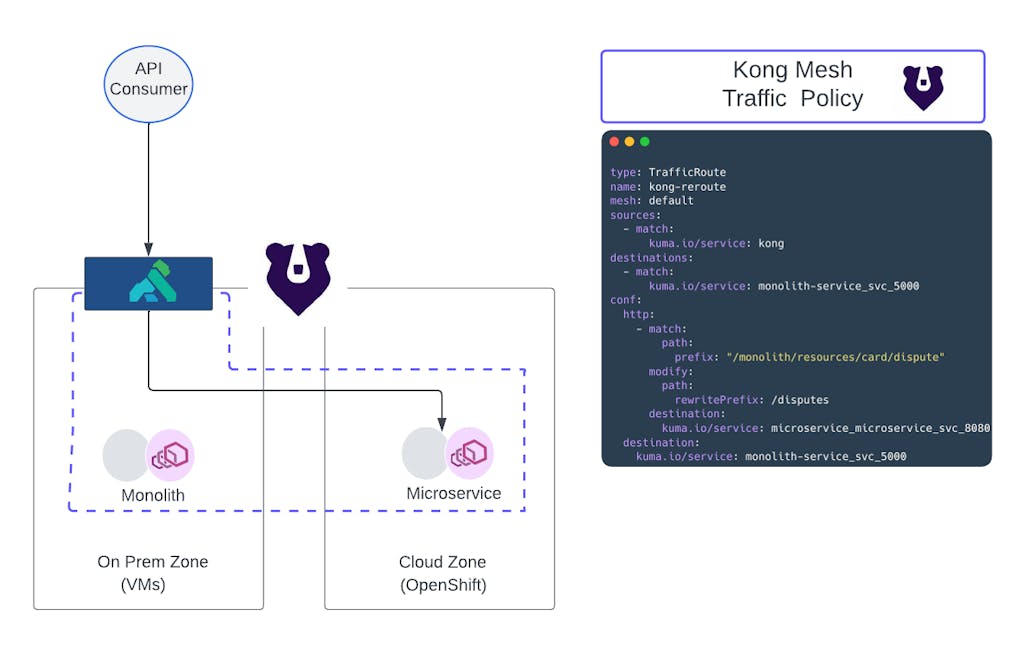

If you have on-prem workloads and want to move them to Red Hat OpenShift, we have a strategy for you. In many modernization and migration stories, the main problem is managing network connectivity as workloads shift from on-prem to cloud infrastructure such as Red Hat OpenShift.

Kong Mesh's traffic management capabilities, combined with its hybrid cloud and multi-zone support, make it possible to build a migration strategy that gradually deprecates on-prem workloads with minimal risk.

Figure 2 — Cloud migration journey

At Kong, we call this the Crawl/Walk/Run Cloud Migration Strategy. It is a three-phase approach that shows how to incrementally introduce Kong Mesh and Kong Konnect Gateway into your infrastructure. It also shows how to build a flywheel that deprecates old services in favor of new ones.

For a deep dive into this solution, see Kong's write-up on the Crawl/Walk/Run Cloud Migration Strategy.
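The core mechanic behind a gradual migration is a weighted traffic split. It moves a growing share of requests from the on-prem copy of a service to its OpenShift copy. The sketch below is a conceptual Python model of that idea, not Kong Mesh configuration. The phase count and the `on-prem`/`openshift` labels are hypothetical. In Kong Mesh itself, the weights would be expressed in a traffic-routing policy, and the applicable policy resource depends on the version you run.

```python
import random


def phase_weights(phase: int, total_phases: int) -> dict:
    """Percent of requests routed to each copy of a service at a given migration phase."""
    if total_phases < 1 or not 0 <= phase <= total_phases:
        raise ValueError("phase must be between 0 and total_phases")
    to_cloud = round(100 * phase / total_phases)
    return {"on-prem": 100 - to_cloud, "openshift": to_cloud}


def pick_backend(weights: dict, rng: random.Random) -> str:
    """Choose a destination for one request, proportionally to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the simulation is repeatable
    for phase in range(4):
        weights = phase_weights(phase, 3)
        sample = [pick_backend(weights, rng) for _ in range(1000)]
        print(phase, weights, "openshift share:", sample.count("openshift") / 1000)
```

Each phase changes only two numbers, so rolling back means returning to the previous phase's weights.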

Global high availability for easy cloud region-to-region failover

When Red Hat OpenShift runs on a cloud provider's infrastructure (for example, ROSA, Red Hat OpenShift Service on AWS, or ARO, Azure Red Hat OpenShift), the cluster can span multiple availability zones for high availability. The cluster itself, however, is confined to a single AWS or Azure region. If that entire region experienced downtime, the global control plane would eventually degrade.

Kong Mesh 2.2.0 adds built-in support for 'Universal on K8s' mode. In this mode, the global control plane runs on Red Hat OpenShift, but its backend storage is a PostgreSQL database rather than etcd. This setup makes it easy to fail over from one region to another.

In the example below, because the global configuration is pushed to the PostgreSQL datastore, an entire region can go down and you will still be fully operational in the next region.

Figure 3 — Global HA with Universal on K8s mode
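The failover argument rests on one property: global control plane instances in different regions share the same PostgreSQL-backed state, so whichever instance survives sees the full configuration. The toy Python model below illustrates only that property. It is not Kong Mesh code, and the region names are hypothetical. It also assumes, as Figure 3 implies, that the database itself stays reachable from the surviving region, for example through cross-region replication.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStore:
    """Stand-in for the PostgreSQL database holding the global mesh configuration."""
    resources: dict = field(default_factory=dict)


@dataclass
class GlobalControlPlane:
    region: str
    store: SharedStore
    healthy: bool = True

    def config(self) -> dict:
        if not self.healthy:
            raise RuntimeError(f"global control plane in {self.region} is down")
        # In this model nothing is cached locally: every instance reads the same store.
        return dict(self.store.resources)


def active_control_plane(cps: list) -> GlobalControlPlane:
    """Return the first healthy instance, as a global load balancer might."""
    for cp in cps:
        if cp.healthy:
            return cp
    raise RuntimeError("no healthy global control plane in any region")


if __name__ == "__main__":
    store = SharedStore({"mesh/default": {"mtls": "builtin"}})
    east = GlobalControlPlane("us-east-1", store)
    west = GlobalControlPlane("us-west-2", store)
    east.healthy = False  # simulate a full regional outage
    survivor = active_control_plane([east, west])
    print(survivor.region, survivor.config())
```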

Tutorial time!

So how can you get started with Kong Mesh?

Let's walk through installing Kong Mesh in standalone mode on a Red Hat OpenShift Service on AWS (ROSA) 4.12 cluster. During this tutorial, we'll demonstrate:

- How to set up the values file for the Helm chart

- How to reference the UBI-certified images

- How to complete the prerequisite steps before running the Helm chart

- How to deploy the Kong Ingress Controller (KIC) and join it to the mesh

- How to deploy a sample application, bookinfo, on the mesh and validate that it's all working

Here, we'll do the abridged version. For more details, clone the accompanying GitHub repo.

If you prefer video, the walkthrough is also available on YouTube.

Prerequisites

Let's start off with the prerequisites for the cluster first.

Create the namespace:
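This is a minimal sketch that assumes the namespace is named `kong-mesh-system`, a common default for Kong Mesh, and that you drive the steps from Python. It only builds and prints the `oc` command. Uncomment the `subprocess.run` line to execute it against a logged-in cluster.

```python
import shlex
import subprocess

NAMESPACE = "kong-mesh-system"  # assumed name; it must match what the later steps use


def create_namespace_cmd(namespace: str = NAMESPACE) -> list:
    # `oc new-project` creates the namespace as an OpenShift project.
    return ["oc", "new-project", namespace]


if __name__ == "__main__":
    print(shlex.join(create_namespace_cmd()))
    # subprocess.run(create_namespace_cmd(), check=True)
```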

Create the image pull secret so we can pull down the certified UBI images:
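Here is a sketch of the pull-secret step. It assumes the certified images are pulled from Red Hat's partner registry, `registry.connect.redhat.com`, with your Red Hat credentials. The secret name and the environment variable names are hypothetical. The password is masked before the command is printed so that it doesn't end up in terminal output or logs.

```python
import os
import shlex


def pull_secret_cmd(name: str, username: str, password: str,
                    namespace: str = "kong-mesh-system",
                    server: str = "registry.connect.redhat.com") -> list:
    """Build `oc create secret docker-registry` for the registry hosting the certified images."""
    return [
        "oc", "create", "secret", "docker-registry", name,
        f"--docker-server={server}",
        f"--docker-username={username}",
        f"--docker-password={password}",
        "-n", namespace,
    ]


def masked(cmd: list) -> list:
    """Hide the password before printing or logging the command."""
    return ["--docker-password=***" if arg.startswith("--docker-password=") else arg
            for arg in cmd]


if __name__ == "__main__":
    cmd = pull_secret_cmd(
        "rh-registry-pull-secret",                             # hypothetical secret name
        os.environ.get("RH_REGISTRY_USER", "<user>"),          # hypothetical env var
        os.environ.get("RH_REGISTRY_PASSWORD", "<password>"),  # hypothetical env var
    )
    print(shlex.join(masked(cmd)))
    # To run it: subprocess.run(cmd, check=True)
```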

Grant the nonroot-v2 security context constraint (SCC) to the service accounts that run the chart's jobs:
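OpenShift grants security context constraints to service accounts with `oc adm policy add-scc-to-user`, where `-z` names a service account in the given namespace. The service-account names below are hypothetical placeholders. Substitute the names that the Kong Mesh chart's jobs actually run as in your version.

```python
import shlex

# Hypothetical service-account names; replace with the chart's real job accounts.
JOB_SERVICE_ACCOUNTS = ["example-install-job-sa", "example-pre-delete-job-sa"]


def grant_scc_cmds(scc: str, service_accounts: list,
                   namespace: str = "kong-mesh-system") -> list:
    """One `oc adm policy add-scc-to-user` call per service account."""
    return [["oc", "adm", "policy", "add-scc-to-user", scc, "-z", sa, "-n", namespace]
            for sa in service_accounts]


if __name__ == "__main__":
    for cmd in grant_scc_cmds("nonroot-v2", JOB_SERVICE_ACCOUNTS):
        print(shlex.join(cmd))
```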

Understanding the Helm Values File Setup

Grab the latest Helm chart:
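Assuming the public Kong Mesh chart repository at `https://kong.github.io/kong-mesh-charts`, fetching the chart comes down to the commands below, listed as Python argument lists. The last command is optional and only shows which chart versions are available.

```python
import shlex

HELM_REPO_CMDS = [
    ["helm", "repo", "add", "kong-mesh", "https://kong.github.io/kong-mesh-charts"],
    ["helm", "repo", "update"],
    # Optional: list available chart versions so you know what you're installing.
    ["helm", "search", "repo", "kong-mesh/kong-mesh", "--versions"],
]

if __name__ == "__main__":
    for cmd in HELM_REPO_CMDS:
        print(shlex.join(cmd))
```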

And we're almost ready to install. But before we do, let's review the values.yaml file.

First, the values file defines the image registry, image version, and pull secret.

Second, for the control plane, I wanted to highlight:

- environment – set to kubernetes

- mode – standalone, meaning an all-in-one install with no zones, which is all we need for a proof of concept

- extra environment variable – on Red Hat OpenShift, we also have to set KUMA_RUNTIME_KUBERNETES_INJECTOR_CONTAINER_PATCHES
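The values-file settings can be sketched in Python. This example builds the structure and prints it as JSON, which is also valid YAML and can be saved as `values.yaml`. It assumes Kong Mesh's convention of nesting the upstream Kuma chart values under a `kuma` key. The registry path, tag, pull-secret name, and ContainerPatch name are hypothetical placeholders. Check the exact keys, especially the pull-secret key, against `helm show values kong-mesh/kong-mesh` for your chart version.

```python
import json

UBI_REGISTRY = "<certified-image-registry>"      # hypothetical placeholder
IMAGE_TAG = "2.2.0"                              # assumed tag for the Kong Mesh 2.2.0 UBI images
PULL_SECRET = "rh-registry-pull-secret"          # hypothetical; must match the secret you created
CONTAINER_PATCHES = ["openshift-sidecar-patch"]  # hypothetical ContainerPatch name(s)


def values() -> dict:
    """Values for a standalone Kong Mesh install on OpenShift (Kuma values nested under `kuma`)."""
    return {
        "kuma": {
            "global": {
                "image": {"registry": UBI_REGISTRY, "tag": IMAGE_TAG},
                "imagePullSecrets": [PULL_SECRET],
            },
            "controlPlane": {
                "environment": "kubernetes",
                "mode": "standalone",  # all-in-one install, no zones
                "envVars": {
                    # Comma-separated ContainerPatch names applied to every injected sidecar.
                    "KUMA_RUNTIME_KUBERNETES_INJECTOR_CONTAINER_PATCHES": ",".join(CONTAINER_PATCHES),
                },
            },
        }
    }


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be saved directly as values.yaml.
    print(json.dumps(values(), indent=2))
```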

What is the injector container patch?

On Red Hat OpenShift, the sidecars require some Linux capabilities in order for their processes to start. With Kong Mesh, we can use the ContainerPatch CRD to define any sidecar customizations needed (securityContext, resource limits, etc.). To apply a container patch to all sidecars by default, list it in the KUMA_RUNTIME_KUBERNETES_INJECTOR_CONTAINER_PATCHES environment variable.
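Here is a sketch of a ContainerPatch. It assumes the `kuma.io/v1alpha1` ContainerPatch resource, whose `sidecarPatch` holds JSON Patch operations with the `value` field encoded as a JSON string. The patch name and the capability list are illustrative only, not the exact set the sidecars need on your cluster. The name must match the one listed in KUMA_RUNTIME_KUBERNETES_INJECTOR_CONTAINER_PATCHES, and the resource goes in the control plane's namespace.

```python
import json

CP_NAMESPACE = "kong-mesh-system"  # ContainerPatch objects live in the control plane's namespace


def container_patch(name: str, capabilities: list) -> dict:
    """ContainerPatch whose sidecarPatch adds Linux capabilities via a JSON Patch operation."""
    return {
        "apiVersion": "kuma.io/v1alpha1",
        "kind": "ContainerPatch",
        "metadata": {"name": name, "namespace": CP_NAMESPACE},
        "spec": {
            "sidecarPatch": [
                {
                    "op": "add",
                    "path": "/securityContext/capabilities",
                    # `value` is itself a JSON-encoded string.
                    "value": json.dumps({"add": capabilities}),
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical name and capability list, for illustration only.
    print(json.dumps(container_patch("openshift-sidecar-patch", ["NET_BIND_SERVICE"]), indent=2))
```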

To install, run the Helm chart with the values file:
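The install itself is a single `helm install` against the values file. The release name `kong-mesh` and the namespace are assumptions. The `--wait` flag makes Helm block until the release's resources are ready, or until its timeout expires.

```python
import shlex


def install_cmd(values_file: str = "values.yaml",
                namespace: str = "kong-mesh-system") -> list:
    """`helm install` for the Kong Mesh chart using the reviewed values file."""
    return [
        "helm", "install", "kong-mesh", "kong-mesh/kong-mesh",
        "--namespace", namespace,
        "--values", values_file,
        "--wait",  # wait for the release's resources to become ready
    ]


if __name__ == "__main__":
    print(shlex.join(install_cmd()))
```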

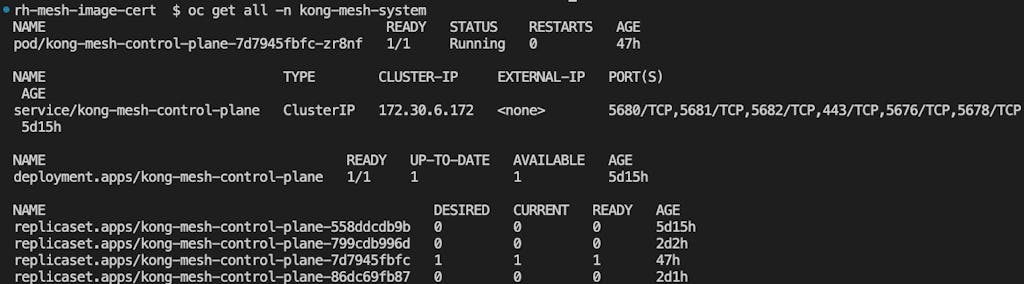

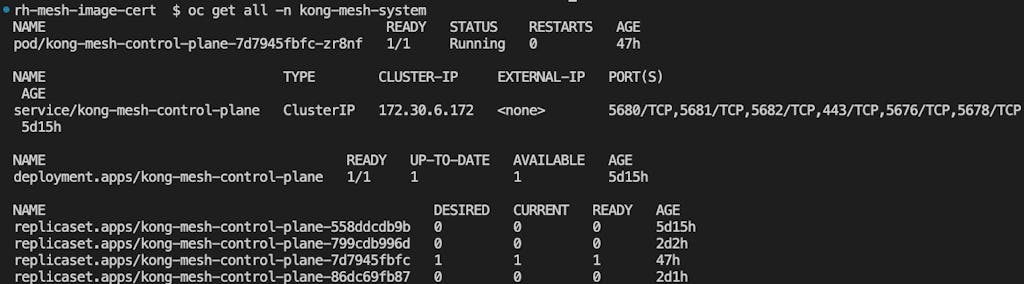

When the install completes, you should see a service for the mesh control plane in the namespace, plus one pod and its deployment:

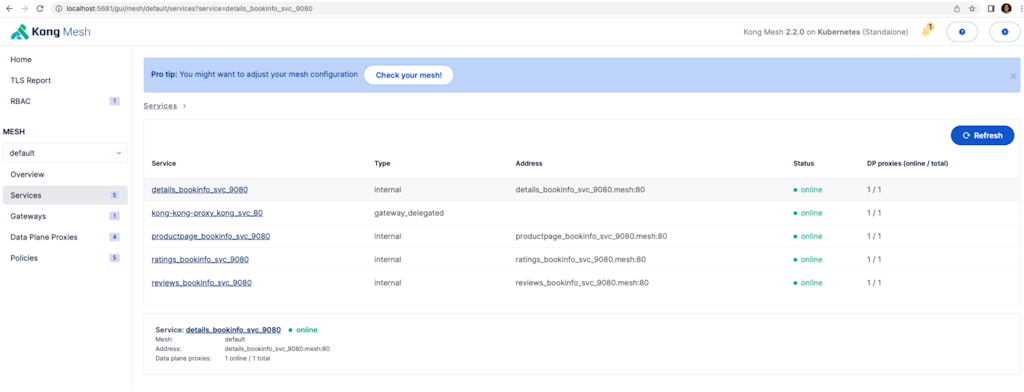

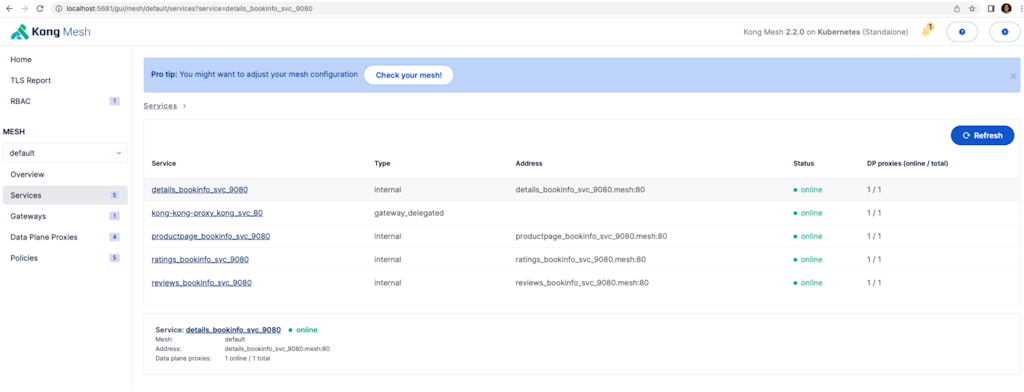
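You can also script this check instead of inspecting the output by eye. The sketch below parses `oc get pods -o json` and reports whether every container in every pod is ready. The namespace default is an assumption, and `fetch_pods` needs `oc` on the PATH and an active login.

```python
import json
import subprocess


def pod_ready(pod: dict) -> bool:
    """A pod counts as ready only if it reports container statuses and all are ready."""
    statuses = pod.get("status", {}).get("containerStatuses", [])
    return bool(statuses) and all(s.get("ready", False) for s in statuses)


def all_pods_ready(pod_list: dict) -> bool:
    """True if the namespace has pods and every container in every pod is ready."""
    pods = pod_list.get("items", [])
    return bool(pods) and all(pod_ready(p) for p in pods)


def fetch_pods(namespace: str = "kong-mesh-system") -> dict:
    """Run `oc get pods -o json` and parse its output."""
    out = subprocess.run(["oc", "get", "pods", "-n", namespace, "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)

# Usage against a live cluster: print(all_pods_ready(fetch_pods()))
```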

Next, open the Kong Mesh GUI. Because this is a demo, we'll port-forward the control plane and head to http://localhost:5681/gui in the browser:
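The port-forward can be built the same way. This sketch assumes the control plane service is named `kong-mesh-control-plane`. Port 5681 is the control plane's HTTP API port, which also serves the GUI under `/gui`.

```python
import shlex

GUI_URL = "http://localhost:5681/gui"


def port_forward_cmd(namespace: str = "kong-mesh-system",
                     service: str = "kong-mesh-control-plane") -> list:
    # Forward local port 5681 to the control plane's API/GUI port.
    return ["oc", "port-forward", f"svc/{service}", "5681:5681", "-n", namespace]


if __name__ == "__main__":
    print(shlex.join(port_forward_cmd()))
    print("Then open", GUI_URL)
```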

Deploy KIC and bookinfo

We want to test an application and make sure things are working, so let's deploy the Kong Ingress Controller (KIC) and bookinfo.
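Here is a sketch of the KIC deployment. It assumes the `kong/kong` chart from `https://charts.konghq.com` and a hypothetical `kong` namespace. It also assumes that the `kuma.io/sidecar-injection=enabled` namespace label turns on sidecar injection in your Kong Mesh version (older releases used an annotation). The `kuma.io/gateway: enabled` pod annotation tells the injected sidecar to run in gateway mode. In that mode, the Kong proxy receives inbound traffic directly, while its requests to upstream services still travel through the mesh.

```python
import shlex

KIC_NAMESPACE = "kong"  # hypothetical namespace for the ingress controller

KIC_CMDS = [
    ["oc", "new-project", KIC_NAMESPACE],
    # Ask Kong Mesh to inject sidecars into pods created in this namespace.
    ["oc", "label", "namespace", KIC_NAMESPACE, "kuma.io/sidecar-injection=enabled"],
    ["helm", "repo", "add", "kong", "https://charts.konghq.com"],
    ["helm", "repo", "update"],
    # Run the sidecar in gateway mode; the dot in the annotation key is escaped for --set.
    ["helm", "install", "kong", "kong/kong", "--namespace", KIC_NAMESPACE,
     "--set", "podAnnotations.kuma\\.io/gateway=enabled"],
]

if __name__ == "__main__":
    for cmd in KIC_CMDS:
        print(shlex.join(cmd))
```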

Finally, bookinfo. Give the service accounts permission to use the kong-mesh-sidecar SCC, then deploy a pared-down bookinfo app and its ingress resources:
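The bookinfo step can be sketched as follows. It assumes the kong-mesh-sidecar SCC already exists on the cluster and uses the service-account names from the upstream bookinfo manifests; for a pared-down app, drop any you don't deploy. The namespace and manifest filename are hypothetical.

```python
import shlex

BOOKINFO_NAMESPACE = "bookinfo"  # hypothetical
# Service accounts defined by the upstream bookinfo manifests.
BOOKINFO_SERVICE_ACCOUNTS = ["bookinfo-productpage", "bookinfo-details",
                             "bookinfo-reviews", "bookinfo-ratings"]


def bookinfo_cmds(manifest: str = "bookinfo.yaml") -> list:
    """Grant the sidecar SCC to each service account, then apply the app manifest."""
    cmds = [["oc", "adm", "policy", "add-scc-to-user", "kong-mesh-sidecar",
             "-z", sa, "-n", BOOKINFO_NAMESPACE]
            for sa in BOOKINFO_SERVICE_ACCOUNTS]
    cmds.append(["oc", "apply", "-n", BOOKINFO_NAMESPACE, "-f", manifest])
    return cmds


if __name__ == "__main__":
    for cmd in bookinfo_cmds():
        print(shlex.join(cmd))
```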

On to validation

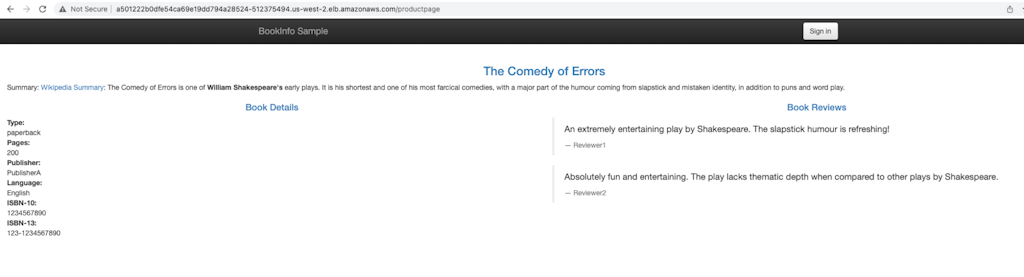

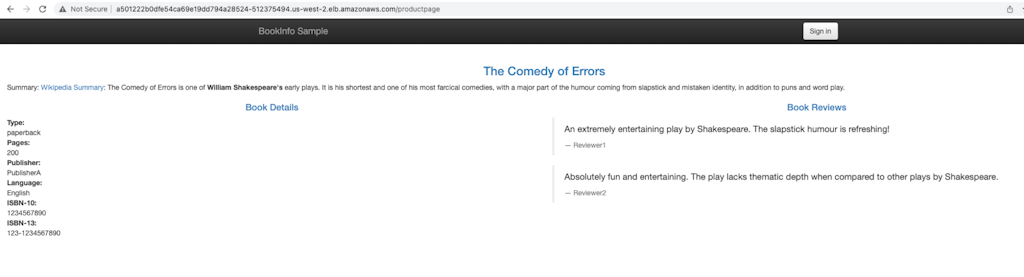

Grab the load balancer address:
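You can script this step too. On AWS, the load balancer behind a `LoadBalancer` service is reported as a hostname, so the sketch reads `.status.loadBalancer.ingress[0].hostname`. It assumes the proxy service is named `kong-kong-proxy` (what the `kong/kong` chart typically produces for a release named `kong`) and lives in the `kong` namespace. Adjust both to match your install.

```python
import shlex


def lb_hostname_cmd(service: str = "kong-kong-proxy", namespace: str = "kong") -> list:
    # AWS load balancers appear as a hostname rather than an IP address.
    return ["oc", "get", "svc", service, "-n", namespace,
            "-o", "jsonpath={.status.loadBalancer.ingress[0].hostname}"]


def productpage_url(lb_hostname: str) -> str:
    """Build the bookinfo URL from the hostname printed by the command above."""
    return f"http://{lb_hostname.strip()}/productpage"


if __name__ == "__main__":
    print(shlex.join(lb_hostname_cmd()))
```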

Navigate to http://<your-lb>/productpage, and you should see the classic bookinfo sample application with most of the bells and whistles. Evaluation mode didn't give us enough data plane proxies for the whole thing 🙂.

Conclusion

In this post, we covered the various topologies available with Kong Mesh, including the net-new Global HA topology released with 2.2.0. Then, we stepped through how to deploy Kong Mesh using the Red Hat UBI-certified images and how to deploy KIC and a sample application into that mesh.

We’re really excited to hit this milestone and have Kong Mesh be a part of the Red Hat Ecosystem Catalog. With Kong Mesh 2.2.0 UBI images certified on Red Hat OpenShift, we’re able to deliver greater value to customers in navigating modernization efforts across cloud native and on-prem environments.

Kong Mesh on Red Hat OpenShift adapts to your needs. It can help you maintain cohesion between on-prem and cloud workloads long term, or modernize at your own pace.