After you've built your microservices-backed application, it's time to deploy and connect them. Luckily, there are many cloud providers to choose from, and you can even mix and match.

Many organizations, like Australia Post, are taking the mix-and-match approach and embracing a multi-cloud architecture. The microservices that make up a system no longer have to stay on a single cloud. Instead, they can span multiple cloud providers, multiple accounts and multiple availability zones.

This approach lets each service scale independently, only where it is needed and in whichever environment is most cost-effective, and it can bring greater visibility across microservices.

However, a multi-cloud strategy needs a thoughtful approach to unifying your microservices, which are now sprawled across multiple clouds, behind a single point of entry for edge connectivity. In this post, we'll use Kong Gateway to do just that. We'll walk through the following:

- An overview of our mini-project: an application made up of microservices deployed across both GCP and AWS

- Setting up a local deployment of Kong Gateway (OSS)

- Configuring Kong Gateway to point to our microservices

- Applying an authentication strategy (JWT) to manage access

- Applying rate limiting to manage traffic

Our Mini-Project

To simplify this demo, our application will have three services:

- Users service: An admin user can fetch a list of all users (GET /users), while a non-admin user can only get their own record (GET /users/:userId). Non-authenticated requests are rejected.

- Orders service: An admin user can fetch a list of orders for a given user ID (GET /orders/:userId), while a non-admin user can only get a list of their own orders (GET /orders/:userId). Non-authenticated requests are rejected.

- Authentication service: When a request is sent (POST /login) with a valid email and password combination, the authentication service returns a signed JWT to authenticate requests to the Users and Orders services.

For simplicity, each of our services references a JSON file for its data rather than a full-fledged database layer. This will keep us focused on the task at hand: deploying a multi-cloud API gateway.

We wrote all of our services in Node.js, and you can find the source code here.

Multi-Cloud Deployment

We have deployed our Users service to Google App Engine. Because we implemented some authorization restrictions (based on the JWT payload) for access to user information, requests sent to these endpoints without a valid JWT result in a 403 Forbidden error. Once we put JWT authentication in place at the gateway, such requests will receive 401 Unauthorized instead.

To confirm that the service is up and running, we've created a /public endpoint that does not have any authorization restrictions:

Meanwhile, we've deployed our Orders service as a Docker container running on an AWS EC2 instance. We've built similar authorization restrictions as well as an open /public endpoint:

Lastly, our Authentication service is on AWS Lambda, which we invoke with the AWS CLI:

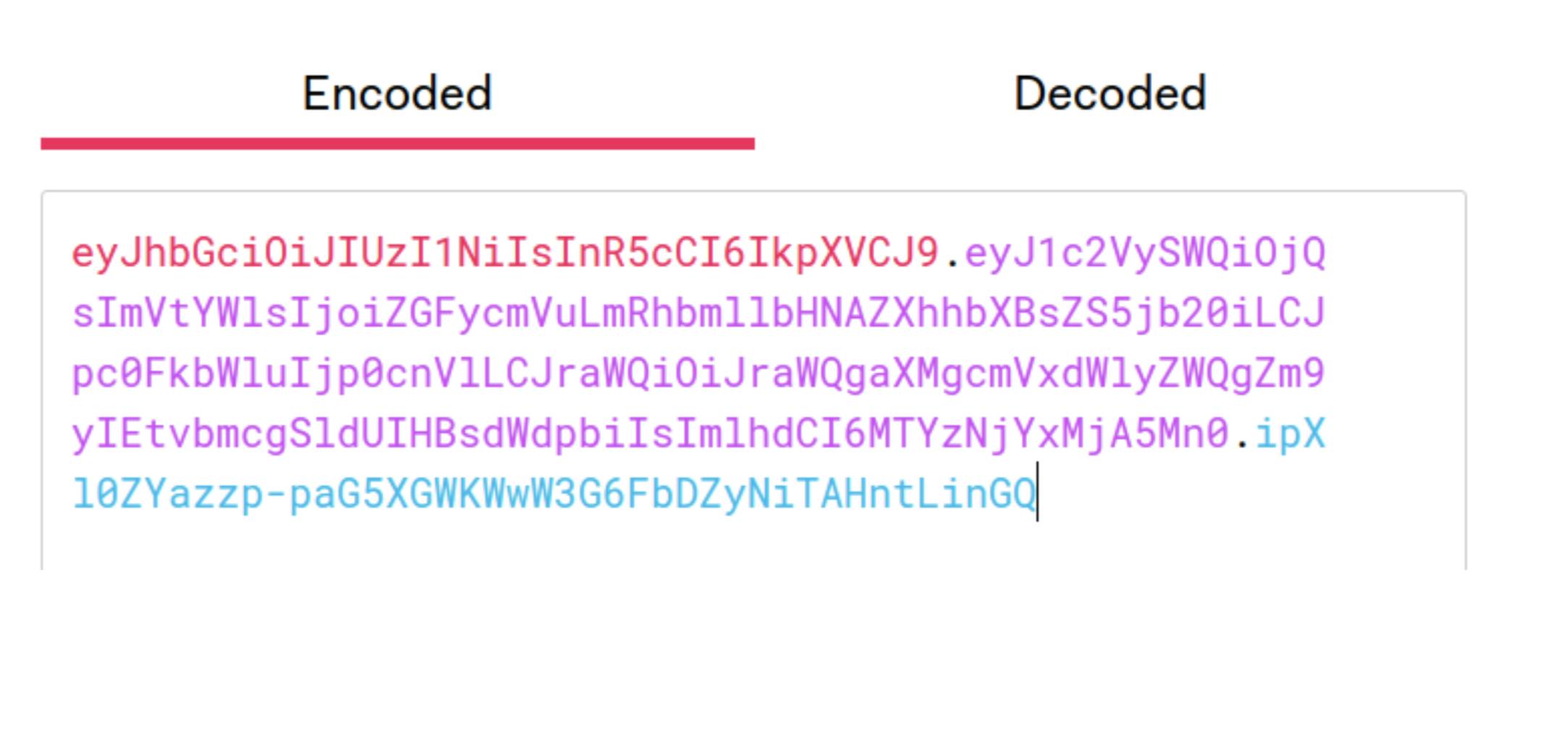

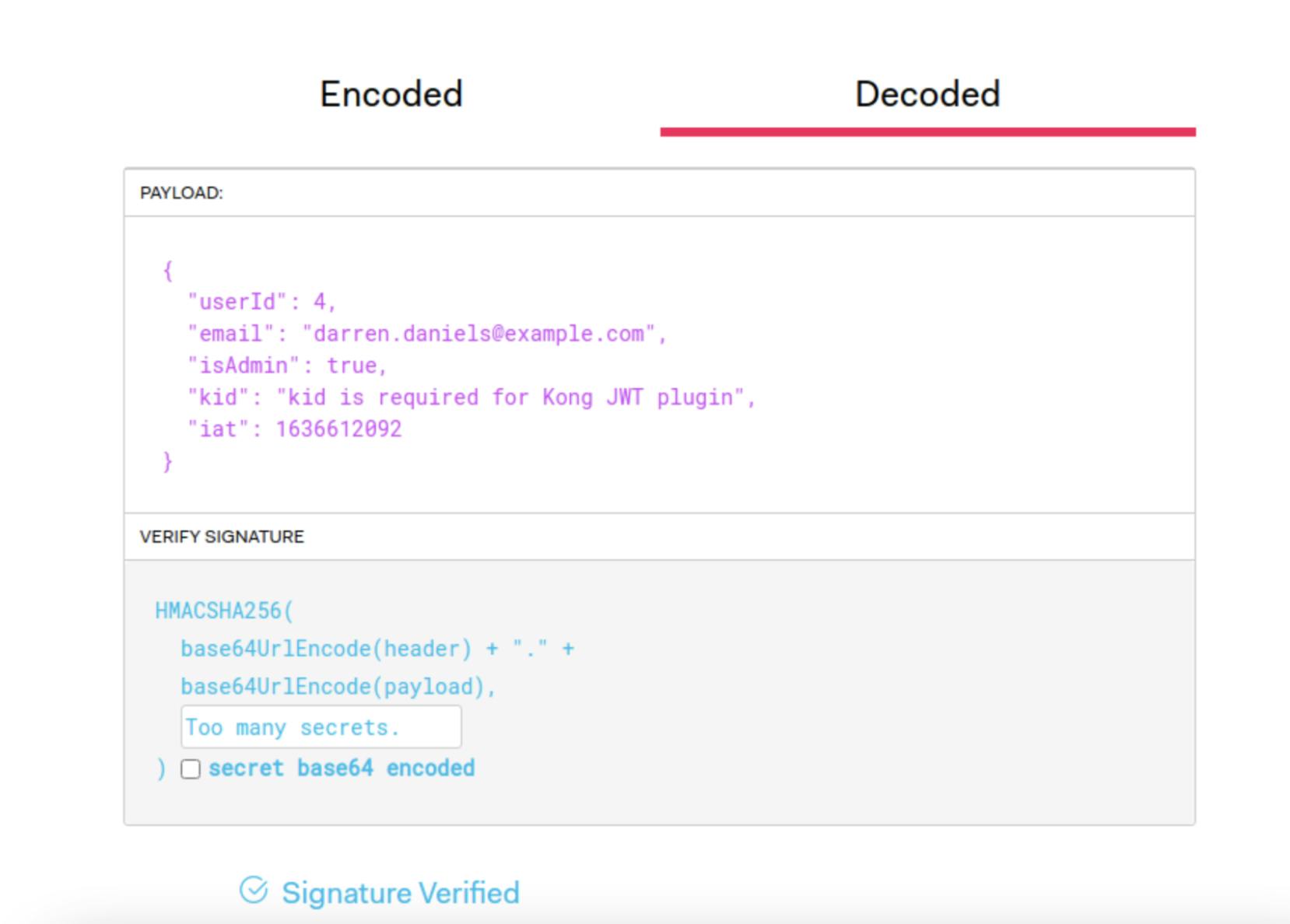

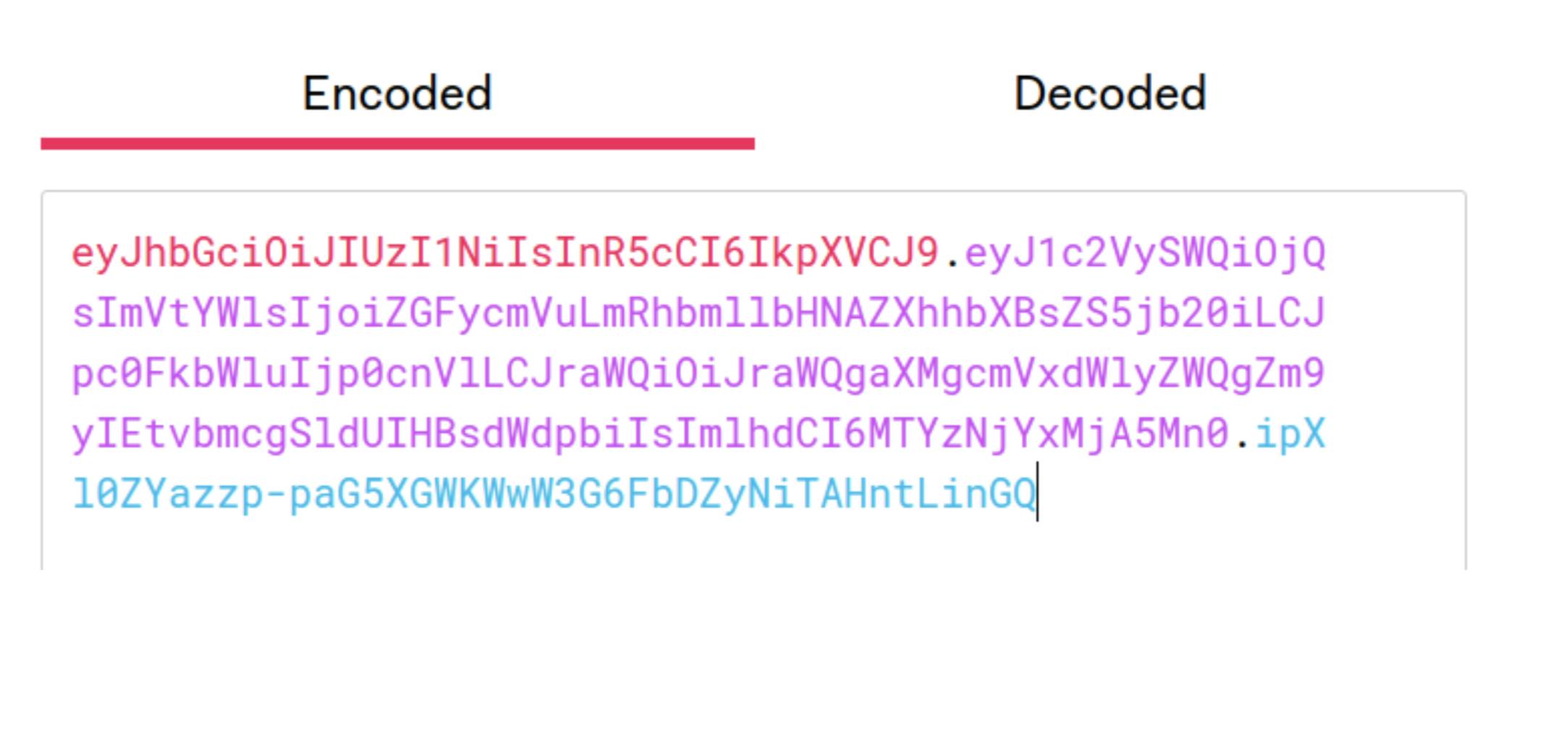

We see that a login attempt with a bad password results in a 401 status code. Meanwhile, a successful login returns a JWT. We can check this JWT at jwt.io to see its payload and to verify the signature.

The payload for our JWT includes the userID and email for the user, along with a boolean isAdmin. We use these values in the Users and Orders services as part of authorization.

Notice the payload includes a string called kid and that we've signed our JWT with the string Too many secrets. We'll discuss both of these pieces later when we set up JWT authentication at the Kong Gateway level.

Set Up Kong API Gateway Open Source

With our microservices sprawled out across different cloud providers, we'll set up Kong Gateway as the single entry point to unify it all. For simplicity in this walkthrough, we'll install Kong Gateway on our local machine. The installation steps for Kong Gateway vary depending on your system.

We can also install Kong to a cloud provider like AWS by using CloudFormation templates.

After installing Kong, we'll configure it to use a database-less declarative configuration. We'll define all of the upstream services, routes and plugins in a YAML file, which Kong loads at startup.

First, create a project folder, and then use Kong to bootstrap a configuration file by running kong config init inside it. This generates a starter kong.yml file in that folder.

Next, we'll configure the kong.conf file, which Kong looks to for its startup configuration. The initial Kong installation provides a kong.conf.default file, which we copy and rename to kong.conf.

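We'll edit kong.conf to make two changes: set database to off so that Kong runs without a database, and set declarative_config to the path of the kong.yml file in our project folder.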

Add Upstream Services

Before we start Kong Gateway, we'll add our upstream services and routes to our declarative configuration file. We edit kong.yml to look like the following:
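A minimal sketch of what this file might contain is shown here. The upstream URLs are placeholders (the real deployment addresses are not given in this post), the route names are illustrative, and the format version and strip_path setting are assumptions that may need adjusting for your Kong release and your services' paths.

```yaml
# kong.yml: declarative configuration (sketch; values marked as assumed are not from the post)
_format_version: "2.1"   # assumed; use a format version your Kong release accepts

services:
  # Users service deployed to Google App Engine (placeholder URL)
  - name: users-service
    url: https://YOUR-PROJECT-ID.appspot.com
    routes:
      - name: users-route        # illustrative route name
        paths:
          - /users
        strip_path: false        # assumed: forward /users/... to the upstream unchanged

  # Orders service running in a Docker container on AWS EC2 (placeholder URL)
  - name: orders-service
    url: http://YOUR-EC2-PUBLIC-DNS:80
    routes:
      - name: orders-route       # illustrative route name
        paths:
          - /orders
        strip_path: false        # assumed, as above
```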

In our configuration, we've specified two upstream services. The first one, users-service, points to our URL for our Users service's Google App Engine deployment. We set up a route so that requests to /users forward to our Users service. Similarly, orders-service points to our URL for the AWS EC2 container where we've deployed our Orders service. Kong takes any requests to the /orders path and forwards them to our AWS-based service.

With our configuration ready to go, we start Kong Gateway by running kong start and passing the path to our kong.conf file with the -c flag.

Test Requests Through Kong Proxy

Now, we can send all of our requests through Kong Gateway, which is listening at http://localhost:8000.

With Kong Gateway set up, our single entry point now manages traffic across our services spread out among two different cloud providers. This is multi-cloud at its most basic.

Add Authentication Service as AWS Lambda

We also have our Authentication Service—a basic email and password system that returns a signed JWT—deployed to AWS Lambda. For this service, we can use Kong's AWS Lambda plugin to invoke our Lambda.

Let's update our kong.yml configuration file to use the plugin. We add the following lines to the file:
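These additions could look roughly like the sketch below. The route name, region, function name and credentials are all placeholders, since the post does not state the actual values; the config field names are those of Kong's aws-lambda plugin.

```yaml
# Additions to kong.yml (sketch; every value in capitals is a placeholder)
routes:
  # A route with no upstream service attached; the plugin handles the request
  - name: login-route            # illustrative route name
    paths:
      - /login

plugins:
  - name: aws-lambda
    route: login-route           # attach the plugin to the route above
    config:
      aws_key: YOUR-IAM-ACCESS-KEY-ID          # IAM user allowed to invoke the Lambda
      aws_secret: YOUR-IAM-SECRET-ACCESS-KEY
      aws_region: YOUR-AWS-REGION              # for example us-east-1
      function_name: YOUR-LAMBDA-FUNCTION-NAME
```

Keeping real credentials out of version control is advisable if this file is committed anywhere.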

We've added a new route and path for Kong Gateway to listen on. Ordinarily, routes map to upstream services, but we're using the AWS Lambda plugin in place of an upstream service. With the plugin associated with the route, the Kong proxy will listen for requests to the /login path and then invoke the AWS Lambda, passing along the request body. We made sure to configure the plugin with the access key and secret for the IAM user with invocation privileges for this Lambda.

Now, we can restart Kong and test out requests to /login.

Excellent. Successful login attempts return a signed JWT in the body.

JWT Authentication With Kong's Plugin

Now that our services are up and running, let's protect the Users and Orders services by requiring authentication with a JWT. For this, we'll use Kong's JWT plugin. If you'd like a more detailed walkthrough of this plugin's features, you can see this post.

We enable the plugin on both upstream services by adding the following lines to the plugins section in the kong.yml declarative configuration:
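One way these entries might look is sketched below. Setting key_claim_name to kid is an assumption inferred from the kid value carried in our token payload; Kong's default for this setting is iss.

```yaml
# Entries for the plugins section of kong.yml (sketch)
plugins:
  - name: jwt
    service: users-service
    config:
      key_claim_name: kid    # assumed: look up the consumer credential by the kid claim

  - name: jwt
    service: orders-service
    config:
      key_claim_name: kid    # assumed, as above
```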

As expected, after we restart Kong and try our service endpoints again, we receive 401 Unauthorized responses.

These are not like the 403 Forbidden errors we received before. Because we didn't provide a valid JWT to get through the door, Kong has refused to forward our requests to the upstream services. You'll notice that even our previously public endpoint is now restricted.

Add Consumers and Secrets

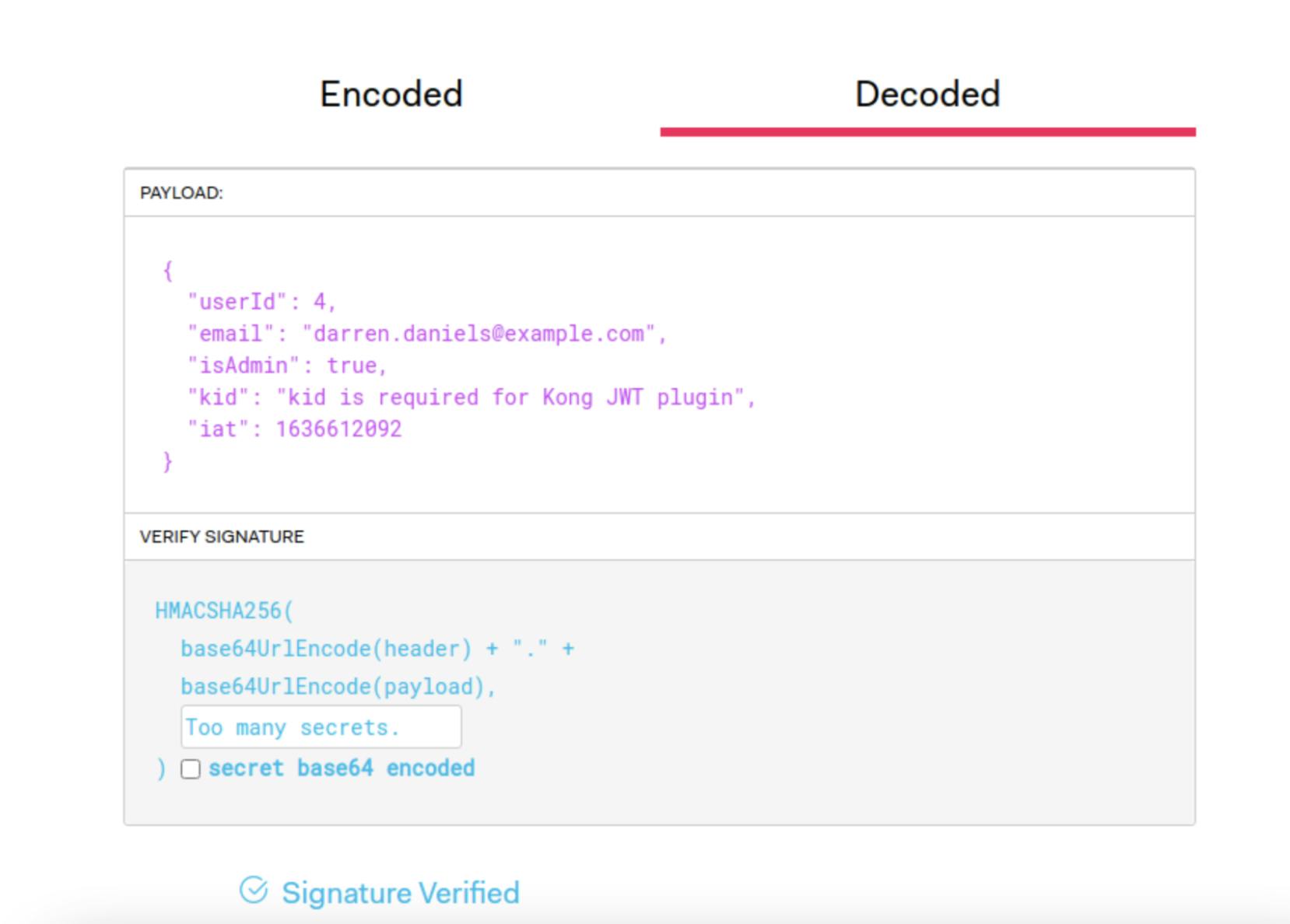

To complete the JWT authentication setup, we need to add a consumer and associate a signing secret with that consumer. The plugin will verify that an incoming JWT was signed with that secret and forward authenticated requests to their final destination.

We add these last few lines to our kong.yml file:
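A sketch of these lines follows. The consumer username is hypothetical; the secret is the signing string mentioned earlier. The key field is left out so that Kong generates one, which matches the lookup step described later, although it can also be set explicitly.

```yaml
# Additions to kong.yml (sketch; the consumer name is hypothetical)
consumers:
  - username: login-service-consumer

jwt_secrets:
  - consumer: login-service-consumer
    algorithm: HS256             # Kong's default; shown for clarity
    secret: "Too many secrets"   # must match the secret the Authentication service signs with
    # key: omitted here, so Kong generates a value to use as the JWT's kid
```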

Next, we restart Kong.

Update JWT Authentication Service to Use a Consumer Key

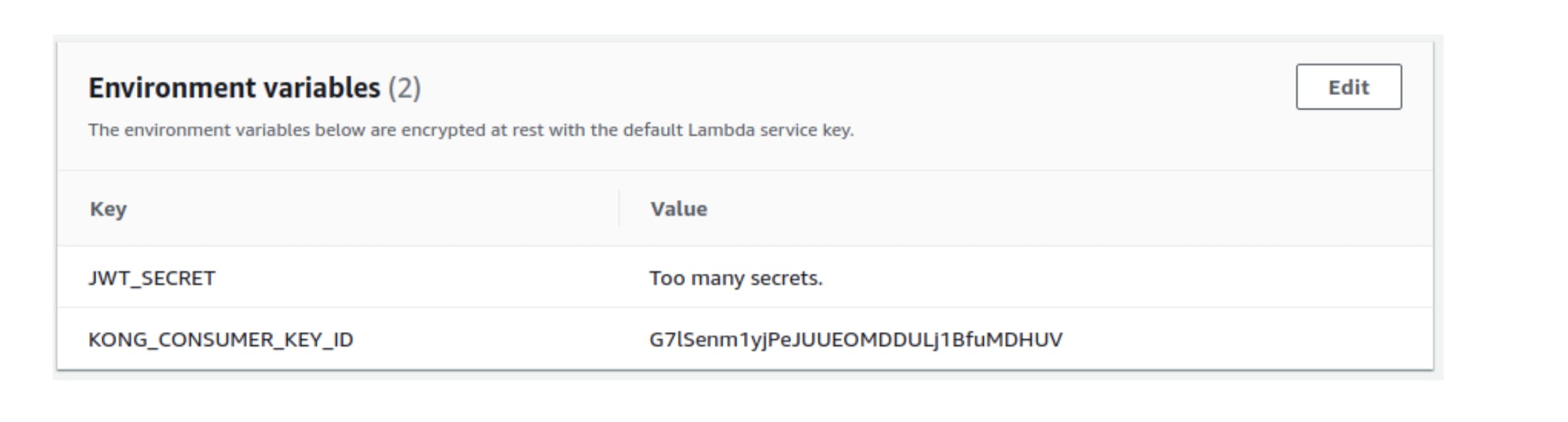

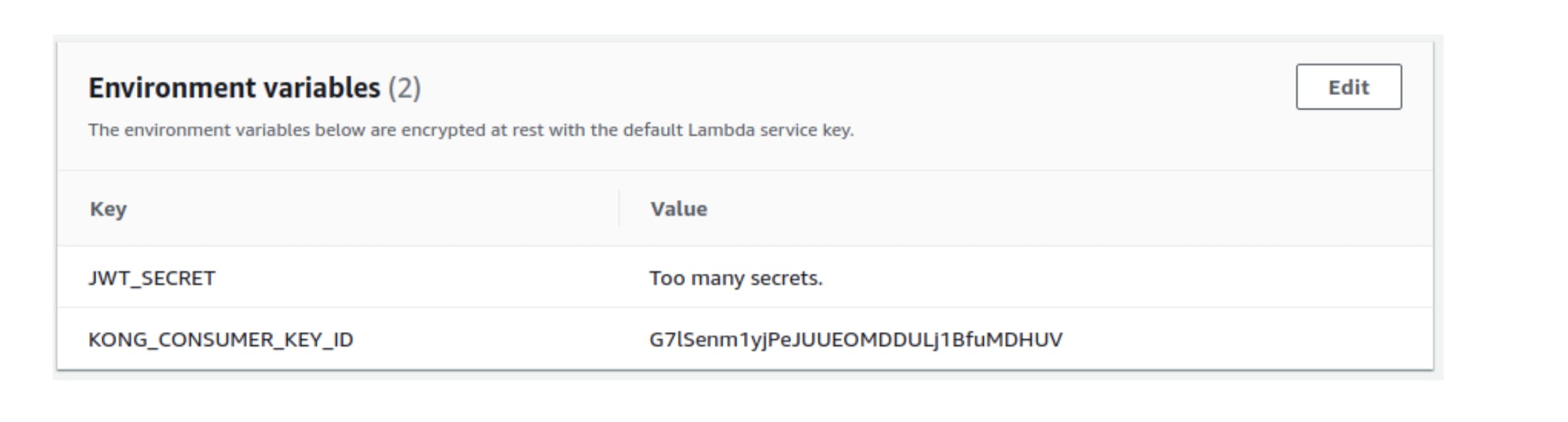

Our Authentication service needs the JWT signing secret and the Kong consumer key ID (kid) to generate a JWT that passes verification. We have the secret (Too many secrets), and we've set an environment variable for our AWS Lambda to use that secret.

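We need to get the key ID for the consumer we just created in Kong and then set an additional environment variable at AWS Lambda to use that as our JWT payload's kid value. To get the key ID, we call Kong's Admin API, which listens on port 8001 by default, with a GET request to /consumers/{consumer}/jwt. The response lists the consumer's JWT credentials, including the key field.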

The key associated with this consumer is what we need to set as the kid for every JWT that we generate. We update our AWS Lambda environment variables accordingly.

End-to-End Test

Now that we're all set up, it's time to test it. We'll log in with good credentials, get a signed and valid JWT, and then use that JWT as we send requests to our Users and Orders services.

It works! Kong Gateway is now set up with JWT authentication on our Users and Orders services, validating JWTs that come from our Authentication service, with these services spread across GCP and AWS!

Rate Limiting and Other Plugins

We've just demonstrated how to leverage the JWT plugin to apply authentication consistently across all our multi-cloud services. Kong has many more plugins that we could apply across the board, covering security, traffic control, monitoring and more.

Just to demonstrate another example, we could apply the Rate Limiting plugin to our services. To do this, we add a few more lines to the plugins section of our kong.yml file:
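These entries might look like the following sketch, which uses the per-minute limits and the local policy discussed in this section.

```yaml
# Entries for the plugins section of kong.yml (sketch)
plugins:
  - name: rate-limiting
    service: users-service
    config:
      minute: 3        # at most three requests per minute
      policy: local    # keep counters in memory on the Kong node

  - name: rate-limiting
    service: orders-service
    config:
      minute: 10       # at most ten requests per minute
      policy: local
```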

With these settings, we can send requests to the Users service up to a limit of three per minute. For the Orders service, we allow up to 10 per minute.

After we restart Kong, we make repeated requests to the Users service. The first three requests return good data. Then, on our fourth request, Kong responds with 429 Too Many Requests and the message "API rate limit exceeded".

Similarly, our eleventh request to the Orders service returns the same 429 response.

You'll notice that we used the local policy in our rate limiting plugin configuration. With this policy, Kong keeps request counters in local memory on each node, so counts are not shared across nodes. We can also configure the plugin to use Redis, in which case we could store counters on a service like Amazon ElastiCache.

For a more detailed walkthrough on using this plugin, you can read this post.

Conclusion

As more organizations, like Australia and New Zealand Banking Group Limited, move toward developing microservices-backed applications deployed to multiple clouds, connectivity is an ever-present concern. What's needed is a simple way to unify and manage a system of sprawling microservices, and that's Kong Gateway.

This article demonstrated how Kong Gateway provides that single point of entry for services deployed across multiple clouds. In addition, we're able to leverage powerful plugins to apply authentication and traffic control measures consistently across all of our services.

If your system is in the clouds, Kong Gateway is a great way to manage and secure your multi-cloud deployment of microservices.