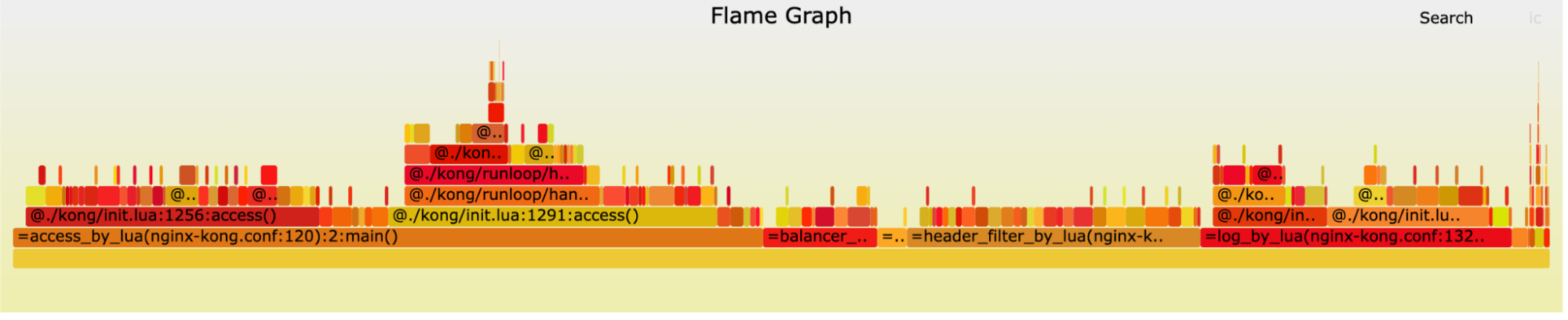

After cache key algorithm rework (instruction-based)

We searched for 'lru,' finding it accounted for 2.6% of CPU time but was associated with only 0.1% of instructions—specifically, LuaJIT bytecode. Typically, if a code path executes around 10% bytecode, it should consume approximately 10% of CPU time. While the number does not always match exactly due to different bytecodes having different costs, the discrepancy shouldn't be as vast.

Additionally, flamegraphs prior to the algorithm rework commit show no spans of 'lru,' despite a 100% cache hit rate, indicating it wasn't a significant performance factor before the change However, after the cache key algorithm refactor it suddenly started showing up in the profiling result. The LRU cache code has not seen any change for a long time, what could have caused this seemingly mysterious change in its performance?

JIT debugging

What is LuaJIT doing behind the scenes?

When dealing with complex dynamic language runtimes like LuaJIT, performance characteristics of the runtime are often affected by subtle codepath changes that are easily missed by human eyes. This is especially true with LuaJIT which performs a “high risk, high reward” JIT compilation of the bytecode at runtime to achieve faster execution for hot codepaths. Being able to JIT a codepath often results in many times of performance improvement while failing to do so can often lead to noticeable performance loss.

LuaJIT operates as a trace-based JIT (Just-In-Time) compiler, mapping the execution flow of bytecodes in real time. It creates a 'trace', a data structure encompassing both the sequence of bytecodes and their runtime context, and then compiles this trace into machine code.

Looking inside the LuaJIT

LuaJIT gives us some interfaces for debugging the JIT compiler.

After enabling the JIT log, we identified a suspicious trace abort within the hot execution path of the cache key algorithm.

To be more specific, here is the line of code that was causing the trace abort during the execution: https://github.com/Kong/kong/blob/c9fd6c127a9576da09d9af4fa4ba1139b30b3509/kong/router/fields.lua#L295

This segment of code, situated in the critical execution path, is responsible for appending routable fields to the router to facilitate subsequent matching. The issue arises from generating a new closure for each request (the FNEW bytecode), a process that leads to a trace abort since LuaJIT is currently unable to compile this operation yet.

What is the trace abort?

A trace abort occurs when LuaJIT encounters a scenario it cannot, or chooses not to, compile, resulting in the decision to halt the trace and refrain from compiling that particular execution flow.

For example, LuaJIT can not JIT compile the standard function error. This makes sense, this function is used for raising a Lua error (which is similar to the concept exception in languages like C++/Java and should not be in the hot path). Implementing JIT compilation on it would result in little performance benefit.

Here is an example list that shows a few functions LuaJIT cannot or chooses not to compile:

debug.* — Never compile

getfenv — Only compile getfenv(0)

string.dump — Never compile

LuaJIT bytecode flow tracing and JIT compilation incur more overhead in addition to the cost of interpreter execution, therefore, it is only triggered when a hot code path has been identified at runtime. In the event of a trace abort, the trace workflow will be terminated immediately until the next hotspot is found. Consequently, this may sometimes affect the opportunity to compile subsequent code segments and cause them to run in the slower interpreted mode as well.

Fixing and result

Resolving this issue is straightforward: replacing the closure with a local function eliminates the need to generate it for every request.

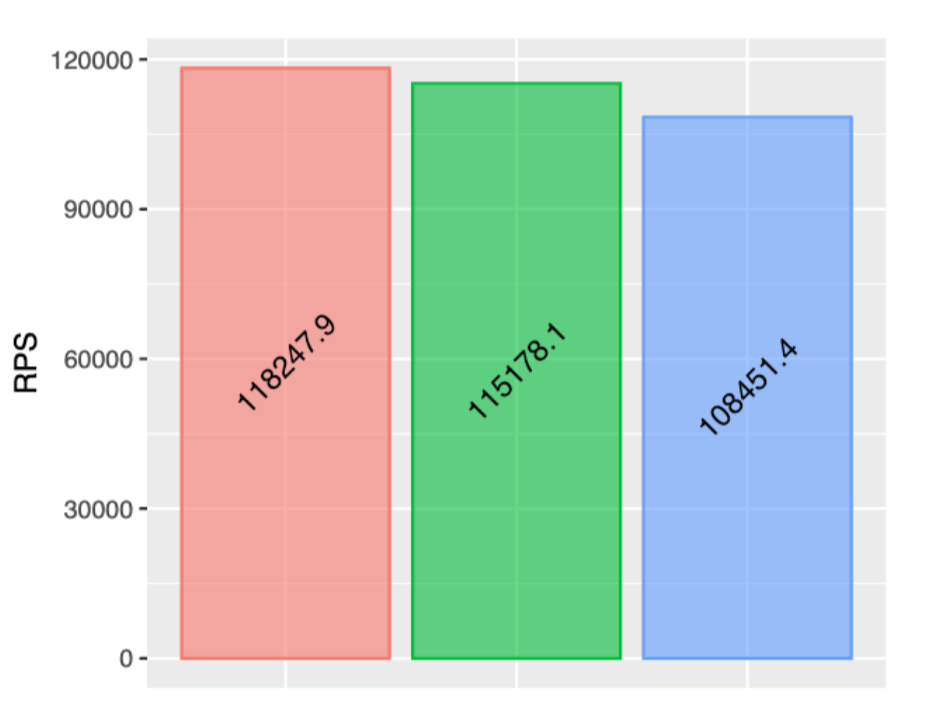

We subsequently conducted another performance comparison involving three versions: