Observability has become critical to ensuring the effective monitoring of application and system performance and health. It focuses on understanding a system’s internal state by analyzing the data it produces in the context of real-time events and actions across the infrastructure. Unlike traditional monitoring, which mainly notifies you when issues arise, observability offers the tools and insights needed to determine not only that a problem exists but also its root cause. This enables teams to take a proactive approach to optimizing and managing systems, rather than simply responding to failures.

To achieve this, observability focuses on three core pillars:

- Logs: Detailed, timestamped records of events and activities within a system, offering a granular view of operations

- Metrics: Quantitative data points that capture various aspects of system performance, such as resource usage, response times, and throughput

- Traces: Records of the path a request follows as it traverses different system components, enabling end-to-end analysis of transactions and interactions

Implementing observability at the API gateway layer is crucial. As the API gateway serves as a central point for managing and routing traffic across distributed services, applying observability to it brings an enterprise-wide perspective on how applications are consumed. Here are some important benefits it can bring:

- Observability at the API gateway layer allows you to track request patterns, response times, and error rates across all applications.

- It enables faster identification of problems, such as high latencies, unexpected error rates, or traffic spikes, before they propagate to other services.

- It provides a first line of defense for troubleshooting and mitigation by monitoring and logging suspicious activity, failed authentication attempts, or potential attacks.

- Since API gateways route requests to multiple applications, observability can help map dependencies between services, offering insights into how they interact and where failures and bottlenecks occur.

- The API gateway can start and maintain distributed tracing across multiple applications by injecting trace IDs into all requests.

- Last but not least, observability at the API gateway layer provides important business metrics, such as the most accessed APIs, usage patterns by client or region, and API performance trends over time. It also lets you monitor and report on service-level agreements (SLAs) and compliance metrics effectively.

This blog post starts with a short overview of OTel and a reference architecture showing how Kong and Dynatrace work together. Next, it describes a basic Kong Konnect deployment integrated with Dynatrace through the OpenTelemetry (OTel) plugin to implement observability processes.

OpenTelemetry Introduction

Here's a concise definition of OpenTelemetry, available on its website:

“OpenTelemetry, also known as OTel, is a vendor-neutral open source Observability framework for instrumenting, generating, collecting, and exporting telemetry data such as traces, metrics, and logs.”

Born as a consolidation of OpenTracing and OpenCensus initiatives, OpenTelemetry has become a de facto standard supported by several vendors, including Dynatrace.

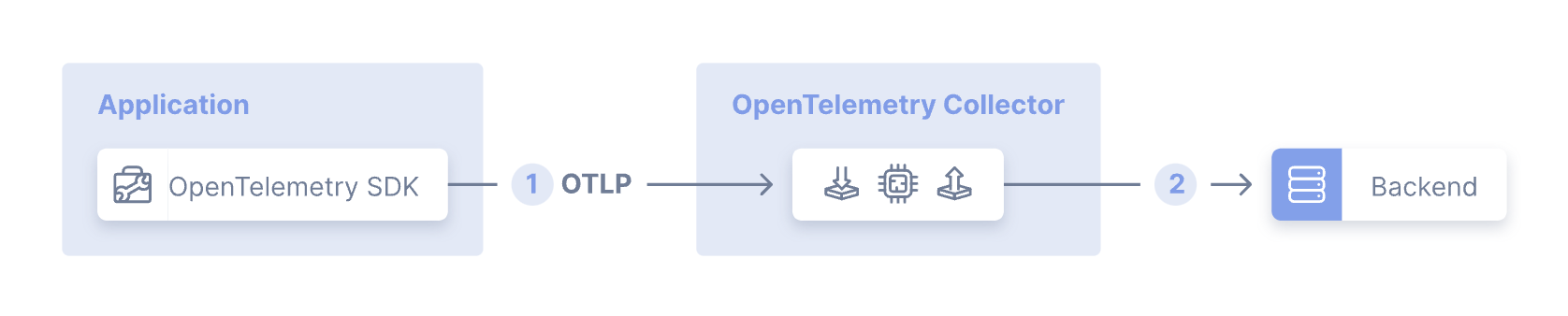

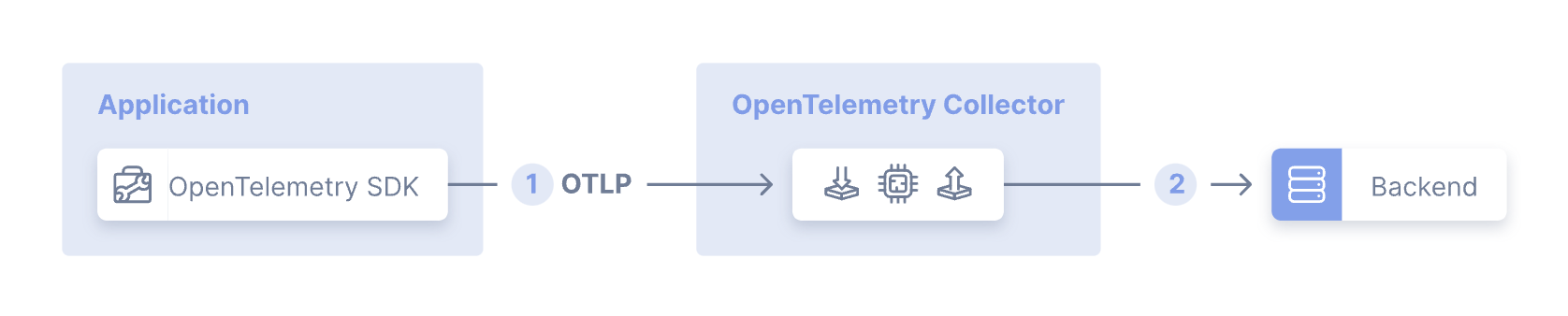

OTel Collector

The OTel specification comprises several components, including, for example, the OpenTelemetry Protocol (OTLP). From the architecture perspective, one of the main components is the OpenTelemetry Collector, which is responsible for receiving, processing, and exporting telemetry data. The following diagram is taken from the official OpenTelemetry Collector documentation page.

Although it's perfectly valid to send telemetry signals directly from the application to the observability backends with no collector in place, it's generally recommended to use the OTel Collector. The collector abstracts the backend observability infrastructure, so services can offload their telemetry quickly and in a standardized manner while the collector takes care of error handling, encryption, data filtering, transformation, etc.

As you can see in the diagram, the collector defines multiple components such as:

- Receivers: Responsible for collecting telemetry data from the sources

- Processors: Apply transformation, filtering, and calculation to the received data

- Exporters: Send data to the Observability backend

The OTel Collector also offers other component types, such as connectors and extensions. Please refer to the OTel Collector documentation to learn more about these components.

The components are tied together in Pipelines, inside the Service section of the collector configuration file.
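To make the receiver, processor, and exporter flow more concrete, here's a minimal Python sketch of how a pipeline ties the three component types together. It's a toy model for illustration only, not the collector's actual implementation; the names `Pipeline`, `otlp_receiver`, `drop_health_checks`, and `backend_exporter`, as well as the sample spans, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Span = Dict[str, object]


@dataclass
class Pipeline:
    """Toy model of a collector pipeline: receivers -> processors -> exporters."""
    receivers: List[Callable[[], List[Span]]]
    processors: List[Callable[[List[Span]], List[Span]]]
    exporters: List[Callable[[List[Span]], None]]

    def run(self) -> None:
        # 1. Receivers collect telemetry from the sources.
        batch = [item for receive in self.receivers for item in receive()]
        # 2. Processors transform or filter the batch, in order.
        for process in self.processors:
            batch = process(batch)
        # 3. Every exporter gets the processed batch (fan-out).
        for export in self.exporters:
            export(batch)


def otlp_receiver() -> List[Span]:
    # Hypothetical spans standing in for data received over OTLP.
    return [
        {"name": "GET /coupon", "duration_ms": 12},
        {"name": "GET /health", "duration_ms": 1},
    ]


def drop_health_checks(batch: List[Span]) -> List[Span]:
    # Example of a filtering processor.
    return [span for span in batch if span["name"] != "GET /health"]


exported: List[Span] = []


def backend_exporter(batch: List[Span]) -> None:
    # Stand-in for an exporter that would send data to the backend.
    exported.extend(batch)


pipeline = Pipeline([otlp_receiver], [drop_health_checks], [backend_exporter])
pipeline.run()
print(exported)
```

In the real collector the same wiring is expressed declaratively in the configuration file rather than in code.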

From the deployment perspective, here's the minimum recommended scenario called Agent Pattern. The application uses the OTel SDK to send telemetry data to the collector through OTLP. The collector, in turn, sends the data to the existing backends. The collector is also flexible enough to support a variety of topologies to address scalability, high availability, fan-out, etc. Check the OTel Collector deployment page for more information.

The OTel Collector comes from the community, but Dynatrace provides a distribution for the OpenTelemetry Collector. It is a customized implementation tailored for typical use cases in a Dynatrace context. It ships with an optimized and verified set of collector components.

Kong Konnect and Dynatrace reference architecture

The Kong Konnect and Dynatrace topology is quite simple in this example:

The main components here are:

- Konnect Control Plane: responsible for administration tasks including APIs and Policies definition

- Konnect Data Plane: handles the requests sent by the API consumers

- Kong Gateway Plugins: components running inside the Data Plane to produce OpenTelemetry signals

- Upstream Service: services or microservices protected by the Konnect Data Plane

- OpenTelemetry Collector: handles and processes the signals sent by the OTel plugin and sends them to the Dynatrace tenant

- Dynatrace Platform: provides a single pane of glass with dashboards, reports, etc.

Simple e-commerce application

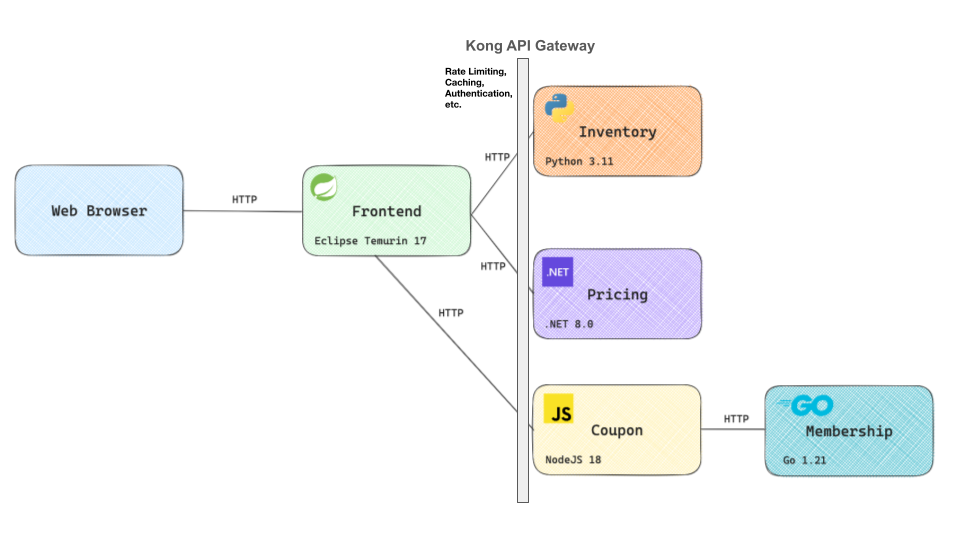

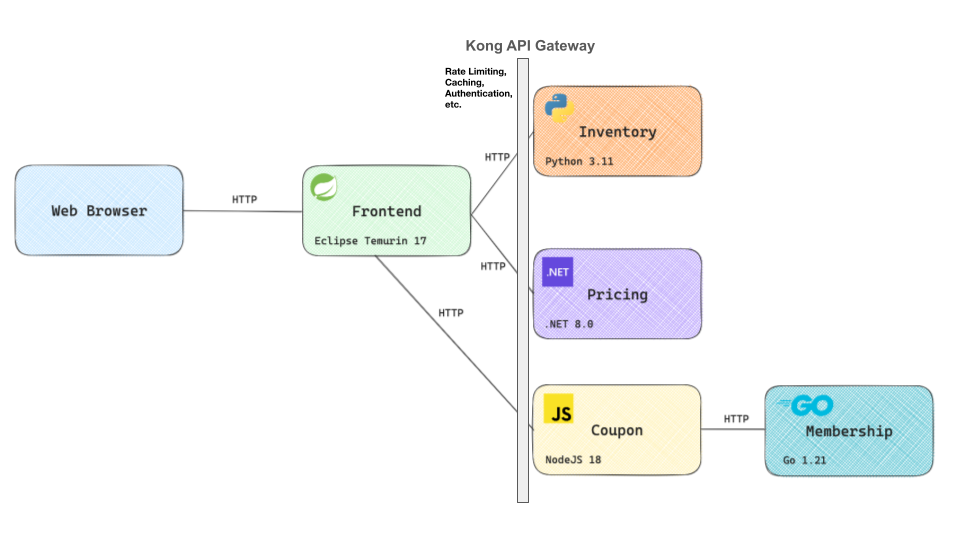

To demonstrate Kong and Dynatrace working together in a more realistic scenario, we're going to take a simple e-commerce application and get it protected by Kong and monitored by Dynatrace. The application is available publicly and here's a diagram with a high-level architecture of the application with its microservices:

Logically speaking, we can separate the microservices into two main layers:

- Backend with Inventory, Pricing, Coupon, and Membership microservices.

- Frontend, responsible for sending requests to the Backend Services

As we mentioned earlier, one of the main goals of an API gateway is to abstract services and microservices from the frontend perspective so we can take advantage of all its capabilities, including policies, protocol abstraction, etc.

In this sense, all requests coming from the frontend microservice are processed by Kong Gateway and routed accordingly to the microservices sitting behind it.

Application instrumentation

One of Kong Gateway's responsibilities is to generate and provide all signals to Dynatrace. This is done by the Kong Gateway Plugins, which can be configured accordingly. For example, from the tracing perspective, the Kong OpenTelemetry Plugin starts all traces. The Kong Prometheus Plugin is responsible for producing Prometheus-based metrics, while the Kong TCP Log Plugin transmits the Kong Gateway access logs to Dynatrace.

Out of the box, the e-commerce app microservices aren't prepared to be part of an observability environment. To take part in it, every component has to be instrumented. In other words, the microservices must add observability code to emit traces, metrics, and logs.

There are two instrumentation options:

- Code-based: Through the use of SDKs, the application or microservice can be extended to emit the OpenTelemetry signals.

- Zero-code: As the name implies, it doesn't require any code to get injected into the microservices.

Specifically for Kubernetes deployments, the OpenTelemetry Operator provides a Zero-code option, called Auto-Instrumentation, which injects necessary code to the application. That's the mechanism we are going to use in this blog post.

So, let's get started with the reference architecture implementation and application deployment.

Kong Konnect Data Plane and Dynatrace observability deployment

It's time to describe the actual deployment of the reference architecture. We can summarize it with the following steps:

- Pre-requisites: Kong Konnect and Dynatrace registration

- Kubernetes cluster creation

- Kong Konnect Data Plane deployment

- OpenTelemetry Operator installation

- OpenTelemetry Collector instantiation

- e-commerce application deployment and instrumentation

- Kong Objects creation and Traces setup

- Adding Metrics and Logs to the OpenTelemetry Collector configuration

1. Pre-requisites: Kong Konnect and Dynatrace registration

Before deploying the Data Plane we should subscribe to Konnect. Click on the Konnect Registration link and present your credentials. Or, if you already have a Konnect subscription, log in to it. You should get redirected to the Konnect landing page:

Similarly, go to the Dynatrace signup link to register and get a 15-day trial. You should get redirected to its landing page:

2. Kubernetes cluster creation

Our Konnect Data Plane, with all Kong Gateway Plugins, alongside the OTel Collector will be running on an Amazon EKS cluster. So, let's create it using eksctl, the official CLI for Amazon EKS, like this:

3. Kong Konnect Data Plane deployment

Any Konnect subscription has a "default" Control Plane defined. Click on it and, inside its landing page, click on “Create a New Data Plane Node”.

Choose Kubernetes as your platform. Click on "Generate certificate", copy and save the Digital Certificate and Private Key as tls.crt and tls.key files as described in the instructions. Also copy and save the configuration parameters in a values.yaml file.

The main comments here are:

- Replace the cluster_* endpoints and server names with yours.

- The “tracing_instrumentations: all” and “tracing_sampling_rate: 1.0” parameters are needed for the Kong Gateway OpenTelemetry plugin we are going to describe later.

- The Kong Data Plane is going to be consumed exclusively by the Frontend microservice, therefore it can be deployed as “type: ClusterIP”.

Now, use the Helm command to deploy the Data Plane. First, add Kong's repo to your Helm environment.

Create a namespace and a secret for your Digital Certificate and Private Key pair and apply the values.yaml file:

You can check Kong Data Plane logs with:

Check the Kubernetes Service, related to the Kong Data Plane, with:

4. OpenTelemetry Operator installation

The next step is to deploy the OpenTelemetry Collector. To get better control over it, we're going to do it through the OpenTelemetry Kubernetes Operator. In fact, the operator is also capable of auto-instrumenting applications and services using OpenTelemetry instrumentation libraries.

Installing Cert-Manager

The OpenTelemetry Operator requires Cert-Manager to be installed in your Kubernetes cluster. Cert-Manager can then issue the certificates used to secure the communication between the Kubernetes API Server and the webhook included in the operator.

Use the Cert-Manager Helm Charts to get it installed. Add the repo first:

Install Cert-Manager with:

Installing OpenTelemetry Operator

Now we're going to use the OpenTelemetry Helm Charts to install it. Add its repo:

Install the operator:

One of the microservices of the e-commerce application is written in Go. By default, the operator has the Go instrumentation disabled. The Helm command has the “extraArgs” parameter to enable it.

The “admissionWebhooks” parameter asks Cert-Manager to generate a self-signed certificate. The operator installs some new CRDs used to create a new OpenTelemetry Collector. You can check them out with:

5. OpenTelemetry Collector instantiation

With the operator in place, we can use the new CRD to deploy our OpenTelemetry Collector. Dynatrace provides a first-class distribution of the collector, including support and security patches independent of the OpenTelemetry Collector release.

The Dynatrace distribution of the OpenTelemetry Collector supports a specific set of components, including Receivers, Processors, Exporters, Extensions, and Connectors, described here.

Create a collector declaration

To get started we're going to manage Traces first. Later on, we'll enhance the collector to process both Metrics and Logs. Here's the declaration:

The declaration has critical parameters defined:

Dynatrace Secrets

Before instantiating the collector we need to create the Kubernetes secrets with the Dynatrace endpoint and API Token. The endpoint refers to your Dynatrace tenant:

And create another secret for the Dynatrace API Token. The Token needs to be created with the “Ingest metrics” scope. Since we're going to manage Logs and Traces, add the “Ingest logs” and “Ingest OpenTelemetry traces” scopes as well. Check the Dynatrace documentation to learn how to issue API Tokens.

In case you want to decode your secret, use the base64 command. For example:
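The same decoding can be sketched in a few lines of Python, since Kubernetes stores Secret values base64-encoded. This is only an illustration: the function name `decode_secret_value` and the token value are hypothetical placeholders, not real credentials.

```python
import base64


def decode_secret_value(encoded: str) -> str:
    """Decode a base64-encoded Kubernetes Secret value into plain text."""
    return base64.b64decode(encoded).decode("utf-8")


# Hypothetical placeholder standing in for a value read from a Secret's "data" field.
hypothetical_token = "example-api-token"
encoded = base64.b64encode(hypothetical_token.encode("utf-8")).decode("ascii")

print(decode_secret_value(encoded))
```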

Deploy the collector

You can instantiate the collector by simply submitting the declaration:

If you want to destroy it run:

Check the collector's log with:

Based on the declaration, the deployment creates a Kubernetes service named “collector-kong-collector” listening on ports 4317 and 4318. That means that any application, including Kong Data Plane, should refer to the OTel Collector's Kubernetes FQDN (e.g., http://collector-kong-collector.opentelemetry-operator-system.svc.cluster.local:4318/v1/traces) to send data to the collector. The “/v1/traces” path is the default the collector uses to handle requests with trace data.
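As a quick illustration of how that FQDN is put together, here's a small Python sketch that builds the endpoint from the Kubernetes Service name, the namespace, the port, and the path. The helper name `otlp_http_endpoint` is hypothetical; the values are the ones used in this post.

```python
def otlp_http_endpoint(service: str, namespace: str,
                       port: int = 4318, path: str = "/v1/traces") -> str:
    """Build the in-cluster URL of the collector's OTLP/HTTP endpoint."""
    # Kubernetes Services resolve as <service>.<namespace>.svc.cluster.local
    return f"http://{service}.{namespace}.svc.cluster.local:{port}{path}"


endpoint = otlp_http_endpoint("collector-kong-collector",
                              "opentelemetry-operator-system")
print(endpoint)
```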

6. e-commerce application instrumentation and deployment

One of the most powerful capabilities provided by the OpenTelemetry Operator is the ability to auto-instrument your services. With such functionality, the operator injects and configures libraries for .NET, Java, Node.js, Python, and Go services. Considering the multi-language scenario, it is a good fit for the e-commerce application.

Here's a diagram illustrating how Auto-Instrumentation works. The instrumentation depends on the programming language used to develop each microservice. For most languages, the CRD uses the OTLP HTTP protocol, which the OTel Collector serves on port 4318. The exception is Node.js, where the CRD uses gRPC and therefore should refer to port 4317.
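The port rule described above can be summarized in a tiny Python sketch. It only encodes what this post states (gRPC on 4317 for Node.js, OTLP HTTP on 4318 for the other languages); the function name `otlp_port_for` is hypothetical.

```python
OTLP_GRPC_PORT = 4317
OTLP_HTTP_PORT = 4318


def otlp_port_for(language: str) -> int:
    """Return the collector port a language's auto-instrumentation should target."""
    normalized = language.strip().lower().replace(".", "")
    # Per the description above, only Node.js uses gRPC.
    return OTLP_GRPC_PORT if normalized == "nodejs" else OTLP_HTTP_PORT


for lang in ("Python", "Go", "Node.js"):
    print(lang, otlp_port_for(lang))
```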

The instrumentation process is divided into two steps:

- Configuration using the Instrumentation Kubernetes CRD

- Kubernetes deployment annotations to get the code instrumented

Instrumentation Configuration

First of all, we need to use the Instrumentation CRD installed to configure it. Here's the declaration:

Some comments about it:

- For tracing, with the “propagators” section, it supports the OpenTelemetry Context Propagation, which defines the W3C TraceContext specification as the default propagator.

- The “sampler” section controls the number of traces collected (sampling) and sent to the Observability system, in our case, Dynatrace.

- As we mentioned earlier, the OTLP endpoint the Instrumentation should use depends on the Programming Language. The declaration has a specific configuration for each one of them.

- Lastly, as an exercise, for the Go Lang section, we've configured the OTel service name as well as the Image the Auto-Instrumentation process should use.
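To give an idea of what the W3C TraceContext propagator mentioned in the first bullet carries between services, here's a minimal Python sketch that creates and parses a `traceparent` header (version, 16-byte trace ID, 8-byte parent span ID, and flags, all hex-encoded). It's an illustration of the header format only, not the code the Auto-Instrumentation libraries run; the function names are hypothetical.

```python
import re
import secrets

# traceparent = version "-" trace-id "-" parent-id "-" trace-flags
TRACEPARENT_RE = re.compile(
    r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$"
)


def new_traceparent(sampled: bool = True) -> str:
    """Create a traceparent header value for a brand new trace."""
    trace_id = secrets.token_hex(16)  # 32 hex characters
    span_id = secrets.token_hex(8)    # 16 hex characters
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def parse_traceparent(header: str) -> dict:
    """Split a traceparent header into its fields, rejecting malformed values."""
    match = TRACEPARENT_RE.match(header.strip())
    if match is None:
        raise ValueError(f"malformed traceparent: {header!r}")
    version, trace_id, parent_id, flags = match.groups()
    # All-zero IDs are invalid according to the specification.
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        raise ValueError("trace-id and parent-id must not be all zeros")
    return {
        "version": version,
        "trace_id": trace_id,
        "parent_id": parent_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }


print(parse_traceparent(new_traceparent()))
```

Every service that receives the header keeps the trace ID and replaces the parent ID with its own span ID before calling the next service, which is what lets the backend stitch the spans into one trace.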

For all Instrumentation CRD declaration sections, check the General OpenTelemetry SDK configuration page where you can find all related parameters. For example: the OTEL_TRACES_SAMPLER SDK configuration maps the “sampler” CRD section declaration.

Configure the Auto-Instrumentation process by submitting the declaration:

If you want to delete it run:

Application deployment

Now, with the Auto-Instrumentation process properly instructed, let's deploy the Application. The original Kubernetes declaration can be downloaded from:

There are two main points we should change in order to get the Application properly deployed:

- Originally, the frontend microservice is configured to communicate directly with each one of the backend microservices (Inventory, Pricing and Coupon). We need to change that so it sends requests to Kong API Gateway instead.

- In order to get instrumented, each microservice has to have specific annotations so the Auto-Instrumentation process can take care of it and inject the necessary code into it.

Frontend deployment declaration

Here's the original Frontend microservice deployment declaration:

You can manually update the declaration or use the yq tool. yq is a powerful YAML, JSON and XML text processor very useful if you want to automate the process.

The following commands update the “env” section, replacing the original endpoints with Kong references, including Kong's Kubernetes Service FQDN (“kong-kong-proxy.kong”) and the Kong Route (e.g. “/inventory”). We don't have the Kong Routes defined yet; that is what the next section of the document describes. Please check the yq documentation to learn more about it.

Kubernetes deployment annotations

The second update is going to add Kubernetes annotations so the Auto-Instrumentation process can do its job. Here are the yq commands. Note that for each microservice, we use a different annotation to tell the Auto-Instrumentation process which Programming Language it should consider. For example, the “Inventory” microservice, written in Python, has the "instrumentation.opentelemetry.io/inject-python": "true" annotation injected.

The Auto-Instrumentation process works slightly differently for Go Lang-based microservices, like “Membership”. It requires another annotation with the path of the target Container executable. If you check the “keyval/odigos-demo-membership:v0.1.14” image you'll see the entry point is defined as “/membership”.
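As a rough sketch of the annotation logic described above, the following Python function builds the annotations for a given language. The `inject-python` annotation comes from this post; the `inject-go` and `otel-go-auto-target-exe` annotation names are an assumption based on the OpenTelemetry Operator's naming, so please double-check them against the operator documentation. The function name is hypothetical.

```python
from typing import Dict, Optional

ANNOTATION_PREFIX = "instrumentation.opentelemetry.io"


def instrumentation_annotations(language: str,
                                go_target_exe: Optional[str] = None) -> Dict[str, str]:
    """Build the Pod annotations that request auto-instrumentation for a language."""
    annotations = {f"{ANNOTATION_PREFIX}/inject-{language}": "true"}
    if language == "go":
        # Go needs the path of the container executable to instrument.
        if not go_target_exe:
            raise ValueError("Go instrumentation requires the target executable path")
        # Assumed annotation name; verify it in the operator documentation.
        annotations[f"{ANNOTATION_PREFIX}/otel-go-auto-target-exe"] = go_target_exe
    return annotations


print(instrumentation_annotations("python"))                 # e.g. "Inventory"
print(instrumentation_annotations("go", "/membership"))      # e.g. "Membership"
```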

Here's a snippet showing the annotations injected for the “Pricing” microservice:

Now we're finally ready to deploy the app:

If you want to delete it run:

Check the deployment

To get a better understanding of the manipulation the Auto-Instrumentation process does, let's check, for example, the “Inventory” Pod, where the microservice is written in Python. If you run:

You'll see the Auto-Instrumentation process ran an Init Container to instrument the code and defined environment variables specifying how to connect to the OTel Collector. Check the Python auto-instrumentation repo to learn more.

Another interesting check is the “Membership” Pod. Since the microservice is written in Go, the Auto-Instrumentation solves the problem in a different way. To check that out, if you run:

You should get:

Meaning, the process has injected another container inside the Pod, playing the sidecar role. Again, it also defines environment variables just like it did for the first Pod we checked. Here's the Go auto-instrumentation repo to learn more.

7. Kong Objects creation and Traces setup

To complete our initial deployment we need to configure the Kong Objects to expose the e-commerce Backend microservices and Kong Gateway OpenTelemetry plugin.

decK and Konnect PAT

The OpenTelemetry Plugin and the actual Kong Objects will be configured using decK (declarations for Kong), a command-line tool that allows you to manage Kong Objects in a declarative way. In order to use it, we need a Konnect PAT (Personal Access Token). Please refer to the Konnect documentation to learn how to generate a PAT.

Kong Services and Routes decK declaration

Below you can see two decK declarations. The first one defines Kong Services and Routes. The second manages the Kong Plugins.

For the first declaration here are the main comments:

- A Kong Service for each e-commerce Backend microservice (Coupon, Inventory, and Pricing). Note that, since the Membership microservice is not consumed directly by the Frontend microservice, we don't need to define a Kong Service for it.

- A Kong Route for each Kong Service to expose them with the specific paths “/coupon”, “/inventory” and “/pricing”. Each Kong Route matches the Kubernetes declaration update we did for the Frontend microservice.

Kong Plugins decK declaration

The second declaration defines:

- A globally configured Kong OpenTelemetry Plugin, meaning it's going to be applied to all Kong Services. The main configuration here is the “traces_endpoint” parameter. As you can see, it refers to the OpenTelemetry Collector instance we deployed previously.

- The Plugin supports the OpenTelemetry Context Propagation, which defines the W3C TraceContext specification as the default propagator.

- The Plugin sets “kong-otel” as the name of the Service getting monitored.

The Kong Gateway OpenTelemetry Plugin supports other propagators through the following headers: Zipkin, Jaeger, OpenTracing, Datadog, AWS X-Ray, and GCP X-Cloud-Trace-Context. The plugin also allows us to extract, inject, clear, or preserve tracing headers as requests pass through the gateway.

All the instrumentations made by the plugin are configured with the “tracing_instrumentations: all” and “tracing_sampling_rate: 1.0” parameters we used for the Kong Data Plane deployment. As you can imagine the sampling rate is critical and can impact the overall performance of the Data Plane. For the purpose of this blog post we have set it as “1.0”. For a production-ready environment you should configure it accordingly. The plugin also has a “sampling_rate” parameter that can be used to override the Data Plane configuration.
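To see why the sampling rate matters, here's a minimal Python sketch of a trace-ID ratio sampler: it derives a deterministic keep-or-drop decision from the trace ID, so every component that sees the same trace makes the same choice. This is a generic illustration and not necessarily how Kong Gateway implements sampling; the function name `should_sample` is hypothetical.

```python
def should_sample(trace_id_hex: str, sampling_rate: float) -> bool:
    """Decide whether to keep a trace, given a rate between 0.0 and 1.0."""
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0.0 and 1.0")
    # Treat the low 64 bits of the trace ID as a uniformly distributed number
    # and keep the trace when it falls below the rate's share of the range.
    value = int(trace_id_hex[-16:], 16)
    return value < sampling_rate * 2**64


trace_id = "4bf92f3577b34da6a3ce929d0e0e4736"
print(should_sample(trace_id, 1.0))   # a rate of 1.0 keeps every trace
print(should_sample(trace_id, 0.0))   # a rate of 0.0 drops every trace
```

With a rate of 1.0, as used in this post, every request produces a trace, which is convenient for a demo but adds processing and export overhead on a busy Data Plane.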

Now, before submitting the declaration to Konnect to create the Kong Objects, you can test the connection first. Please define a PAT environment variable with your Konnect PAT.

Apply the declarations with:

Now we're free to use the application and get the Kong Routes consumed.

Consume the e-Commerce Application

The Frontend Service has been deployed as “type=ClusterIP” so we need to expose it with, for example:

For macOS, you can open the application with:

You may note that the Frontend application does not present any other page. However, you can follow the microservice's activity by checking its logs:

Dynatrace Distributed Tracing

You should see new incoming traces in Dynatrace. For example, the “/coupon” trace was started by Kong and has two spans added by the “Coupon” and “Membership” microservices, showing us the Auto-Instrumentation process is working properly.

8. Adding Metrics and Logs to the OpenTelemetry Collector configuration

Now, let's add Metrics and Logs to our environment. Kong has supported Prometheus-based metrics for a long time through the Prometheus Plugin. In an OpenTelemetry scenario the plugin is still an option: we can add a specific “prometheusreceiver” to the collector configuration. The receiver is responsible for scraping the Data Plane's Status API, which, by default, is configured with the “:8100/metrics” endpoint.

To ingest Kong Gateway's access logs, we can use one of the log processing plugins Kong Gateway provides, for example the TCP Log Plugin.

New collector configuration

The declaration has critical parameters defined:

Still inside the “service” section we have included the new “logs” pipeline. Its “receivers” are set to “otlp” and “tcplog” to get data from both Kong Gateway Plugins. Its “exporters” setting points to the same “otlphttp” exporter, which sends data to Dynatrace.

Kubernetes Service Account for Prometheus Receiver

The OTel Collector Prometheus Receiver fully supports the scraping configuration defined by Prometheus. The receiver, more precisely, uses the “pod” role of the Kubernetes Service Discovery configurations (“kubernetes_sd_config”). Specific “relabel_config” settings with “regex” expressions allow the receiver to discover Kubernetes Pods that belong to the Kong Data Plane deployment.

One of the relabeling configs is related to the port 8100. This port configuration is part of the Data Plane deployment we used to get it running. Here's the snippet of the “values.yaml” file we used previously:

That's Kong Gateway's Status API, where the Prometheus plugin exposes the metrics it produces. In fact, the endpoint the receiver scrapes is the “:8100/metrics” endpoint mentioned earlier, as specified in the OTel Collector configuration.

On the other hand, the OTel Collector has to be allowed to scrape the endpoint. We can define such permission with a Kubernetes ClusterRole and apply it to a Kubernetes Service Account with a Kubernetes ClusterRoleBinding.

Here's the ClusterRole declaration. It's quite permissive, but it's good enough for this exercise.

Then we need to create a Kubernetes Service Account and bind the Role to it.

Finally, note that the OTel Collector configuration is deployed using the Service Account with serviceAccount: collector and then it will be able to scrape the endpoint exposed by Kong Gateway.

Deploy the collector

Delete the current collector first and instantiate a new one by simply submitting the declaration:

Interestingly enough, the collector service now listens on four ports:

Add the Prometheus and TCP Log plugins to our decK declaration and submit it to Konnect:

Submit the new plugin declaration with:

Consume the Application and check collector's Prometheus endpoint

Using “port-forward”, send a request to the collector's Prometheus endpoint. In a terminal run:

Continue navigating the Application to see some metrics getting generated. In another terminal send a request to Prometheus’ endpoint.

You should see several related Kong metrics including, for example, Histogram metrics like “kong_kong_latency_ms_bucket”, “kong_request_latency_ms_bucket” and “kong_upstream_latency_ms_bucket”. Maybe one of the most important is “kong_http_requests_total” where we can see consumption metrics. Here's a snippet of the output:
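If you'd rather summarize that output programmatically, here's a minimal Python sketch that parses Prometheus text exposition lines and totals “kong_http_requests_total” per HTTP status code. The sample lines and their label values are hypothetical, written only to exercise the parser; they are not real output from this deployment. The sketch also assumes the metric carries a “code” label, and the function name is hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict

# name{labels} value [timestamp]
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>.*)\})?'
    r'\s+(?P<value>\S+)(?:\s+\d+)?$'
)
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def requests_by_status(exposition_text: str,
                       metric: str = "kong_http_requests_total") -> Dict[str, float]:
    """Sum a counter's samples per value of its 'code' label."""
    totals: Dict[str, float] = defaultdict(float)
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank, HELP and TYPE lines
            continue
        match = SAMPLE_RE.match(line)
        if match is None or match.group("name") != metric:
            continue
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        totals[labels.get("code", "unknown")] += float(match.group("value"))
    return dict(totals)


# Hypothetical sample, for illustration only.
SAMPLE = """\
# TYPE kong_http_requests_total counter
kong_http_requests_total{service="coupon",route="coupon",code="200"} 7
kong_http_requests_total{service="pricing",route="pricing",code="200"} 3
kong_http_requests_total{service="pricing",route="pricing",code="500"} 1
kong_upstream_latency_ms_bucket{service="coupon",le="25"} 4
"""

print(requests_by_status(SAMPLE))
```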

Check Metrics and Logs in Dynatrace

One of the main benefits Dynatrace provides is its dashboard creation capabilities. You can create dashboards visually or with DQL (Dynatrace Query Language). As an example, Dynatrace provides a Kong Dashboard where we can monitor the main metrics and the access log.

The Kong Dashboard should look like this.

Connecting Log Data to Traces

Dynatrace has the ability to connect Log Events to Traces. That allows us to navigate to the trace associated with a given log event.

In order to do it, the log event has to have a “trace_id” field with the actual trace ID it is related to. By default, the OpenTelemetry Plugin injects such a field. However, it also includes the format used, in our case “w3c”. For example:

As you can see here, the TCP Log Plugin gets executed after the OpenTelemetry Plugin. So, to solve that, the TCP Log Plugin configuration has “custom_fields_by_lua” set with Lua code that strips the “w3c” part from the field added by the OpenTelemetry Plugin. The new log event can then follow the format Dynatrace looks for:
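The transformation itself is simple. The plugin does it in Lua, but the logic can be modeled in Python as follows. This sketch assumes the OpenTelemetry Plugin writes “trace_id” as a nested object keyed by the format name (“w3c”); the function name and the sample event are hypothetical.

```python
from typing import Any, Dict


def flatten_trace_id(log_event: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the log event with trace_id reduced to a plain string."""
    event = dict(log_event)
    trace_id = event.get("trace_id")
    # Assumed input shape: {"trace_id": {"w3c": "<32 hex characters>"}}
    if isinstance(trace_id, dict) and "w3c" in trace_id:
        event["trace_id"] = trace_id["w3c"]
    return event


# Hypothetical log event, for illustration only.
original = {"trace_id": {"w3c": "4bf92f3577b34da6a3ce929d0e0e4736"}, "status": 200}
print(flatten_trace_id(original))
```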

Here's a Dynatrace Logs app with events generated by the TCP Log Plugin. Choose an event and you'll see the right panel with the “Open trace” button.

If you click on it, you can choose to get redirected to the Dynatrace Trace apps. In the “Distributed Tracing” app you should see the trace with all spans related to it.

Conclusion

The combination of Dynatrace and Kong Konnect enables observability architectures built on OpenTelemetry standards. By leveraging the combined capabilities of these technologies, organizations can strengthen their infrastructure with robust policies, laying a solid foundation for advanced observability platforms.

Try Kong Konnect and Dynatrace for free today! Kong Konnect simplifies API management and improves security across your services infrastructure, while Dynatrace provides end-to-end visibility and automated insights to optimize the performance, security, and user experiences of applications.