Kong Cloud has been using StatsD and Prometheus heavily in monitoring and metrics collecting. In this blog post we discuss the use case of StatsD and Prometheus on Kong Cloud, the performance problem we found, and the way we proposed to solve it.

What is StatsD?

StatsD is a metrics server that accepts events from UDP or TCP protocol and export them to various backends. A typical StatsD event looks like:

host.sfo1.request.count:123|c

Every StatsD event is a string in a format of <metricname>:<value>|<type>. The above example represents a metric called host.sfo1.request.count with the type of counter and the value of 123.

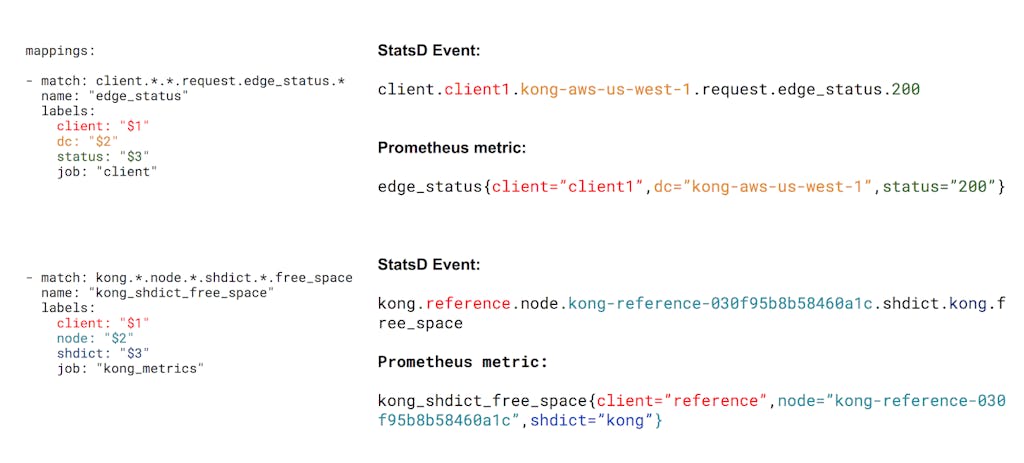

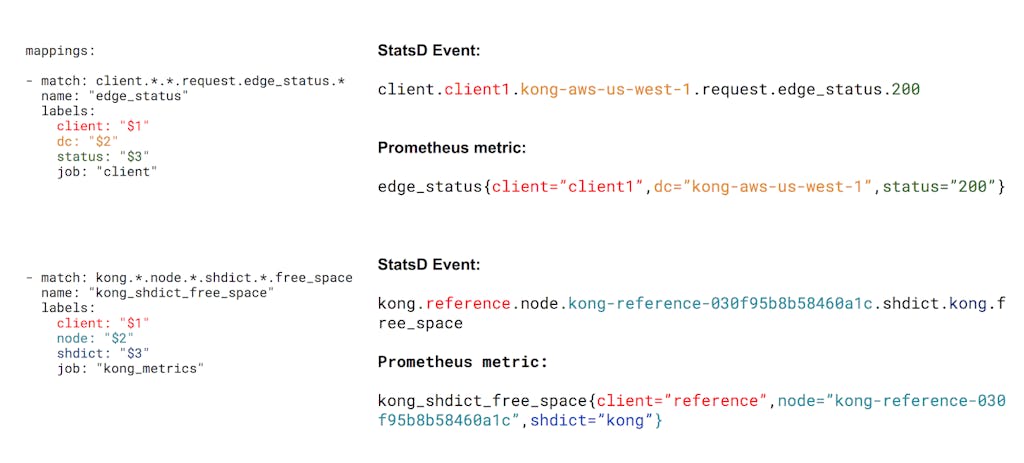

On Kong Cloud, we use the StatsD Prometheus exporter in our metrics pipeline to measure KPIs (Key Performance Indicator) of our service. The StatsD Prometheus exporter is a daemon that listens for StatsD events and exports them in Prometheus exposition formats. The exporter has a mapping config that maps the StatsD metric to a Prometheus metric.

In the example below, two StatsD events are translated according to the mapping config to the left.

The Problem

On Kong Cloud, various StatsD events are generated for each request. When the client request rate climbed up to several thousand requests per second, we spotted a high CPU usage of the StatsD exporter that took one and a half cores on an AWS m4.large instance.

To take a closer look, we started doing some profiling upon the StatsD exporter and used the perf tool to sample stacks. This gave us a rough idea of functions taking up most of the CPU time. Then, we used the perf_data_converter tool to convert perf file perf.data to profile.proto and used pprof to analyze the results.

pprof gave us the percentage of CPU time each functions took in descending order:

(pprof) top100

Showing nodes accounting for 311858250000, 83.29% of 374429750000 total

Dropped 505 nodes (cum <= 1872148750)

flat flat% sum% cum cum%

63493500000 16.96% 16.96% 63493500000 16.96% [[kernel.kallsyms]]

48018750000 12.82% 29.78% 48018750000 12.82% regexp.(*machine).onepass

31408750000 8.39% 38.17% 31408750000 8.39% regexp/syntax.(*Inst).MatchRunePos

17480000000 4.67% 42.84% 17480000000 4.67% runtime.duffcopy

17274250000 4.61% 47.45% 17274250000 4.61% regexp/syntax.EmptyOpContext

15302000000 4.09% 51.54% 15302000000 4.09% regexp.(*inputString).step

15244750000 4.07% 55.61% 15244750000 4.07% runtime.mapiternext

9875250000 2.64% 58.25% 9875250000 2.64% runtime.mallocgc

9310250000 2.49% 60.73% 9310250000 2.49% regexp.onePassNext

7658250000 2.05% 62.78% 7658250000 2.05% runtime.memmove

7192750000 1.92% 64.70% 7192750000 1.92% regexp/syntax.(*Inst).MatchRune

6075750000 1.62% 66.32% 6075750000 1.62% runtime.mapassign_faststr

5156750000 1.38% 67.70% 5156750000 1.38% sync.(*Mutex).Lock

4943500000 1.32% 69.02% 4943500000 1.32% github.com/prometheus/statsd_exporter/vendor/github.com/beorn7/perks/quantile.NewTargeted.func1

4722000000 1.26% 70.28% 4722000000 1.26% runtime.nextFreeFast

4608250000 1.23% 71.51% 4608250000 1.23% runtime.scanobject

4420750000 1.18% 72.69% 4420750000 1.18% sync.(*Mutex).Unlock

4281750000 1.14% 73.84% 4281750000 1.14% runtime.mapiterinit

4207000000 1.12% 74.96% 4207000000 1.12% runtime.heapBitsSetType

3840250000 1.03% 75.99% 3840250000 1.03% regexp.(*machine).tryBacktrack

3628250000 0.97% 76.96% 3628250000 0.97% [[vdso]]

3424000000 0.91% 77.87% 3424000000 0.91% runtime.memclrNoHeapPointers

2755500000 0.74% 78.61% 2755500000 0.74% runtime.heapBitsForObject

2732500000 0.73% 79.34% 2732500000 0.73% regexp.(*bitState).push

2695000000 0.72% 80.06% 2695000000 0.72% main.(*metricMapper).getMapping

2112000000 0.56% 80.62% 2112000000 0.56% regexp.(*Regexp).expand

2089250000 0.56% 81.18% 2089250000 0.56% github.com/prometheus/statsd_exporter/vendor/github.com/prometheus/common/model.hashAdd

2047000000 0.55% 81.72% 2047000000 0.55% runtime.growslice

2043500000 0.55% 82.27% 2043500000 0.55% runtime.greyobject

1939750000 0.52% 82.79% 1939750000 0.52% main.(*Exporter).Listen

1877000000 0.5% 83.29% 1877000000 0.5% runtime.makemap

The largest accumulation of events was system calls. This makes sense, as every UDP socket operation involves a system call. What caught our interest was that the total CPU time taken for the Go regular expression engine was around 37 percent of the CPU, which was twice the system calls.

We also tried rebuilding the StatsD exporter from the source using Go 1.10.3, which gave us this result:

(pprof) top 100

Showing nodes accounting for 75230000000, 82.30% of 91412500000 total

Dropped 542 nodes (cum <= 457062500)

flat flat% sum% cum cum%

17594750000 19.25% 19.25% 17594750000 19.25% [[kernel.kallsyms]]

16792500000 18.37% 37.62% 16792500000 18.37% regexp/syntax.writeRegexp

9554250000 10.45% 48.07% 9554250000 10.45% regexp.onePassCopy

6012750000 6.58% 54.65% 6012750000 6.58% regexp.cleanupOnePass

5165000000 5.65% 60.30% 5165000000 5.65% runtime.sigaltstack

4222250000 4.62% 64.92% 4222250000 4.62% regexp.(*Regexp).allMatches

2703500000 2.96% 67.87% 2703500000 2.96% regexp.compile

1195250000 1.31% 69.18% 1703500000 1.86% runtime.mallocgc

1159250000 1.27% 70.45% 1204500000 1.32% runtime.mapiternext

1112500000 1.22% 71.67% 1112500000 1.22% runtime.settls

1038500000 1.14% 72.80% 1038500000 1.14% sync.(*RWMutex).Unlock

912750000 1% 73.80% 912750000 1% runtime.mapassign_faststr

853000000 0.93% 74.73% 853000000 0.93% regexp.(*machine).step

840000000 0.92% 75.65% 869750000 0.95% sync.(*WaitGroup).Wait

814500000 0.89% 76.54% 1154750000 1.26% runtime.greyobject

780000000 0.85% 77.40% 780000000 0.85% runtime.largeAlloc

735000000 0.8% 78.20% 735000000 0.8% [statsd_exporter]

598750000 0.65% 78.86% 598750000 0.65% runtime.(*itabTableType).(runtime.add)-fm

588500000 0.64% 79.50% 593250000 0.65% runtime.heapBitsSetType

576750000 0.63% 80.13% 576750000 0.63% regexp.makeOnePass.func1

513250000 0.56% 80.69% 513250000 0.56% [[vdso]]

495250000 0.54% 81.23% 515000000 0.56% crypto/cipher.NewCTR

491000000 0.54% 81.77% 495500000 0.54% runtime.mapdelete_fast32

480750000 0.53% 82.30% 480750000 0.53% runtime.memmove

Go 1.10.3 definitely had a better performance in the framework itself and gave us 19 percent CPU time in system calls and 40 percent CPU time in the regular expression engine.

Finding a Solution

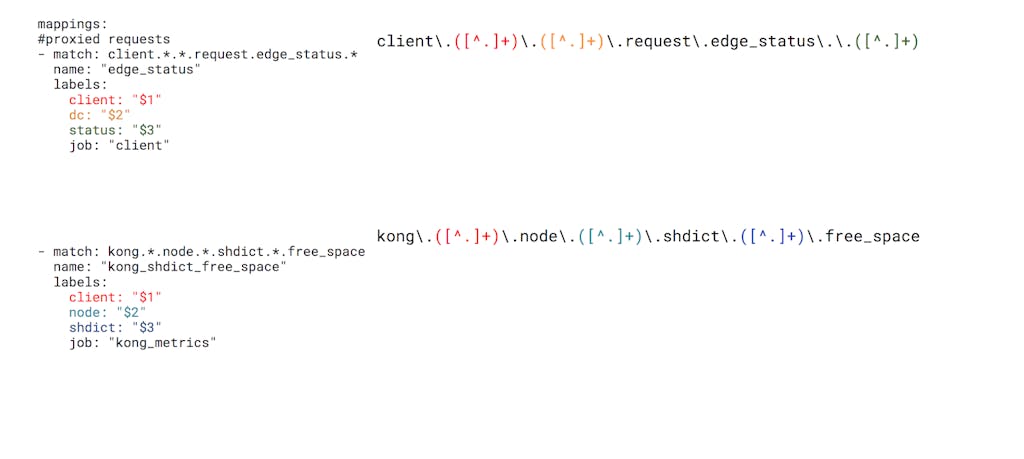

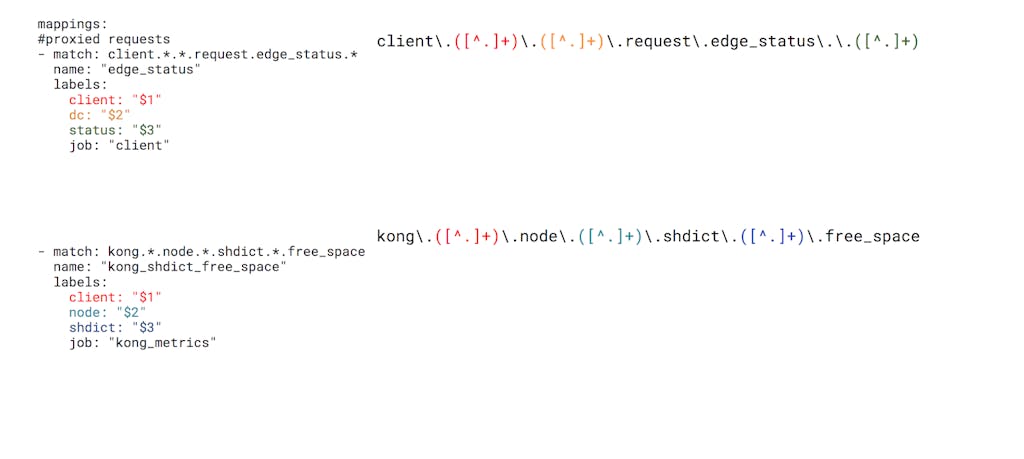

Since the largest portion of CPU was used in regular expression matching, we started to look into the source code of the StatsD exporter. The exporter uses regular expression to match rules and expand labels from matching groups. For example, the mapping config we see in the first section generates the following regular expressions:

All labels will be expanded using the regex.ExpandString function.

After reviewing the rules we used on Kong Cloud, it turned out that we didn’t actually need the full power of regular expressions because:

- We always use the

.*. pattern to match the whole field, which is separated by dot. There’s no use case in which we need to use complex expressions like host.*.status.\d+. - We always use only one capture group as a label. There’s no use case like

host: "$1_$2".

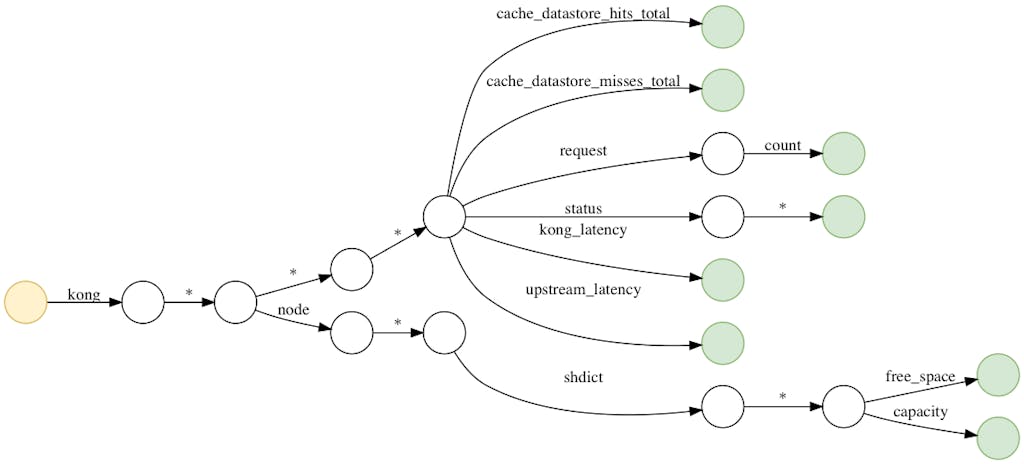

Based on this observation, we started to refactor the StatsD exporter with a light-weight matcher with limited features that was just enough to suit our use cases. Then we implemented a simple matching type in addition to the glob and regex matching type using a finite state machine to mock the behaviour of regular expression matching.

Simple Matcher Preparation

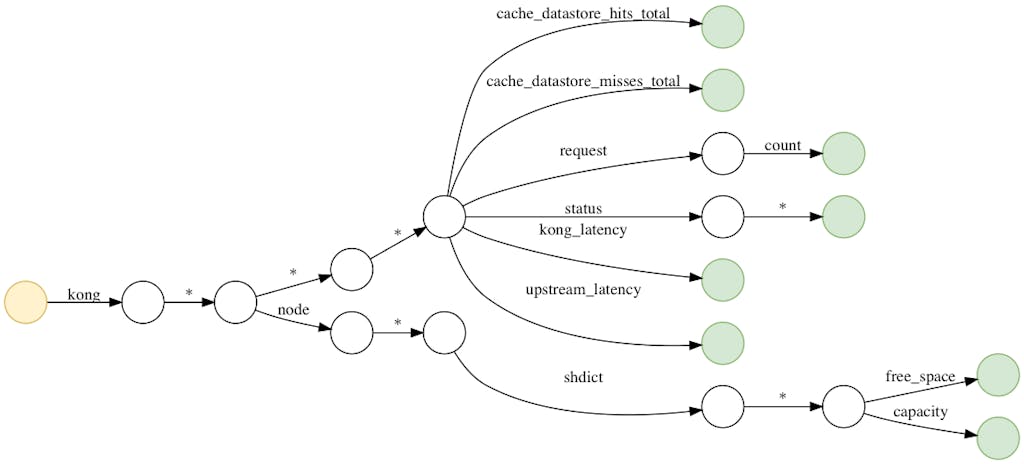

Every time the mapper rule is reloaded, the exporter will build a state machine following these steps:

- Read rules from yaml and split each rule by dot.

- Build a state machine using each split field. Each field represents a state in the state machine. For example, the following rules–

— will build a state machine as follows:

- For labels, replace regex expansion variables with `%s` and record the regex variable stored in struct labelFormatter. For example,

client_$1 becomes:

Simple matcher matching

- When a StatsD event comes in, split each event with dot.

- For each split field, do a traversal through the state machine. Each lookup of the next transition in state uses the go

map structure, thus is O(1) time complexity each time. - If the current transition state is a *, also put the current field to an array.

- * always matches but has a lower priority than exact match. For example, client.abc will always match rule client.abc before client.* regardless of the order of occurrence in the statsd.rules file.

- If the state ends in a matched state and there’s no split field left, this is a successful match. o to step 4; otherwise fall back to glob or regex matching.

- Format labels using the captured groups stored in the array.

- Return the matched rule and the formatted labels to the exporter and go to step 1.

We reran the perf and pprof profiling again with the workload with simple matching type enabled and received the following:

(pprof) top 100

Showing nodes accounting for 60068250000, 71.04% of 84558750000 total

Dropped 480 nodes (cum <= 422793750)

flat flat% sum% cum cum%

25372500000 30.01% 30.01% 25372500000 30.01% [[kernel.kallsyms]]

3718750000 4.40% 34.40% 3718750000 4.40% runtime.memmove

2691000000 3.18% 37.59% 4659000000 5.51% runtime.mallocgc

2037750000 2.41% 40.00% 2161500000 2.56% runtime.mapassign_faststr

2019000000 2.39% 42.38% 2166500000 2.56% runtime.heapBitsSetType

1964500000 2.32% 44.71% 2003500000 2.37% runtime.mapiternext

1556250000 1.84% 46.55% 1556250000 1.84% runtime.nextFreeFast (inline)

1369000000 1.62% 48.17% 1587750000 1.88% runtime.scanobject

1263000000 1.49% 49.66% 1263000000 1.49% github.com/beorn7/perks/quantile.NewTargeted.func1

1258750000 1.49% 51.15% 1848750000 2.19% regexp.(*machine).tryBacktrack

1255000000 1.48% 52.63% 1255000000 1.48% runtime.memclrNoHeapPointers

1217250000 1.44% 54.07% 1255250000 1.48% runtime.mapaccess2_faststr

1145000000 1.35% 55.43% 1145000000 1.35% [[vdso]]

1063500000 1.26% 56.68% 1063500000 1.26% runtime.aeshashbody

940500000 1.11% 57.80% 940500000 1.11% main.(*metricMapper).getMapping

870250000 1.03% 58.83% 870250000 1.03% regexp.(*inputString).step

802500000 0.95% 59.77% 802500000 0.95% regexp/syntax.(*Inst).MatchRunePos

796750000 0.94% 60.72% 815750000 0.96% runtime.mapaccess1_faststr

788250000 0.93% 61.65% 788250000 0.93% github.com/prometheus/common/model.hashAdd

784250000 0.93% 62.58% 784250000 0.93% main.(*Exporter).Listen

696750000 0.82% 63.40% 749750000 0.89% runtime.heapBitsForObject

695000000 0.82% 64.22% 695000000 0.82% strings.genSplit

675000000 0.8% 65.02% 675000000 0.8% syscall.Syscall6

615750000 0.73% 65.75% 615750000 0.73% regexp.(*machine).backtrack

560000000 0.66% 66.41% 603750000 0.71% github.com/prometheus/common/model.LabelsToSignature

521500000 0.62% 67.03% 521500000 0.62% runtime.unlock

510750000 0.6% 67.63% 510750000 0.6% runtime.lock

482250000 0.57% 68.20% 588250000 0.7% runtime.mapiterinit

468250000 0.55% 68.76% 468250000 0.55% github.com/beorn7/perks/quantile.(*stream).merge

454250000 0.54% 69.29% 454250000 0.54% unicode/utf8.ValidString

427500000 0.51% 69.80% 427500000 0.51% runtime.indexbytebody

374500000 0.44% 70.24% 428250000 0.51% runtime.growslice

337500000 0.4% 70.64% 590000000 0.7% regexp.(*bitState).push (inline)

335500000 0.4% 71.04% 445000000 0.53% runtime.greyobject

StatsD Exporter versionSyscall CPU percentage (prorated)Time taken to finish 100000 mapping iterationsStock Binary20.36%N/AGo 1.10.323.39%1.655sOur Version42.23%1.003s

+19%-39%

The CPU percentage in Syscall is larger the better under the same workload.

Now, we have less than five percent of CPU time spent in Go's regular expression library. Those are the functions called in Prometheus client_golang library.

We also ran a test which iterated the matching function only for 100,000 times, and we had 40 percent less time compared to glob matching type. If glob matching is not used as a fallback when simple matching can’t find a match and is completely removed, we had 60 percent time less spent in iteration.

Simple Matcher Caveats

There are a few caveats with simple matcher:

- Rules that need backtracking:

client.*.request.size.*

client.*.*.size.*

The above will fail to match if the event is client.aaa.response.size.100

Correct rules are:

client.*.response.status.*

client.*.response.size.*

- Rules that depend on the order of occurrence in statsd.rules (possible to use array to trade with performance if needed)

- Labels that have multiple regex expansion variables (possible to support if needed)

Improvements for the Future

After optimizing overhead introduced by the Go regex engine, most of the CPU time is now spent in system calls and Go runtime. On Kong Cloud, we use the StatsD Advanced Plugin to batch UDP packets for each request to significantly reduce the amount of system calls. This benefits both the sender(Kong instances) and the receiver(the StatsD exporter).

Kong Cloud delivers faster innovation through zero-touch, automatic updates and cloud-speed cadence of new product functionality. It offers the unique flexibility of running on any cloud option, including AWS, Azure and Google Cloud. Kong Cloud is currently in beta and will be available to the general public soon. To learn more about Kong Cloud, sign up for product updates at https://konghq.com/cloud/.

Update: Contribution Shipped!

This work is included as a major enhancement in the Prometheus StatsD Exporter as of v0.8.0-rc1, which was released on October 10th, 2018. Check it out here!