The service mesh architecture pattern has become a de facto standard for microservices-based projects. From the mesh standpoint, every component of an application should be under its control: not just microservices, but also databases, event processing services, and so on.

It's critical to analyze end-to-end service mesh infrastructure from two main perspectives:

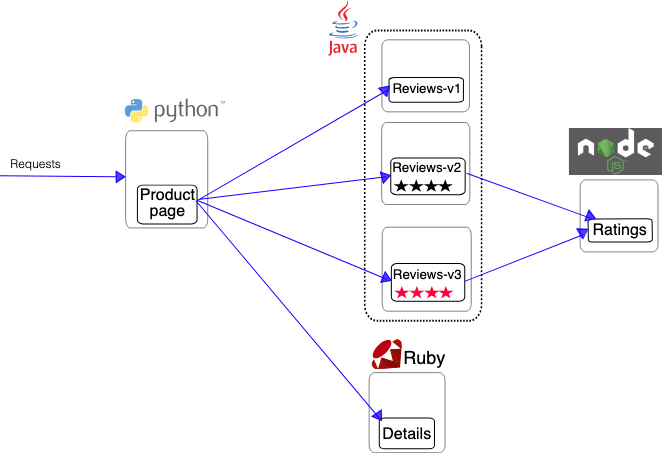

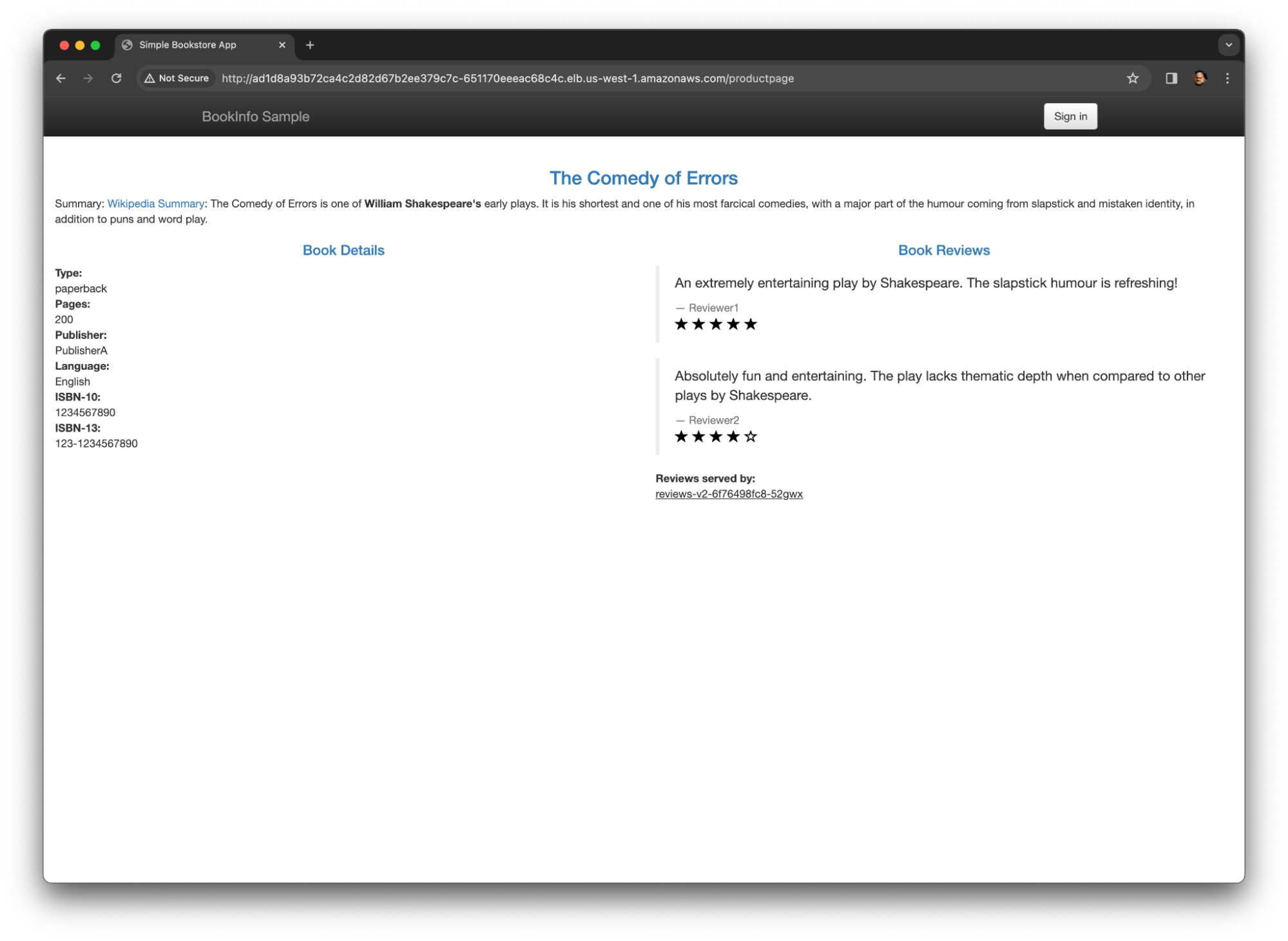

- The traffic within an application: Also called east-west traffic, this is the main purpose of a service mesh implementation. We should be able to apply policies that define how the mesh components talk to each other, covering security as well as traffic control, observability, and more.

- The service mesh exposure: Typically, the mesh components and the communication among them are protected from external consumers. However, we have to expose at least one component so the mesh can be consumed. That's the role of a specific mesh component responsible for the north-south ingress traffic. Beyond exposing the mesh, which is its natural purpose, this ingress component also implements the policies that belong in this layer, such as consumer authentication mechanisms (e.g., API key, OIDC, mTLS), request throttling, and mesh consumption metrics.

In this blog post, we’ll present and describe a service mesh reference architecture based on Red Hat and Kong technologies and products, where the main actors, Istio Service Mesh and Kong Ingress Controller, run on a Red Hat OpenShift Container Platform (OCP) Cluster.

Red Hat OpenShift Container Platform (OCP) is one of the most robust platforms available today for implementing and deploying applications and service meshes.

Based on Kubernetes, Red Hat OCP provides a trusted, comprehensive, and consistent application platform for hybrid cloud that is capable of running single or multi-cluster service meshes. Below, we will detail the implementation of Kong technologies, Konnect, and KIC with Red Hat OpenShift for building modern applications.

Following the two perspectives described above, the service mesh infrastructure on top of Red Hat OCP consists of two products:

- Red Hat OpenShift Service Mesh: Based on Istio Service Mesh, this implements the actual service mesh and provides the required functions, such as monitoring, tracing, circuit breakers, load balancing, access control, and more.

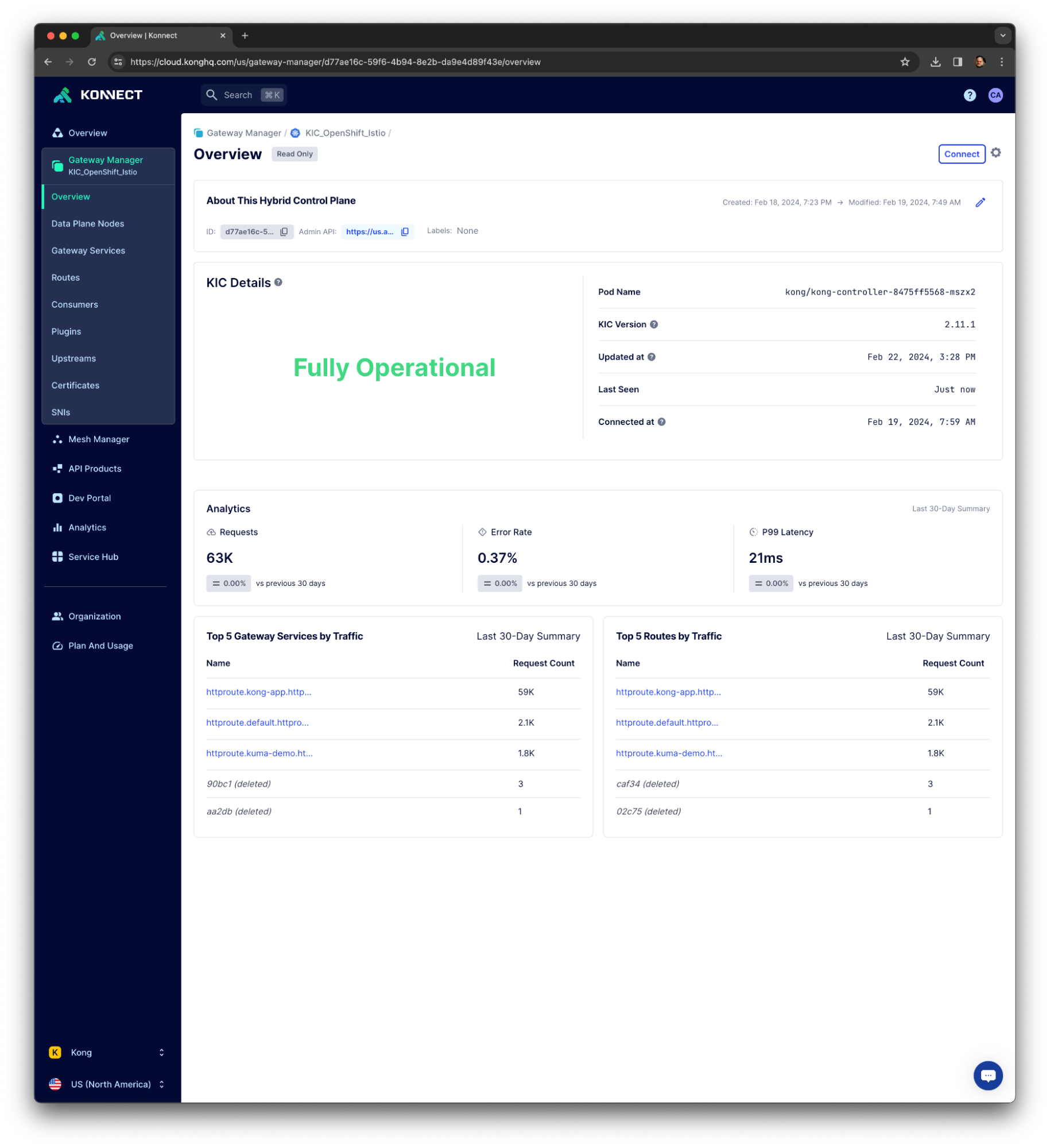

- Kong Ingress Controller (KIC): Also considered a service mesh component, this exposes the Istio Service Mesh with an extensive collection of policies like authentication, request transformation, response caching, rate limiting, traffic monitoring, logging, and tracing.
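
To make the split between the two products more concrete, here is a minimal sketch in Python that builds one policy for each traffic direction as a plain Kubernetes manifest: an Istio `PeerAuthentication` requiring mTLS for east-west traffic, and a `KongPlugin` with the `rate-limiting` plugin attached to an `Ingress` for north-south traffic. The namespace `demo`, the service `web`, port `8080`, the resource names, and the limit of 5 requests per minute are all hypothetical, and the API versions shown are an assumption that may differ depending on the product versions installed on your cluster.

```python
import json

# Hypothetical values, used only for illustration.
NAMESPACE = "demo"
SERVICE_NAME = "web"
SERVICE_PORT = 8080


def peer_authentication(namespace: str) -> dict:
    """East-west policy: require mTLS between workloads in the namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


def rate_limit_plugin(namespace: str, per_minute: int) -> dict:
    """North-south policy: throttle external consumers at the ingress."""
    return {
        "apiVersion": "configuration.konghq.com/v1",
        "kind": "KongPlugin",
        "metadata": {"name": "rate-limit-demo", "namespace": namespace},
        "plugin": "rate-limiting",
        "config": {"minute": per_minute, "policy": "local"},
    }


def ingress(namespace: str, service: str, port: int, plugin_name: str) -> dict:
    """Expose one mesh service through KIC and attach the plugin by annotation."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{service}-ingress",
            "namespace": namespace,
            "annotations": {"konghq.com/plugins": plugin_name},
        },
        "spec": {
            "ingressClassName": "kong",
            "rules": [
                {
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service,
                                        "port": {"number": port},
                                    }
                                },
                            }
                        ]
                    }
                }
            ],
        },
    }


def build_manifests() -> dict:
    """Bundle the three objects into a single Kubernetes List."""
    plugin = rate_limit_plugin(NAMESPACE, per_minute=5)
    items = [
        peer_authentication(NAMESPACE),
        plugin,
        ingress(NAMESPACE, SERVICE_NAME, SERVICE_PORT, plugin["metadata"]["name"]),
    ]
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(build_manifests(), indent=2))
```

Because the script prints JSON, its output could be reviewed and then piped to `oc apply -f -`. The point of the sketch is the division of labor: the mesh policy never mentions external consumers, and the ingress policy never mentions service-to-service traffic.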

We should also consider two more layers implemented by:

- Keycloak: Integrated with the KIC gateway, it plays the Identity Provider role, externalizing the OIDC-based authentication and authorization of external consumers.

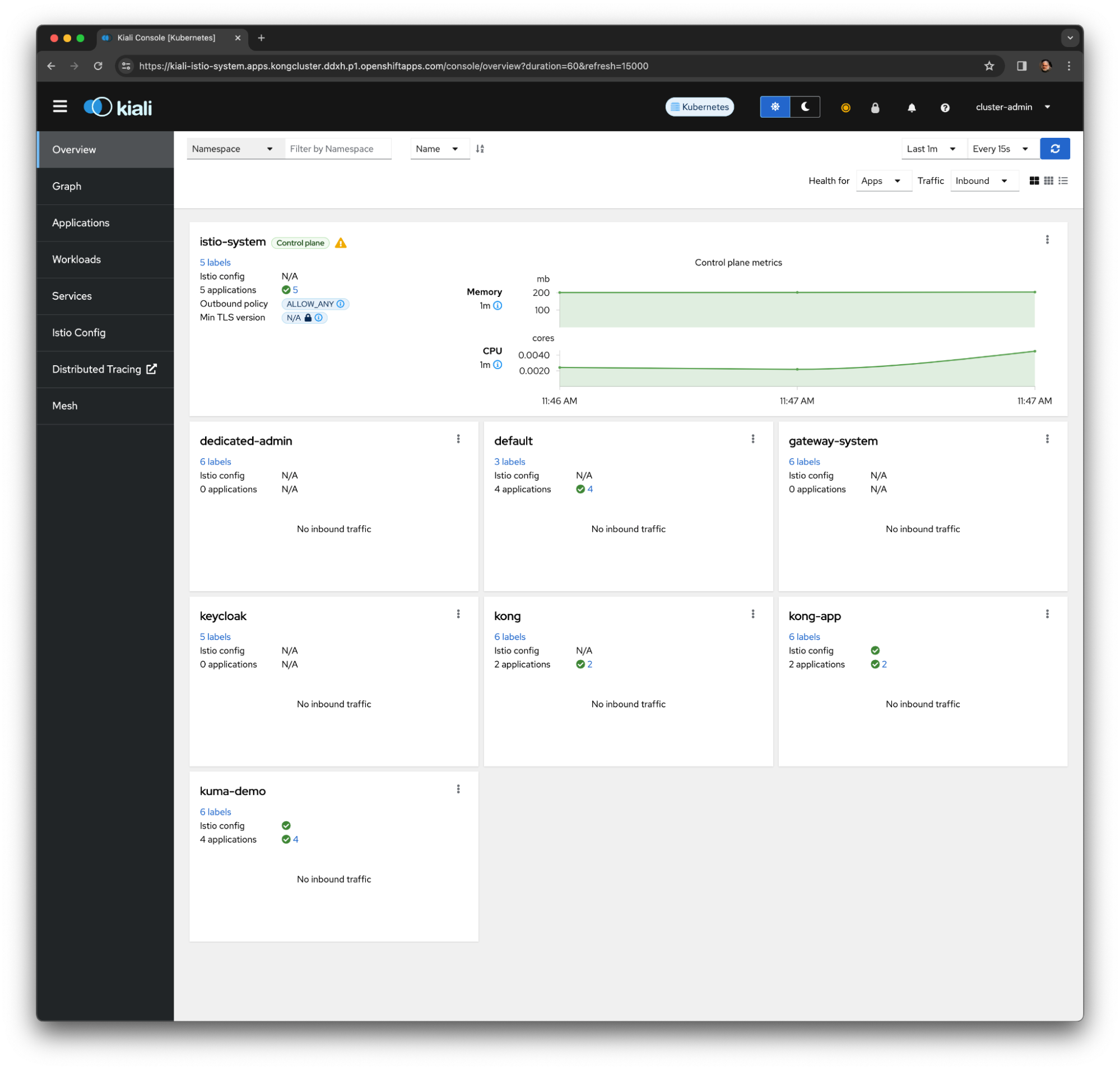

- Kiali: The monitoring and management console for the Istio Service Mesh. Kiali uses Prometheus and Grafana to generate the topology graph, show metrics, calculate health, offer advanced metrics queries, and more.
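
As a rough illustration of the kind of metrics query that can be run against the Prometheus instance behind such a console, the sketch below builds a PromQL expression over Istio's standard `istio_requests_total` metric and the corresponding Prometheus HTTP API URL. The Prometheus address and the service host name are hypothetical, and this is not a description of the exact queries Kiali itself issues.

```python
from urllib.parse import urlencode

# Hypothetical Prometheus endpoint, used only for illustration.
PROMETHEUS_URL = "http://prometheus.example:9090"


def request_rate_query(destination_service: str, window: str = "5m") -> str:
    """PromQL: per-second request rate to a service, grouped by response code."""
    selector = f'reporter="destination",destination_service="{destination_service}"'
    return (
        "sum(rate(istio_requests_total{" + selector + "}[" + window + "]))"
        " by (response_code)"
    )


def instant_query_url(base_url: str, promql: str) -> str:
    """URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url}/api/v1/query?{urlencode({'query': promql})}"


if __name__ == "__main__":
    query = request_rate_query("web.demo.svc.cluster.local")
    print(query)
    print(instant_query_url(PROMETHEUS_URL, query))
```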

The figure below illustrates the reference architecture: