Why Tracing?

Kong offers a large number of logging plugins, which might be enough for your needs. However, they have certain limitations:

Most of them only work on HTTP/HTTPS traffic.

They make sense in an API gateway scenario, with a single Kong cluster proxying traffic between consumers and services. Each log line will generally correspond to a request which is "independent" from the rest. However, in more complex scenarios, such as a service mesh where a single request can span multiple internal requests and responses between several entities before the consumer gets an answer, log lines are not "independent" anymore.

The format in which log lines are saved is useful for security and compliance, as well as for debugging certain kinds of problems, but it may not be useful for other kinds. This is because logging plugins tend to store a single log line per request, as unstructured text. Extracting and filtering information from those lines (for debugging performance problems, for example) can be quite laborious.

Tracing With Zipkin

Zipkin is a tracing server, specialized in gathering timing data. Used in conjunction with Kong's Zipkin plugin, it can address the points above:

It is compatible with both HTTP/HTTPS and stream traffic.

Traffic originating from each request is grouped under the "trace" abstraction, even when it travels through several Kong instances.

Each request produces a series of one or more "spans." Spans are very useful for debugging timing-related problems, especially when the ones belonging to the same trace are presented grouped together.

Information is thus gathered in a more structured way than with other logging plugins, requiring less parsing.

The trace abstraction can also be used to debug problems that occur in multi-service environments when requests "hop" over several elements of the infrastructure; they can easily be grouped under the same trace.
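To make the trace and span abstractions concrete, here is a minimal Python sketch of how spans can be grouped under their trace. The `Span` class, its field names and the sample values are hypothetical and only serve as illustration; they are not the data model used by Kong or Zipkin.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Span:
    trace_id: str     # shared by every span that belongs to the same trace
    span_id: str      # unique per span
    name: str
    start_us: int     # start time in microseconds
    duration_us: int  # elapsed time in microseconds


def group_by_trace(spans: List[Span]) -> Dict[str, List[Span]]:
    """Collect spans under their trace ID, ordered by start time."""
    traces: Dict[str, List[Span]] = defaultdict(list)
    for span in spans:
        traces[span.trace_id].append(span)
    for trace_spans in traces.values():
        trace_spans.sort(key=lambda s: s.start_us)
    return dict(traces)


def trace_duration_us(spans: List[Span]) -> int:
    """Time covered by a trace: earliest span start to latest span end."""
    start = min(s.start_us for s in spans)
    end = max(s.start_us + s.duration_us for s in spans)
    return end - start


# Hypothetical spans: one request with a single span, one with two spans.
spans = [
    Span("aaaa", "1", "proxy", 1_000, 500),
    Span("bbbb", "3", "proxy", 2_100, 600),
    Span("bbbb", "2", "request", 2_000, 900),
]
traces = group_by_trace(spans)
for trace_id, trace_spans in traces.items():
    names = [s.name for s in trace_spans]
    print(trace_id, names, trace_duration_us(trace_spans), "us")
```

Grouping by trace ID is what lets spans produced by different Kong instances, or by different services, be displayed together as a single request.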

Setup

For a basic setup, you only need Docker and Docker Compose. Here's the docker-compose.yml file that we will use:

This file declares four Docker containers: a Zipkin server, a simple HTTP Echo service, a TCP Echo service and Kong itself. Given that the Kong instance that we are creating is db-less, it needs a declarative configuration file. It should be called kong.yml and be in the same folder as docker-compose.yml:
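As a rough illustration of what such a declarative configuration contains, the following Python sketch builds a minimal one as a dictionary and prints it as JSON (Kong's declarative format can be written as YAML or JSON, and the structure is the same in both). Only the service name `echo_service` comes from this article. The route name, the host names `zipkin` and `echo_server`, and the sampling ratio are assumptions made for the example, and the TCP service is left out entirely.

```python
import json


def build_kong_config(zipkin_endpoint: str, echo_url: str) -> dict:
    """Build a minimal declarative configuration with a global Zipkin plugin."""
    return {
        "_format_version": "1.1",
        "services": [
            {
                "name": "echo_service",
                "url": echo_url,
                # Hypothetical route: proxy every path to the echo service.
                "routes": [{"name": "echo_route", "paths": ["/"]}],
            }
        ],
        "plugins": [
            {
                "name": "zipkin",
                "config": {
                    # Where the plugin reports spans (Zipkin's v2 collector path).
                    "http_endpoint": zipkin_endpoint,
                    # Assumed value: trace every request, which suits a demo.
                    "sample_ratio": 1,
                },
            }
        ],
    }


# The host names below are assumed docker-compose service names.
config = build_kong_config(
    zipkin_endpoint="http://zipkin:9411/api/v2/spans",
    echo_url="http://echo_server:8080",
)
print(json.dumps(config, indent=2))
```

Declaring the plugin at the top level, rather than under a service or route, applies it to all traffic that goes through Kong.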

We should be able to start the four containers with:

Leave the console running and open a new one.

Infrastructure Testing

Let's test that all services are up and running.

First, open http://localhost:9411/zipkin/ with a browser. You should see the Zipkin web UI, proving that Zipkin is up and running.

The following steps are done with httpie:

That's a call to Kong's Admin API (port 8001) using httpie. You should see a single service called echo_service. It indicates that Kong is up.

That's a straight GET request to the echo server (port 8080) using httpie. It should return HTTP/1.1 200 OK, indicating that the echo server is answering requests.

That's a straight TCP connection to the TCP echo server (port 9000). It should answer "hello world." You might need to close the connection with CTRL-C.
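If you prefer a scripted check, the following Python sketch tests whether each port accepts TCP connections. It only proves that something is listening, not that the service behaves correctly. The host `localhost` and the `ENDPOINTS` table are assumptions based on this setup (8001 and 8000 are Kong's default Admin API and proxy ports).

```python
import socket

# Ports for the services in this setup; adjust them if your compose file differs.
ENDPOINTS = {
    "Zipkin UI": 9411,
    "Kong Admin API": 8001,
    "Kong proxy": 8000,
    "HTTP echo server": 8080,
    "TCP echo server": 9000,
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, ...
        return False


def check_all(host: str = "localhost") -> dict:
    """Check every known endpoint and report its state by name."""
    return {name: port_open(host, port) for name, port in ENDPOINTS.items()}


if __name__ == "__main__":
    for name, ok in check_all().items():
        print(f"{name:18} {'up' if ok else 'DOWN'}")
```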

Tracing HTTP Traffic Through Kong

Let's start tracing with this command:

That's a POST request to the HTTP echo server proxied through Kong's proxy port (8000). The received answer should be very similar to the previous one, but with added response headers like Via: kong/2.0.1. You should also see some of the headers that the Zipkin plugin added to the request, like x-b3-traceid.
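The x-b3-traceid header belongs to the B3 propagation format, in which a trace ID is 16 or 32 lowercase hexadecimal characters and a span ID is 16. The sketch below generates such headers and validates a trace ID. The function names are hypothetical, and this illustrates the format only; it is not the plugin's own code.

```python
import re
import secrets


def new_b3_headers(sampled: bool = True) -> dict:
    """Create B3 headers for a brand-new trace."""
    return {
        "x-b3-traceid": secrets.token_hex(16),  # 128 bits = 32 hex characters
        "x-b3-spanid": secrets.token_hex(8),    # 64 bits = 16 hex characters
        "x-b3-sampled": "1" if sampled else "0",
    }


def is_valid_trace_id(trace_id: str) -> bool:
    """A B3 trace ID is 16 or 32 lowercase hexadecimal characters."""
    return re.fullmatch(r"[0-9a-f]{16}([0-9a-f]{16})?", trace_id) is not None


headers = new_b3_headers()
print(headers)
print(is_valid_trace_id(headers["x-b3-traceid"]))
```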

Let's generate some more traffic:

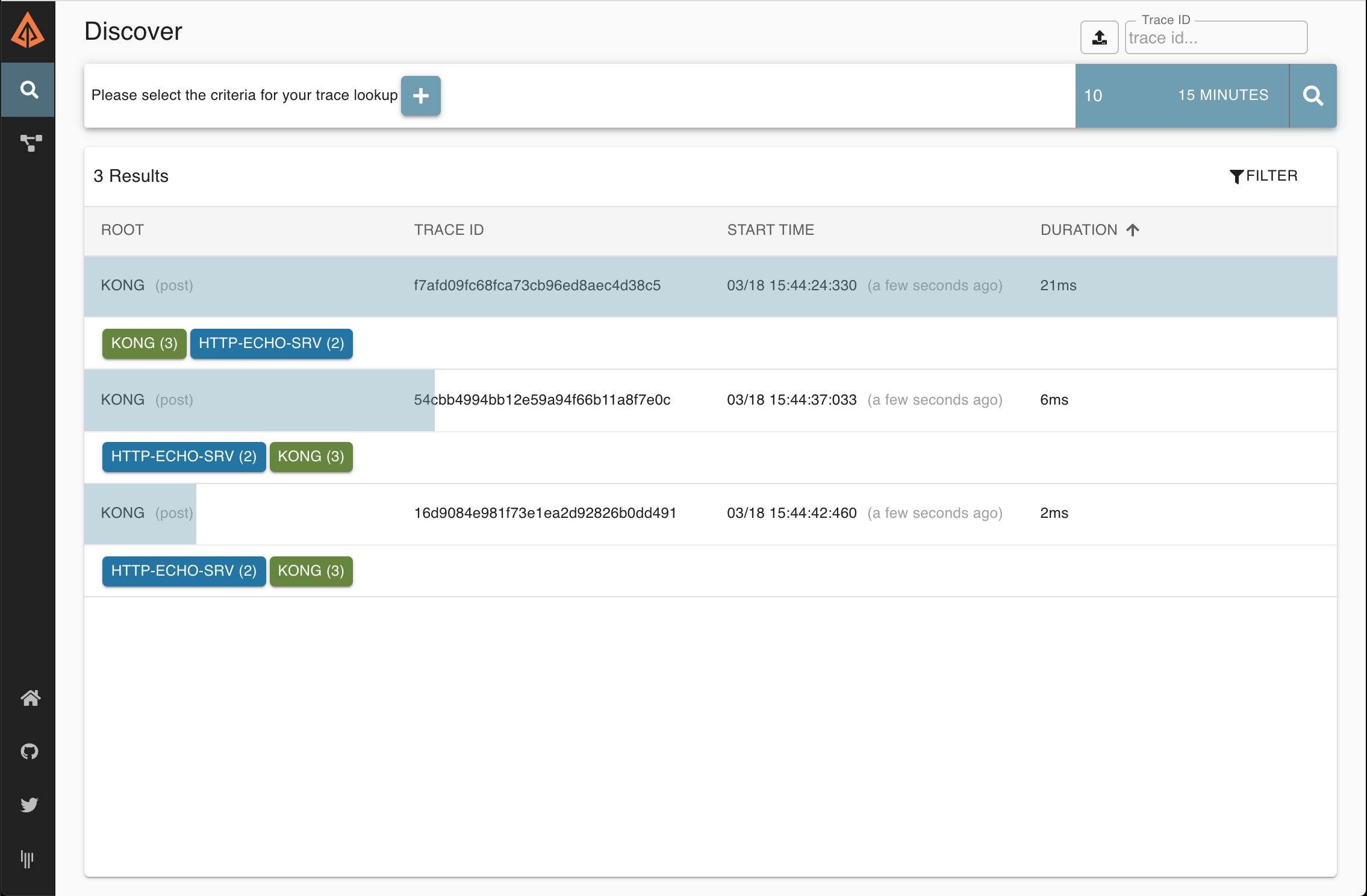

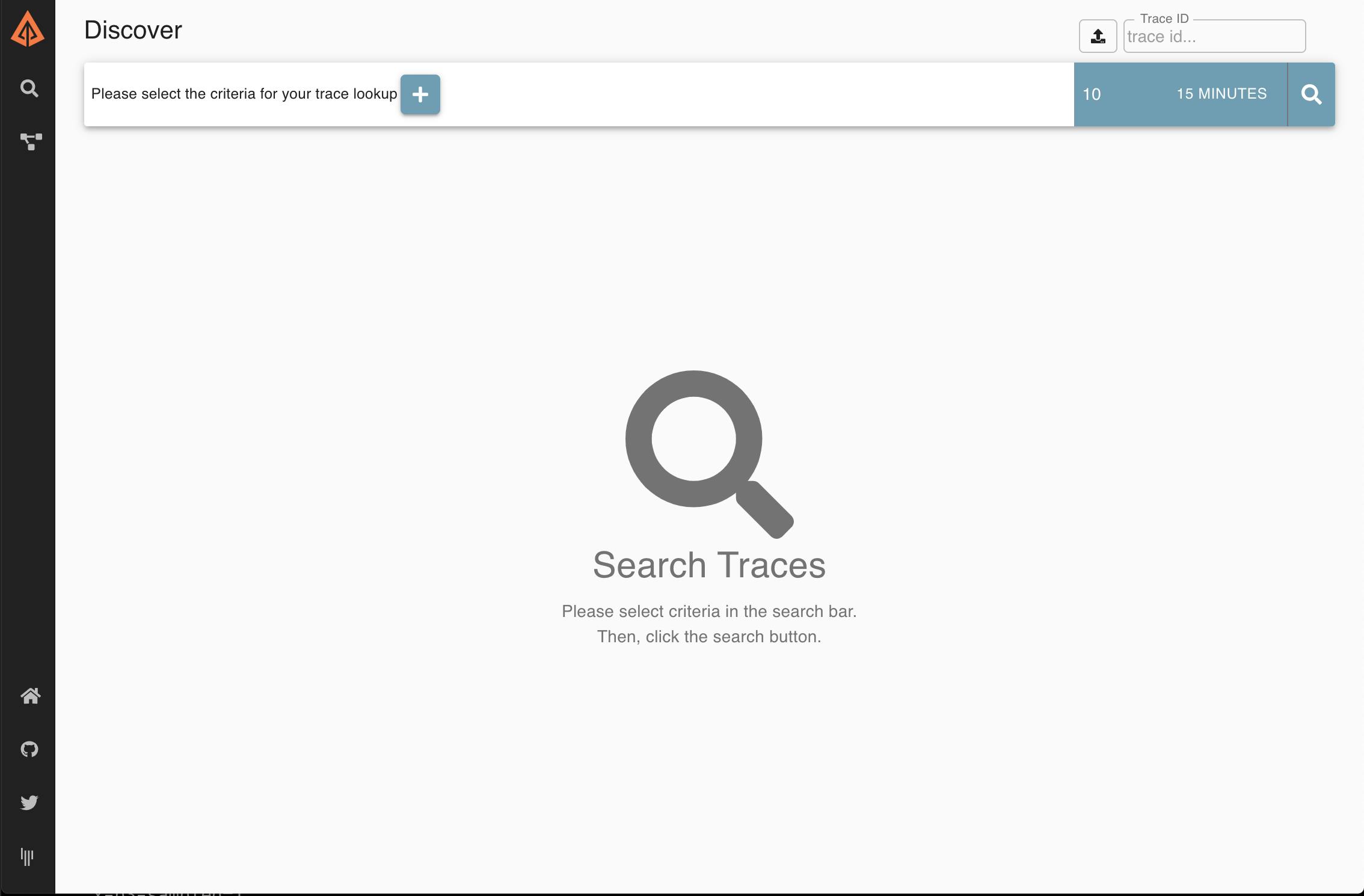

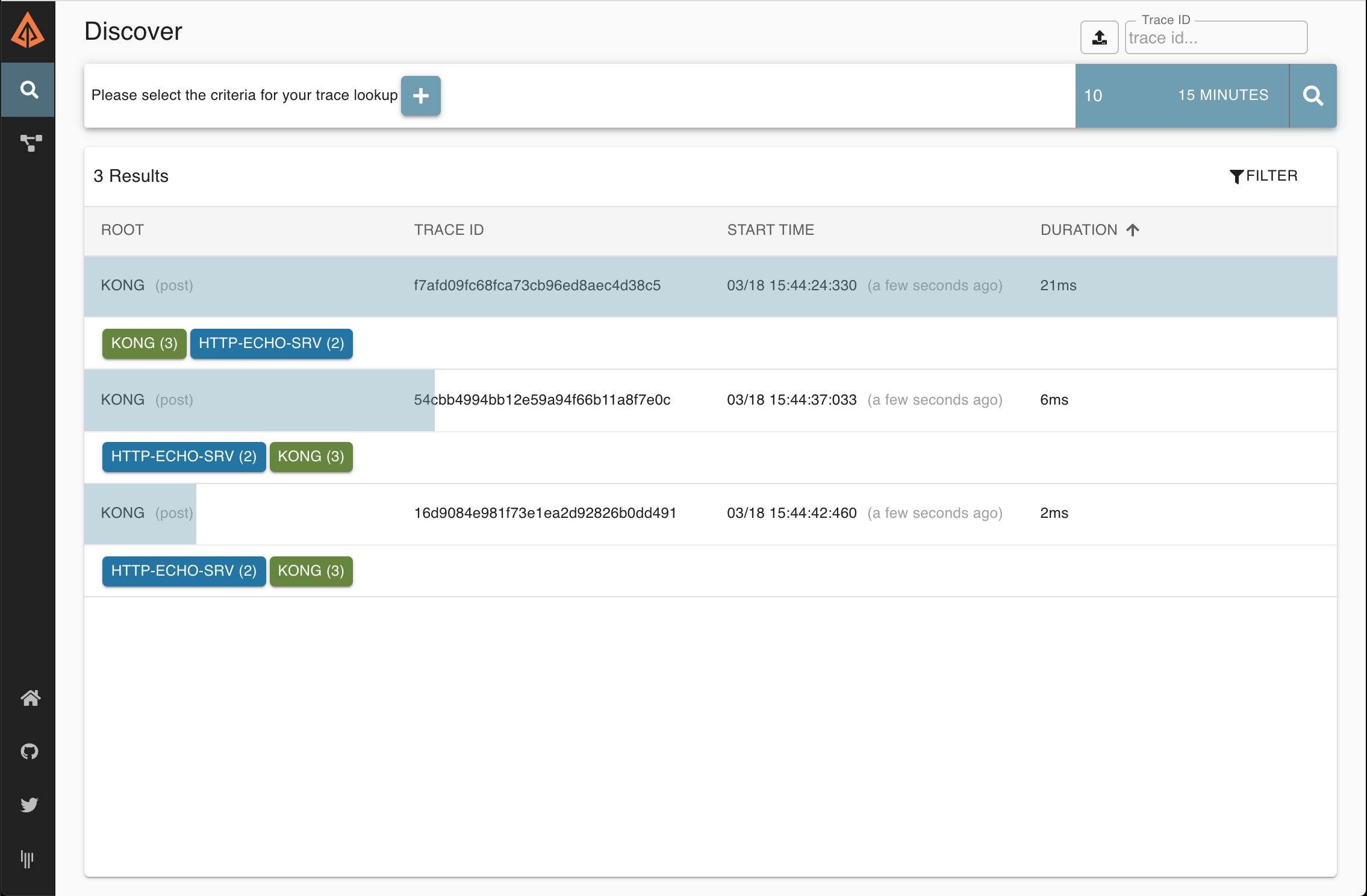

Now go back to the Zipkin UI in your browser (http://localhost:9411/zipkin/) and click on the magnifying glass icon (🔎). You might need to click on Try new Lens UI first. You should be able to see each request as a series of spans.

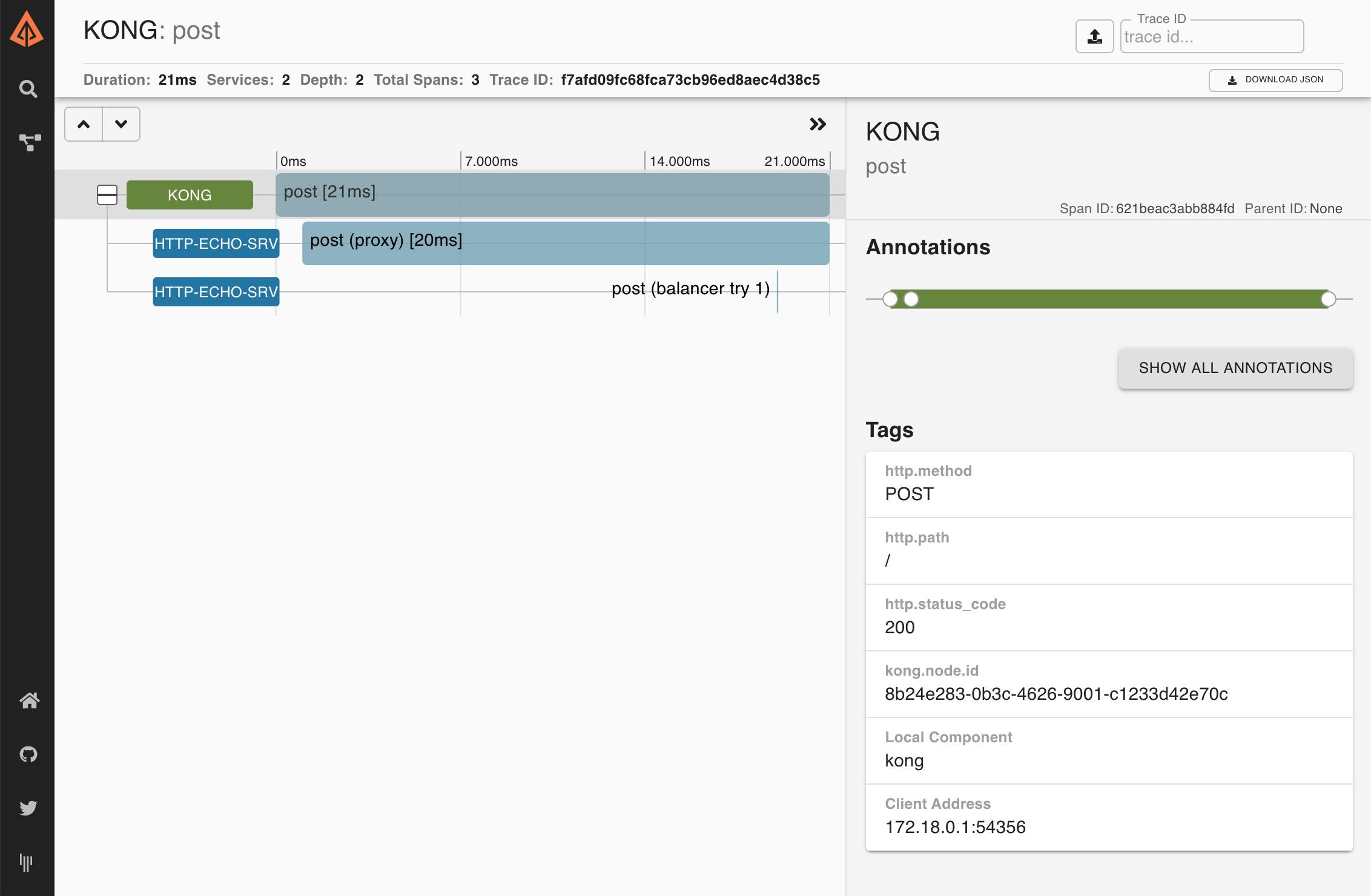

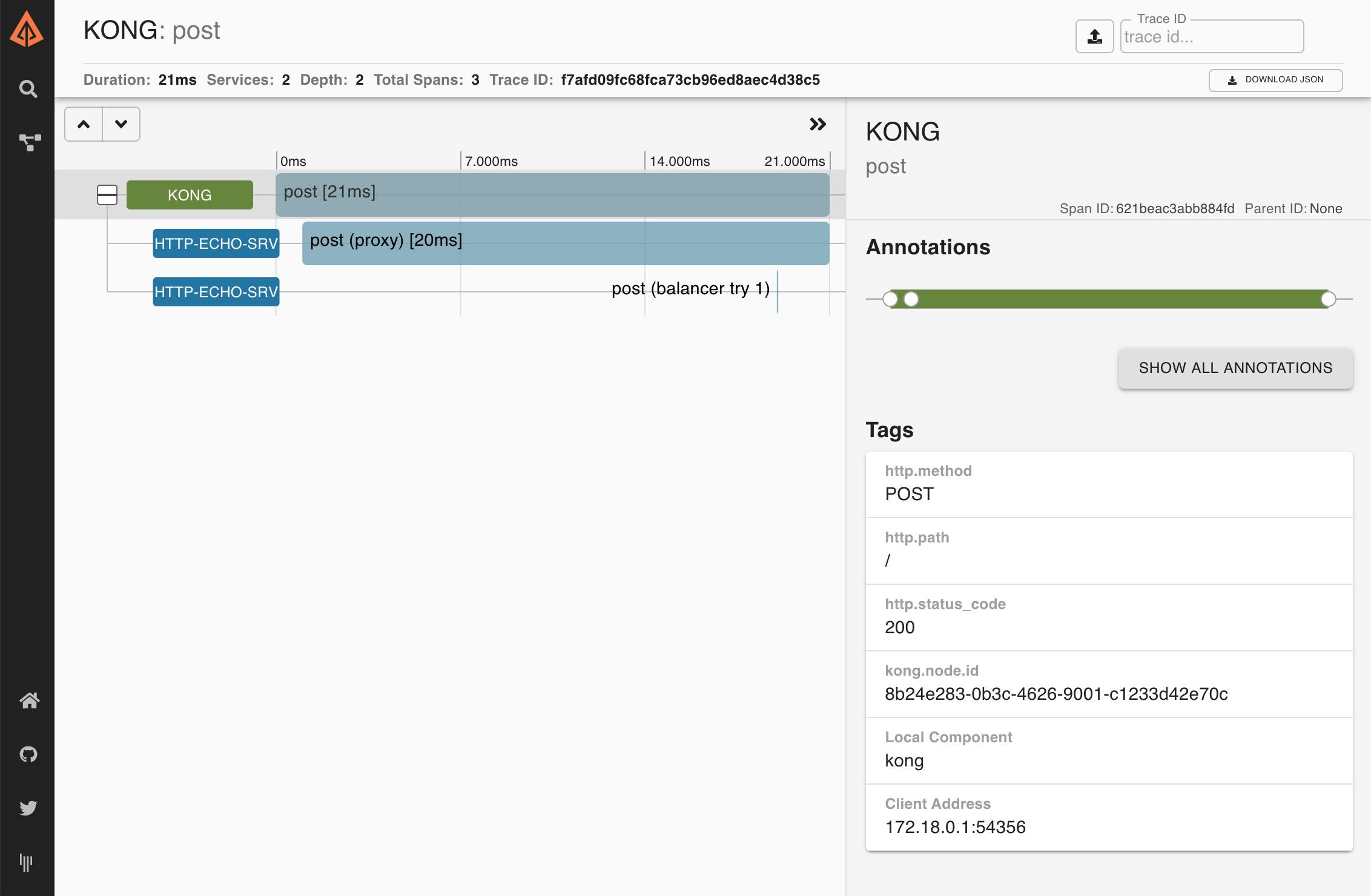

The Zipkin UI should display one trace per HTTP request. You may click on each individual trace to see more details, including information about how long each of the Kong phases took to execute:
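The same timing data can also be read programmatically. The sketch below assumes Zipkin's v2 HTTP API is reachable at http://localhost:9411, where `/api/v2/traces` returns a list of traces, each being a list of span objects with `name` and `duration` (in microseconds) fields. The helper names and the sample span names are hypothetical.

```python
import json
from urllib.request import urlopen


def fetch_traces(base_url: str = "http://localhost:9411", limit: int = 10) -> list:
    """Fetch the most recent traces from Zipkin's v2 API."""
    with urlopen(f"{base_url}/api/v2/traces?limit={limit}", timeout=5) as resp:
        return json.load(resp)


def span_timings(trace: list) -> list:
    """Return (span name, duration in ms) pairs for one trace, longest first."""
    timed = [(s.get("name", "?"), s.get("duration", 0) / 1000) for s in trace]
    return sorted(timed, key=lambda pair: pair[1], reverse=True)


# Hypothetical trace, shaped like one element of the API response.
sample_trace = [
    {"name": "proxy", "duration": 1500},
    {"name": "request", "duration": 4000},
]
print(span_timings(sample_trace))

# With the Zipkin container running, real data can be inspected like this:
# for trace in fetch_traces(limit=5):
#     print(span_timings(trace))
```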

This request is similar to the ones made before, but it includes an HTTP header called x-b3-traceid. This kind of header is used when a request is treated by several microservices and should remain unaltered when a request is passed around from one service to the other.

In this case, we can verify that Kong respects this convention by checking the headers returned in the response. One of them should be x-b3-traceid, carrying the same value that was sent in the request.

You should be able to see the trace in the Zipkin UI by following this link:

http://localhost:9411/zipkin/traces/12345678901234567890123456789012
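The convention can be sketched in a few lines of Python: each hop keeps the trace ID, creates a new span ID, and records the incoming span as its parent. The function names are hypothetical, header names are assumed to be lowercase dictionary keys, and this is an illustration of the B3 convention rather than Kong's implementation.

```python
import secrets


def next_hop_headers(incoming: dict) -> dict:
    """Build B3 headers for the next hop: same trace, new span."""
    outgoing = {
        "x-b3-traceid": incoming["x-b3-traceid"],      # must stay unaltered
        "x-b3-parentspanid": incoming["x-b3-spanid"],  # the caller's span
        "x-b3-spanid": secrets.token_hex(8),           # fresh 16-hex span ID
    }
    if "x-b3-sampled" in incoming:
        # The sampling decision is made once and then passed along as-is.
        outgoing["x-b3-sampled"] = incoming["x-b3-sampled"]
    return outgoing


def zipkin_trace_url(trace_id: str, base_url: str = "http://localhost:9411") -> str:
    """Link to a single trace in the Zipkin UI."""
    return f"{base_url}/zipkin/traces/{trace_id}"


incoming = {
    "x-b3-traceid": "12345678901234567890123456789012",
    "x-b3-spanid": "a" * 16,  # hypothetical span ID of the caller
    "x-b3-sampled": "1",
}
outgoing = next_hop_headers(incoming)
print(outgoing)
print(zipkin_trace_url(outgoing["x-b3-traceid"]))
```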

Our Zipkin plugin currently supports several of these tracing headers, and more are planned for future releases!

Tracing TCP Traffic Through Kong

This will open a TCP connection to our TCP Echo server with Netcat. Type a few names, pressing Enter after each one, to generate TCP traffic:

Press Ctrl-C to finish and close the connection. This is required for the next step.
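The same kind of traffic can be generated from a script. The sketch below sends a few lines over a TCP connection and collects the replies, assuming the server echoes each line back unchanged and sends no banner first. The function name is hypothetical, and the port to use depends on where Kong listens for stream traffic in your configuration.

```python
import socket


def send_lines(host: str, port: int, lines: list, timeout: float = 2.0) -> list:
    """Send each line over one TCP connection and return the echoed replies."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8", newline="\n") as reader:
            for line in lines:
                sock.sendall((line + "\n").encode("utf-8"))
                # Read one reply line and strip its trailing newline.
                replies.append(reader.readline().rstrip("\n"))
    # Leaving the "with" blocks closes the connection, like Ctrl-C in Netcat.
    return replies


# Example, with the stack running (replace the port with your stream port):
# print(send_lines("localhost", 9000, ["alice", "bob"]))
```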

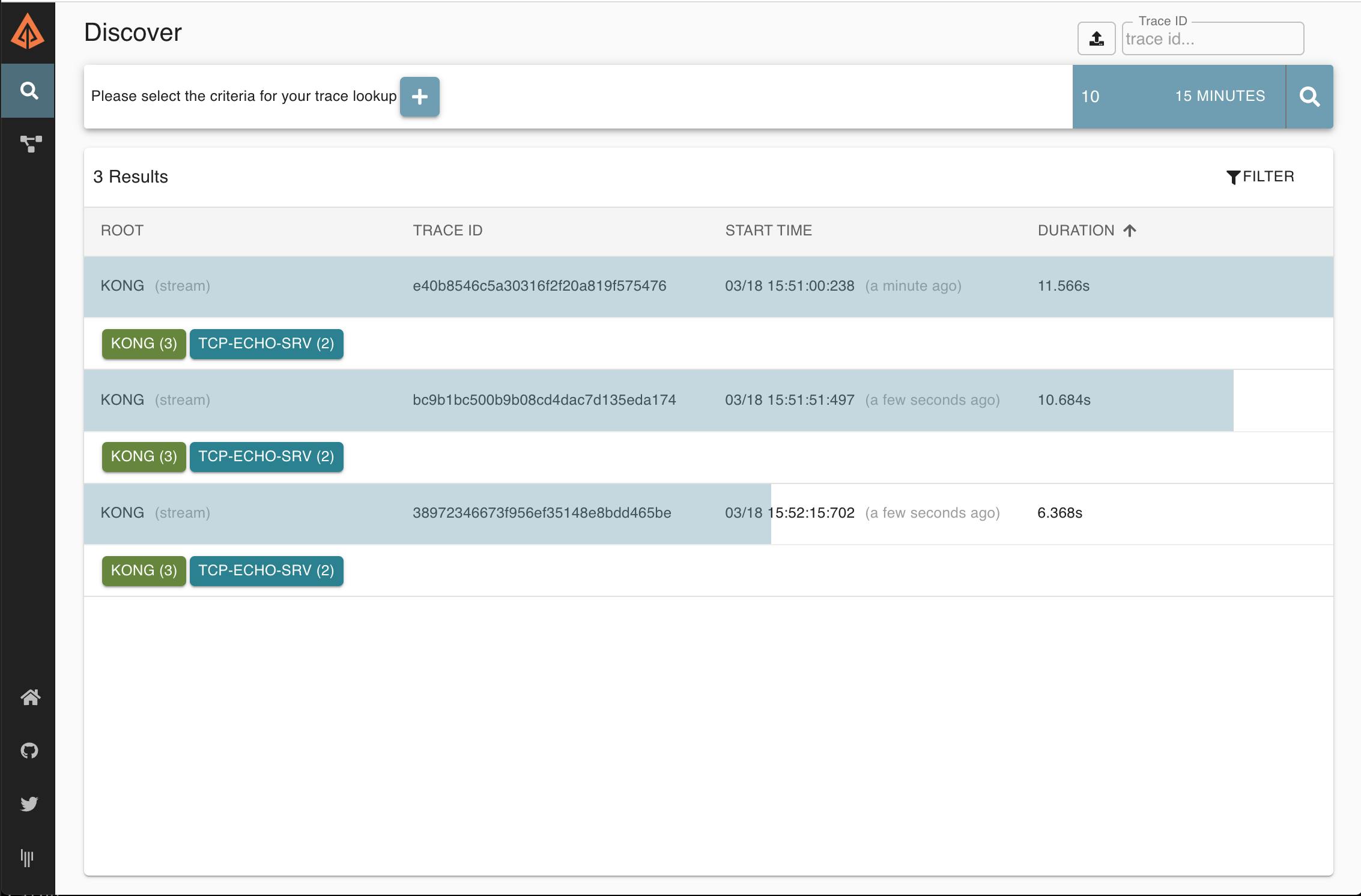

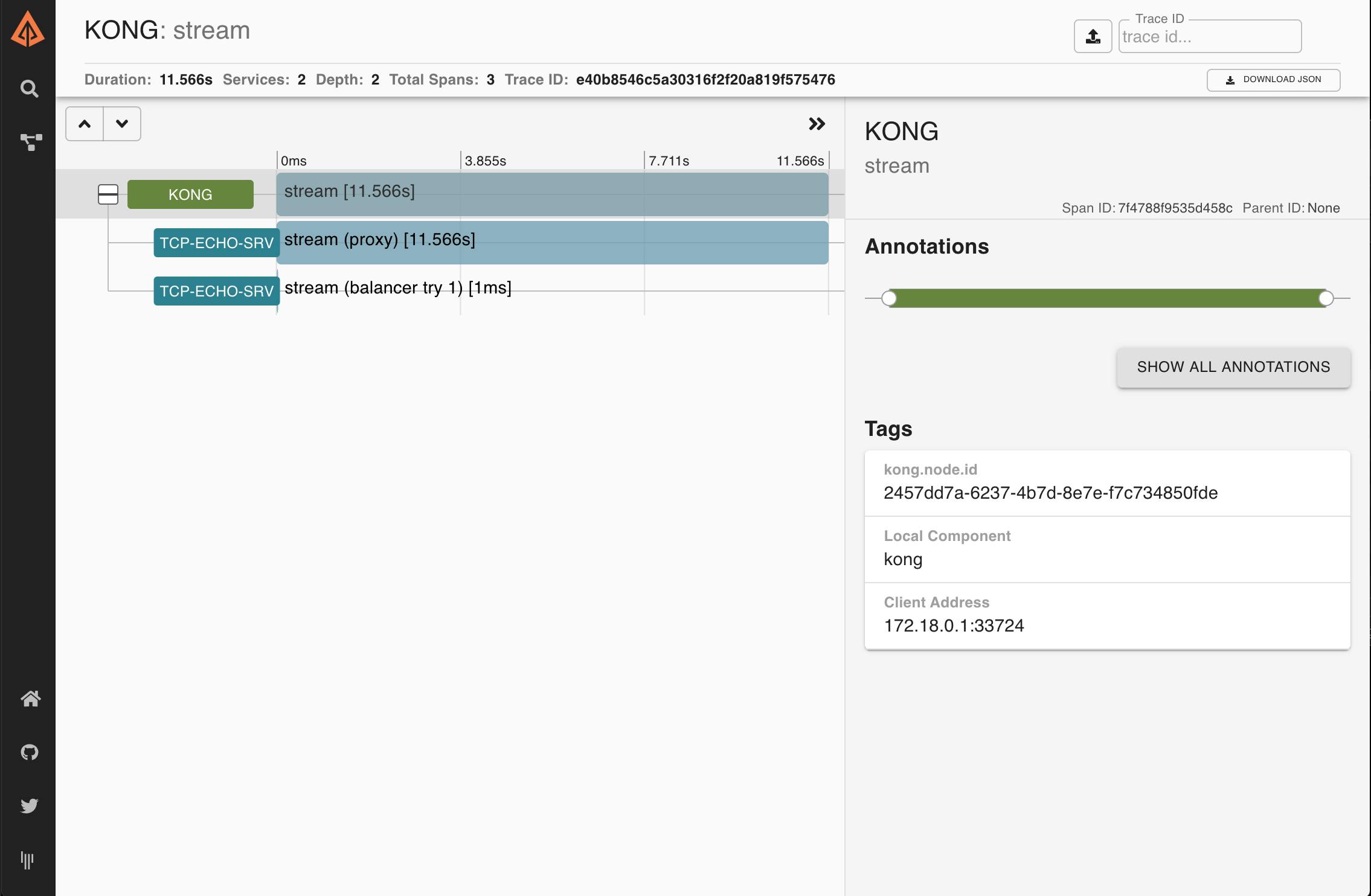

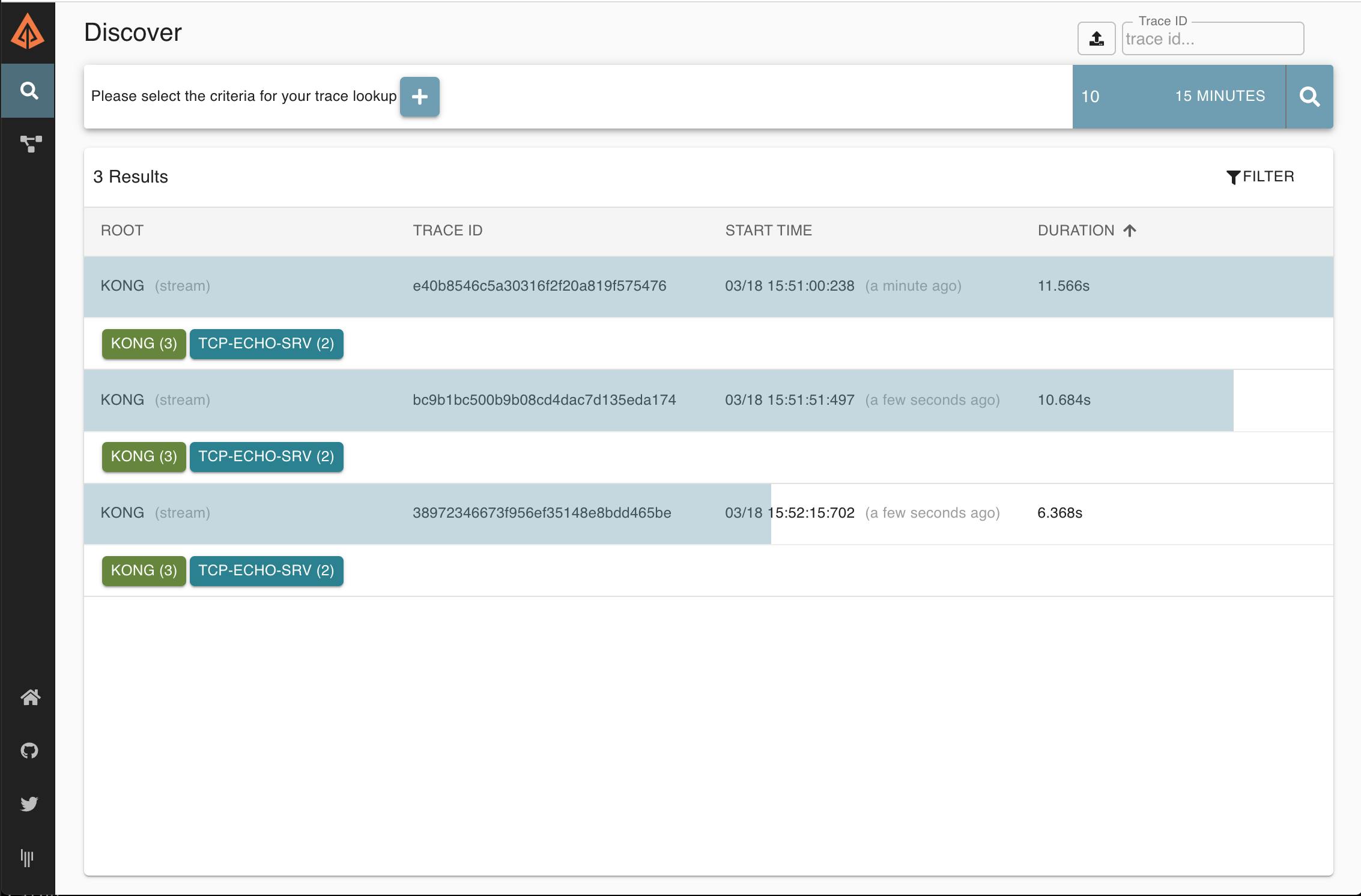

This should have created some traces on Zipkin, this time for TCP traffic. Open the Zipkin UI in your browser (http://localhost:9411/zipkin/) and click on the magnifying glass icon (🔎). You should see new traces with spans. Note that the number of traces you see might differ from what is shown in the following screenshot:

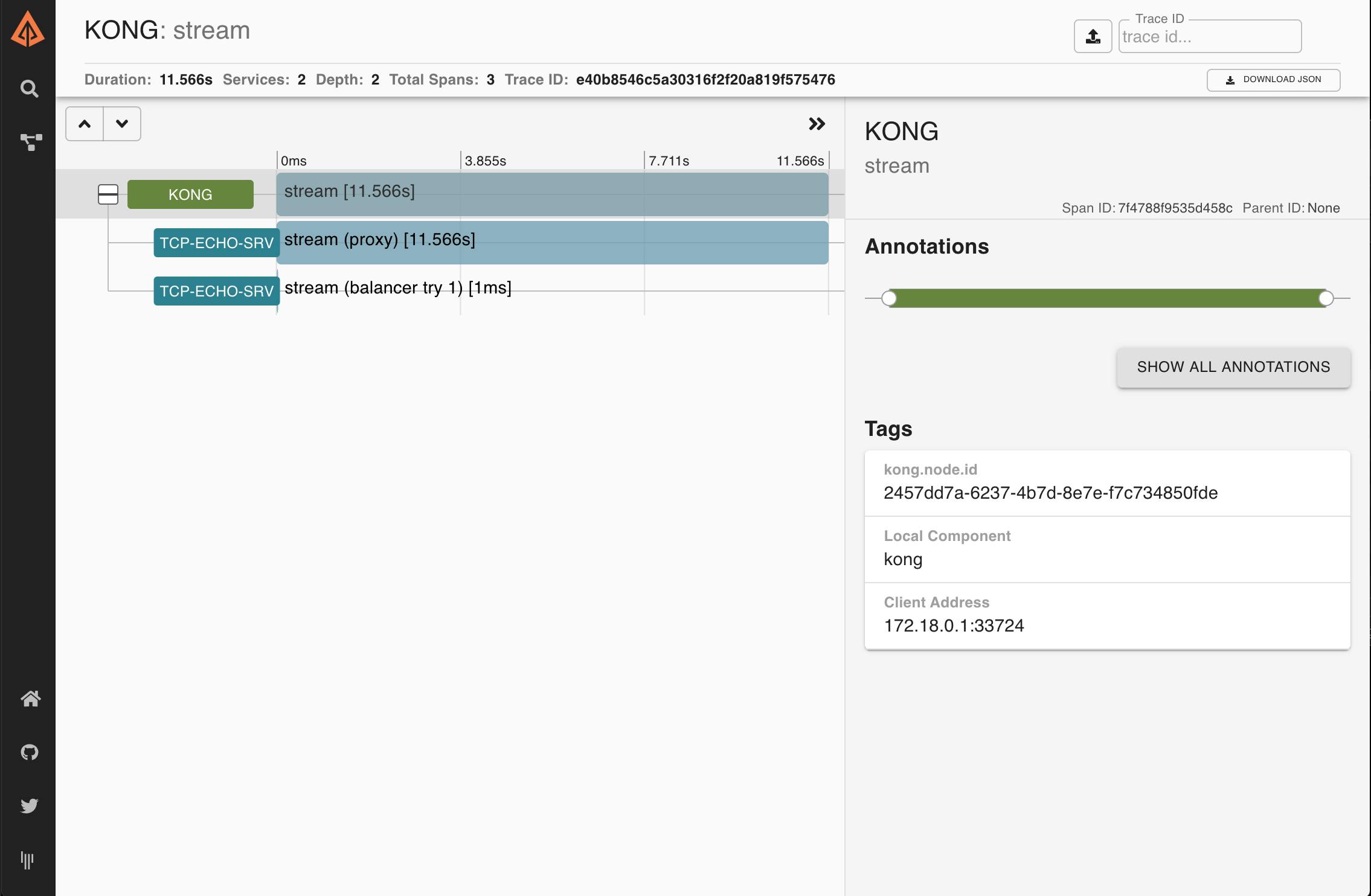

As before, you should be able to see each individual trace in more detail by clicking on it:

TCP traffic, by its nature, is not compatible with tracing headers: a raw TCP stream has no HTTP headers in which a trace ID could travel.

Conclusion

This was just an overview of some of the features available via the Kong Zipkin Plugin when Kong is being used as an API gateway. The tracing headers feature, in particular, can help shed some light on problems occurring in multi-service setups. Stay tuned for other articles about this!