Today, we're thrilled to announce that Kong Enterprise and Kong Konnect Data Planes are now validated to run on AWS Graviton3 processors and the Amazon Linux 2023 (AL2023) operating system.

As an APN Advanced Tier Partner of AWS, we were delighted to have the opportunity to benchmark Kong Enterprise running on AL2023 and Graviton3.

In this post, we review our benchmark configuration and the results of testing Kong Enterprise on Graviton3-based C7g instances running AL2023 against C6i instances, AWS's Intel-based compute-optimized instance type built on third-generation Intel Xeon Scalable processors, also running AL2023.

AWS states that, compared with Graviton2, Graviton3 processors deliver up to 25% more compute performance and up to twice the floating-point and cryptographic performance. Kong, for its part, is a lightweight, extensible service control platform and inherently one of the fastest and most optimized API management solutions available today. As such, it was exciting to see how much further Graviton3 could push the platform's performance.

We'll take a look at the setup and results below. But first, a quick bit of historical perspective.

Background

During AWS re:Invent 2018, AWS launched its first generation of ARM-based, AWS Graviton-powered EC2 instances. Since that announcement, thousands of AWS customers have used Graviton processors to run many different flavors of workloads. At the 2019 AWS re:Invent, AWS announced Graviton2, and two years later, they announced Graviton3 with C7g instance type support.

AWS Graviton3 is the latest in the Graviton family of processors, which are designed to deliver the best price performance for workloads in Amazon EC2. According to AWS, C7g delivers up to 40% better price performance than current-generation C6i instances for compute-intensive applications. Besides the compute-optimized C7g, Graviton3 also powers other instance families, such as the general-purpose M7g and the memory-optimized R7g.

Along similar lines, AWS recently announced the general availability of Amazon Linux 2023 (AL2023), the next generation of its Linux-based operating system for running cloud applications. Taking a security-by-default approach, AL2023 provides a high-performance environment for developing critical applications and integrates seamlessly with AWS services.

Benchmark environment

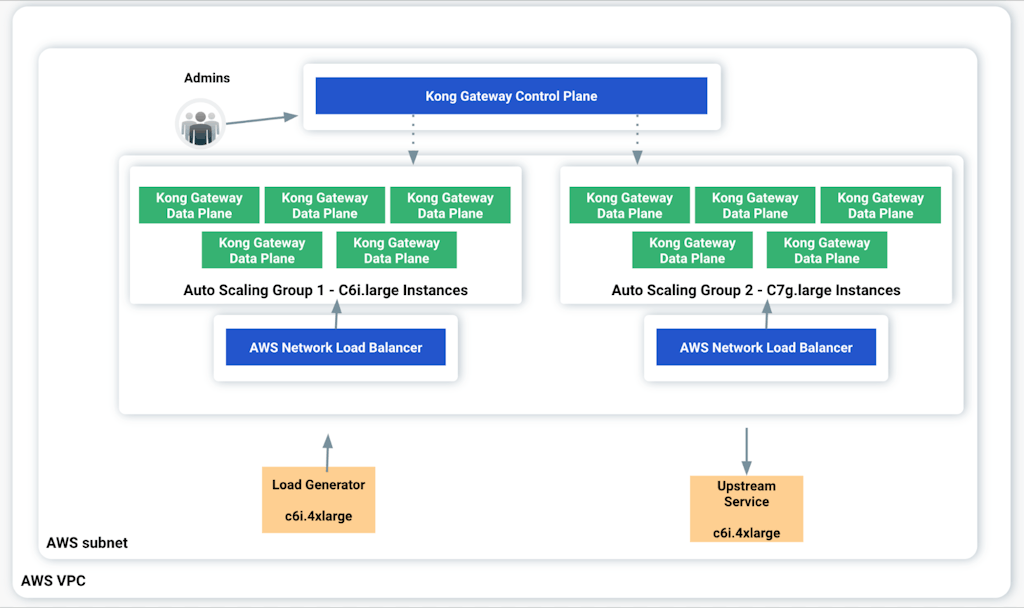

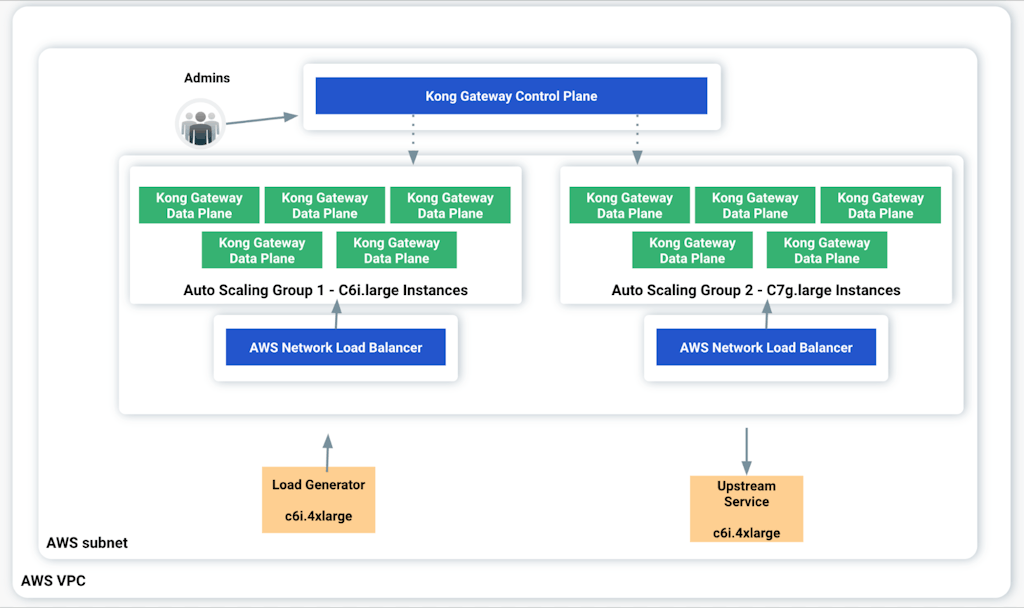

Any good benchmark experiment needs a well-defined environment design. Our setup was the following:

- Kong Gateway Version 3.2

- Kong Enterprise running in Hybrid Mode

- The Kong Control Plane (CP) running on a C6i.large instance type

- The Kong Data Planes (DP) running on C7g.large and C6i.large EC2 instance types, both with 2 vCPUs and 4 GiB of memory.

- Because the Data Planes run on EC2 instances and high throughput was expected, an Auto Scaling group was used to control the number of instances.

- An AWS Network Load Balancer (NLB) was placed in front of each set of Data Plane instances, one for the C6i Data Planes and another for the C7g Data Planes, to distribute the generated load.

- One NGINX instance, deployed on a C6i.4xlarge instance type (16 vCPUs, 32 GiB), served as the upstream service.

- For load generation, we used wrk, an HTTP benchmarking tool, also deployed on a C6i.4xlarge instance type (16 vCPUs, 32 GiB).

- All architectural components, including the load generator, upstream service, NLBs, Kong CP, and Kong DP instances, were deployed in the same AWS subnet.

The diagram below depicts the topology:

Scenarios

To gain a deep understanding of the performance benefits, we ran three test scenarios. Each scenario added complexity to the gateway configuration.

Scenario 1: No plugins were configured on the gateway.

- The purpose of this test was to show the scalability of Kong.

Scenario 2: The Rate Limiting plugin was enabled on the gateway, with the limit set to 100,000 requests per second so that every request would be processed successfully, with no 429 (Too Many Requests) responses.

Scenario 3: The API Key plugin was enabled alongside the Rate Limiting plugin.

- The purpose of this scenario was to show the extra resource consumption introduced by the plugins.
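To make the difference between the three gateway configurations concrete, here is a minimal Python sketch that builds a Kong 3.x declarative configuration for each scenario. The post doesn't publish its actual configuration, so the service and route names, upstream URL, consumer, API key, and the `local` rate-limiting policy are all assumptions for illustration. We also assume the "API Key" plugin refers to Kong's `key-auth` (Key Authentication) plugin, which by default reads the key from an `apikey` header.

```python
import json

# Hypothetical upstream URL standing in for the NGINX C6i.4xlarge instance.
UPSTREAM_URL = "http://nginx.internal:80"


def build_config(scenario: int) -> dict:
    """Return an illustrative Kong 3.x declarative config for scenario 1, 2, or 3."""
    if scenario not in (1, 2, 3):
        raise ValueError("scenario must be 1, 2, or 3")
    config = {
        "_format_version": "3.0",
        "services": [
            {
                "name": "benchmark-upstream",  # hypothetical name
                "url": UPSTREAM_URL,
                "routes": [{"name": "benchmark-route", "paths": ["/"]}],
            }
        ],
        "plugins": [],  # scenario 1: no plugins at all
    }
    if scenario >= 2:
        # Limit set high enough that no request should receive a 429.
        # "local" policy is an assumption (counters kept per Data Plane node).
        config["plugins"].append(
            {"name": "rate-limiting", "config": {"second": 100000, "policy": "local"}}
        )
    if scenario == 3:
        # key-auth with defaults: the key is read from the "apikey" header.
        config["plugins"].append({"name": "key-auth"})
        config["consumers"] = [
            {
                "username": "benchmark-client",  # hypothetical consumer
                "keyauth_credentials": [{"key": "benchmark-key"}],  # hypothetical key
            }
        ]
    return config


if __name__ == "__main__":
    print(json.dumps(build_config(3), indent=2))
```

In hybrid mode, a configuration like this would be applied to the Control Plane (for example with decK), which then pushes it to the Data Planes.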

Scalability and throughput

For all scenarios, we ran the load generator, wrk, for 3 minutes, twice per configuration, and disregarded the first run's results. The Data Planes were subjected to the following loads:

- 80 connections in 8 threads (10 connections per thread): 1 to 5 DP instances

- 120 connections in 12 threads: 5 DP instances

- 160 connections in 16 threads: 5 DP instances
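The load matrix above can be written down as data. This sketch only builds wrk command lines; it doesn't run them. It assumes wrk's standard `-t` (threads), `-c` (connections), `-d` (duration), `--latency`, and `-H` (header) options, and the target URL and API key are placeholders. Changing the number of Data Plane instances between steps happens through the Auto Scaling group and is outside its scope.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LoadStep:
    connections: int   # total wrk connections
    threads: int       # wrk threads (10 connections per thread)
    dp_instances: int  # Data Plane instances behind the NLB (set via the ASG)


def load_matrix() -> List[LoadStep]:
    """The load steps described in the post."""
    steps = [LoadStep(80, 8, n) for n in range(1, 6)]  # 80 connections, 1 to 5 DPs
    steps.append(LoadStep(120, 12, 5))
    steps.append(LoadStep(160, 16, 5))
    return steps


def wrk_argv(step: LoadStep, url: str, api_key: Optional[str] = None) -> List[str]:
    """Build (but do not run) a wrk command line for one 3-minute run."""
    argv = ["wrk", "-t", str(step.threads), "-c", str(step.connections),
            "-d", "3m", "--latency"]
    if api_key is not None:
        # Scenario 3: send the key in the header key-auth checks by default (assumed).
        argv += ["-H", f"apikey: {api_key}"]
    return argv + [url]


RUNS_PER_STEP = 2  # each step runs twice; the first run's results are discarded

if __name__ == "__main__":
    for step in load_matrix():
        for run in range(RUNS_PER_STEP):
            status = "discard" if run == 0 else "keep"
            cmd = wrk_argv(step, "http://nlb.example.internal/", "benchmark-key")
            print(f"[{status}] DPs={step.dp_instances}: {' '.join(cmd)}")
```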

Results

The most important metrics for understanding performance, and the ones we review below, are requests per second (RPS), latency, and CPU usage.

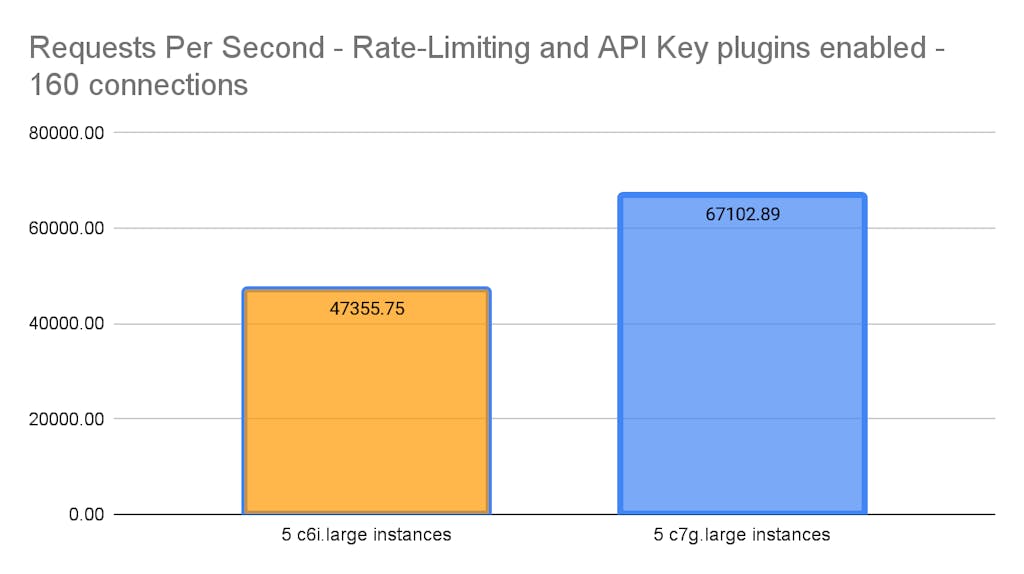

For brevity, although we tested all three scenarios, we'll focus the discussion on scenario 3: the most complex gateway configuration, with the Rate Limiting and API Key plugins enabled.

Requests per second

Requests per second measures throughput: the number of requests processed in a given period of time. When interpreting requests per second, higher is better.

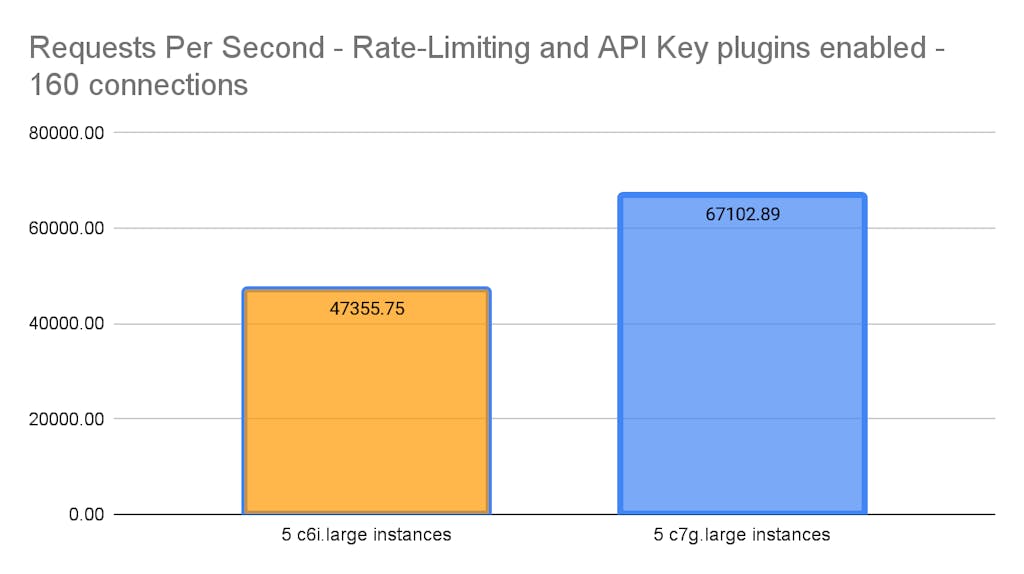

At a load of 160 connections, we were excited to see that the C7g.large instances, using AWS Graviton3 processors and Amazon Linux 2023, provided a significant gain over the existing C6i instances.

On the left, the yellow bar shows the result for the C6i instances, the "control" for our experiment. On the right, in blue, are the "experimental" instances: the new C7g.large instances combining Graviton3 and the Amazon Linux 2023 OS.

The C6i instances use third-generation Intel Xeon Scalable processors, which typically deliver excellent results compared with most other available instance types. The C6i instances reached 47K requests per second. While very good, the C7g instances reached 67K, a 42% increase.
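The percentage follows directly from the two throughput figures. A quick check using the rounded values reported above:

```python
def pct_increase(baseline: float, new: float) -> float:
    """Percentage increase of `new` over `baseline`."""
    return (new - baseline) / baseline * 100.0


# Rounded scenario 3 throughput at 160 connections, as reported.
c6i_rps = 47_000
c7g_rps = 67_000
print(f"RPS increase: {pct_increase(c6i_rps, c7g_rps):.1f}%")  # RPS increase: 42.6%
```

With rounded inputs this comes to about 42.6%, consistent with the reported figure. The exact value depends on the unrounded measurements, which the post doesn't list.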

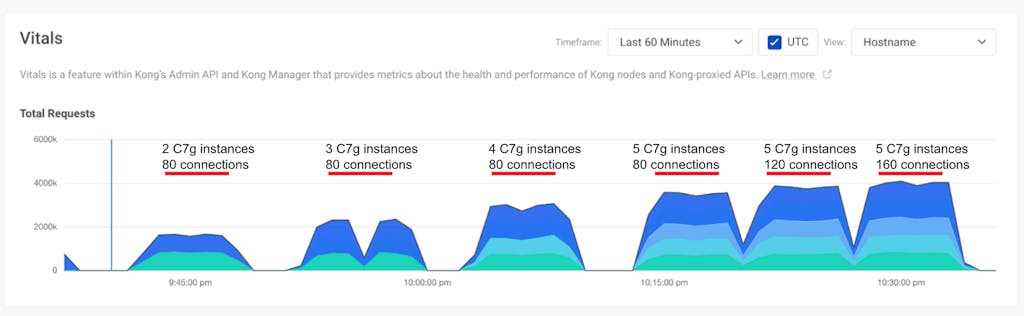

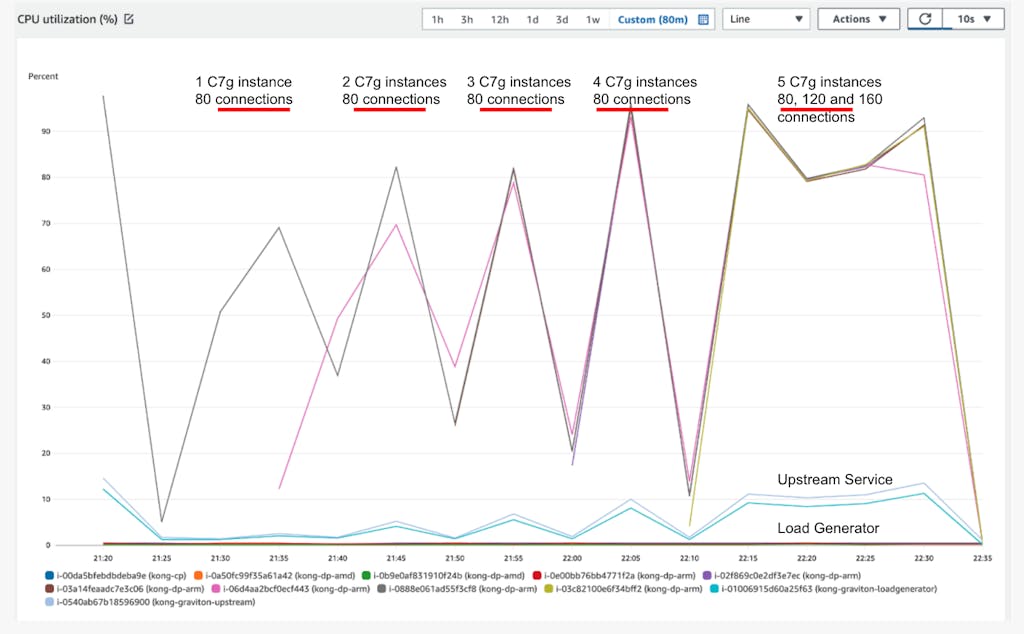

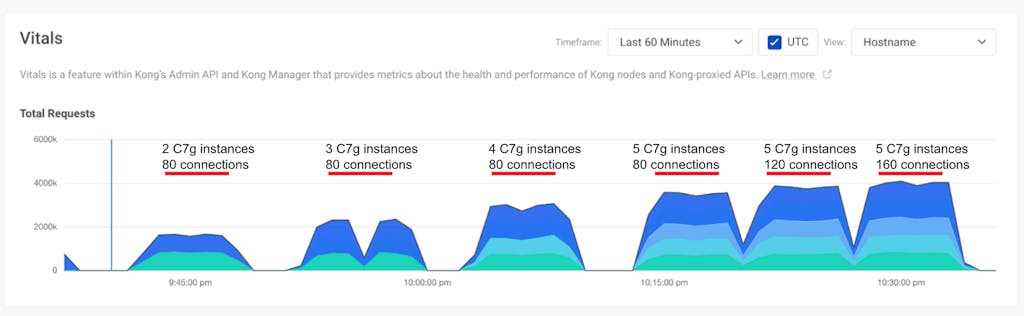

Kong Enterprise’s Vitals, known as Analytics in the Kong Konnect SaaS platform, offered a useful perspective as well. The images taken from Vitals show how performance evolved across all of the scenarios, from a single instance up to the most demanding load: 160 connections sending requests to 5 C7g EC2 instances.

Latency times

Latency is the time it takes for a request to travel from the client to the destination and for the response to make it back. When analyzing latency results, remember that lower is better.

Focusing on scenario 3, the C7g instances showed remarkably lower latency than the C6i instances. Ninety-nine percent of the requests sent to the C7g instances had a response time of 13.96 ms or less, whereas the C6i instances showed a 99th percentile response time of 22.91 ms. That translates to a 39% reduction in latency.
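For reference, the 99th percentile (p99) is the value that 99% of samples fall at or below. The sketch below uses the nearest-rank definition, which is one common choice and not necessarily the exact method wrk uses internally, and then reproduces the 39% reduction from the two reported p99 values.

```python
import math
from typing import Sequence


def percentile_nearest_rank(samples: Sequence[float], p: float) -> float:
    """Smallest sample such that at least p% of all samples are <= it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def pct_reduction(baseline: float, new: float) -> float:
    """Percentage reduction of `new` relative to `baseline`."""
    return (baseline - new) / baseline * 100.0


# Reported p99 latencies (ms) for scenario 3 at 160 connections.
c6i_p99_ms = 22.91
c7g_p99_ms = 13.96
print(f"p99 reduction: {pct_reduction(c6i_p99_ms, c7g_p99_ms):.1f}%")  # p99 reduction: 39.1%
```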

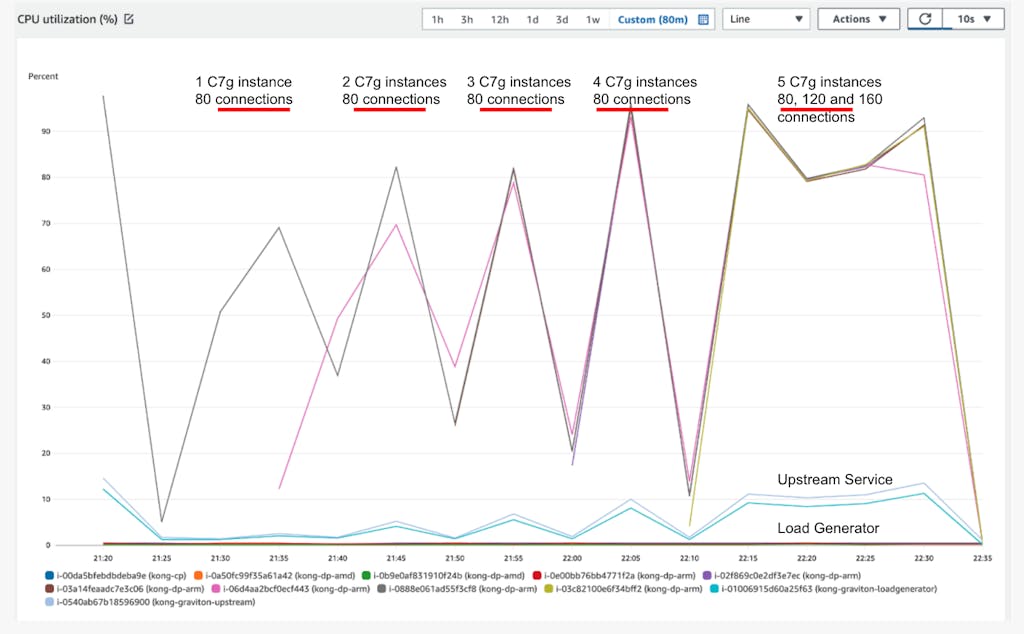

CPU usage

To monitor CPU usage, we used Amazon CloudWatch to track all instances, including the load generator and the upstream service. As you can see, both response time and CPU usage were notably better when running the scenarios on Graviton3.
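If you want to pull the same metric programmatically, here is a hedged sketch using the boto3 CloudWatch client's `get_metric_statistics` call to fetch the EC2 `CPUUtilization` metric for one instance over a 3-minute window. The instance ID, window length, period, and statistics are assumptions. Note that 1-minute datapoints require EC2 detailed monitoring; with basic monitoring, EC2 reports at 5-minute granularity.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List, Optional


def cpu_query(instance_id: str, end: datetime, minutes: int = 3,
              period_s: int = 60) -> Dict[str, Any]:
    """Keyword arguments for CloudWatch GetMetricStatistics (EC2 CPUUtilization)."""
    return {
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": period_s,  # 60-second datapoints need EC2 detailed monitoring
        "Statistics": ["Average", "Maximum"],
    }


def fetch_cpu(instance_id: str, end: Optional[datetime] = None) -> List[Dict[str, Any]]:
    """Fetch CPU datapoints, oldest first. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so cpu_query() works without boto3 installed

    end = end or datetime.now(timezone.utc)
    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(**cpu_query(instance_id, end))
    return sorted(response["Datapoints"], key=lambda point: point["Timestamp"])
```

Calling `fetch_cpu("i-0123456789abcdef0")` (a placeholder ID) right after a wrk run would return the datapoints covering that run.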

Conclusion

Kong is inherently one of the fastest and most optimized API management solutions, so it was exciting to see Graviton3 further enhance the platform's performance. The Kong ARM64 and Amazon Linux 2023 RPM packages and container images can be used for both Kong Enterprise and Kong Konnect hybrid deployments.

As you can see from our findings, the C7g instances delivered a major leap in performance and capabilities over the C6i instances. Running at 67K RPS with 160 connections and two plugins enabled, the C7g instances let Kong process roughly 12,000,000 requests with a 99th percentile response time of 13.96 ms, a 42% increase in throughput and a 39% reduction in latency compared to the C6i instances. Kong is impressed with the results, and we hope the community is as well.
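The 12,000,000 figure is consistent with a single 3-minute wrk run at the rounded 67K RPS. This is our reading of how it was derived, not something the post states explicitly:

```python
# Rounded C7g throughput for scenario 3 at 160 connections.
rps = 67_000
# Assumed: one 3-minute wrk run.
duration_s = 3 * 60
total_requests = rps * duration_s
print(f"{total_requests:,} requests")  # 12,060,000 requests
```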

But this story is not just about performance. Graviton3-based C7g instances give Kong a performance boost that translates into significant cost savings for our customers, around 15 to 20%.

If you're among the millions of Kong Gateway users, we suggest checking out AWS Graviton3 to optimize the performance and cost of your environment. Look for Graviton3 to stake its position as the leading processor in the cloud business.