Configuring transparent proxying isn't required, but it's recommended as a best practice because it retains the existing service naming and requires no changes to the application code.

Check the version of iptables; nf_tables has been supported since 2.5.6. Both iptables and iptables-nft should work as commands on the server instance where transparent proxying will be installed.
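One way to run this check is sketched below. The helper name `check_iptables` is hypothetical and not part of Kong Mesh; it only confirms that both commands resolve and prints the version each one reports. On iptables 1.8 and later, the backend is usually shown in parentheses after the version, for example `(nf_tables)` or `(legacy)`.

```shell
# Hypothetical helper: verify that both command names exist and print their versions.
check_iptables() {
  for cmd in iptables iptables-nft; do
    if command -v "$cmd" >/dev/null 2>&1; then
      "$cmd" --version
    else
      echo "$cmd: command not found" >&2
      return 1
    fi
  done
}
```

Call `check_iptables` on the server; a non-zero exit status means one of the two commands is missing from the PATH.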

Create a new user to run the data plane proxy.
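A minimal sketch of this step, assuming the user is named `kuma-dp` with UID 5678 (the values used in the Kuma documentation examples; any unused name and UID should work as long as the same name is passed to the transparent proxy installer). The helper name `create_dp_user` is hypothetical.

```shell
# Hypothetical helper: create the dedicated user (and a matching group via -U)
# unless it already exists. The default name and UID are example values.
create_dp_user() {
  dp_user="${1:-kuma-dp}"
  dp_uid="${2:-5678}"
  if id -u "$dp_user" >/dev/null 2>&1; then
    echo "user $dp_user already exists"
  else
    sudo useradd -u "$dp_uid" -U "$dp_user"
  fi
}
```

Running `create_dp_user` with no arguments uses the example defaults.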

Redirect all relevant inbound, outbound, and DNS traffic to the Kong Mesh data plane proxy. Apply exceptions for anything running on the server that won't be given a data plane proxy. Services in the mesh that make external calls will use Kong Mesh policies to access those external services. I have included the outbound ports as an example.

(To get a list of open ports on the server, run: sudo netstat -tulpn | grep LISTEN.)
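The redirect step can be sketched with `kumactl install transparent-proxy`. The port lists below are placeholders, not recommendations: port 22 keeps SSH reachable, and the outbound ports are arbitrary examples. The helper name `install_transparent_proxy` and the two environment variables are hypothetical, and the sketch assumes the data plane proxy user is called `kuma-dp`.

```shell
# Hypothetical helper wrapping the install step. Replace the port lists with
# the ports that must bypass the data plane proxy on your server.
install_transparent_proxy() {
  exclude_inbound="${EXCLUDE_INBOUND_PORTS:-22}"
  exclude_outbound="${EXCLUDE_OUTBOUND_PORTS:-443,8443}"
  sudo kumactl install transparent-proxy \
    --kuma-dp-user kuma-dp \
    --redirect-dns \
    --exclude-inbound-ports "$exclude_inbound" \
    --exclude-outbound-ports "$exclude_outbound"
}
```

Flag names can differ between Kong Mesh releases, so compare them against `kumactl install transparent-proxy --help` for the installed version before running the helper.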

Transparent proxying is successful if the message below is received.

    # iptables set to divert the traffic to Envoy
    # Transparent proxy set up successfully, you can now run kuma-dp using transparent-proxy.

The changes won't persist over restarts, so add this command to your start scripts or use firewalld.

Currently, we can't use kumactl to uninstall transparent-proxy. (This may be included by the time this blog is published.) However, we can use the following iptables cleanup commands so that kumactl install transparent-proxy can be run again successfully. This is useful if additional ports need to be added later.
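A rough sketch of such a cleanup follows. It assumes the transparent proxy rules live in the `nat`, `raw`, and `mangle` tables and that nothing else on the server needs the rules in those tables; both points are assumptions to verify with `sudo iptables-save` first. The helper name `cleanup_transparent_proxy` is hypothetical.

```shell
# Hypothetical helper: flush every rule and delete every user-defined chain in
# the nat, raw, and mangle tables. WARNING: this also removes rules in those
# tables that kumactl did not create (for example, NAT rules added by Docker).
cleanup_transparent_proxy() {
  for table in nat raw mangle; do
    sudo iptables -t "$table" -F   # flush all rules in the table
    sudo iptables -t "$table" -X   # delete the now-empty user-defined chains
  done
}
```

If IPv6 traffic was redirected as well, the same loop with `ip6tables` may be needed.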

Create a token for the Redis data plane proxy

On Universal, a data plane proxy must be explicitly configured with a unique security token that it uses to prove its identity. The data plane proxy token is a JWT.

Create a token for our Redis service on the server where the zone control plane is installed, then copy it over if Redis is running on a different server.
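Token creation can be sketched with `kumactl generate dataplane-token`. The service name `redis`, the output path under `/tmp`, and the 720-hour validity are example values, and the helper name `generate_dp_token` is hypothetical. The sketch assumes `kumactl` on this server is already pointed at the zone control plane.

```shell
# Hypothetical helper: generate a data plane proxy token bound to one service.
# The default output path and the 720h validity are example values.
generate_dp_token() {
  service="${1:-redis}"
  token_file="${2:-/tmp/${service}-token}"
  kumactl generate dataplane-token \
    --tag "kuma.io/service=${service}" \
    --valid-for 720h > "$token_file"
}
```

Calling `generate_dp_token` with no arguments writes the token to `/tmp/redis-token`. If Redis runs on another server, copy that file there with a tool such as `scp`.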

Create a data plane definition for Redis

Now that we have our token, we can deploy our data plane proxy for our Redis service. First, we need to create a data plane definition.
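As an illustration, a data plane definition for Redis with transparent proxying might look like the sketch below, written to `dp.yaml` with a heredoc. The mesh name `default`, the name `redis`, and the address `10.0.0.10` are placeholders for your own values. Port 6379 is the default Redis port, and 15006 and 15001 are the redirect ports that `kumactl install transparent-proxy` uses by default.

```shell
# Write an example data plane definition to dp.yaml.
# The address is a placeholder for the IP the Redis server is reachable on.
cat > dp.yaml <<'EOF'
type: Dataplane
mesh: default
name: redis
networking:
  address: 10.0.0.10
  inbound:
    - port: 6379
      tags:
        kuma.io/service: redis
        kuma.io/protocol: tcp
  transparentProxying:
    redirectPortInbound: 15006
    redirectPortOutbound: 15001
EOF
```

The `kuma.io/service` tag here should match the tag the token was generated for.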

Save the definition as a YAML file (e.g., dp.yaml). With the Redis token file and the data plane definition saved, we can pass both to the kuma-dp run command.
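A sketch of the run step, assuming the token was saved to `/tmp/redis-token`, the definition to `dp.yaml` in the current directory, and the proxy runs as the `kuma-dp` user so that its own traffic is not redirected back to itself. The helper name `run_redis_dp` and the `CP_ADDRESS` variable are hypothetical; `https://localhost:5678` is only a placeholder that uses the default port data plane proxies connect to.

```shell
# Hypothetical helper: start the data plane proxy as the kuma-dp user.
# CP_ADDRESS is a placeholder for the zone control plane address.
run_redis_dp() {
  cp_address="${CP_ADDRESS:-https://localhost:5678}"
  sudo -u kuma-dp kuma-dp run \
    --cp-address="$cp_address" \
    --dataplane-file=dp.yaml \
    --dataplane-token-file=/tmp/redis-token
}
```

Both files need to be readable by the `kuma-dp` user for this to start.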

Update the zone control plane address as needed. If you receive an error, see the troubleshooting section below.

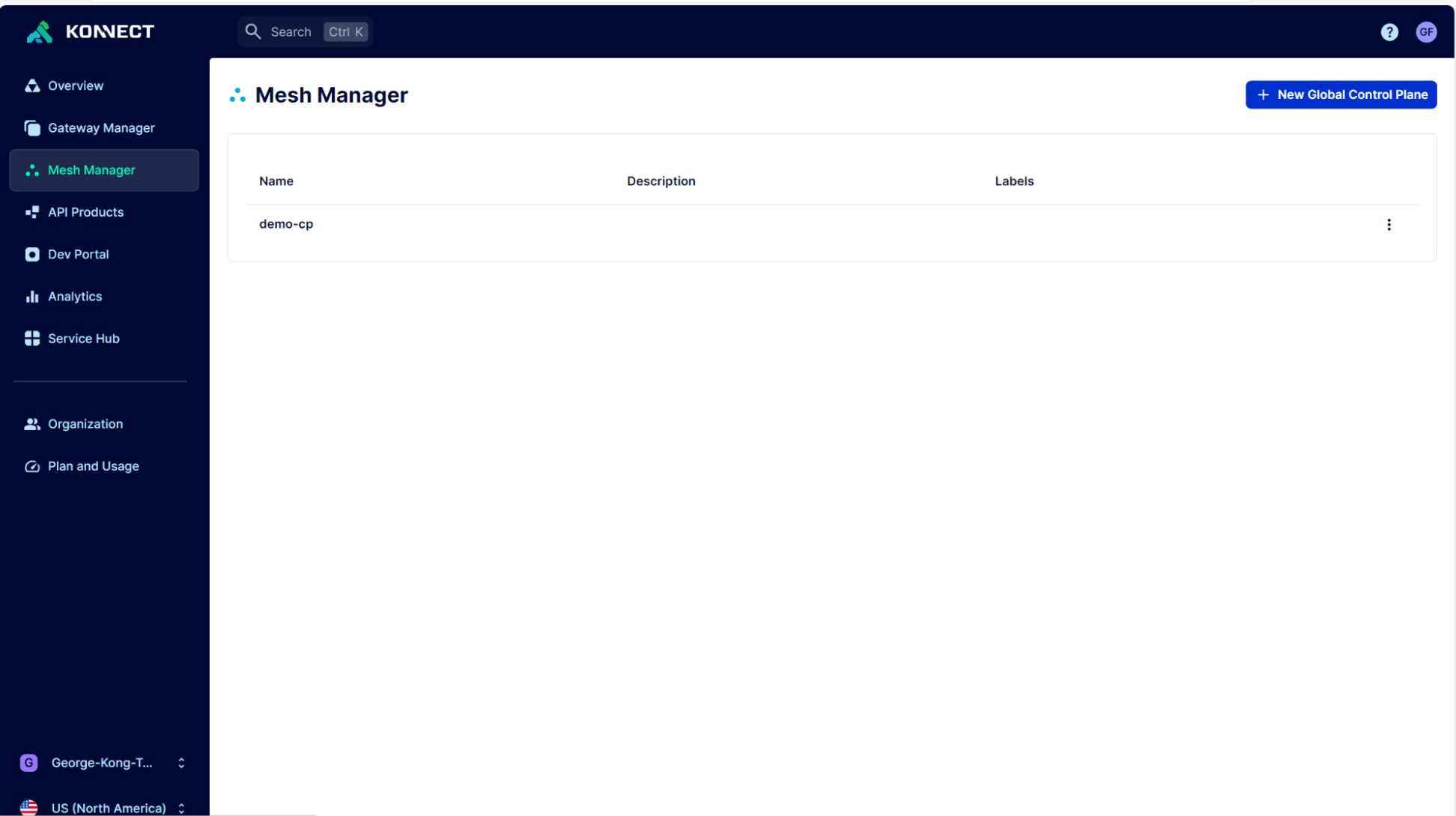

Konnect should now show that the data plane proxy is online and exists for our Redis service.