Ambient Mesh vs. Service Mesh: When to use each model

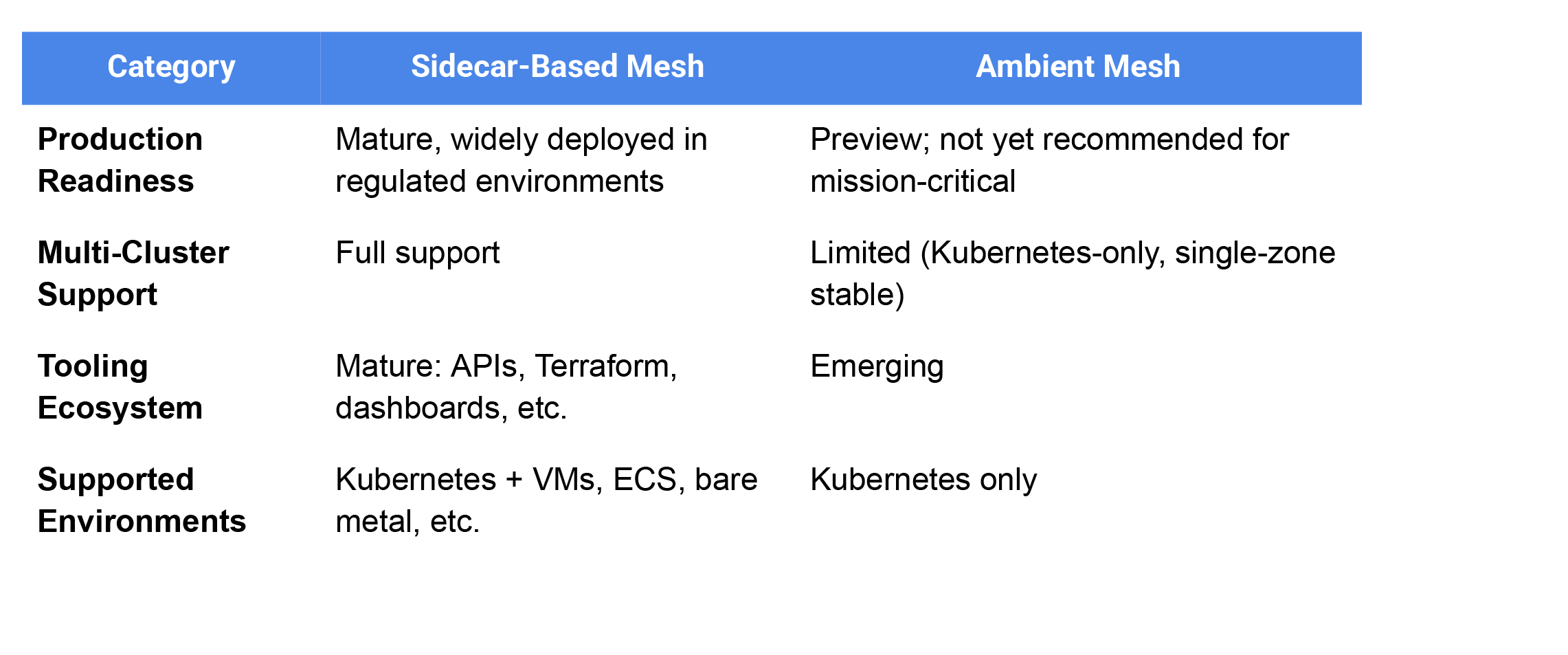

Choose ambient mesh if:

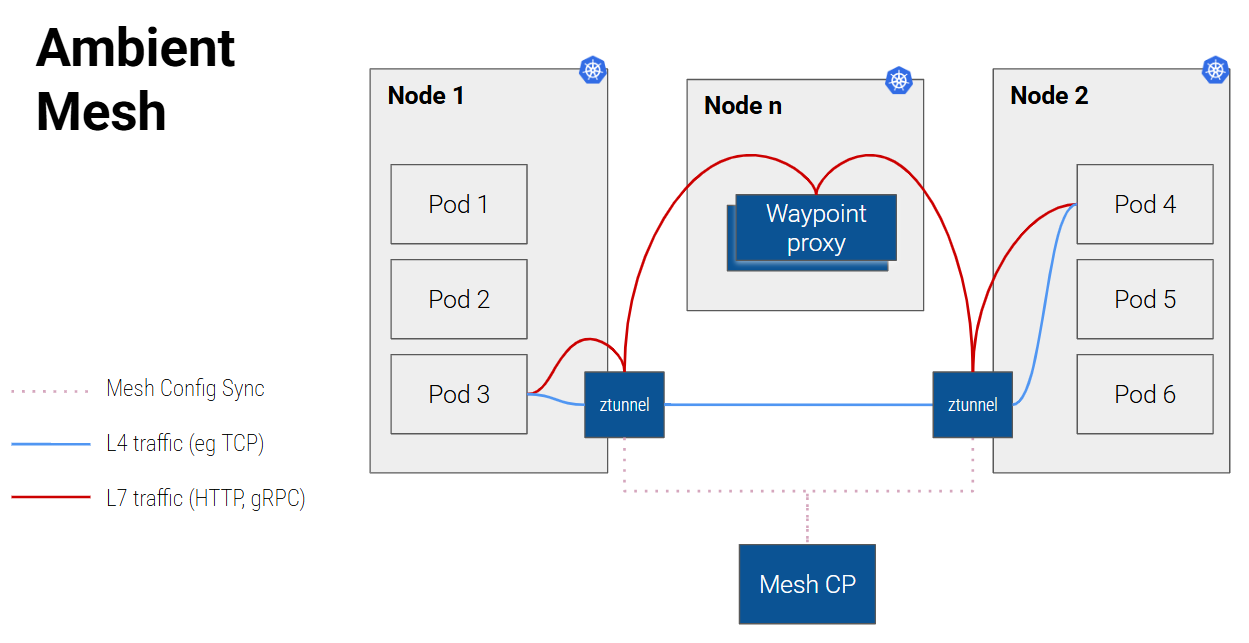

- You mostly need L4 security (mTLS) and basic policies

- You're running high-density clusters and infrastructure cost reduction is your highest priority

- You're working in single-zone Kubernetes environments

- You’re supporting non-regulated or lower-tier environments

- You have one team managing both platform and services (shared proxy components)

Choose sidecar-based mesh if:

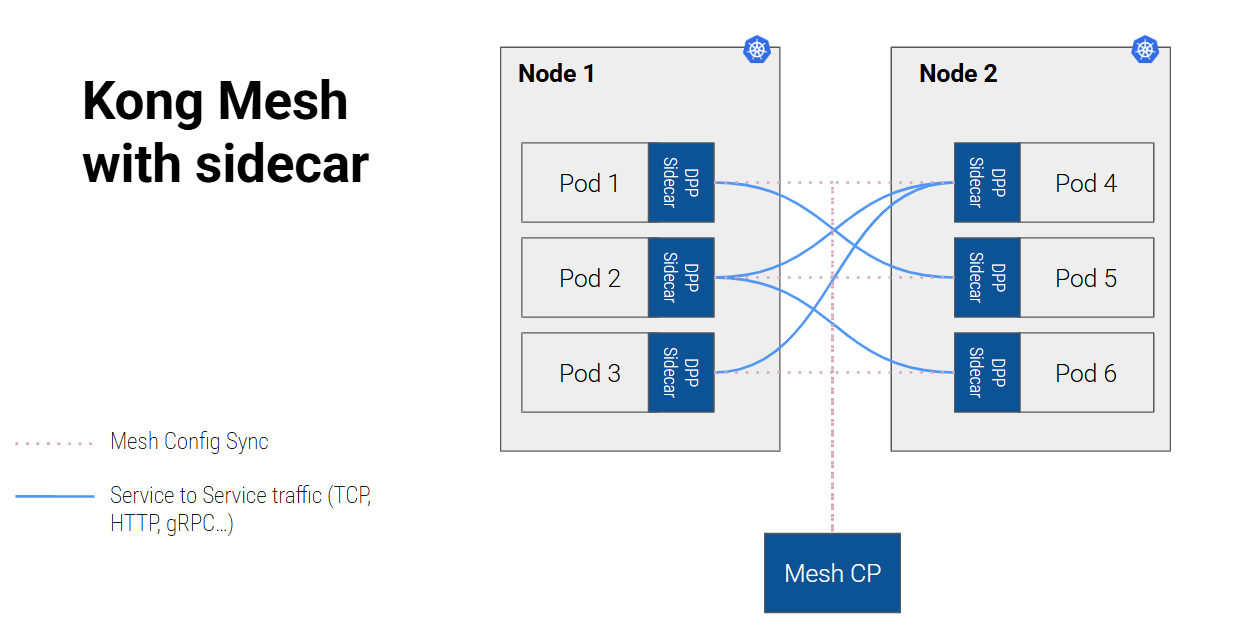

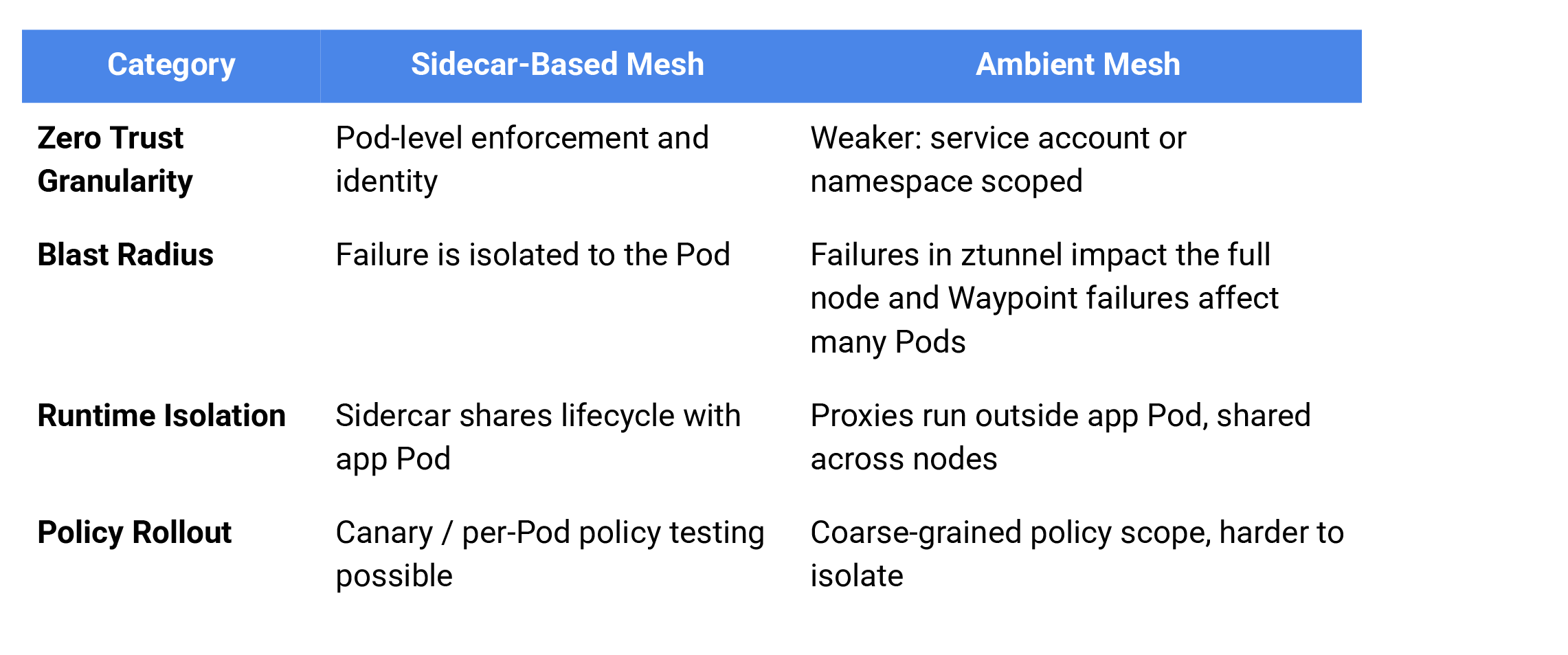

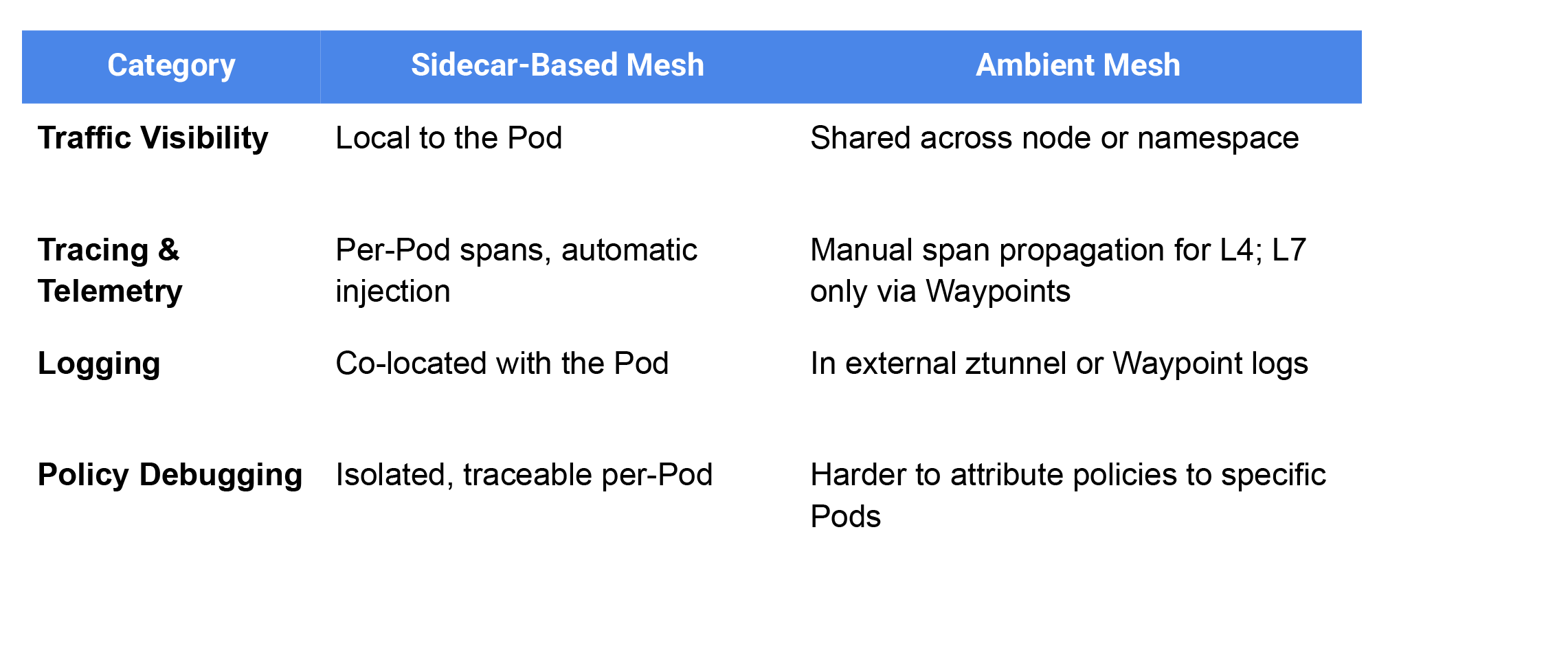

- You require fine-grained security, observability, and policy enforcement

- You operate in multi-zone, hybrid, or regulated environments

- You support multiple teams with self-service mesh configuration

- You run L7-heavy or latency-sensitive workloads

- You prioritize isolation and operational predictability over theoretical efficiency
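The two checklists above can be read as a simple decision rule. Here is a minimal sketch of that rule in Python, offered as an illustration rather than an official Kong tool. The `MeshRequirements` class, its field names, and the `recommend_mesh_model` function are all hypothetical. The rule that any single sidecar criterion tips the decision is an assumption, since the lists above do not say how to weigh the items against each other.

```python
from dataclasses import dataclass, fields


@dataclass
class MeshRequirements:
    # Hypothetical flags, one per item in the sidecar checklist above.
    fine_grained_policy: bool = False            # fine-grained security, observability, policy
    multi_zone_or_hybrid: bool = False           # multi-zone or hybrid deployment
    regulated: bool = False                      # regulated environment
    multi_team_self_service: bool = False        # multiple teams configuring the mesh themselves
    l7_heavy_or_latency_sensitive: bool = False  # L7-heavy or latency-sensitive workloads
    isolation_over_efficiency: bool = False      # isolation and predictability come first


def recommend_mesh_model(req: MeshRequirements) -> tuple[str, list[str]]:
    """Return the suggested model and the checklist items that matched."""
    matched = [f.name for f in fields(req) if getattr(req, f.name)]
    # Assumption: one matching sidecar criterion is enough to choose sidecars;
    # with no matches, the ambient checklist is taken to describe the workload.
    return ("sidecar" if matched else "ambient", matched)


if __name__ == "__main__":
    print(recommend_mesh_model(MeshRequirements(regulated=True)))
    print(recommend_mesh_model(MeshRequirements()))
```

In practice the criteria are rarely this binary, so treat the output as a starting point for discussion rather than a verdict.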

Final thoughts

Ambient mesh seems, on the face of it, like a compelling evolution of service mesh design, promising reduced resource usage and simpler onboarding for lightweight, L4-dominant applications. But that apparent simplicity comes with real costs: added operational complexity, L7 capability gaps, and reduced isolation. In many engineering disciplines, simplicity wins out over pure efficiency, and it’s no different with service mesh. The “neater” sidecar-based approach is easier to reason about, easier to deploy, and easier to operate, particularly with Kong Mesh, which is built with enterprises and platform teams in mind.

At Kong, we have taken a deliberate wait-and-see approach to investing in sidecar-less ambient mesh. It’s still an early-stage technology, and even proponents of ambient mesh, such as Istio, aren’t yet recommending it for mission-critical environments, only for single-cluster environments. A recent blog post from Tetrate, a commercial distributor of Istio, presents similar arguments.

For almost all enterprise production environments — particularly those with diverse services, high compliance needs, or multiple teams — sidecar-based service meshes are still the right approach and provide the clarity, control, and maturity our customers can count on.

Here’s some more reading material on Kong Mesh: