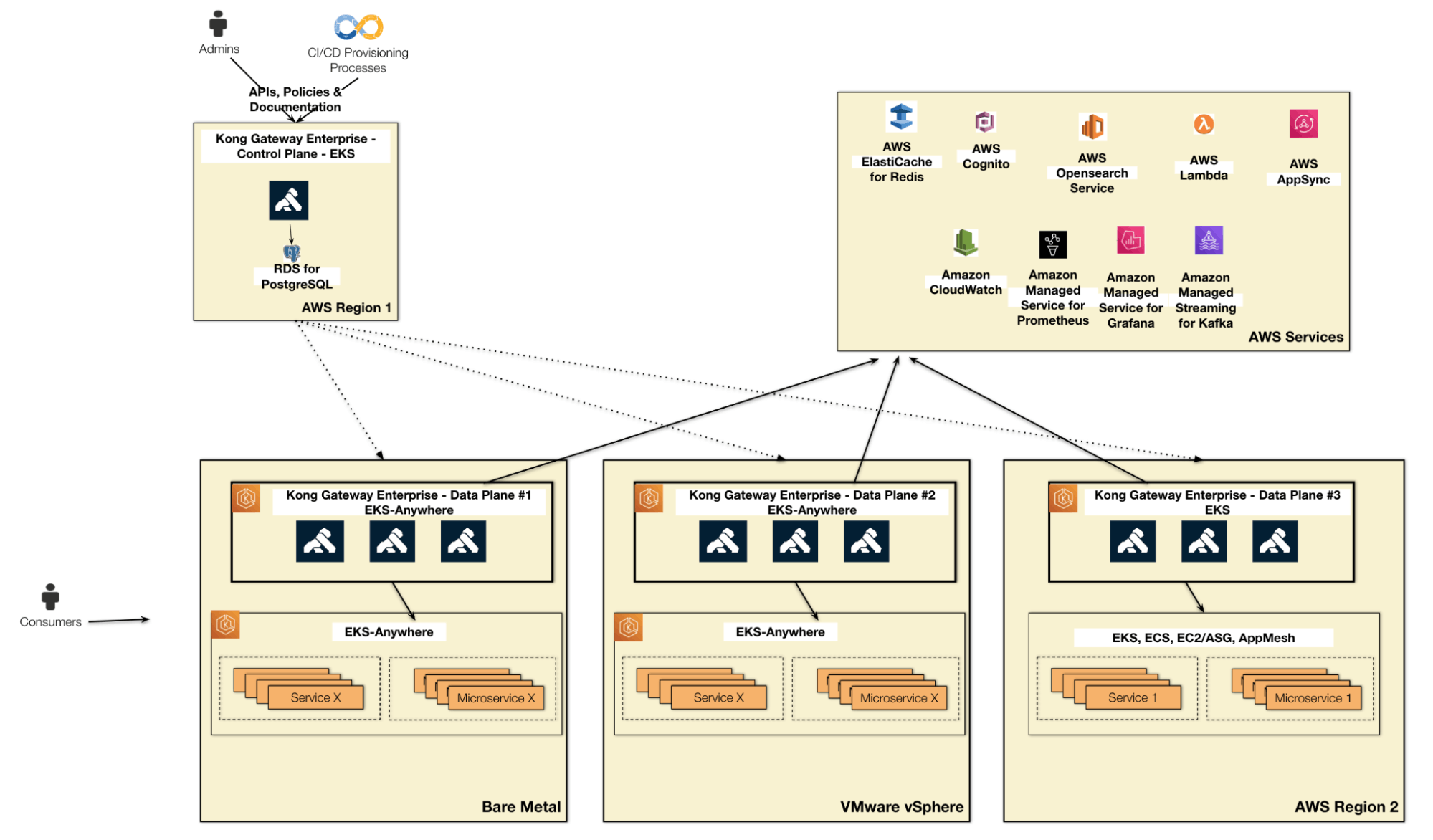

One of the most critical requirements for an Application Modernization project is support for workloads running on multiple platforms. In fact, such projects naturally include, as part of their transformation process, migrating workloads under a hybrid model.

Another technical decision that commonly comes up is the adoption of Kubernetes as the main platform for both the existing services and the microservices produced by the modernization project.

Kubernetes has become the de facto standard platform for developing modern applications. Of the many capabilities Kubernetes offers, perhaps the most important is a standardized environment for the complete life cycle of an application. In other words, wherever the application will be installed, whether on premises or in the cloud, a Kubernetes distribution will be available.

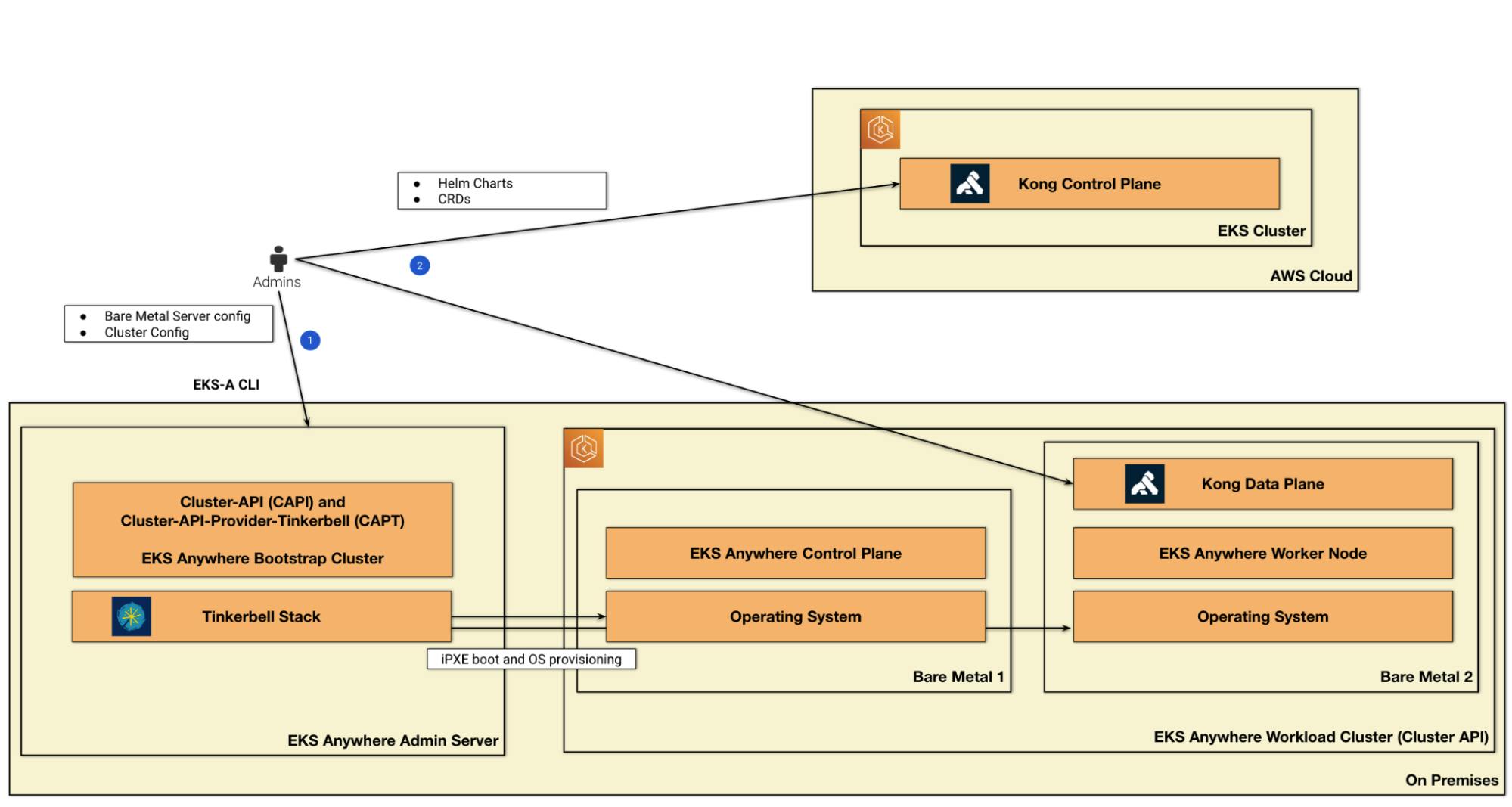

This is exactly the purpose of Amazon EKS Anywhere (EKS-A): to bring the well-known Amazon EKS technology to multiple environments, including customer-managed on-premises infrastructure.