One of the things that’s quite interesting about service mesh is that, for a long time, it was not a very well-defined category. Service mesh is a means to an end, not an end in itself. Looking at its adoption, we have seen a refocus on the end use cases that service mesh enables. Some are around observability, while others are around security and trust: being able to provide an identity to every one of our services.

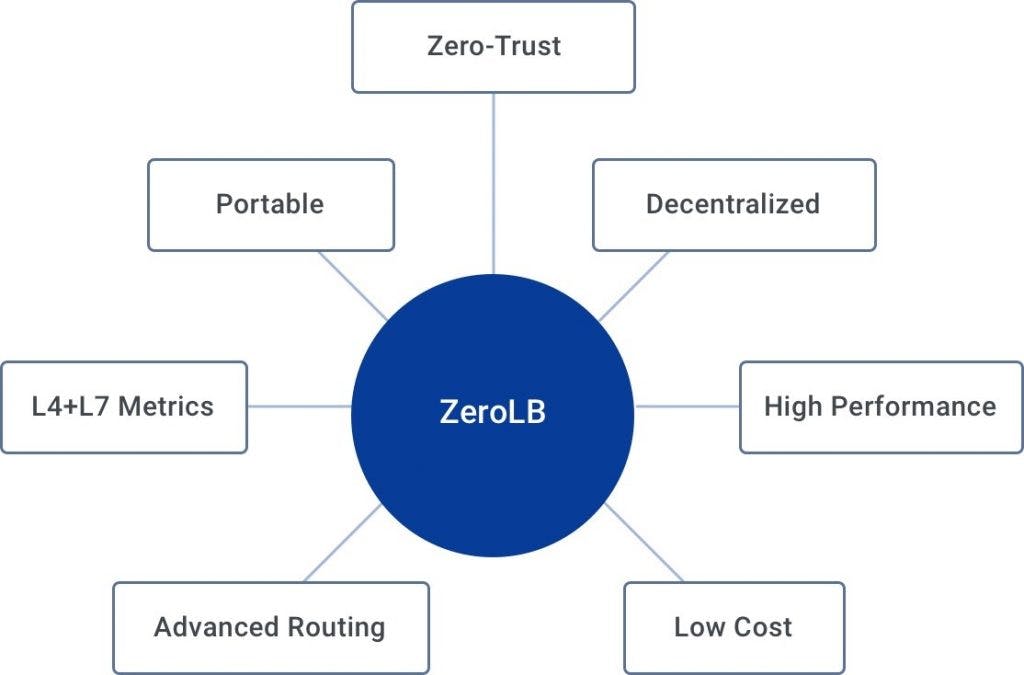

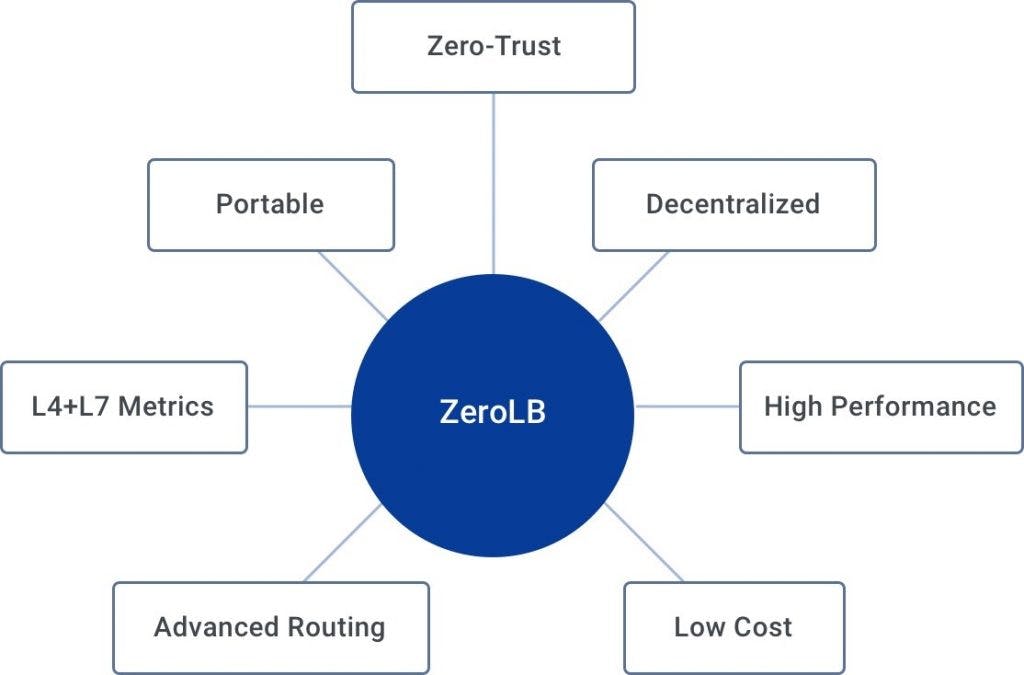

Another very important use case, one we hadn't originally considered but are now seeing implemented across our customer base, is the idea that centralized load balancers have no place in a decentralized architecture. Over the last few months, we have invested in this concept within our products, and we call it ZeroLB.

ZeroLB doesn't refer to a specific product but to a pattern that we’re seeing in the industry: removing the load balancers in front of services. The goal is to improve the performance of our services and the entire user experience, and also to save our customers a lot of money, because organizations no longer have to run cloud load balancers or enterprise load balancers in front of their services.

How Does ZeroLB Work?

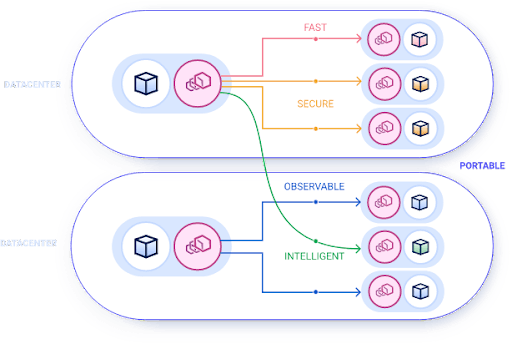

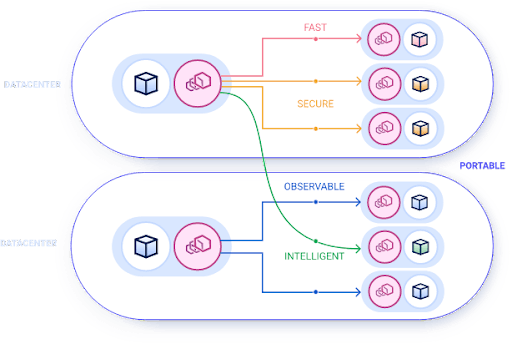

The industry has been going through this journey of not only decentralization but also portability. One of the biggest advantages of using containers to deploy our software on portable platforms like Kubernetes is being able to run them across every cloud, every data center.

ZeroLB delivered via a service mesh adds intelligence to our load balancing while making it portable across every cloud and platform, including Kubernetes and VMs.

Almost every organization in the world has both legacy data centers and multiple clouds. The replicability and portability of our software is an amazing strategic advantage; if anything, it gives us leverage with our cloud providers. We have already decoupled the way we package our software (containers) and the way we run it (Kubernetes) from any single provider. There is no reason not to do the same for our traffic: our load balancing.

Load balancing sits in between every single request that we make. Cloud vendor-specific load balancers, such as AWS Elastic Load Balancing or Azure Load Balancer, do not give us portable functionality across the board.

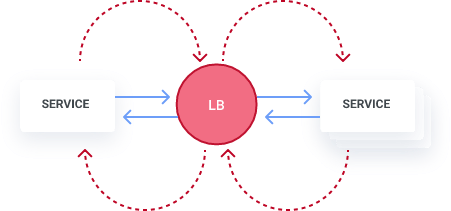

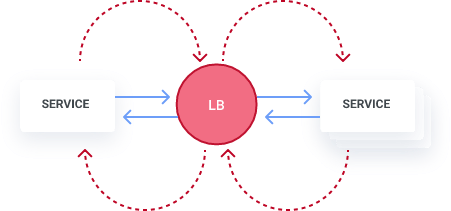

A centralized load balancer adds an extra hop in the network, making requests between our microservices slower. And microservices performance is all that matters: if the microservices are slow, the end-user experience is going to be slow. Not only are we adding processing latency to every request, but we also lack portability across different clouds and within the data center.
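A rough, back-of-the-envelope model shows why the extra hop matters: it is paid on every call, so it compounds along a chain of service-to-service calls. The function name and every number below are hypothetical placeholders rather than measurements, and the model ignores the sidecar's own local processing time.

```python
def network_overhead_ms(calls: int, leg_ms: float, lb_processing_ms: float, central_lb: bool) -> float:
    """Network overhead for `calls` sequential service-to-service calls.

    Simplifying assumptions (a hypothetical model, not a benchmark):
    - a direct sidecar-to-sidecar call crosses one network leg;
    - a call through a centralized LB crosses two legs (client -> LB -> server)
      and also pays the LB's own processing time.
    """
    if central_lb:
        per_call = 2 * leg_ms + lb_processing_ms
    else:
        per_call = leg_ms
    return calls * per_call


# Illustrative numbers only: 5 chained calls, 0.5 ms per leg, 1 ms of LB processing.
direct = network_overhead_ms(5, 0.5, 1.0, central_lb=False)
through_lb = network_overhead_ms(5, 0.5, 1.0, central_lb=True)
print(direct, through_lb)  # 2.5 10.0
```

With these made-up inputs the centralized path costs four times as much network time; with real numbers the ratio depends on your network and your load balancer.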

Load Balancing: An Outdated Technology

Centralized load balancing technology is quite outdated; it has not fundamentally changed in the past 20 years. With modern, decentralized load balancing, we can configure traffic dynamically across the board, leveraging the service mesh and client-side load balancing with a choice of algorithms. This is load balancing that is self-healing across multiple regions and multiple clouds.
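To illustrate what client-side load balancing with more than one algorithm can look like, here is a minimal Python sketch of two common strategies a sidecar could apply locally: round robin, and "power of two choices" least-request, where two endpoints are sampled at random and the one with fewer in-flight requests wins. The class names and the `in_flight` counter are assumptions for illustration; production proxies such as Envoy implement policies like these natively and far more completely.

```python
import itertools
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    address: str
    in_flight: int = 0  # requests currently outstanding to this endpoint


class RoundRobin:
    """Hand out endpoints in a fixed rotation."""

    def __init__(self, endpoints: List[Endpoint]):
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self._cycle = itertools.cycle(endpoints)

    def pick(self) -> Endpoint:
        return next(self._cycle)


class LeastRequestP2C:
    """Sample two endpoints at random and keep the one with fewer in-flight requests."""

    def __init__(self, endpoints: List[Endpoint], rng: Optional[random.Random] = None):
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self._endpoints = list(endpoints)
        self._rng = rng if rng is not None else random.Random()

    def pick(self) -> Endpoint:
        if len(self._endpoints) == 1:
            return self._endpoints[0]
        a, b = self._rng.sample(self._endpoints, 2)
        return a if a.in_flight <= b.in_flight else b


endpoints = [Endpoint("10.0.0.1:8080"), Endpoint("10.0.0.2:8080"), Endpoint("10.0.0.3:8080")]
rr = RoundRobin(endpoints)
print([rr.pick().address for _ in range(4)])
# ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.3:8080', '10.0.0.1:8080']

busy = Endpoint("10.0.1.1:8080", in_flight=5)
idle = Endpoint("10.0.1.2:8080", in_flight=1)
p2c = LeastRequestP2C([busy, idle], rng=random.Random(42))
print(p2c.pick().address)  # 10.0.1.2:8080 (with two endpoints, both are always sampled)
```

Because the choice is made inside each client's sidecar, there is no shared box in the request path, and switching algorithms becomes a configuration change.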

Centralized load balancers are slow and not very smart, add 2-4x more network latency, are expensive, and are not portable across every environment.

Beyond cost and performance, we are talking about actual functionality. ZeroLB, with a sidecar proxy performing load balancing instead of a centralized load balancer, is a much more mature and modern approach for applications that can never go down.

With service mesh, we have the underlying capability to inject smart load balancers across the board and move them across different clouds. When we build our software, we can replicate that same functionality everywhere, so that the software ships with fewer bugs. ZeroLB is the change that will enable us to innovate faster and create better user experiences.

This comes with an entire swath of capabilities, including observability, security, traffic control and A/B testing.
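As one example of the traffic control these capabilities enable, an A/B test is often implemented as a weighted traffic split. The sketch below assumes a hypothetical `choose_version` helper and percentage weights; hashing the user ID keeps each user pinned to the same version across requests instead of flipping between versions at random.

```python
import hashlib
from typing import Mapping


def choose_version(user_id: str, weights: Mapping[str, int]) -> str:
    """Map a user to a service version according to percentage weights.

    Hashing the user ID (instead of picking at random per request) keeps each
    user on the same version, which is what an A/B test usually needs.
    """
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # a bucket in 0..99
    cumulative = 0
    for version, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return version
    raise AssertionError("unreachable when weights sum to 100")


split = {"checkout-v1": 90, "checkout-v2": 10}
assigned = [choose_version(f"user-{i}", split) for i in range(10_000)]
print(assigned.count("checkout-v2"))  # close to 1,000, i.e. about 10% of users
```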

Decentralizing Workloads

Decentralized workloads provide benefits from many different angles. We decentralized our applications into different services because we want to be able to scale in a much better way; monolithic applications are very painful in that respect. We want to decouple and extract functionality so that different parts have much cleaner boundaries between them in the way they work together.

It reduces the coordination needed between teams, which increases development velocity and allows us to ship products and features much faster. And because each service is independent, we can also scale each one independently.

It’s all about being reliable and not having all the eggs in one basket. It’s about being able to replicate our infrastructure across multiple regions, multiple markets, to enter new markets and to be able to cater to our users in a much better way.

There is value in having centralized load balancers at the edge of our network. But within our infrastructure, when applications and services are consuming each other, we should not have a centralized load balancer in between them.

Reducing the Cost of Load Balancers

I recently spoke with a CTO of a very well-known and popular data analytics organization running in the cloud. "Help me, Marco, remove 16,000 Elastic Load Balancers in front of my services in my applications," he pleaded. "Not only do they prevent us from being able to support our clouds in a predictable way, they’re actually quite expensive."

Besides the performance implications, we also get rid of that cost. All of a sudden, by removing the centralized load balancer, we reduce bandwidth by 2x or 4x. And we end up with a much leaner infrastructure, because the service mesh provides the underlying capability to manage a fleet of decentralized load balancers.

In a service mesh designed to operate in a decentralized way, the nodes (the sidecar proxies) become aware of everything else inside the environment. They become aware of traffic policies, permissions, and the configuration for metrics gathering and load balancing. Each one also becomes aware of the other nodes in the environment that match its service name (or other service names), so that it can reach out and communicate with them and handle its own load balancing.
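A sidecar's local view can be pictured as a snapshot pushed by the control plane: which instances back each service name, and which calls policy allows. The `MeshSnapshot` and `SidecarView` names and their fields are hypothetical; real meshes distribute this state through their own APIs (Envoy's xDS protocol, for example).

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class MeshSnapshot:
    """Hypothetical shape of the configuration a control plane pushes to each sidecar."""
    endpoints: Dict[str, List[str]]       # service name -> instance addresses
    allowed: FrozenSet[Tuple[str, str]]   # (source, destination) pairs permitted by policy


class SidecarView:
    """A sidecar's local view of the mesh: whom it may call and where they run."""

    def __init__(self, own_service: str, snapshot: MeshSnapshot):
        self.own_service = own_service
        self.snapshot = snapshot

    def endpoints_for(self, destination: str) -> List[str]:
        # Policy is checked locally, with no central component consulted per request.
        if (self.own_service, destination) not in self.snapshot.allowed:
            raise PermissionError(f"{self.own_service} may not call {destination}")
        return list(self.snapshot.endpoints.get(destination, []))


snapshot = MeshSnapshot(
    endpoints={"payments": ["10.0.1.7:8443", "10.0.2.9:8443"]},
    allowed=frozenset({("checkout", "payments")}),
)
view = SidecarView("checkout", snapshot)
print(view.endpoints_for("payments"))  # ['10.0.1.7:8443', '10.0.2.9:8443']
```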

What’s really powerful about this is that if we cut the connection to the control plane, the sidecars already know about everything in the environment. You can’t push new changes to them, because you’ve lost the control plane, but because they’re decentralized, they are still able to operate and keep load balancing out of the box.
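The behavior described above, where sidecars keep balancing traffic after losing the control plane, amounts to caching the last configuration that was successfully received. A minimal sketch, assuming a hypothetical `fetch_config` callable that raises `ControlPlaneUnavailable` when the control plane cannot be reached:

```python
import itertools
from typing import Callable, Dict, Iterator, List, Optional


class ControlPlaneUnavailable(Exception):
    """Raised by the hypothetical fetch function when the control plane is unreachable."""


class CachingSidecar:
    """Keeps serving the last good configuration when the control plane goes away."""

    def __init__(self, fetch_config: Callable[[], Dict[str, List[str]]]):
        self._fetch_config = fetch_config
        self._endpoints: Optional[Dict[str, List[str]]] = None  # last good snapshot
        self._counters: Dict[str, Iterator[int]] = {}

    def sync(self) -> bool:
        """Try to refresh from the control plane; return False if it is unreachable."""
        try:
            self._endpoints = self._fetch_config()
            return True
        except ControlPlaneUnavailable:
            return False  # keep the previous snapshot untouched

    def pick(self, service: str) -> str:
        """Round-robin over the cached endpoints for `service`."""
        if self._endpoints is None:
            raise RuntimeError("no configuration received from the control plane yet")
        addresses = self._endpoints[service]
        n = next(self._counters.setdefault(service, itertools.count()))
        return addresses[n % len(addresses)]


# Simulate a control plane that goes down after the first successful sync.
control_plane_up = True


def fetch_config() -> Dict[str, List[str]]:
    if not control_plane_up:
        raise ControlPlaneUnavailable()
    return {"orders": ["10.0.0.1:8080", "10.0.0.2:8080"]}


sidecar = CachingSidecar(fetch_config)
first_sync = sidecar.sync()   # True: snapshot received
control_plane_up = False
second_sync = sidecar.sync()  # False: control plane unreachable
picks = [sidecar.pick("orders") for _ in range(3)]
print(first_sync, second_sync, picks)
# True False ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080']
```

New changes cannot arrive while the control plane is gone, but traffic keeps flowing on the last known state.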

Each of them has its own identifier that indicates which service it is, and the mesh has its own DNS inside the system that knows how to resolve those names. All of that happens behind the scenes; it is not something that you as an administrator have to manage, or go in and add and remove by hand.

Conclusion

If you would like to learn more about ZeroLB, I recommend the following on-demand webinars: first, my ZeroLB webinar, where I introduce the concept and go in depth on what we mean by eliminating the load balancer; and then a technical demo by Bill DeCoste called Applications Decentralized - Leveraging Service Mesh and ZeroLB.

At the end of the day, the load balancer shouldn’t be something we have to worry a ton about anymore. This isn’t a problem we should be dedicating administrator, developer or operator time to caring for and managing. We have enough intelligence in these systems to manage it. Load balancing like this also becomes a place where we handle security concerns for inter-service communication within the data center walls.

You don’t need to go the traditional load balancing path. There’s a better way that’s going to get you way more than just load balancing.

Load balancing should be something you don’t have to think about. At this point, it should be assumed that the system handles it for you, because ultimately that is the right way to make things simpler for users.