Evolution of API Technologies: From the Cloud Age and Beyond

We live in a digital economy where Application Programming Interfaces (APIs) are foundational elements for businesses to operate and grow. As rightly outlined by Gartner, APIs interconnect individual systems that contain data about people, businesses and things, enable transactions, and create new products/services and business models.

The popularity of APIs has grown significantly in the last decade or so, but the history of APIs stretches back much further. (Check out our eBooks on the evolution of APIs and APIs in the cloud age for a deeper dive on this subject.)

Modern APIs for Cloud Connectivity

Cloud computing has revolutionized API development and deployment. Cloud vendors offer various cloud services through API endpoints. These API endpoints can be accessed through the browser, command-line interface (CLI) tools and SDKs.

Polycloud strategy

The future of software is distributed. With most organizations on the planet on some sort of cloud journey, the desire for rapid, cost-effective innovation cycles is driving them all to consider the best of what each cloud platform has to offer.

What is polycloud?

This "polycloud" approach to the innovation lifecycle means that complete applications, which once resided in a single cloud platform of choice, now span multiple (and sometimes all) public cloud platforms.

Advantages of polycloud

The benefits of a polycloud approach include the ability to take advantage of the best-of-breed capabilities across the cloud platforms. Polycloud also allows organizations to embrace a broader set of developers, skills, languages, and tools to deliver better digital outcomes.

Docker and Kubernetes are good examples of technologies that have made moving to, and across, polycloud much easier and lower risk.

Polycloud challenges

Polycloud is not without its challenges, however. One aspect that becomes more difficult with polycloud is maintaining consistent discoverability, management, security, and governance across what is a highly distributed IT substrate.

Kong helps organizations that are accelerating their cloud journey and embracing polycloud footprints to stitch together, manage, secure, and observe the numerous application components that make up these new distributed applications. This spans the APIs controlling access into, out of, and between these applications, as well as the microservices of which they're composed. Broad service connectivity becomes the fabric that allows software to remain distributed while staying secure and easy to manage and control.

Common Types of APIs

In the cloud age, the most common architectures for APIs are RESTful APIs, gRPC and GraphQL. We will discuss each of these API types in brief in the sections below.

RESTful APIs

Representational State Transfer (REST) arrived over 20 years ago and was broadly adopted by developers who found SOAP cumbersome to use. APIs that adhere to REST are known as RESTful APIs. To be RESTful, an API must satisfy the following constraints:

- Uniform Interface: The uniform interface defines how the client and the server interact. Each resource exposed by the API must be identifiable by a URI that the client can call. The API should return a uniform representation of the resource (such as JSON or XML) to the client, and that representation must contain enough information for the client to modify or delete the resource on the server.

- Client-server: The client and the server are independent of each other and unaware of one another's implementation details.

- Stateless: The API server does not store any session or state details between requests; each client request must carry all the information needed to process it.

- Cacheable: Responses must indicate whether they can be cached. The client can cache a response from the API, and the API server adds caching headers (such as Expires) to its response, letting the client know whether the cached data is still valid or stale.

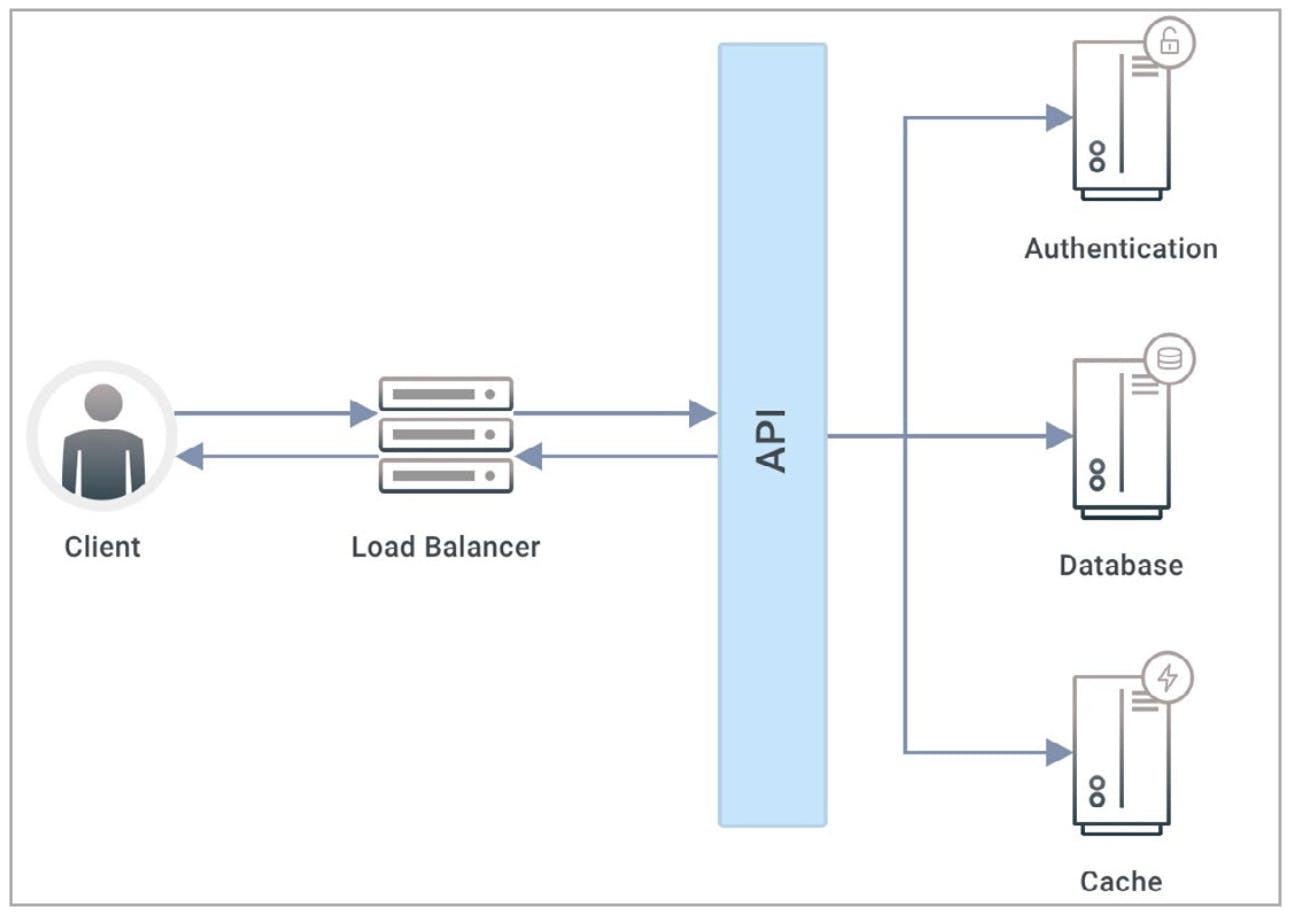

- Layered system: The client is unaware if it is directly connected to the API server or if it is going through multiple layers of applications (such as load balancing, authentication, transformation).

Figure: Layered system

- Code-on-demand (optional): Allows a REST API endpoint to return application code (such as JavaScript) to the client.

Although REST does not mandate a message format and RESTful APIs can use several (such as HTML, YAML and XML), RESTful APIs predominantly use JSON, a text format that represents data as objects of key-value pairs, arrays and primitive values.
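To make the uniform interface, statelessness and cacheability constraints more concrete, here is a minimal, framework-free sketch of a handler for a hypothetical `/books/{id}` resource. The in-memory `BOOKS` store, the `handle` function and the 60-second cache lifetime are illustrative assumptions, not part of any real API.

```python
import json
from email.utils import formatdate
from typing import Dict, Tuple

# Hypothetical in-memory store standing in for a real database.
BOOKS: Dict[str, dict] = {"1": {"id": "1", "title": "Example Book"}}

Response = Tuple[int, Dict[str, str], str]


def handle(method: str, path: str, now: float) -> Response:
    """Stateless handler: the response depends only on the request and the store,
    never on a per-client session kept between calls."""
    parts = path.strip("/").split("/")  # "/books/1" -> ["books", "1"]
    if len(parts) != 2 or parts[0] != "books" or parts[1] not in BOOKS:
        return 404, {"Content-Type": "application/json"}, json.dumps({"error": "not found"})
    book = BOOKS[parts[1]]
    if method == "GET":
        # Uniform interface: a JSON representation plus a link identifying the resource
        body = dict(book, links={"self": f"/books/{book['id']}"})
        headers = {
            "Content-Type": "application/json",
            # Cacheable: tell clients how long this representation stays fresh
            "Cache-Control": "max-age=60",
            "Expires": formatdate(now + 60, usegmt=True),
        }
        return 200, headers, json.dumps(body)
    if method == "DELETE":
        del BOOKS[book["id"]]
        return 204, {}, ""
    return 405, {"Allow": "GET, DELETE"}, ""


status, headers, body = handle("GET", "/books/1", now=0)
print(status, headers["Expires"])  # 200 Thu, 01 Jan 1970 00:01:00 GMT
```

In a real service, a web framework (or a gateway in front of it) would map HTTP requests onto a function like this. When both headers are present, HTTP/1.1 caches give `Cache-Control: max-age` precedence over `Expires`.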

OpenAPI

OpenAPI is a formal specification for defining the structure and syntax of a RESTful API. An OpenAPI document describing an API is both human- and machine-readable, which yields the following benefits:

- Portable format

- Increases collaboration between development teams

- Enables automated application development by code-generators

- Helps with automated test case generation
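Because an OpenAPI document is machine-readable, tools can walk it to generate code or tests. The sketch below expresses a small OpenAPI 3.0 document for a hypothetical `/books` API as a Python dict (in practice it would be YAML or JSON) and derives one test-case name per documented response. The `generate_test_cases` helper is illustrative only; real generators produce executable tests.

```python
from typing import List

# A minimal OpenAPI 3.0 document for a hypothetical Books API.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Books API", "version": "1.0.0"},
    "paths": {
        "/books": {
            "get": {"operationId": "listBooks", "responses": {"200": {"description": "A list of books"}}},
            "post": {"operationId": "createBook", "responses": {"201": {"description": "Book created"}}},
        },
        "/books/{bookId}": {
            "get": {
                "operationId": "getBook",
                "parameters": [{"name": "bookId", "in": "path", "required": True, "schema": {"type": "string"}}],
                "responses": {"200": {"description": "A single book"}, "404": {"description": "Not found"}},
            }
        },
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def generate_test_cases(spec: dict) -> List[str]:
    """Derive one test-case name per documented (operation, status code) pair."""
    cases = []
    for path, item in spec["paths"].items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            for status in operation["responses"]:
                cases.append(f"{operation['operationId']}: {method.upper()} {path} -> {status}")
    return cases


for case in generate_test_cases(SPEC):
    print(case)
```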

gRPC

Google introduced another framework for APIs called gRPC, which uses HTTP/2 for transport and, by default, Protocol Buffers for defining services and serializing messages. A gRPC client can call a service method on a gRPC server directly, as if it were a local function. The gRPC server implements the service interface (its methods, their parameters and their return types) and answers client calls.

In our eBook "The Evolution of APIs: From RPC to SOAP and XML," we discussed how Remote Procedure Call (RPC) was one of the earliest means of communication between applications running on remote machines. gRPC is a framework for creating RPC-based APIs that takes the RPC model a step further by building it on HTTP/2.

Compared to RESTful APIs, gRPC offers benefits that include smaller message sizes, faster communication and streaming connections (client streaming, server streaming and bidirectional streaming).
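Part of gRPC's size advantage comes from the compact binary encoding of Protocol Buffers. The sketch below hand-encodes one message using two rules of the protobuf wire format (base-128 varints and length-delimited strings) and compares the result with the equivalent JSON. The `User` message and its field numbers are hypothetical, and real code would use classes generated by `protoc` rather than a hand-rolled encoder.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_int_field(field_number: int, value: int) -> bytes:
    # Wire type 0 (varint); the key is (field_number << 3) | wire_type
    return encode_varint((field_number << 3) | 0) + encode_varint(value)


def encode_string_field(field_number: int, value: str) -> bytes:
    # Wire type 2 (length-delimited): key, byte length, then UTF-8 bytes
    data = value.encode("utf-8")
    return encode_varint((field_number << 3) | 2) + encode_varint(len(data)) + data


# Hypothetical schema: message User { int32 id = 1; string name = 2; }
user = {"id": 150, "name": "Ada"}
binary = encode_int_field(1, user["id"]) + encode_string_field(2, user["name"])
text = json.dumps(user).encode("utf-8")
print(len(binary), len(text))  # 8 26
```

Only field numbers travel on the wire, never field names, which is one reason the binary form is smaller. The trade-off is that both sides need the shared `.proto` schema to decode a message.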

GraphQL

GraphQL is a query language and runtime that allows clients to query an API and get back exactly the data they need. With a RESTful API, clients often make multiple calls to different endpoints, with different parameters appended to the URL. In contrast, GraphQL allows developers to write a single query that fetches all the needed data, even from multiple sources, in one call.
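As a sketch of the "single call" idea, the snippet below builds (but does not send) one HTTP POST carrying a GraphQL query, following the common convention of a JSON body with `query` and `variables` keys. The endpoint URL and the `user`/`orders` schema are hypothetical; a RESTful design might need one request for the user and another for the orders.

```python
import json
import urllib.request

# Hypothetical endpoint; substitute a real GraphQL server URL.
GRAPHQL_URL = "https://api.example.com/graphql"

# One query asks for exactly the fields the client needs, across related data.
QUERY = """
query UserWithOrders($id: ID!) {
  user(id: $id) {
    name
    orders(last: 3) {
      id
      total
    }
  }
}
"""


def build_graphql_request(query: str, variables: dict) -> urllib.request.Request:
    """Build a single POST request carrying a GraphQL query and its variables."""
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    return urllib.request.Request(
        GRAPHQL_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_graphql_request(QUERY, {"id": "42"})
# urllib.request.urlopen(req) would send it; the JSON response mirrors the
# query's shape, e.g. {"data": {"user": {"name": ..., "orders": [...]}}}
```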

Loosely Coupled APIs

As APIs came to function as standalone pieces of software that do not depend on the rest of an application's functionality, API design evolved toward loose coupling. This approach ensures that API services can be redesigned, rewritten and redeployed without the risk of breaking other services. Strategies for making an API service loosely coupled include:

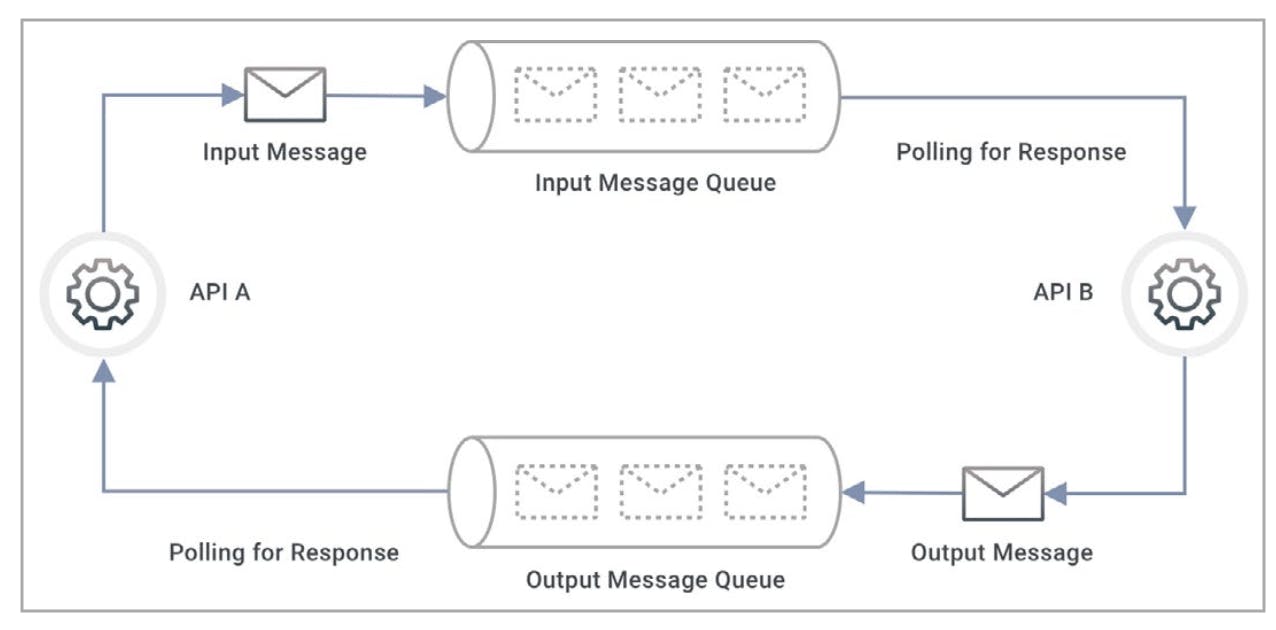

- Employ the use of message queues, which are software components that sit between two applications and help one application communicate with the other asynchronously. For example, API A can send its request to a message queue and then continue with its work. Meanwhile, API B polls the message queue periodically for messages. When it finds the message from API A, API B performs the requested function. Similarly, API B sends the function result to the message queue, and API A can retrieve that result at a later time.

Figure: Two Loosely Coupled APIs Using Message Queues

- Delegate the integration between APIs to an API middleware, which ensures the APIs can talk to one another by facilitating aspects such as connectivity logic, translation between message formats and protocols, and authentication/authorization.

- Build fine-grained APIs. In a coarse-grained design, an application's functionality is spread across only a few large APIs. These APIs can instead be broken down further, so that each smaller API performs only a single function. Smaller APIs are easier to develop, test, manage, deploy and upgrade.
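The message-queue strategy can be sketched in-process with Python's standard `queue` and `threading` modules. Here `queue.Queue` stands in for a real message broker, and the "API A" and "API B" roles (including the summing task) are hypothetical.

```python
import queue
import threading

requests_q: queue.Queue = queue.Queue()  # API A -> API B
results_q: queue.Queue = queue.Queue()   # API B -> API A


def api_b(stop: threading.Event) -> None:
    """Hypothetical 'API B': polls for requests and posts results back."""
    while not stop.is_set():
        try:
            message = requests_q.get(timeout=0.1)  # poll with a short timeout
        except queue.Empty:
            continue
        result = {"request_id": message["request_id"], "total": sum(message["items"])}
        results_q.put(result)


stop = threading.Event()
worker = threading.Thread(target=api_b, args=(stop,), daemon=True)
worker.start()

# 'API A' enqueues its request and does not wait for API B to be available.
requests_q.put({"request_id": 1, "items": [3, 4, 5]})

# ...later, API A collects the result from the results queue.
result = results_q.get(timeout=2)
stop.set()
worker.join()
print(result)  # {'request_id': 1, 'total': 12}
```

Neither side calls the other directly; each only knows about the queues, so either API can be redeployed or rewritten without the other noticing, as long as the message format stays the same.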

Microservices

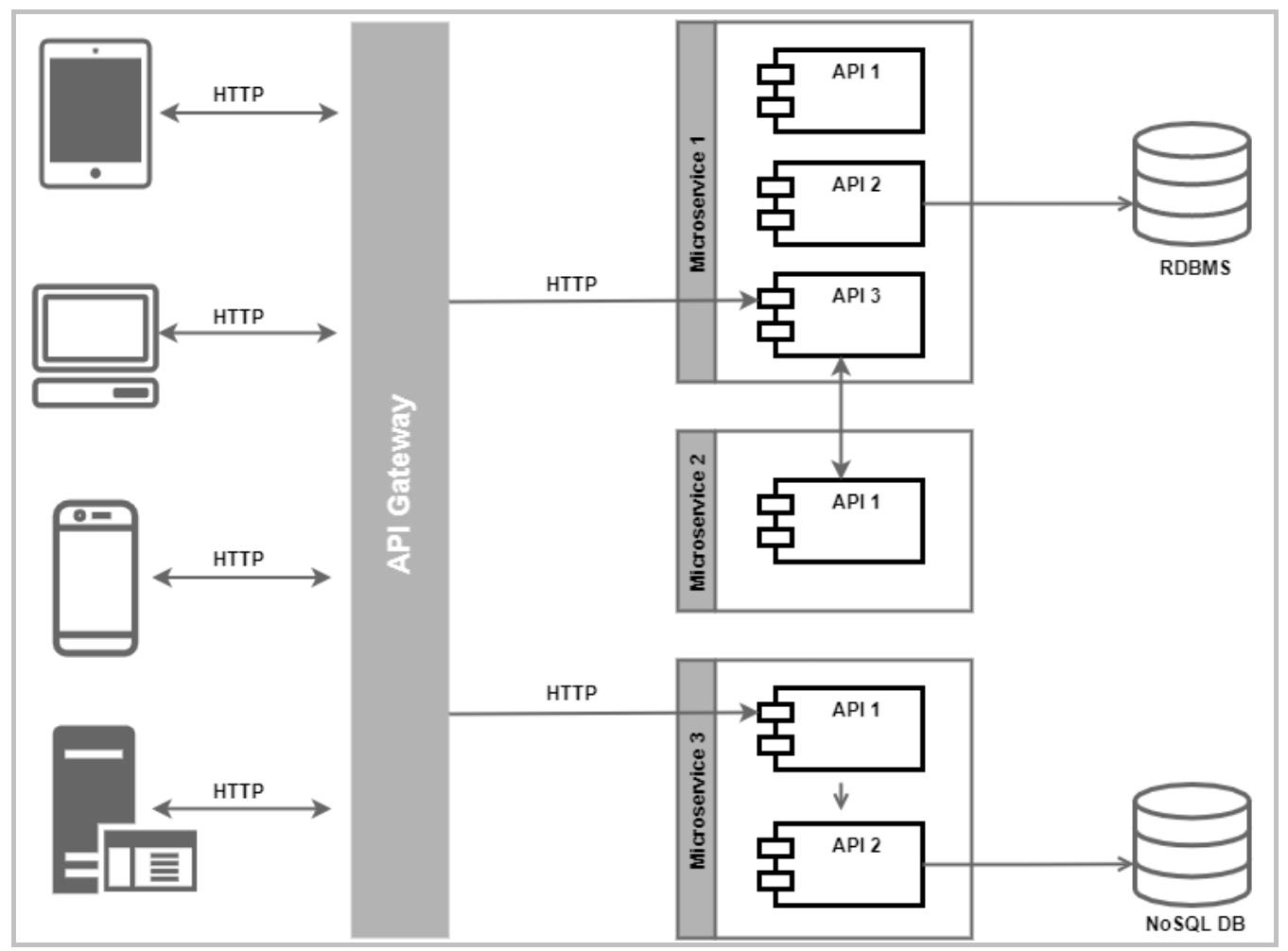

Microservices allow a complex application to be broken down into small, independent "services." Microservices can be written in any language and deployed anywhere, and their functionalities are exposed as APIs. Callers of those APIs might be end-user clients or even other microservices.

Figure: Example of a Microservice Architecture

What makes microservices unique is that they are loosely coupled and independent. In other words, you can change the code and internal workings of the API within one microservice without touching the rest of the application. Because microservices are loosely coupled, a single microservice experiencing a spike in load or a failure need not bring down the entire application.

Serverless Functions

A serverless function is a standalone piece of code that a cloud provider runs in its managed environment, so that the customer (the developer) does not have to worry about infrastructure or scaling. As far as the developer is concerned, there's no server involved; it's serverless.
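For illustration, here is a minimal function written in the style of an AWS Lambda Python handler sitting behind an Amazon API Gateway proxy integration. That platform choice is an assumption made for this sketch; other providers use different entry-point signatures and event shapes. The developer supplies only the function, and the provider handles provisioning and scaling.

```python
import json


def lambda_handler(event, context):
    """Greets the caller; assumes an API Gateway proxy-style event."""
    # API Gateway passes None rather than {} when there is no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-built event; in the cloud, the platform calls the handler.
response = lambda_handler({"queryStringParameters": {"name": "Kong"}}, None)
print(response["body"])  # {"message": "Hello, Kong!"}
```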

Service Mesh

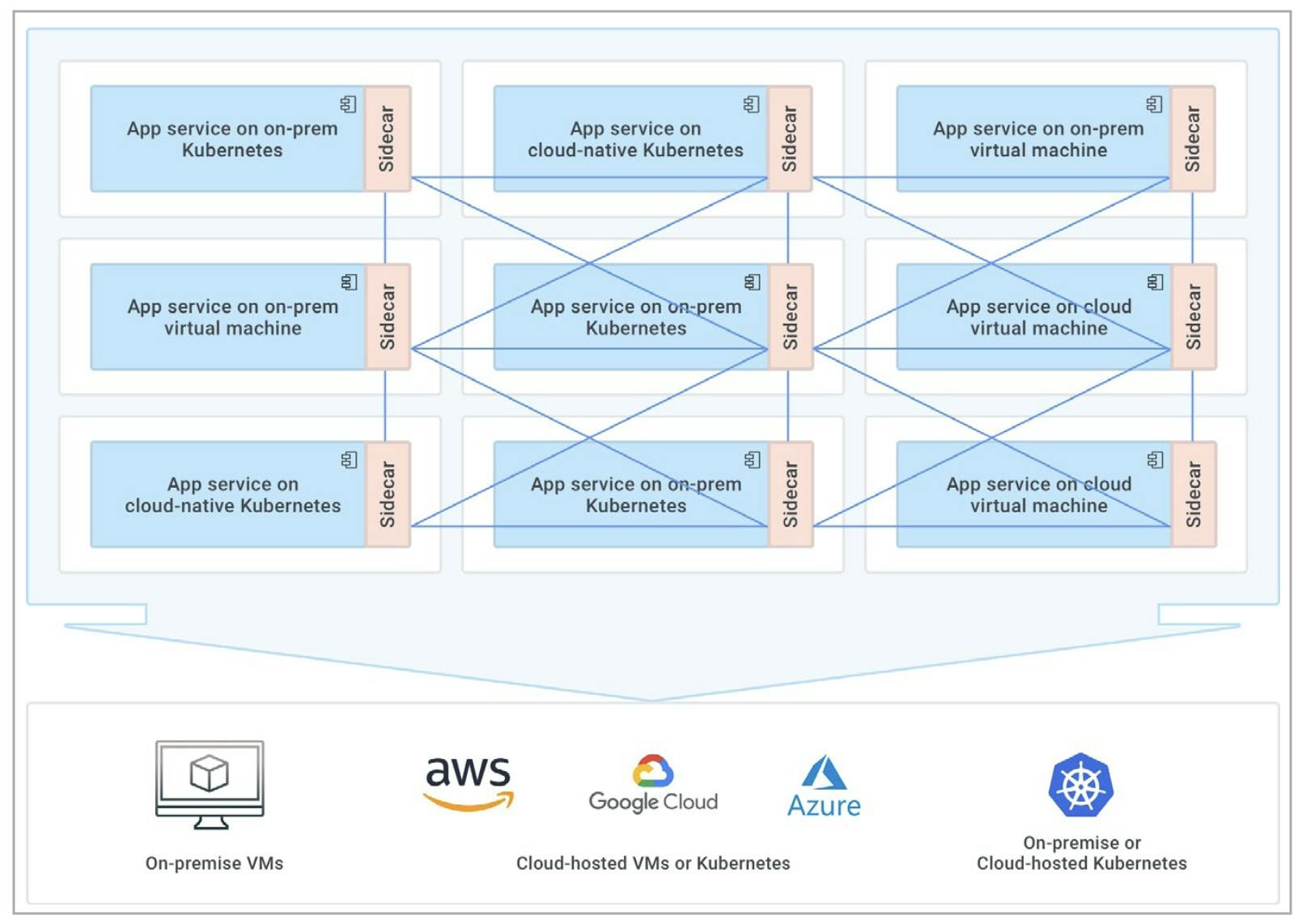

An application can comprise microservices running on physical machines, on virtual servers both on-premises and in the cloud, in Docker containers inside Kubernetes pods, or as serverless functions. To communicate with one another, these microservices might connect at the network level using direct links, VPNs and trusted virtual private clouds (VPCs). The service mesh exists to manage the resulting complexities of network performance, discoverability and connectivity.

A service mesh is a dedicated infrastructure layer built into an application to enable its microservices to communicate using proxies. A service mesh takes the service-to-service communication logic from the microservice's code and moves it to its network proxy.

The proxy runs alongside each service instance in the same infrastructure layer (for example, as an extra container in the same Kubernetes pod) and handles message routing to other services. This proxy is often called a sidecar because it runs side-by-side with the service. The interconnected sidecar proxies of many microservices form the mesh.

Figure: A Service Mesh Running on Hybrid Cloud

The service mesh offers many advantages to microservices, including observability, secure connections and automated failover.
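To illustrate how communication logic can move out of service code, here is a toy, in-process "sidecar" that resolves a logical service name to its instances, fails over automatically when an instance is unreachable, and records each attempt for observability. Real meshes run this logic in a separate network proxy rather than a Python object; the `Sidecar` class and the fake `orders` instances are purely hypothetical.

```python
from typing import Callable, Dict, List, Optional

Endpoint = Callable[[dict], dict]


class Sidecar:
    """Toy sidecar: the service calls a logical name; the proxy handles routing and failover."""

    def __init__(self, registry: Dict[str, List[Endpoint]]) -> None:
        self.registry = registry  # logical service name -> known instances
        self.log: List[str] = []  # minimal observability trail

    def call(self, service: str, request: dict) -> dict:
        last_error: Optional[Exception] = None
        for index, instance in enumerate(self.registry.get(service, [])):
            try:
                response = instance(request)
            except ConnectionError as err:
                self.log.append(f"{service}[{index}] failed: {err}")
                last_error = err
                continue  # automated failover: try the next instance
            self.log.append(f"{service}[{index}] ok")
            return response
        raise RuntimeError(f"no healthy instance of {service}") from last_error


# Hypothetical instances of an "orders" service; the first one is down.
def orders_instance_down(request: dict) -> dict:
    raise ConnectionError("connection refused")


def orders_instance_up(request: dict) -> dict:
    return {"status": 200, "order_id": request["order_id"]}


mesh = Sidecar({"orders": [orders_instance_down, orders_instance_up]})
print(mesh.call("orders", {"order_id": 7}))  # {'status': 200, 'order_id': 7}
print(mesh.log)  # ['orders[0] failed: connection refused', 'orders[1] ok']
```

The calling service never handles retries or instance addresses itself. That separation is what lets a mesh add observability, secure connections and failover without changing service code.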

Future possibilities of APIs

The cloud age introduced the idea of an "API economy": the business practice of organizations exposing their digital services or information through the controlled use of APIs.

While the API economy looks attractive today, we believe it will become even stronger in the future. According to Gartner, by 2023, 65% of global infrastructure service providers' revenue will be generated through services enabled by APIs, up from 15% in 2018. Over the last 10 years, APIs have played a significant role in the growth of fintech, artificial intelligence (AI), blockchain, Internet of Things (IoT) and cybersecurity.

The Emergence of Web 3.0

Web 3.0 (originally called the "Semantic Web" by Tim Berners-Lee) is envisioned as the web of tomorrow. It is expected to be based on decentralized networks and protocols. Because a website would be served by a distributed network of nodes rather than a single server, it would have no single point of failure. In Web 3.0, blockchain is expected to become ubiquitous, with no single entity holding authority over information.

The web we know today will become more accessible than ever through internet-ready smart devices that can search, consume, process and share information just as phones and laptops do today.

Searching, consuming and sharing information and content will be based on semantics (meanings) rather than exact keywords, making Web 3.0 heavily dependent on AI to understand and predict the intentions of humans.

What will APIs look like in Web 3.0? Most likely, they will be event-driven.

Event-Driven APIs

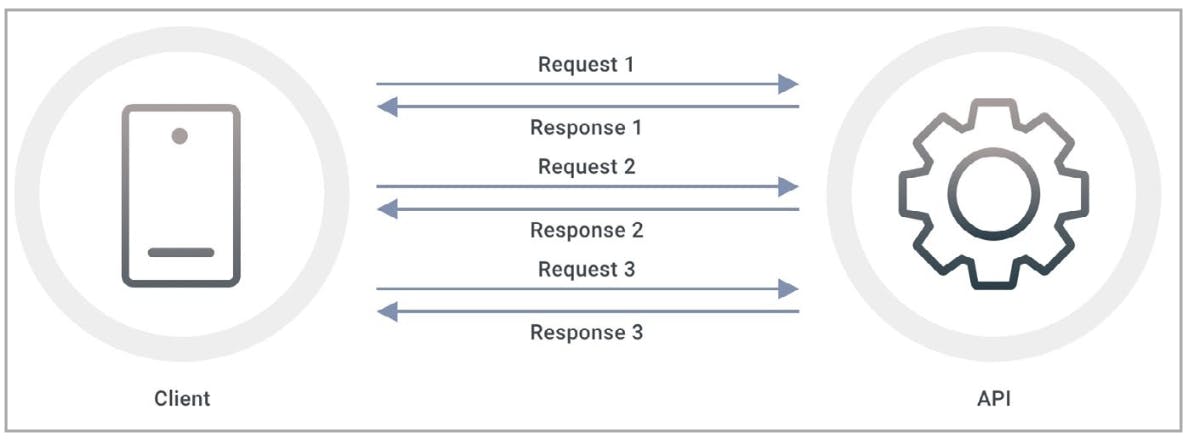

The traditional approach to consuming an API has been request-response. Applications send an API query, and the API sends back a result.

Figure: Traditional API Access with Request and Response

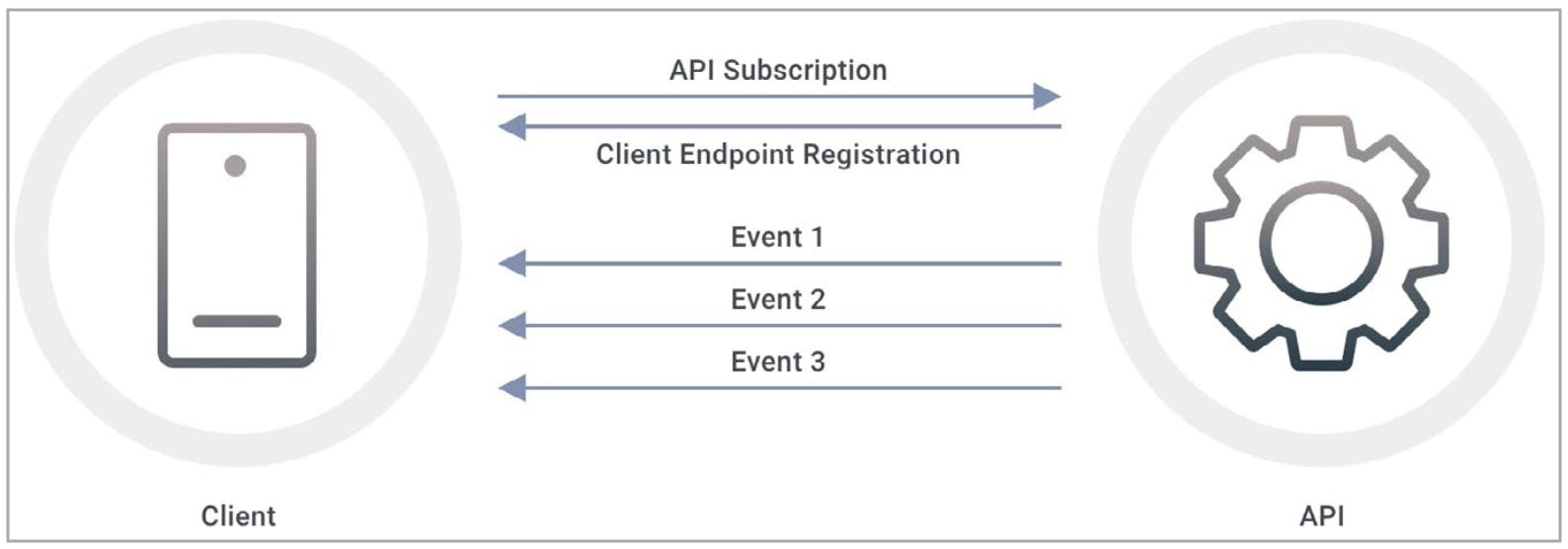

In Web 3.0, event-driven APIs will send data when an event occurs. A consumer subscribes to an API endpoint, indicating that it wishes to receive updates asynchronously when particular events happen. When a matching event happens, the API sends the event's data to all subscribed consumers.

Figure: Event-Driven API with Event Push
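The subscribe-and-push model can be sketched as a minimal in-process publish/subscribe hub. In a real event-driven API, the subscribers would be remote consumers reached over the network; the `EventBroker` class and the `order.shipped` event type here are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class EventBroker:
    """Minimal publish/subscribe hub (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register interest in one event type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, data: dict) -> int:
        """Push the event to every subscriber of that type; return how many were notified."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(data)
        return len(handlers)


received: List[dict] = []
broker = EventBroker()
broker.subscribe("order.shipped", received.append)
broker.publish("order.shipped", {"orderId": 42})
broker.publish("order.cancelled", {"orderId": 7})  # no subscribers: nothing delivered
print(received)  # [{'orderId': 42}]
```

Unlike request-response, the consumer never asks for the data. It registers once, and the producer decides when to push.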

There are several approaches to building event-driven APIs, including webhooks, WebSockets and server-sent events (SSE).
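Of the approaches listed above, server-sent events have a particularly simple wire format. The server keeps an HTTP response open with the `text/event-stream` content type and writes messages made of `field: value` lines, with each message ending in a blank line. This sketch formats a single message; the `format_sse` name and the `order.shipped` event are hypothetical.

```python
import json
from typing import Optional


def format_sse(data: dict, event: Optional[str] = None, event_id: Optional[str] = None) -> str:
    """Serialize one server-sent events message (text/event-stream framing)."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")  # lets a reconnecting client resume
    if event is not None:
        lines.append(f"event: {event}")  # named event type for the client to listen for
    # json.dumps without indent emits no raw newlines, so one data: line suffices
    lines.append(f"data: {json.dumps(data)}")
    return "\n".join(lines) + "\n\n"  # a blank line terminates the message


print(format_sse({"orderId": 42, "status": "shipped"}, event="order.shipped", event_id="1"), end="")
```

In a browser, an `EventSource` object pointed at such an endpoint would receive each message as it is written, without polling.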

Blockchain

Blockchain is a distributed ledger of transactions based on trust and verification. Each transaction in the blockchain is immutable and is open to all nodes in the network.
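The immutability described above rests largely on hash chaining: each block stores the hash of its predecessor, so altering an earlier transaction breaks the link to every later block. This is a minimal sketch of that one idea using `hashlib`. It deliberately omits consensus, digital signatures and peer-to-peer replication, and the transactions are made up.

```python
import hashlib
import json
from typing import List


def block_hash(block: dict) -> str:
    # Hash a canonical JSON encoding so key order does not change the digest
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_block(chain: List[dict], transaction: dict) -> None:
    # The first block points at an all-zero "genesis" hash
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "tx": transaction})


def verify(chain: List[dict]) -> bool:
    # Every block must reference the current hash of the block before it
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))


ledger: List[dict] = []
append_block(ledger, {"from": "alice", "to": "bob", "amount": 5})
append_block(ledger, {"from": "bob", "to": "carol", "amount": 2})
append_block(ledger, {"from": "carol", "to": "dave", "amount": 1})
print(verify(ledger))  # True

ledger[1]["tx"]["amount"] = 500  # tamper with a past transaction
print(verify(ledger))  # False: block 2 no longer matches block 1's hash
```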

APIs are making a significant impact on smart contracts, which are programs stored on a blockchain that run automatically to enforce the terms of an agreement in real time. The agreement can be anything from an exchange of information to an e-commerce purchase.

At times, a smart contract needs to access data and functionality outside the blockchain. Blockchains rely on an oracle to query, verify and authenticate external data sources, but the oracle could become a single point of failure. In the future, smart APIs running within the blockchain could become a possibility.

Artificial Intelligence

Cloud providers now offer several advanced AI-enabled APIs for developing cognitive applications like natural language processing (NLP), face recognition and video analysis. Using these services' APIs significantly reduces development time as the cloud product does most of the heavy lifting.

AI can also be used for automated documentation of APIs and monitoring of API security threats or optimization opportunities.

IoT

APIs for the Internet of Things (IoT) are the glue between heterogeneous devices and the applications that use them. Typical examples of IoT APIs can be seen in app-controlled devices like personal fitness trackers, lights, alarms and more. These devices capture information and send it over the internet to the manufacturer's backend system.

Preparing for the Future

What are some areas in which the development community ought to prepare for the next big change in the world of APIs?

- API Development Practices: With tools now available to help developers design, build, test and publish APIs, development practices are improving remarkably. Two areas seeing particular impact are API linting (Insomnia is one example of a tool that supports it) and APIOps.

- Enhancing API Performance: Enterprises need to ensure their APIs can stand up to the increased demand of Web 3.0. Techniques for improving responsiveness include more efficient caching, the use of connection pooling, limiting or compressing response data, and processing requests asynchronously or in batches.

- Securing APIs: Because APIs are also the target of malicious attacks, organizations should become familiar with the API Security Project from the Open Web Application Security Project (OWASP), which lists the top security risks for APIs along with mitigation strategies. Everyone involved in the design, development, testing and deployment of APIs should be part of the security initiative. Learn more about how Kong helps businesses future-proof API security and scalability.

- Environment independence: APIs must be adaptable to all sorts of environments (such as bare metal, VMs and Kubernetes clusters) and within all sorts of deployment architectures (such as multi-cloud or hybrid-cloud). APIs can be made loosely coupled and asynchronous but still require a robust and intelligent communication and routing mechanism such as a service mesh like Kong Mesh.
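To make API linting concrete, here is a tiny linter that checks an OpenAPI document (as a Python dict) against a few house-style rules: kebab-case path segments, plus an `operationId` and a `summary` on every operation. These rules and the `lint` function are hypothetical. Dedicated linters ship far richer, configurable rulesets and can run in a CI/CD pipeline as part of an APIOps workflow.

```python
import re
from typing import List

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}
# A path segment is either kebab-case text or a {templateParameter}
SEGMENT = re.compile(r"^(?:[a-z0-9]+(?:-[a-z0-9]+)*|\{[A-Za-z0-9_]+\})$")


def lint(spec: dict) -> List[str]:
    """Return a list of style problems found in an OpenAPI document."""
    problems = []
    for path, item in spec.get("paths", {}).items():
        for segment in path.strip("/").split("/"):
            if segment and not SEGMENT.match(segment):
                problems.append(f"{path}: segment '{segment}' is not kebab-case")
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            label = f"{method.upper()} {path}"
            if "operationId" not in operation:
                problems.append(f"{label}: missing operationId")
            if not operation.get("summary"):
                problems.append(f"{label}: missing summary")
    return problems


spec = {
    "openapi": "3.0.3",
    "info": {"title": "Accounts API", "version": "1.0.0"},
    "paths": {
        "/user_accounts": {
            "get": {"summary": "List accounts", "responses": {"200": {"description": "OK"}}}
        },
        "/orders/{orderId}": {
            "get": {
                "operationId": "getOrder",
                "summary": "Get one order",
                "responses": {"200": {"description": "OK"}},
            }
        },
    },
}
for problem in lint(spec):
    print(problem)
```

Running the linter on every commit catches inconsistencies before an API is published, which is the kind of automation APIOps relies on.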

The need for flexible, scalable cloud native solutions

Kong is well suited to all of the above areas and doesn't hold organizations back from embracing APIs in the cloud age.

As the world's most popular API Gateway, Kong is flexible to run in nearly any environment or architecture a project requires. Both configuration and deployment of Kong support automation with common CI/CD tooling, thus enabling the all-important speed in development, scaling, and recovery.

Automation enables elasticity as demand for APIs ebbs and flows. Independent of scaling is raw performance, expressed in latency and throughput. Once again, Kong does very well here.

While the merits of APIs remain unchanged in the cloud age, the tooling for APIs must be cloud native. This is one of the reasons Kong is superior to legacy tools like MuleSoft. See how Kong delivers more speed, scale, and value in GigaOM's report on Kong vs MuleSoft Anypoint Platform.

Talk to one of Kong’s API experts to learn more about tapping into API-driven innovation.
