What are Virtual Machines (VMs)?

A virtual machine (VM) is a fully fledged, standalone operating environment running on a physical computer. Unlike the host computer it runs on, a VM is not a physical machine, hence the name "virtual". It does, however, have all the components of a physical computer system (CPU, RAM, disk, networking, and operating system), encapsulated in one or more files. As a result, it can process data just like any other computer, and users can, for all intents and purposes, access it as they would a physical machine, while the physical host provides the underlying hardware resources.

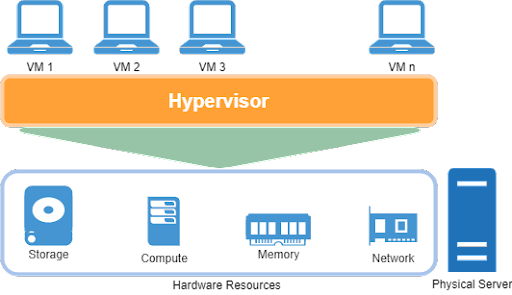

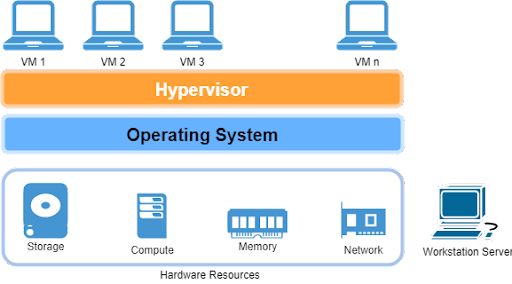

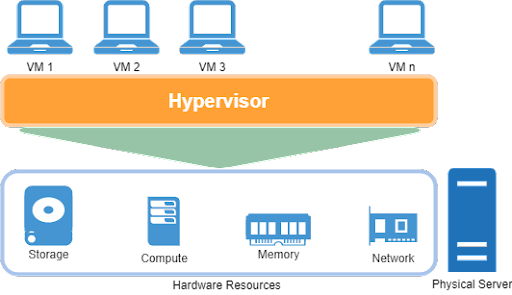

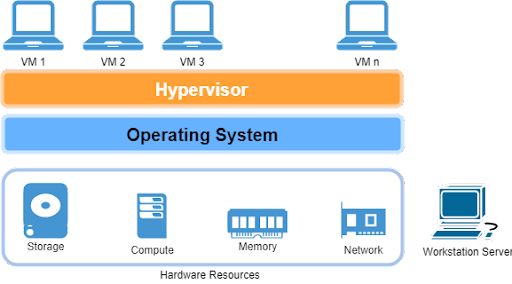

However, VMs can't access these hardware resources directly. There's a layer of abstraction between a VM and its physical host, called a hypervisor. A hypervisor is a specialized software program that runs on the physical host, interacts with both the host machine and the VM, and presents an abstracted view of the host computer's resources to the VM. The VM, in turn, treats the resources presented by the hypervisor as if they came from a physical machine.

It's typical to run multiple VMs on the same machine, so VMs share the CPU, RAM, disk capacity, and network bandwidth of the host computer. However, each VM operates only with the resources presented to it and is unaware of other VMs using the same physical resources. This isolation is designed to prevent a VM from changing the underlying settings of the host and, therefore, from affecting other VMs.
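
To make the sharing-and-isolation idea concrete, here's a toy Python model of a host handing out slices of its capacity. It's a sketch under simplifying assumptions: the `Host` and `VirtualMachine` names are hypothetical, and unlike many real hypervisors, it never overcommits CPU or memory. The point is that each guest's view contains only its own allocation.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    name: str
    vcpus: int
    ram_gb: int


@dataclass
class Host:
    """Toy hypervisor host with fixed physical capacity (no overcommit)."""
    cpus: int
    ram_gb: int
    vms: list = field(default_factory=list)

    def free(self):
        # Capacity not yet presented to any VM.
        used_cpu = sum(vm.vcpus for vm in self.vms)
        used_ram = sum(vm.ram_gb for vm in self.vms)
        return self.cpus - used_cpu, self.ram_gb - used_ram

    def create_vm(self, name, vcpus, ram_gb):
        # Refuse to create a VM that would exceed the host's remaining capacity.
        free_cpu, free_ram = self.free()
        if vcpus > free_cpu or ram_gb > free_ram:
            raise RuntimeError(f"not enough capacity for {name}")
        vm = VirtualMachine(name, vcpus, ram_gb)
        self.vms.append(vm)
        return vm

    def guest_view(self, vm):
        # A guest sees only its own allocation, not the host total or other VMs.
        return {"cpus": vm.vcpus, "ram_gb": vm.ram_gb}


host = Host(cpus=16, ram_gb=64)
web = host.create_vm("web", vcpus=4, ram_gb=16)
db = host.create_vm("db", vcpus=8, ram_gb=32)
print(host.free())            # (4, 16)
print(host.guest_view(web))   # {'cpus': 4, 'ram_gb': 16}
```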

Since a VM is stored as a set of files, it can be snapshotted, replicated, copied to other physical machines, or deleted.

Virtualization Types

Depending on the type of hypervisor, there are two types of virtualization:

Type-1, or bare-metal, virtualization involves running a hypervisor directly on the server hardware as standalone software. In this case, the hypervisor acts as a specialized operating system for the physical host. This is the case in most corporate virtualization scenarios. Examples of type-1 hypervisors include VMware ESXi, Citrix XenServer, KVM (used by Red Hat's virtualization platforms), and Microsoft Hyper-V.

Type-2, or hosted, virtualization involves running a hypervisor on top of an already running operating system (OS). In this case, there are two levels of abstraction for the VM: one from the hypervisor and the other from the OS of the physical computer. The OS abstracts the hardware resources to the hypervisor, and the hypervisor then abstracts them to the VM. Typical examples of type-2 virtualization are VirtualBox or VMware Workstation Player running on a Windows laptop.

Virtual Machine Use Cases

Enterprises began adopting virtualization technologies widely in the mid-2000s, as companies like VMware demonstrated the simplicity and cost savings they offered. This ultimately paved the way for an even more revolutionary change: the cloud. Today, some of the main reasons organizations use VMs include:

- Running different types of workloads on the same physical server in an isolated way.

- Improving the resource utilization of physical servers, thereby saving space and hardware costs.

- Giving engineers their own development environments on their local workstations.

- Creating sandboxes for security, for testing unknown or new applications, or for analyzing malware.

- Developing and testing Standard Operating Environments (SOEs) for both workstations and servers.

- Creating snapshots for disaster recovery and backup purposes.

Emerging VM Use Cases

As technology evolves, new use cases for virtual machines continue to emerge:

Edge Computing: VMs are being used to run applications at the edge of networks, closer to where data is generated, to reduce latency and bandwidth usage.

IoT Gateways: Virtual machines can act as gateways for Internet of Things (IoT) devices, providing a secure and manageable interface between IoT devices and the cloud.

AI and Machine Learning: VMs with GPU acceleration are being used to run complex AI and machine learning workloads, allowing for flexible resource allocation.

Blockchain Nodes: Virtual machines are often used to run blockchain nodes, providing isolation and easy management for distributed ledger technologies.

Virtual Machine Anti-Patterns

There are certain use cases for which VMs may not be the best solution, including:

- Managing large, monolithic, or legacy applications running on-premises that don't support virtualized environments.

- Using older versions of software that don't support virtualized environments.

- Using applications that require specialized hardware, such as dongles or Hardware Security Modules (HSMs), to be physically attached to the machine.

- Using software that isn't licensed for use on virtual machines.

- Running clustered applications that require precise time synchronization across all nodes, because VM clocks can drift relative to the host clock.

- Hosting data that is too sensitive for VMs running on shared (multi-tenant) hardware. These applications may run best on dedicated on-premises servers.
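
To see why clock drift matters for clustered applications, it helps to know how drift is measured. Time-sync protocols like NTP estimate a node's clock offset from four timestamps in one request/response exchange. The sketch below uses the standard NTP offset and delay formulas. The function name is hypothetical, and the formulas assume the network delay is symmetric.

```python
def ntp_offset_and_delay(t0, t1, t2, t3):
    """Estimate clock offset and round-trip delay from one NTP-style exchange.

    t0: client send time (client clock)
    t1: server receive time (server clock)
    t2: server send time (server clock)
    t3: client receive time (client clock)
    """
    # Offset: how far the server clock is ahead of the client clock,
    # assuming the network delay is the same in both directions.
    offset = ((t1 - t0) + (t2 - t3)) / 2
    # Delay: round-trip time minus the time the server spent processing.
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


# A guest VM whose clock is 50 ms behind the reference server,
# with 5 ms of network latency each way and 1 ms of server processing.
offset, delay = ntp_offset_and_delay(t0=100, t1=155, t2=156, t3=111)
print(offset, delay)  # 50.0 10
```

If nodes in a cluster disagree by tens of milliseconds and can't correct for it, anything that relies on ordering events by timestamp becomes unreliable.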

Virtual Machines in the Cloud

Virtual machines are the primary compute resource in most cloud environments. Customers can spin up, spin down, scale up, or scale down any number of VMs to run their workloads in the cloud. AWS, Azure, GCP, and DigitalOcean each offer their own VM service. In all cases, though, these VMs run on physical machines hosted in the cloud provider's data centers, and users typically pay only for the time their VMs are running.

Cloud VMs are an example of Infrastructure-as-a-Service (IaaS) and are the most recognizable form of cloud virtualization. Other cloud models also rely on VMs behind the scenes. These include Platform-as-a-Service (PaaS) and Software-as-a-Service (SaaS), as well as newer models like Database-as-a-Service (DBaaS) and Function-as-a-Service (FaaS). For example:

- Managed services like Amazon RDS, Redshift, EMR, Azure Synapse, or Google BigQuery use virtualization technologies to provision database and compute capacity and to achieve massively parallel processing (MPP).

- Serverless functions running on AWS Lambda, Azure Functions, or Google Cloud Functions still run on servers behind the scenes, and those servers are typically virtualized.

- SaaS solutions like Office 365, Google Workspace (formerly G Suite), or the Snowflake data warehouse use VMs to provide a consistent customer experience. Users just don't get to see the VMs that power these platforms.

Single and Multi-Tenant Cloud VMs

Cloud VMs run on physical machines, but these machines often host multiple customers' VMs. When spinning up a VM, there's no way to know which physical server it will be created on, nor what other VMs share that physical machine. This is the default behavior known as multi-tenancy.

Despite the controls in place to keep VMs from seeing each other, this can be an issue for security- or performance-conscious customers. For data-sensitivity or network reasons, for example, an organization might want physical servers dedicated to its VMs alone. When a cloud customer runs its VMs on a dedicated host, that host is called a single-tenant host.

Cloud vendors like AWS let you choose between single-tenant and multi-tenant placement when creating a VM. Single-tenant VMs cost more, but if your workload needs high security and isolation, they may be the better option.
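
On AWS, for example, tenancy is chosen per instance through the `Placement` parameter of the EC2 `RunInstances` API. The sketch below builds keyword arguments for boto3's `run_instances` call without contacting AWS. The helper name is hypothetical, and the AMI ID and instance type are placeholders.

```python
def build_run_instances_params(image_id, instance_type, single_tenant):
    """Build keyword arguments for boto3's EC2 run_instances call (hypothetical helper).

    Tenancy "default" runs on shared (multi-tenant) hardware; "dedicated" runs
    on hardware dedicated to a single AWS account.
    """
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        "Placement": {"Tenancy": "dedicated" if single_tenant else "default"},
    }


# Placeholder AMI ID; substitute a real image for your region.
params = build_run_instances_params("ami-12345678", "m5.large", single_tenant=True)
print(params["Placement"])  # {'Tenancy': 'dedicated'}
```

With boto3 installed and AWS credentials configured, these arguments would be passed as `boto3.client("ec2").run_instances(**params)`. EC2 also accepts a `"host"` tenancy for Dedicated Hosts, which gives more control over placement on a specific physical server.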

Advancements in Cloud VM Technologies

Recent advancements in cloud VM technologies include:

- Bare Metal Instances: Some cloud providers now offer bare metal instances, which are single-tenant physical servers that provide the performance of dedicated hardware with the flexibility of the cloud.

- Spot Instances: These use the cloud provider's unused capacity at steep discounts. Because the provider can reclaim them at short notice, they're best suited to fault-tolerant workloads.

- GPU-Enabled Instances: For AI, machine learning, and high-performance computing workloads, cloud providers offer VMs with powerful GPUs.

- ARM-based Instances: Cloud providers are increasingly offering ARM-based VMs, which can provide better price-performance for certain workloads.

- Confidential Computing: This technology uses hardware-based trusted execution environments to isolate sensitive data, providing an extra layer of security for VMs.

VMs vs Containers

Containers are a layer of abstraction above both VMs and physical machines. While a VM abstracts a complete machine, a container only abstracts an application and its dependencies.

A container is a packaged unit of a running application and all its dependencies, irrespective of where it's running. A containerized application starts as a build file (for example, a Dockerfile): a lightweight text file with instructions on which dependencies to install and how to configure them. Building it produces a container image, a standalone package containing the application and everything it needs to run. Because the image is self-contained, it's easy to port across different environments. A container runtime engine spins up containers from that image. A runtime must be available for the machine's OS (physical or virtual), and the image must match the machine's CPU architecture. When both conditions hold, the containerized application should behave the same everywhere.

Containers became popular for running microservices. In large distributed applications, each microservice performs a small, focused set of operations. Running individual services in containers can make applications more portable, fault-tolerant, efficient, and performant.

Containers are designed to be ephemeral. Anything written inside a container's own filesystem is lost when the container is removed, so persistent data is stored on external storage mounted into the container as volumes. That way, if a container crashes or is replaced, its data isn't lost. A container orchestrator like Kubernetes makes this even more efficient by automating much of the work of managing network connectivity, load balancing, and scheduling containers.

VMs and Containers: Coexistence and Integration

While containers have gained significant popularity, VMs continue to play a crucial role in modern IT infrastructures. In fact, VMs and containers are often used together in various ways:

- Containers on VMs: Many organizations run containerized applications on VMs, combining the isolation and resource management benefits of VMs with the agility and efficiency of containers.

- VM-based Kubernetes Nodes: In cloud environments, Kubernetes clusters often use VMs as worker nodes, allowing for easy scaling and management of the underlying infrastructure.

- Nested Virtualization: Some cloud providers now offer nested virtualization, allowing VMs to run within VMs, which can be useful for certain development and testing scenarios.

- VM Images with Container Runtimes: Cloud providers often offer VM images pre-configured with container runtimes and orchestration tools, streamlining the setup process for container-based workloads.

Networking Virtual Machines to Run Microservices

Just like physical computers, VMs need to be part of a network to perform most workloads. For example, a physical server may host four VMs acting as nodes of a cluster, each needing to communicate with the others. The application can only work as a cohesive whole if all four VMs can reach one another.

In fact, VMs can make up an entire functioning network. This is possible either through the networking features of the underlying hypervisor or by connecting each VM through the host's network adapter to a VLAN. Cloud VMs work this way: they're created within Virtual Private Clouds (VPCs). VPCs are virtual networks with their own IP CIDR ranges, subnets, and network ACLs. They can also enforce firewall rules through security groups.
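
Planning a VPC usually starts with carving its CIDR range into subnets. Python's standard `ipaddress` module can do this arithmetic. The `10.0.0.0/16` range below is a hypothetical example. AWS, for instance, accepts VPC CIDR blocks between /16 and /28.

```python
import ipaddress

# Hypothetical VPC address range.
vpc = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC range into /24 subnets (256 addresses each).
subnets = list(vpc.subnets(new_prefix=24))
public_subnet, private_subnet = subnets[0], subnets[1]

print(len(subnets))                                          # 256
print(public_subnet, private_subnet)                         # 10.0.0.0/24 10.0.1.0/24
print(ipaddress.ip_address("10.0.1.25") in private_subnet)   # True
```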

Using the VPC networking facility, a cloud VM can be configured to stay private, so it can't access the internet, but can communicate with other VMs within the cloud account. Or, a cloud VM can be public, where it can send traffic to and receive traffic from the internet.
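
Whether a cloud VM is private or public largely comes down to routing. In AWS, for example, a subnet counts as public when its route table sends `0.0.0.0/0` to an internet gateway. The sketch below models a route table lookup with longest-prefix match. The route tables and the `igw-example` target are hypothetical, and the sketch ignores details such as public IP assignment and NAT gateways.

```python
import ipaddress


def next_hop(route_table, destination):
    """Return the route target for a destination using longest-prefix match, or None."""
    addr = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # No matching route: traffic to this destination is dropped.
    # The most specific (longest) prefix wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Hypothetical route tables for a VPC using 10.0.0.0/16.
private_routes = {"10.0.0.0/16": "local"}
public_routes = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-example"}

print(next_hop(public_routes, "10.0.1.5"))   # local
print(next_hop(public_routes, "8.8.8.8"))    # igw-example
print(next_hop(private_routes, "8.8.8.8"))   # None
```

In both cases, traffic between VMs inside the VPC matches the more specific `local` route. Only the public table has a path to the internet.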

It's also possible to connect VMs across network boundaries. Many organizations have both an on-premises private cloud in their data center and a public cloud footprint, which is called a hybrid setup. In a hybrid setup, the on-premises and cloud networks are typically connected through a VPN or a dedicated, high-speed link such as AWS Direct Connect.

Some organizations also use multiple cloud providers to run their workloads, which is known as a multi-cloud setup. For example, an enterprise may use Azure AD for centralized authentication and configure services running in its AWS account to authenticate through it. Connectivity in a multi-cloud setup is often achieved over the public internet.

Problems when Running Microservice Applications in Hybrid and Multi-Cloud

Hybrid cloud setups let enterprises keep their on-premises virtual machines while taking advantage of the public cloud's elasticity. A purely multi-cloud setup, on the other hand, lets organizations avoid the capital and operational expenditure of a private cloud while still using the public cloud's elasticity. However, both approaches come with their own challenges, particularly when service-oriented applications span multiple networked environments.

Despite the rise of containers and container orchestrators, many organizations still run their microservices on virtual machines. When these distributed services try to communicate with one another, they face the risks associated with slow, unpredictable, or insecure networks.

Even when services are loosely coupled through message queues, there still needs to be a reliable way for them to communicate. Building highly available, intelligent routing mechanisms for service communication can be a cumbersome task. Similarly, keeping network ACLs consistent for these VMs across public and private clouds can be difficult.
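
Without a shared layer for this, each service tends to carry its own resilience code, such as retries with exponential backoff around every remote call. The sketch below shows that pattern. `call_with_retries` and the simulated `flaky_call` are hypothetical, and the per-attempt `timeout` is simply passed through to the callee, which is assumed to enforce it. Retrying is only safe for idempotent operations.

```python
import random
import time


def call_with_retries(call, attempts=3, base_delay=0.1, timeout=1.0):
    """Call `call(timeout)` up to `attempts` times with exponential backoff and jitter.

    `call` takes a timeout in seconds and either returns a result or raises an
    OSError (which includes ConnectionError and TimeoutError).
    """
    for attempt in range(attempts):
        try:
            return call(timeout)
        except OSError:
            if attempt == attempts - 1:
                raise  # Out of attempts: surface the last error.
            # Wait base_delay, 2*base_delay, 4*base_delay, ... plus random jitter.
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, delay))


# Simulated unreliable dependency: fails twice, then succeeds.
failures = {"remaining": 2}


def flaky_call(timeout):
    if failures["remaining"] > 0:
        failures["remaining"] -= 1
        raise ConnectionError("simulated network failure")
    return "ok"


print(call_with_retries(flaky_call, base_delay=0.01))  # ok
```

Multiply this by every service, language, and team, and keeping the behavior consistent across public and private clouds becomes hard to manage.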

Introducing Kuma

Kuma is an open-source CNCF service mesh originally developed by Kong. Organizations can use it with any microservice implementation, whether in cloud-native Kubernetes clusters, on legacy on-premises virtual machines, or in a hybrid mixture of the two. This is why it's described as a universal service mesh. A single Kuma control plane can also manage multiple isolated meshes, which makes it multi-tenant.

Kuma frees individual microservices from implementing their own service-to-service communication logic. This connectivity and routing logic is abstracted away into a series of software-based network proxies. Rather than communicating with one another directly, microservices send their requests through sidecar proxies, which then take care of the routing.
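
The sidecar idea can be sketched as a tiny local TCP forwarder: the application connects only to its local proxy, and the proxy relays bytes to the real upstream service. This is a toy built with Python's `asyncio`, assuming a single fixed upstream. A real mesh proxy such as Envoy adds routing, mTLS, retries, and metrics on top. The names `pipe`, `start_sidecar`, and `demo` are hypothetical.

```python
import asyncio


async def pipe(reader, writer):
    # Copy bytes in one direction until the sender closes its side.
    try:
        while data := await reader.read(4096):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def start_sidecar(upstream_host, upstream_port):
    """Start a local TCP proxy that forwards every connection to one upstream."""
    async def handle(client_reader, client_writer):
        up_reader, up_writer = await asyncio.open_connection(upstream_host, upstream_port)
        # Relay traffic in both directions at once.
        await asyncio.gather(pipe(client_reader, up_writer),
                             pipe(up_reader, client_writer))
    # Port 0 lets the OS pick a free local port.
    return await asyncio.start_server(handle, "127.0.0.1", 0)


async def demo():
    # Hypothetical upstream service: echoes one message back, then closes.
    async def echo(reader, writer):
        writer.write(await reader.read(4096))
        await writer.drain()
        writer.close()

    upstream = await asyncio.start_server(echo, "127.0.0.1", 0)
    up_port = upstream.sockets[0].getsockname()[1]
    sidecar = await start_sidecar("127.0.0.1", up_port)
    sidecar_port = sidecar.sockets[0].getsockname()[1]

    # The "application" only ever talks to its local sidecar.
    reader, writer = await asyncio.open_connection("127.0.0.1", sidecar_port)
    writer.write(b"ping")
    await writer.drain()
    reply = await reader.read(4096)
    writer.close()
    await writer.wait_closed()

    for server in (sidecar, upstream):
        server.close()
        await server.wait_closed()
    return reply


print(asyncio.run(demo()))  # b'ping'
```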

As Kuma centralizes this connectivity component, any changes to the connectivity rules can be applied from a single plane: the control plane. The control plane receives user input, creates and configures service meshes, adds services to the mesh, configures their behavior, and applies policies.
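
Kuma's control plane configures its Envoy-based proxies through Envoy's xDS APIs. The toy in-process model below doesn't reproduce that protocol. It only illustrates the one-to-many shape of the design: a policy change made once at the control plane reaches every registered data plane proxy. `ControlPlane`, `DataplaneProxy`, and the `timeout_seconds` policy are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DataplaneProxy:
    """Stand-in for a sidecar proxy that applies whatever config it receives."""
    service: str
    config: dict = field(default_factory=dict)

    def apply(self, config):
        self.config = dict(config)


@dataclass
class ControlPlane:
    """Toy control plane: stores policies and pushes them to every registered proxy."""
    policies: dict = field(default_factory=dict)
    proxies: list = field(default_factory=list)

    def register(self, proxy):
        self.proxies.append(proxy)
        proxy.apply(self.policies)  # New proxies start with the current policies.

    def set_policy(self, name, value):
        self.policies[name] = value
        # One change at the control plane reaches every data plane proxy.
        for proxy in self.proxies:
            proxy.apply(self.policies)


cp = ControlPlane()
orders, payments = DataplaneProxy("orders"), DataplaneProxy("payments")
cp.register(orders)
cp.register(payments)
cp.set_policy("timeout_seconds", 5)
print(orders.config, payments.config)  # {'timeout_seconds': 5} {'timeout_seconds': 5}
```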

Kuma is built on top of Envoy: it runs an Envoy-based sidecar proxy alongside every service instance, and that proxy processes the service's incoming and outgoing requests.

A single Kuma control plane can create and manage many data planes from an easy-to-use GUI, allowing simple management across hundreds or even thousands of services. Administrators can configure their own policies or use the bundled ones for the following purposes:

- traffic routing

- traffic permissions

- zero-trust security

- health checks

- retries

- timeouts

- traffic metrics

- traffic logs
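
Traffic permissions and zero-trust security go together: in a zero-trust model, nothing is allowed by default, and every service-to-service path must be explicitly permitted. The sketch below models that rule with hypothetical `(source, destination)` pairs and a `"*"` wildcard. Kuma's actual traffic-permission policies have their own resource format and matching rules.

```python
def is_allowed(permissions, source, destination):
    """Zero-trust style check: deny unless an explicit rule allows the pair.

    Each rule is a (source, destination) tuple; "*" matches any service.
    """
    for rule_source, rule_destination in permissions:
        if rule_source in (source, "*") and rule_destination in (destination, "*"):
            return True
    return False  # Default deny.


# Hypothetical rules: the web frontend may call orders; orders may call payments.
permissions = [("web", "orders"), ("orders", "payments")]

print(is_allowed(permissions, "web", "orders"))     # True
print(is_allowed(permissions, "web", "payments"))   # False
```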

Typical administrative tasks, like enabling mutual TLS across an entire mesh, can be done with a single policy update.

Kuma and Virtual Machines

While Kuma is often associated with containerized environments, it's also designed to manage services running on virtual machines. This makes it an excellent choice for organizations with hybrid environments or those in the process of migrating from VMs to containers.

Some key benefits of using Kuma with VMs include:

- Consistent Networking Policies: Kuma allows you to apply the same networking policies across VMs and containers, ensuring consistency in your hybrid environment.

- Enhanced Observability: Kuma provides detailed insights into service-to-service communication, even for services running on VMs.

- Gradual Migration: Organizations can use Kuma to gradually migrate services from VMs to containers, ensuring smooth transitions without disrupting communication.

- Legacy Application Support: Kuma can bring modern service mesh capabilities, such as mutual TLS, traffic policies, and observability, to legacy applications running on VMs, extending their lifespan and improving their reliability.
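
Gradual migration usually relies on weighted traffic splitting. For example, a service might send 90% of requests to the VM-based version and 10% to the new container-based one, then shift the weights over time. The sketch below shows weighted backend selection. The backend names and weights are hypothetical, and in a mesh this logic lives in the proxy's routing configuration rather than in application code.

```python
import bisect
import itertools


def pick_backend(backends, r):
    """Choose a backend by weight; `r` is a number in [0, 1), e.g. random.random().

    `backends` is a list of (name, weight) pairs; weights need not sum to 100.
    """
    names = [name for name, _ in backends]
    cumulative = list(itertools.accumulate(weight for _, weight in backends))
    # Scale r onto the cumulative weights and find the first bucket that exceeds it.
    index = bisect.bisect_right(cumulative, r * cumulative[-1])
    return names[index]


# Hypothetical split during a migration: 90% of requests stay on the VM version.
split = [("orders-vm", 90), ("orders-container", 10)]

print(pick_backend(split, 0.5))    # orders-vm
print(pick_backend(split, 0.95))   # orders-container
```

In practice, `r` would come from `random.random()` on each request. Moving the weights from 90/10 toward 0/100 completes the migration without changing any caller.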

Conclusion

As we have seen, VMs are widely used in many scenarios, from developer workstations to cloud-hosted clusters, thanks to their simplicity and cost-effectiveness. Container orchestration has taken application high availability to a new level. Even so, there's still room for improvement when distributed applications that use VMs run across hybrid or multi-cloud networks.

Using a proven, advanced service mesh control plane like Kuma can help ensure connectivity, security, scalability, performance, and seamless integration for these cross-boundary applications.

The future of virtual machines looks bright, with ongoing innovations in areas like edge computing, AI acceleration, and confidential computing. As cloud technologies continue to evolve, VMs are adapting to new roles and use cases, often working in tandem with newer technologies like containers and serverless computing.

For organizations looking to optimize their use of VMs, especially in complex, distributed environments, tools like Kuma offer powerful solutions to common challenges by providing a unified approach to service connectivity.

FAQs

Q: What is a virtual machine (VM)?

A: A virtual machine (VM) is a fully fledged, standalone operating environment running on a physical computer. It has all the components of a physical computer system (CPU, RAM, disk, networking, and operating system) encapsulated in one or more files, allowing it to process data just like any computer.

Q: What is a hypervisor?

A: A hypervisor is a specialized software program that acts as a layer of abstraction between a VM and its physical host. It runs on the physical host and interacts with both the host machine and the VM, abstracting the host computer's resources to the VM.

Q: What are some common use cases for virtual machines?

A: Common use cases for VMs include:

- Running different workloads on the same physical server

- Optimizing resource utilization

- Creating development environments

- Setting up security sandboxes

- Testing and developing standard operating environments

- Creating snapshots for disaster recovery and backup

Q: How do virtual machines differ from containers?

A: While a VM abstracts an entire machine, a container only abstracts an application and its dependencies. Containers are more lightweight and portable, but VMs provide stronger isolation and can run different operating systems on the same host.

Q: What is Kuma and how does it relate to virtual machines?

A: Kuma is an open-source service mesh that can be used with any microservice implementation, including those running on virtual machines. It manages service-to-service communication, providing benefits like traffic routing, security, and observability for applications running on VMs or containers.