Image credit: https://docs.mcp.run/blog/2024/12/18/universal-tools-for-ai/

With its recent updates to the MCP spec, Anthropic is clearly setting the stage for rapid growth in remote servers that will help address the above concerns. This is most evident in the addition of experimental support for a "Streamable HTTP" transport to replace the existing HTTP+SSE approach. The new transport eliminates the hard requirement for persistent, stateful connections and makes a stateless MCP server the default.
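To make the transport change concrete, here is a minimal Python sketch of what a single request over Streamable HTTP could look like: one self-contained JSON-RPC message sent as an HTTP POST. The endpoint URL and the helper name are hypothetical, and the sketch only builds the request rather than sending it. A real client would also perform the protocol's initialization handshake first.

```python
import json
import urllib.request

# Hypothetical endpoint, used for illustration only.
MCP_ENDPOINT = "https://mcp.example.com/mcp"


def build_tools_list_request(
    endpoint: str = MCP_ENDPOINT, request_id: int = 1
) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 request asking a server for its tools."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The client signals it can accept a plain JSON reply or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )
```

Because every request carries everything the server needs, any replica behind a load balancer can answer it, which is what makes the gateway patterns discussed later practical.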

Additionally, Anthropic recently updated the MCP spec to introduce MCP Authorization, which is based on OAuth 2.1. While this is a necessary step for remote MCP servers, there has been a lot of discussion around the issues the approach introduces, chiefly that it requires the MCP server to act as both the resource server and the authorization server.

Cracks in the architecture: Scaling challenges for remote MCP

MCP servers going remote is inevitable, but not effortless. While the shift toward remote-first MCP servers promises extensibility and reuse, it also introduces a fresh set of operational and architectural challenges that demand attention. As we move away from tightly coupled local workflows and embrace distributed composability, we must confront a number of critical issues that threaten both developer experience and system resilience.

Authentication and authorization woes

The MCP specification proposes OAuth 2.1 as a foundation for secure remote access, but its implementation details remain complex and problematic. MCP servers are expected to act as both authorization servers and resource servers. This dual responsibility breaks conventional security models and increases the risk of misconfiguration.

Unlike traditional APIs that can rely on well-established IAM patterns, MCP introduces novel identity challenges, particularly when chaining tools across nested servers with varying access policies.

One of the more subtle but critical vulnerabilities in the MCP model was recently outlined by Invariant Labs. In what they call “Tool Poisoning Attacks (TPAs),” malicious actors can inject harmful instructions directly into the metadata of MCP tools. Since these descriptions are interpreted by LLMs as natural context, a poisoned tool could quietly subvert agentic reasoning and coerce the agent into leaking sensitive data, performing unintended actions, or corrupting its decision logic.

These risks are exacerbated when MCP servers are publicly discoverable or shared across organizational boundaries, and no clear mechanism exists to verify which tools are trustworthy or to constrain which ones an agent may use.

Fragile infrastructure: High availability, load balancing, and failover

When local tools break, it’s a personal inconvenience. When remote MCP servers fail, it’s a systemic failure that can cascade across an entire agentic workflow. High availability becomes a hard requirement in this world, especially when toolchains depend on server chaining. A single upstream server going offline could stall the entire plan execution.

Yet today, MCP lacks a built-in mechanism for load balancing or failover. These are critical gaps that need addressing as we rely more heavily on distributed composition.

Developer onboarding and ecosystem fragmentation

With the proliferation of MCP servers, discoverability becomes a pressing concern. How do developers find trusted, maintained servers? How do they know what tools are available or how to invoke them? While Anthropic has hinted at a registry system in their roadmap, no robust discovery solution exists today.

Without clear strategies for documentation, onboarding, and governance, developers are left to navigate a fragmented ecosystem where reusability and collaboration suffer.

Context bloat and LLM bias

Remote composition sounds elegant until you realize that each server added to a session consumes more of the LLM’s context window. Tool metadata, parameter schemas, prompt templates: it all adds up, especially in high-churn, multi-agent environments.
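A rough way to see how quickly this adds up is to estimate the token footprint of each server's tool definitions. The sketch below is only an approximation: it assumes roughly four characters per token, which is a common rule of thumb and not a real tokenizer, and the function names and data layout are hypothetical.

```python
import json

# Rough rule of thumb for English text; a real tokenizer will give different numbers.
CHARS_PER_TOKEN = 4


def estimate_tokens(tool_definitions: list) -> int:
    """Approximate the tokens consumed by a list of tool definitions."""
    serialized = json.dumps(tool_definitions, separators=(",", ":"))
    return -(-len(serialized) // CHARS_PER_TOKEN)  # ceiling division


def context_cost_by_server(servers: dict) -> dict:
    """Map each server name to the estimated token cost of its tools."""
    return {name: estimate_tokens(tools) for name, tools in servers.items()}
```

Running this over every server attached to a session gives a quick per-server budget, which makes it easier to spot the servers that dominate the context.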

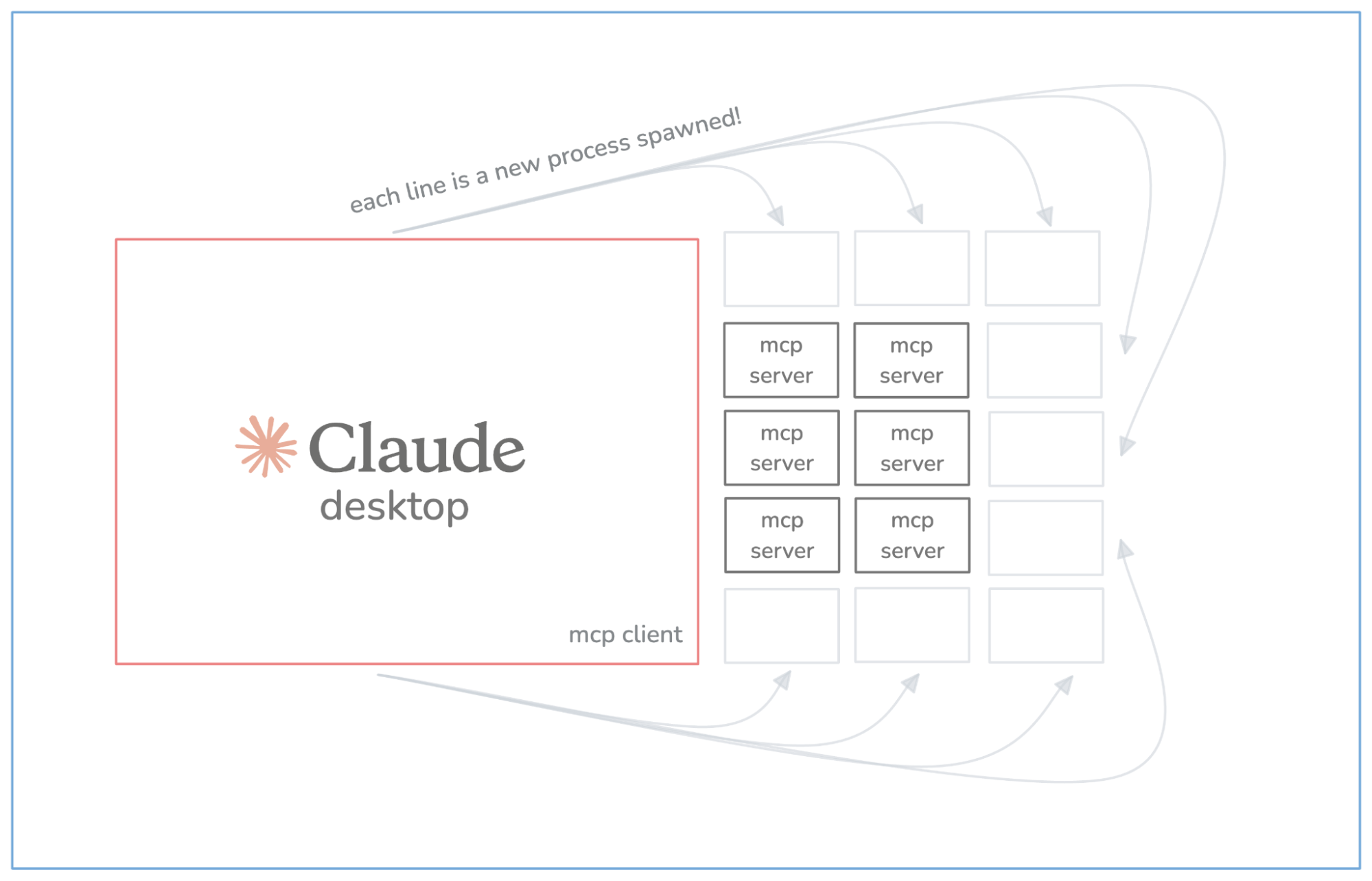

And once tools are injected into context, there’s no guarantee they’ll be used wisely. LLMs are often biased toward invoking tools that appear in context, even when unnecessary. This can lead to redundant calls, bloated prompt chains, and inefficient workflows, a problem that will only grow as more remote servers are registered.

The gateway pattern: An old friend for a new interface

To folks who live and breathe APIs, many of these challenges sound . . . familiar. Authentication quirks? Load balancing? Developer onboarding? These are the kinds of problems that modern API management tooling, especially API gateways, has been solving for well over a decade.

And as we stated earlier, MCP doesn’t replace APIs. It simply introduces a new interface layer that makes APIs more LLM-friendly. In fact, many MCP servers are just clever wrappers around existing APIs. So, rather than reinvent the wheel, let’s explore how we can apply the battle-tested API gateway to the emerging world of remote MCP servers.

Auth? Already solved

Gateways are already great at managing authentication and authorization, especially in enterprise environments. Instead of relying on each MCP server to act as its own OAuth 2.1 provider (a pattern that introduces security and operational complexity), we can delegate auth to a central gateway that interfaces with proper identity providers and authorization servers.

This simplifies token handling, supports centralized policy enforcement, and adheres to real-world IAM patterns that organizations already trust.
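As a minimal sketch of what centralized policy enforcement might look like, the Python below assumes the gateway has already validated the caller's token with an external identity provider and received its claims. The server names, scope strings, and `Claims` type are all hypothetical, not part of any MCP or gateway API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Claims:
    """Claims the gateway obtained after validating a token with the identity provider."""
    subject: str
    scopes: frozenset


# Hypothetical policy: the scope a caller needs to reach each upstream MCP server.
REQUIRED_SCOPE = {
    "crm-mcp": "crm:read",
    "billing-mcp": "billing:write",
}


def authorize(claims: Optional[Claims], server: str) -> bool:
    """Decide at the gateway whether a request may be forwarded to `server`."""
    if claims is None:
        return False  # token validation failed upstream of this check
    required = REQUIRED_SCOPE.get(server)
    if required is None:
        return False  # deny by default: unknown servers are never reachable
    return required in claims.scopes
```

The point of the design is that the upstream MCP servers never issue or validate tokens themselves; the policy lives in one place.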

Security, guardrails, and trust boundaries

The gateway could serve as a vital security layer that filters and enforces which MCP servers and tools are even eligible to be passed into an LLM context. This provides a natural checkpoint for organizations to implement allowlists, scan for tool poisoning patterns, and ensure that only vetted, trusted sources are ever included in agentic workflows.

In essence, a gateway becomes a programmable trust boundary that stands between your agents and the open-ended world of MCP. When used properly, this alone could neutralize a large class of Tool Poisoning Attacks.
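To illustrate the checkpoint idea, here is a minimal sketch of a filter that combines a server allowlist with a naive scan of tool descriptions. Everything in it is an assumption for illustration: the server names, the `Tool` type, and especially the regular expressions, which are simple heuristics and far from a complete defense against tool poisoning.

```python
import re
from dataclasses import dataclass

# Hypothetical allowlist of upstream MCP servers the organization has vetted.
ALLOWED_SERVERS = {"crm.internal.example", "docs.internal.example"}

# Illustrative heuristics only; real detection needs much more than regexes.
SUSPICIOUS_PATTERNS = [
    re.compile(r"<\s*important\s*>", re.IGNORECASE),
    re.compile(r"do not (tell|mention|inform)", re.IGNORECASE),
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"~/\.ssh|id_rsa", re.IGNORECASE),
]


@dataclass(frozen=True)
class Tool:
    server: str
    name: str
    description: str


def is_eligible(tool: Tool) -> bool:
    """A tool passes only if its server is vetted and its description looks clean."""
    if tool.server not in ALLOWED_SERVERS:
        return False
    return not any(p.search(tool.description) for p in SUSPICIOUS_PATTERNS)


def filter_tools(tools: list) -> list:
    """Return only the tools that may be passed into an LLM context."""
    return [t for t in tools if is_eligible(t)]
```

A pattern scan like this will miss cleverly worded attacks, so the allowlist is the stronger of the two controls; the scan is a cheap second layer.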

Resilience, load balancing, and observability built in

When MCP servers are registered behind a gateway, we get automatic benefits: load balancing, failover, health checks, and telemetry. Gateways are built for high availability, and they’re designed to route requests to the healthiest upstream server.

This is critical for agentic workflows where the failure of one link could disrupt the entire chain. Add in monitoring and circuit breakers, and you’ve got the makings of a reliable, observable infrastructure layer that MCP currently lacks.
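The routing behavior described here can be sketched in a few lines. The example below is a simplified illustration, not how any particular gateway implements it: it rotates through upstream MCP servers round-robin and applies a basic circuit breaker that skips a server after repeated failures until a cooldown has passed. The class names, thresholds, and URLs are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class Upstream:
    url: str
    failures: int = 0
    opened_at: Optional[float] = None  # time the circuit opened, if it is open


class UpstreamPool:
    """Round-robin selection that skips upstreams whose circuit is open."""

    def __init__(self, urls, max_failures=3, cooldown=30.0, clock=time.monotonic):
        self.upstreams = [Upstream(u) for u in urls]
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self._next = 0

    def _available(self, upstream: Upstream) -> bool:
        if upstream.opened_at is None:
            return True
        # After the cooldown, let one request through again to probe recovery.
        return self.clock() - upstream.opened_at >= self.cooldown

    def pick(self) -> Upstream:
        count = len(self.upstreams)
        for offset in range(count):
            candidate = self.upstreams[(self._next + offset) % count]
            if self._available(candidate):
                self._next = (self._next + offset + 1) % count
                return candidate
        raise RuntimeError("no healthy upstream available")

    def record_success(self, upstream: Upstream) -> None:
        upstream.failures = 0
        upstream.opened_at = None

    def record_failure(self, upstream: Upstream) -> None:
        upstream.failures += 1
        if upstream.failures >= self.max_failures:
            upstream.opened_at = self.clock()
```

A caller would `pick()` an upstream, forward the MCP request, and report the outcome with `record_success` or `record_failure`, so a failing server drops out of rotation without stalling the rest of the chain.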

Gateway as developer experience engine

Modern API gateways don’t just route traffic; they anchor entire developer ecosystems. API portals, internal catalogs, usage analytics, and onboarding flows are all well-supported in today’s API management stacks. There’s no reason MCP should be different.

By exposing MCP servers through something like a gateway-managed developer portal, we can offer consistent discovery, documentation, and access control, turning a fragmented server sprawl into a curated marketplace of capabilities.

Tackling context bloat and client overhead

The final two problems — LLM context bloat and bias — are tougher nuts to crack. But this is where a future, more intelligent gateway could shine.

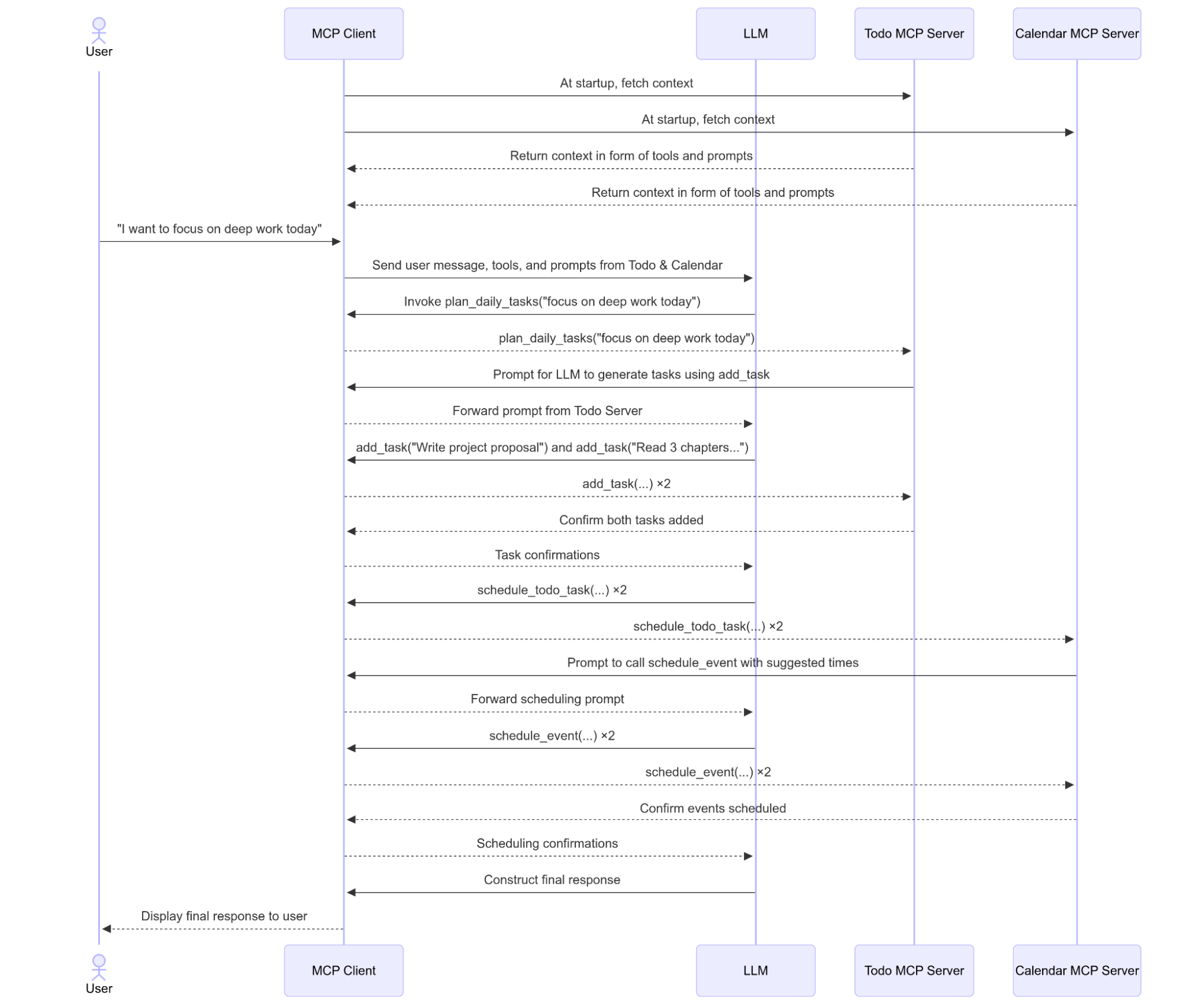

Imagine the gateway not just as a proxy, but as an adaptive MCP server. One that connects to upstream MCP servers, introspects their tools, and selectively injects relevant context based on the user’s prompt. It could maintain persistent upstream connections and handle tool registration dynamically, reducing the need for spawning redundant client processes and minimizing token bloat in the LLM context.
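As a rough sketch of selective injection, the example below scores each upstream tool by how many meaningful words it shares with the user's prompt and keeps only the top matches. This keyword overlap is a deliberately simple stand-in chosen for illustration; a production gateway would more likely use embeddings or another relevance model. The function names, stop-word list, and tool layout are hypothetical.

```python
import re

# Small illustrative stop-word list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "for", "of", "to", "and", "in", "on", "with"}


def tokenize(text: str) -> set:
    """Lowercase the text and return its distinct non-stop-word terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def select_tools(prompt: str, tools: list, limit: int = 3) -> list:
    """Return up to `limit` tools whose name or description overlaps the prompt.

    Each tool is a dict with 'name' and 'description' keys.
    """
    prompt_terms = tokenize(prompt)
    scored = []
    for tool in tools:
        # Split snake_case names so 'create_invoice' contributes 'create' and 'invoice'.
        terms = tokenize(tool["name"].replace("_", " ") + " " + tool["description"])
        score = len(prompt_terms & terms)
        if score > 0:
            scored.append((score, tool["name"], tool))
    # Highest score first; ties broken by name for stable output.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [tool for _, _, tool in scored[:limit]]
```

Tools that share nothing with the prompt are never injected, so the context carries only the definitions the model is likely to need for that request.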

Tools like mcpx have started down this road already, but it makes sense to centralize and scale this capability in the gateway: after all, it’s already the front door to your organization’s APIs.

Conclusion

As AI agents evolve from novelties to core components of modern software, their need for structured, reliable access to tools and data becomes foundational. MCP introduces a powerful new interface for exposing that functionality, which enables agents to reason, plan, and act across services. But as MCP servers move toward a remote-first model, developer and operational complexity rise dramatically.

From authentication and load balancing to context management and server discovery, the road to remote MCP isn’t without potholes. Yet, many of these challenges are familiar to those who’ve spent time in the world of API infrastructure. That’s why an API gateway, long trusted for securing, scaling, and exposing HTTP services, may be the perfect solution to extend MCP into production-grade, enterprise-ready territory.

At Kong, we believe this convergence is already happening. With the Kong Konnect platform and Kong AI Gateway, organizations can begin to apply proven gateway patterns to emerging AI interfaces like MCP. From scalable auth to load balancing and developer onboarding, much of what’s needed for remote MCP is already here.
