Kubernetes continues to lead the container orchestration charge. In fact, according to the latest CNCF survey, 83% of respondents said they were using Kubernetes in production. Kubernetes provides key features such as self-healing, automated rollouts and rollbacks, automated scheduling, scaling, and infrastructure abstraction. Together, these features make it an extensible, highly available, and infrastructure-agnostic environment for deploying modern microservices-based applications.

Microservices applications feature dozens, even hundreds, of separate modular services, all communicating with each other via Application Programming Interfaces (APIs). Your microservices also need to interact with one or more external clients, such as a web server, an application, or an IoT device. Direct client-to-microservice communication means exposing each microservice's API to the outside world.

Technology teams need to ensure that these APIs can be secured, monitored, and managed at scale. Failing to do so can be costly. For example, without proper security controls, these APIs may accidentally expose a company’s sensitive data and resources to bad actors, leading to compliance violations, fines, and lost customer trust.

In addition, the Kubernetes environment itself introduces its own security and complexity challenges. As a result, managing and securing these APIs at scale in Kubernetes is critical to your business's success.

This blog post discusses two common ways to manage APIs in a Kubernetes environment, Kubernetes Ingress and API gateways, and how that choice can affect your overall experience and performance.

What Is a Kubernetes Ingress?

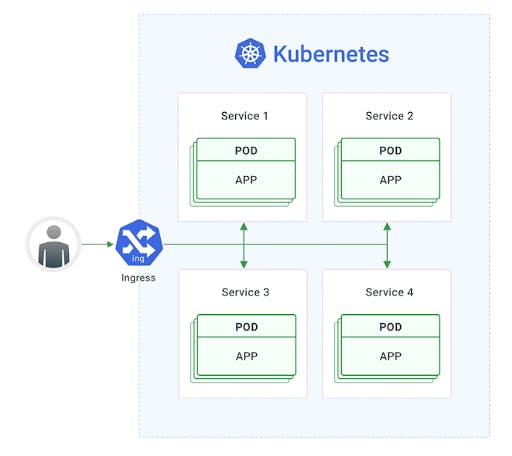

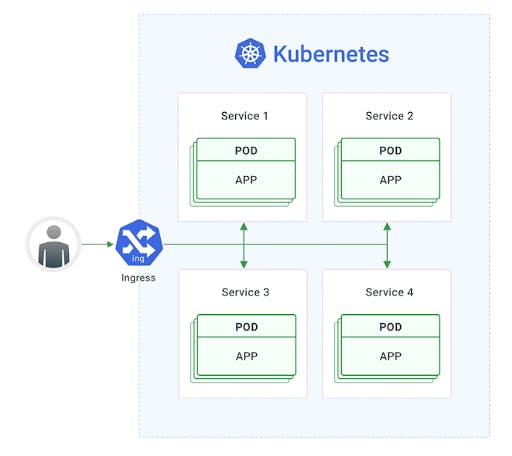

A typical Kubernetes application consists of a Deployment, which manages ReplicaSets that keep the desired number of Pods running, and one or more Services that expose those Pods. A Service gives a set of Pods, selected by their labels, a stable network interface, enabling access to them from within the cluster or, depending on the Service type, from outside it.
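To make the Service idea concrete, here is a minimal Python sketch, assuming a simplified in-memory model rather than the real Kubernetes API: a Service selects the ready Pods whose labels match its selector and spreads requests across them. The `Pod`, `select_endpoints`, and `RoundRobinService` names are hypothetical, and round-robin is only an illustrative choice; in a real cluster, kube-proxy (or another data plane) picks the backend Pod, and its strategy depends on how it is configured.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str] = field(default_factory=dict)
    ready: bool = True


def select_endpoints(selector: dict[str, str], pods: list[Pod]) -> list[str]:
    """Return the IPs of ready Pods whose labels include every selector key/value pair."""
    return [
        pod.ip
        for pod in pods
        if pod.ready and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


class RoundRobinService:
    """Hypothetical Service model that hands out its endpoints in turn.

    A real Service's endpoints are updated as Pods come and go; this sketch
    takes a one-off snapshot when the Service is created.
    """

    def __init__(self, name: str, selector: dict[str, str], pods: list[Pod]):
        self.name = name
        self.endpoints = select_endpoints(selector, pods)
        if not self.endpoints:
            raise ValueError(f"Service {name!r} has no ready endpoints")
        self._next = cycle(self.endpoints)

    def pick(self) -> str:
        # Round-robin is illustrative only; kube-proxy's real strategy depends on its mode.
        return next(self._next)


pods = [
    Pod("orders-1", "10.0.0.11", {"app": "orders"}),
    Pod("orders-2", "10.0.0.12", {"app": "orders"}),
    Pod("orders-3", "10.0.0.13", {"app": "orders"}, ready=False),  # not ready: excluded
    Pod("billing-1", "10.0.0.21", {"app": "billing"}),             # wrong label: excluded
]
svc = RoundRobinService("orders", {"app": "orders"}, pods)
print([svc.pick() for _ in range(3)])  # ['10.0.0.11', '10.0.0.12', '10.0.0.11']
```

Clients talk to the Service rather than to individual Pods, so Pods can be replaced or scaled without clients noticing.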

For HTTP and HTTPS traffic, external access is commonly handled by the Ingress API object provided by Kubernetes. With Ingress, Services within the cluster are exposed outside the cluster via HTTP and HTTPS routes.

Diagram 1: Kubernetes Ingress

Traffic to the Services exposed by Ingress is controlled by routing rules defined on the Ingress resource. Depending on the Ingress controller in use, Kubernetes Ingress can provide traffic load balancing, SSL/TLS termination, name-based virtual hosting, path-based routing, and more, and lets users configure all of this declaratively within the cluster. Kubernetes Ingress consists of two core components:

- Ingress API object: An API object that manages external access to Services that need to be exposed outside the cluster. It contains the routing rules.

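- Ingress Controller: The Ingress Controller is the actual implementation of Ingress; an Ingress resource has no effect until a controller is running in the cluster. It is usually a load balancer or reverse proxy that routes incoming traffic to the desired Services according to the rules in the Ingress API object.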
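The split between these two components can be sketched in Python. The `ingress` dictionary mirrors the shape of a `networking.k8s.io/v1` Ingress manifest (the API object), while `route` plays the part of a heavily simplified, hypothetical controller. It follows the documented `Prefix` (element-wise) and `Exact` path-matching rules, preferring the longest matching path and, on a tie, `Exact` over `Prefix`. Wildcard hosts, default backends, TLS, and the `ImplementationSpecific` path type are left out, and the host and Service names are made up for illustration.

```python
from typing import Optional

# Ingress API object: routing rules shaped like a networking.k8s.io/v1 manifest.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop"},
    "spec": {
        "rules": [
            {
                "host": "shop.example.com",
                "http": {
                    "paths": [
                        {"path": "/orders", "pathType": "Prefix",
                         "backend": {"service": {"name": "orders", "port": {"number": 80}}}},
                        {"path": "/orders/export", "pathType": "Exact",
                         "backend": {"service": {"name": "reports", "port": {"number": 8080}}}},
                        {"path": "/", "pathType": "Prefix",
                         "backend": {"service": {"name": "web", "port": {"number": 80}}}},
                    ]
                },
            }
        ]
    },
}


def _segments(path: str) -> list[str]:
    # "/orders/42/" -> ["orders", "42"]; "/" -> []
    return [s for s in path.split("/") if s]


def matches(rule_path: str, path_type: str, request_path: str) -> bool:
    if path_type == "Exact":
        return request_path == rule_path
    if path_type == "Prefix":
        # Element-wise prefix match: "/orders" matches "/orders/42" but not "/ordersx".
        prefix = _segments(rule_path)
        return _segments(request_path)[: len(prefix)] == prefix
    raise ValueError(f"unsupported pathType {path_type!r}")


def route(ingress: dict, host: str, request_path: str) -> Optional[tuple[str, int]]:
    """Toy 'controller': return (service name, port) for a request, or None."""
    candidates = []
    for rule in ingress["spec"]["rules"]:
        if rule.get("host") not in (None, host):  # a rule without a host matches any host
            continue
        for p in rule["http"]["paths"]:
            if matches(p["path"], p["pathType"], request_path):
                # Longest path wins; on a tie, Exact beats Prefix.
                rank = (len(p["path"]), p["pathType"] == "Exact")
                svc = p["backend"]["service"]
                candidates.append((rank, svc["name"], svc["port"]["number"]))
    if not candidates:
        return None
    _, name, port = max(candidates)
    return name, port


print(route(ingress, "shop.example.com", "/orders/42"))      # ('orders', 80)
print(route(ingress, "shop.example.com", "/orders/export"))  # ('reports', 8080)
print(route(ingress, "shop.example.com", "/ordersx"))        # ('web', 80)
print(route(ingress, "other.example.com", "/orders"))        # None
```

In a real cluster the manifest would be applied with `kubectl`, and the controller would translate these rules into its own proxy configuration rather than evaluating them per request in Python.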

Kubernetes Ingress can be set up with rules for routing traffic without provisioning a separate load balancer for each Service or exposing each Service on a node port. This makes it a common choice for production environments. However, standalone Kubernetes Ingress is not enough to maintain and manage large-scale production APIs, because it does little beyond traffic routing and load balancing. This brings us to API gateways: what they are, their core concepts, and why they are beneficial.

What Is an API Gateway?

An API gateway is best described as a layer that sits between clients (both internal and external) and the service or product APIs, giving those clients a single, centralized entry point.

As more applications adopt a microservices architecture, complex applications are divided into smaller components based on distinct functionality and other factors. Microservices make it easier to develop, deploy, and maintain the individual parts of a complex application. The cost is that clients, both internal and external, find it harder to access information quickly and securely.

An API gateway can solve most of these problems by acting as a central interface for the clients consuming these microservices. Its main function is routing, but it also offers a number of API management features, such as the ability to:

- Centralize enforcement of IT governance standards, which commonly include authentication and authorization, quality of service, access control, etc.

- Decouple API consumers from API producers to shield consumers from back-end implementation details, such as protocols and message formats, and facilitate change management.

- Provide up-to-date documentation that incorporates dynamic elements, such as the ability to experiment with APIs, developer and application onboarding, and statistics such as API utilization.

- Accelerate API development by virtualizing or mocking APIs to allow for simultaneous development of upstream APIs and API consumer applications.
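Several of these features can be pictured as steps that run before a request reaches a service. The following minimal Python sketch, assuming an in-process model rather than any real gateway's API, checks an API key (authentication), applies a per-consumer token-bucket rate limit (quality of service), and then routes by path prefix to an upstream. The `apikey` header name and the `Gateway` and `TokenBucket` classes are hypothetical, and the upstream is a plain function standing in for a mocked API, as in the last bullet.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity          # start with a full bucket
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Hypothetical gateway: authenticate, rate-limit per consumer, then route."""

    def __init__(self, api_keys: dict[str, str], routes: dict[str, Callable[[str], str]],
                 rate: float, burst: float, clock: Callable[[], float] = time.monotonic):
        self.api_keys = api_keys        # API key -> consumer name
        self.routes = routes            # path prefix -> upstream handler
        self.rate, self.burst, self.clock = rate, burst, clock
        self.buckets: dict[str, TokenBucket] = {}

    def handle(self, path: str, headers: dict[str, str]) -> tuple[int, str]:
        # 1. Authentication: "apikey" is an assumed header name.
        consumer = self.api_keys.get(headers.get("apikey", ""))
        if consumer is None:
            return 401, "invalid or missing API key"
        # 2. Quality of service: one token bucket per consumer.
        bucket = self.buckets.get(consumer)
        if bucket is None:
            bucket = self.buckets[consumer] = TokenBucket(self.rate, self.burst, self.clock)
        if not bucket.allow():
            return 429, "rate limit exceeded"
        # 3. Routing: first matching path prefix wins (plain string prefix).
        for prefix, upstream in self.routes.items():
            if path.startswith(prefix):
                return 200, upstream(path)
        return 404, "no route"


# Usage with a fake clock so the rate limit is deterministic.
now = [0.0]
gw = Gateway(
    api_keys={"secret-123": "mobile-app"},
    routes={"/orders": lambda path: f"orders-service handled {path}"},  # mocked upstream
    rate=1.0, burst=2, clock=lambda: now[0],
)
print(gw.handle("/orders/1", {}))                        # (401, 'invalid or missing API key')
print(gw.handle("/orders/1", {"apikey": "secret-123"}))  # (200, 'orders-service handled /orders/1')
print(gw.handle("/orders/2", {"apikey": "secret-123"}))  # (200, 'orders-service handled /orders/2')
print(gw.handle("/orders/3", {"apikey": "secret-123"}))  # (429, 'rate limit exceeded')
now[0] += 1.0                                            # one second later, one token refilled
print(gw.handle("/orders/4", {"apikey": "secret-123"}))  # (200, 'orders-service handled /orders/4')
```

Keeping these checks in the gateway means individual microservices don't each have to reimplement authentication or rate limiting, which is the kind of centralized enforcement the first bullet describes.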

API gateways are an essential part of modern API management solutions, particularly when it comes to complex microservices-based applications.

Conclusion

Managing APIs in a Kubernetes environment can be a daunting, complicated, and time-consuming task. In this post, we discussed how Kubernetes Ingress and API gateways can help address this challenge. Each technology comes with its own set of advantages and capabilities. Download the eBook to learn more about the differences between an API gateway and Kubernetes Ingress.

Kong provides a fully optimized, Kubernetes-native solution for seamless API management. Check out the live hands-on tutorial on Kong Gateway on Kubernetes, and try it out for yourself.