Kong for Kubernetes is a Kubernetes Ingress Controller based on the open source Kong Gateway project. Kong for K8s is fully Kubernetes-native and provides enhanced API management capabilities. Architecturally, Kong for K8s consists of two parts: a Kubernetes controller, which manages the state of the Kong for K8s ingress configuration, and the Kong Gateway, which processes and manages incoming API requests.

We are thrilled to announce the availability of the latest release of Kong for K8s! This release's highlights include Knative integration, two new Custom Resource Definitions (CRDs) - KongClusterPlugin and TCPIngress - and a number of new annotations to simplify configuration.

This release works out of the box with the latest version of Kong Gateway as well as Kong Enterprise. All users are advised to upgrade.

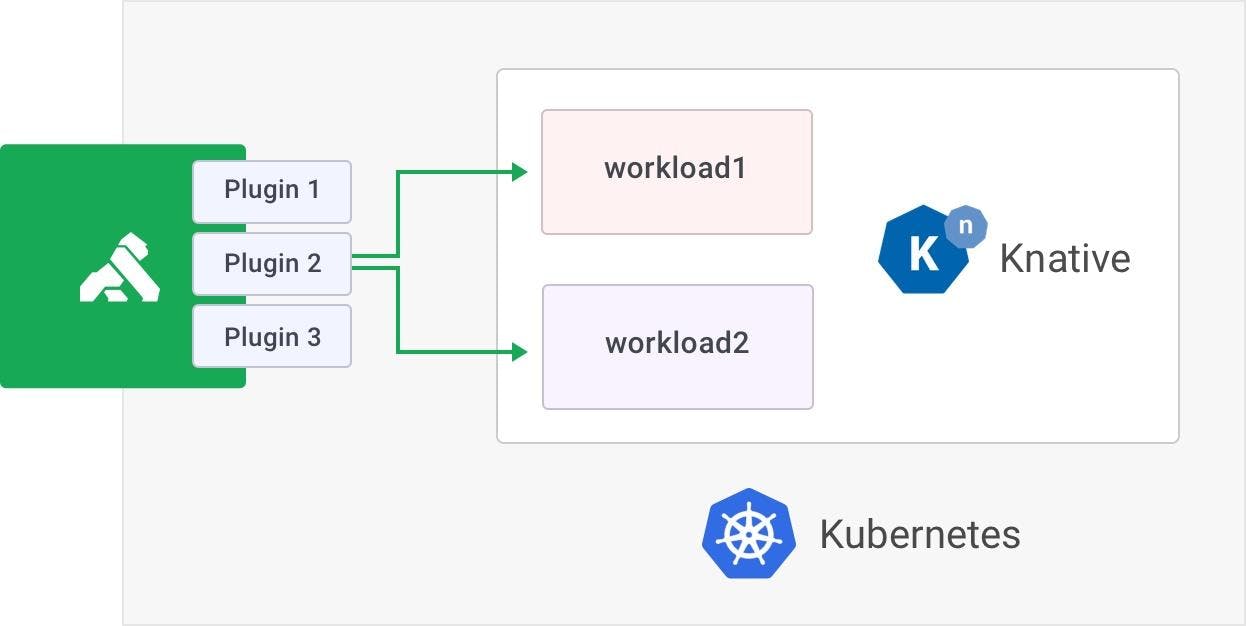

Kong as Knative's Ingress Layer

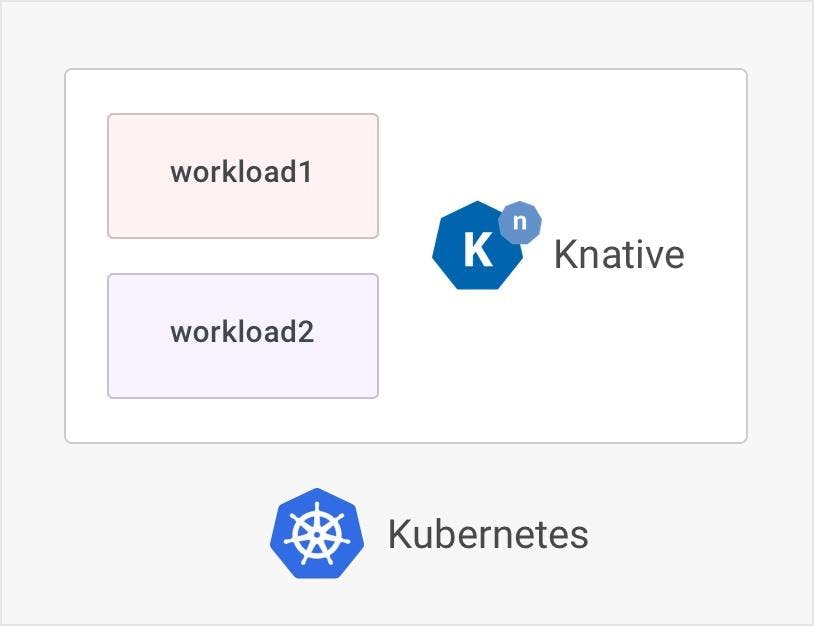

Knative is a Kubernetes-based platform that allows you to run serverless workloads on top of Kubernetes. Knative manages auto-scaling, including scale-to-zero, of your workload using Kubernetes primitives. Unlike AWS Lambda or Google Cloud Functions, Knative enables serverless workloads for functions and application logic on any Kubernetes cluster, in any cloud provider or on bare-metal deployments.

Besides serving as the ingress and API management layer for Kubernetes, Kong can now perform ingress for Knative workloads as well. Kong can also run plugins for Knative Serving workloads, taking on responsibility for authentication, caching, traffic shaping and transformation. This means that as Knative HTTP-based serverless events occur, they can be routed through Kong automatically and managed appropriately. This should keep your Knative services lean and focused on the business logic.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: helloworld-go
      namespace: default
    spec:
      template:
        metadata:
          annotations:
            konghq.com/plugins: free-tier-rate-limit, prometheus-metrics
        spec:
          containers:
          - image: gcr.io/knative-samples/helloworld-go
            env:
            - name: TARGET
              value: Go Sample v1

Here, whenever inbound traffic for the helloworld-go Knative Service is received, Kong will execute two plugins: free-tier-rate-limit and prometheus-metrics.
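The two names in the annotation refer to KongPlugin resources in the same namespace as the Knative Service. As a minimal sketch, they could be defined along these lines; the rate-limit values (5 requests per minute, local policy) are illustrative assumptions, not settings shipped with this release.

```yaml
# Sketch only: the config values below are assumptions chosen for illustration.
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: free-tier-rate-limit
  namespace: default
plugin: rate-limiting
config:
  minute: 5        # assumed free-tier limit: 5 requests per minute
  policy: local    # counters kept in memory on each Kong node
---
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: prometheus-metrics
  namespace: default
plugin: prometheus   # exposes request metrics for scraping; no config needed here
```

Because the plugin configuration lives in its own resources, the Knative Service manifest only needs to list the plugin names.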

New CRD: KongClusterPlugins

Over the past year, we have received feedback from many users regarding plugin configuration. Until now, our object model allowed only for namespaced KongPlugin resources.

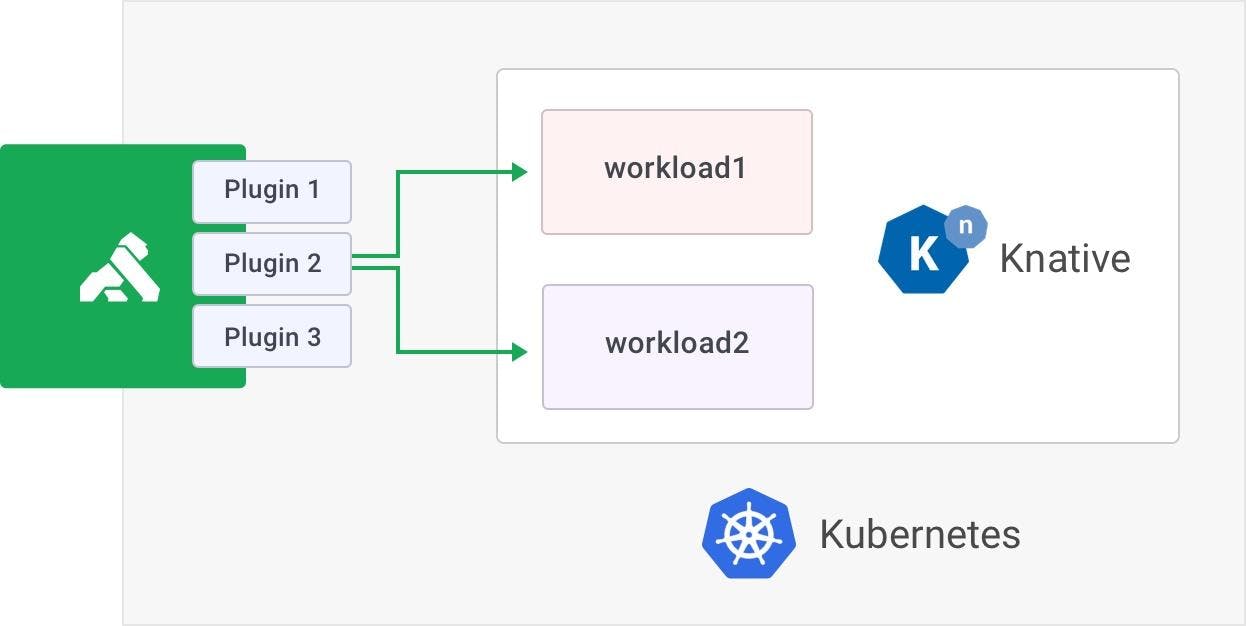

In this model, Service owners are expected to own the plugin configuration (KongPlugin) in addition to Ingress, Service, Deployment and other related resources for a given service. This model works well for the majority of use cases but has two limitations:

- Sometimes it is important for the plugin configuration to be homogeneous across all teams or groups of services that are running in different namespaces.

- Sometimes the plugin configuration should be controlled by one team while the plugin is applied to an Ingress or Service by a different team. This is usually true for authentication and traffic shaping plugins, where the configuration could be controlled by an operations team (which configures the location of the IdP and other properties), and the plugin is then used for certain services on a case-by-case basis.

To address these problems, in addition to the KongPlugin resource, we have added a new cluster-level custom resource: KongClusterPlugin.

The new resource is identical in every way to the existing KongPlugin resource, except that it is a cluster-level resource.

You can now create one KongClusterPlugin and share it across namespaces. You can also RBAC this resource differently to allow for cases where only users with specific roles can create plugin configurations and service owners can only use the well-defined KongClusterPlugins.
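As a rough sketch of that split in ownership, the manifests below show a cluster-level plugin defined once, a Service in another namespace that references it by name, and a read-only role for service owners. All resource names (org-rate-limit, billing, team-a, kongclusterplugin-viewer) and the config values are hypothetical, and the sketch assumes the controller runs with the default `kong` ingress class.

```yaml
# Owned by the operations team: one cluster-wide plugin definition.
apiVersion: configuration.konghq.com/v1
kind: KongClusterPlugin
metadata:
  name: org-rate-limit                   # hypothetical name
  annotations:
    kubernetes.io/ingress.class: kong    # assumes the default ingress class
plugin: rate-limiting
config:
  minute: 60                             # assumed limit, for illustration only
  policy: local
---
# Owned by a service team: references the cluster plugin by name.
apiVersion: v1
kind: Service
metadata:
  name: billing                          # hypothetical service
  namespace: team-a                      # hypothetical namespace
  annotations:
    konghq.com/plugins: org-rate-limit
spec:
  selector:
    app: billing
  ports:
  - port: 80
    targetPort: 8080
---
# Read-only access to cluster plugins; bind this to service owners so they
# can inspect the shared definitions but not create or change them.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kongclusterplugin-viewer         # hypothetical name
rules:
- apiGroups: ["configuration.konghq.com"]
  resources: ["kongclusterplugins"]
  verbs: ["get", "list", "watch"]
```

A separate role with create, update and delete verbs on the same resource would then be granted only to the team that owns the plugin configuration.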

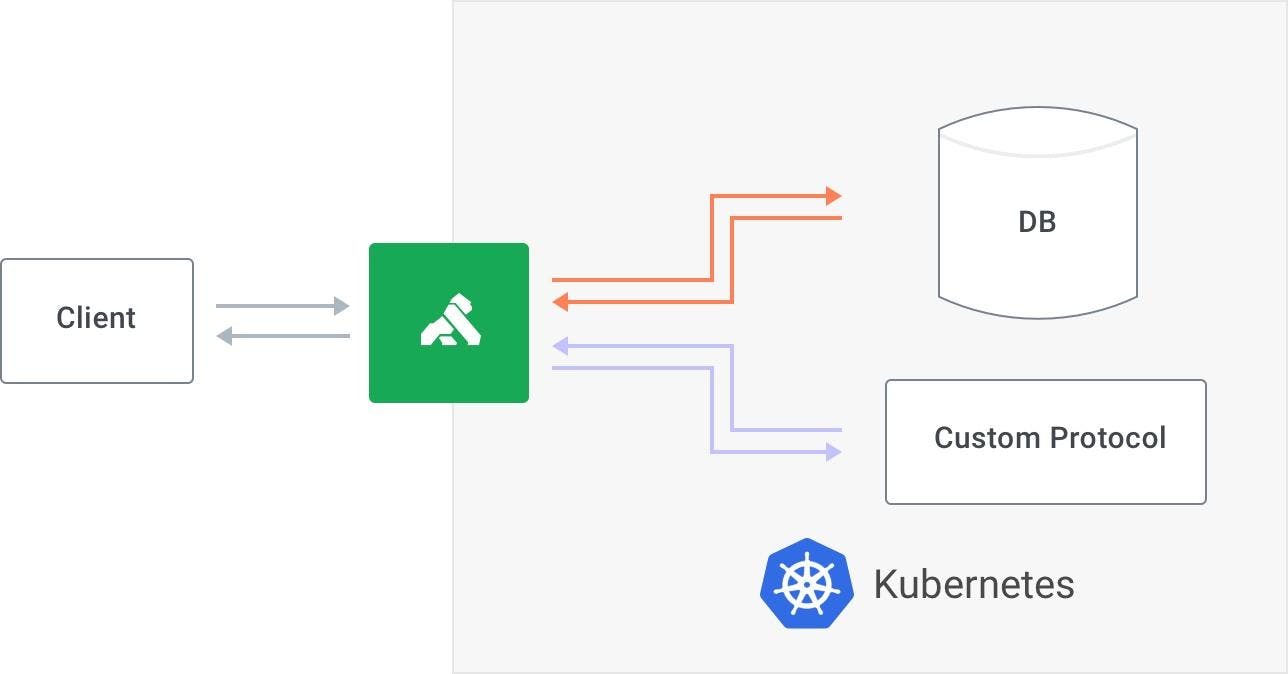

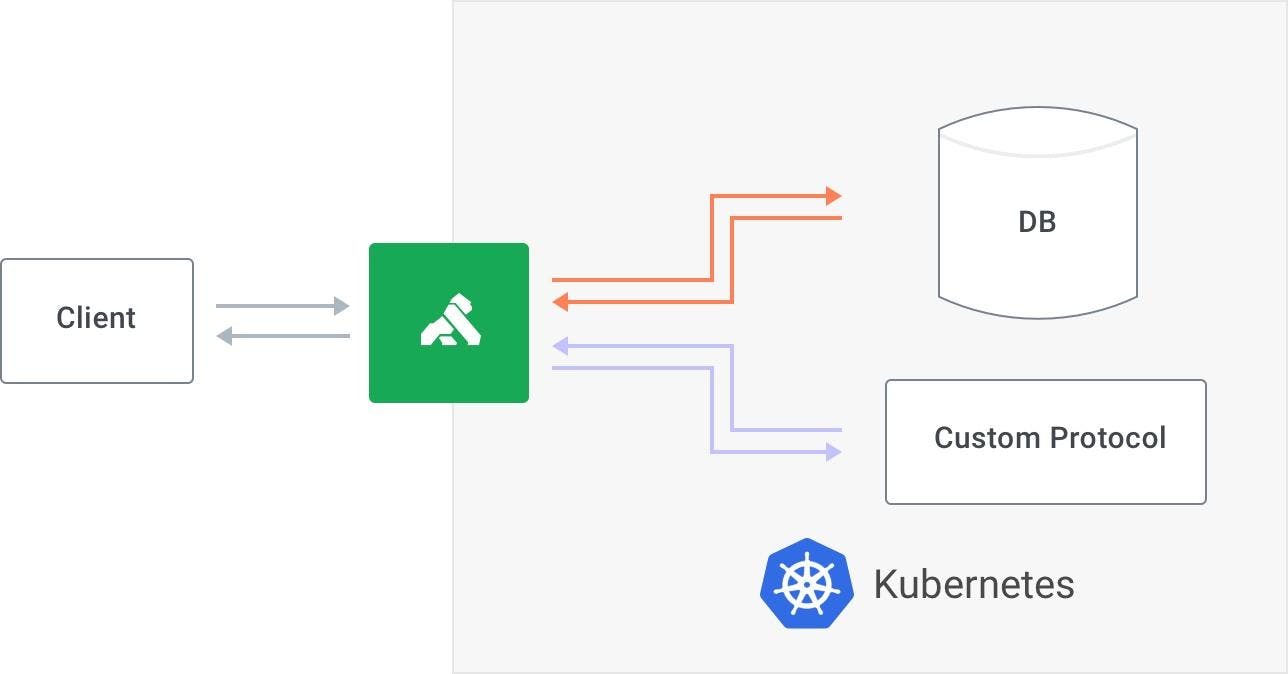

Beta Release of TCPIngress

In the last release, we introduced native support for gRPC-based services.

With this release, we have now opened up support for all services that are based on a custom protocol.

TCPIngress is a new Custom Resource for exposing all kinds of services outside a Kubernetes cluster.

The definition of the resource is very similar to the Ingress resource in Kubernetes itself.

Here is an example of exposing a database running in Kubernetes:

    apiVersion: configuration.konghq.com/v1beta1
    kind: TCPIngress
    metadata:
      name: sample-tcp
    spec:
      rules:
      - port: 9000
        backend:
          serviceName: config-db
          servicePort: 2701

Here, we are asking Kong to forward all traffic on port 9000 to the config-db service in Kubernetes.

SNI-Based Routing

In addition to exposing TCP-based services, Kong also supports secure TLS-encrypted TCP streams. In these cases, Kong can route traffic on the same TCP port to different services inside Kubernetes based on the SNI of the TLS handshake. Kong terminates the TLS session and can either proxy the TCP stream to the service in plain text or re-encrypt it.
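A sketch of SNI-based routing with TCPIngress might look like the following. The hostnames, the Secret name and the two backend services are hypothetical, and the example assumes Kong has been configured to accept TLS stream traffic on port 9443.

```yaml
apiVersion: configuration.konghq.com/v1beta1
kind: TCPIngress
metadata:
  name: sample-tls-tcp                 # hypothetical name
  annotations:
    kubernetes.io/ingress.class: kong  # assumes the default ingress class
spec:
  tls:
  - hosts:
    - db-a.example.com                 # hypothetical SNI hostnames
    - db-b.example.com
    secretName: example-tls            # hypothetical Secret holding the certificate
  rules:
  # Both rules share port 9443; the SNI in the TLS handshake selects the backend.
  - host: db-a.example.com
    port: 9443
    backend:
      serviceName: db-a
      servicePort: 2701
  - host: db-b.example.com
    port: 9443
    backend:
      serviceName: db-b
      servicePort: 2701
```

Clients connecting to the same port with different server names are sent to different services, so a single exposed port can front several TLS-protected backends.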

Annotations

This release ships with a new set of annotations that should minimize and simplify Ingress configuration:

With these new annotations, the need for using the KongIngress custom resource should go away for the majority of the use cases.

For a complete list of annotations and how to use them, check out the annotations document.

Upgrading

If you are upgrading from a previous version, please read the changelog carefully.

Breaking Changes

This release ships with a major breaking change that can break Ingress routing for your cluster if you are using path-based routing.

Until the last release, Kong stripped the request path by default. With this release, path stripping is disabled by default. You can use the KongIngress resource or the new konghq.com/strip-path annotation on the Ingress resource to control this behavior.
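For example, an Ingress that relied on the old default could restore it with the annotation, roughly as sketched here. The Ingress name, path and backend service are hypothetical, and the `networking.k8s.io/v1beta1` API version is an assumption that matches the backend style used elsewhere in this post; newer clusters serve a different Ingress API version with a different backend layout.

```yaml
apiVersion: networking.k8s.io/v1beta1   # assumption: adjust to what your cluster serves
kind: Ingress
metadata:
  name: orders                          # hypothetical name
  annotations:
    kubernetes.io/ingress.class: kong
    konghq.com/strip-path: "true"       # restore the previous path-stripping behavior
spec:
  rules:
  - http:
      paths:
      - path: /orders
        backend:
          serviceName: orders           # hypothetical backend service
          servicePort: 80
```

With the annotation set to "true", a request for /orders/123 should reach the backend as /123; without it, the backend now receives the full /orders/123 path.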

Also, if you are upgrading, please make sure to install the two new Custom Resource Definitions (CRDs) into your Kubernetes cluster. Failure to do so will result in the controller throwing errors.

Deprecations

Starting with this release, we have deprecated the following annotations, which will be removed in the future:

These annotations have been renamed to their corresponding konghq.com annotations.

Please read the annotations document on how to use new annotations.

Compatibility

Kong for K8s supports a variety of deployments and run-times. For a complete view of Kong for K8s compatibility, please see the compatibility document.

Getting Started!

You can try out Kong for K8s using our lab environment, available for free to all at konglabs.io/kubernetes.

You can install Kong for K8s on your Kubernetes cluster with one click:

Alternatively, if you want to use your own Kubernetes cluster, follow our getting started guide to get your hands dirty.

Please feel free to ask questions on our community forum, Kong Nation, and open a GitHub issue if you happen to run into a bug.

Happy Konging!