Over the past ten years, Clubhouse and other innovative startups built software quickly. They started from scratch and blew past the incumbents. But speed is no longer a differentiator: everyone can move quickly. We saw it when Facebook and Twitter quickly duplicated Clubhouse's "innovative" functionality.

Today, it's all about agility: building on the momentum that you've already built up. Agility makes it easier for companies to quickly replicate innovations in the market and adopt them as their own.

By aligning around a few key principles, you too can stay agile:

- Don't throw away or duplicate code. The benefit is a shift to innovation.

- Bring along old applications. That way, you can inherit policy, security and best practices.

- Maintain connectivity across the heterogeneous. This will result in simplified application maintenance.

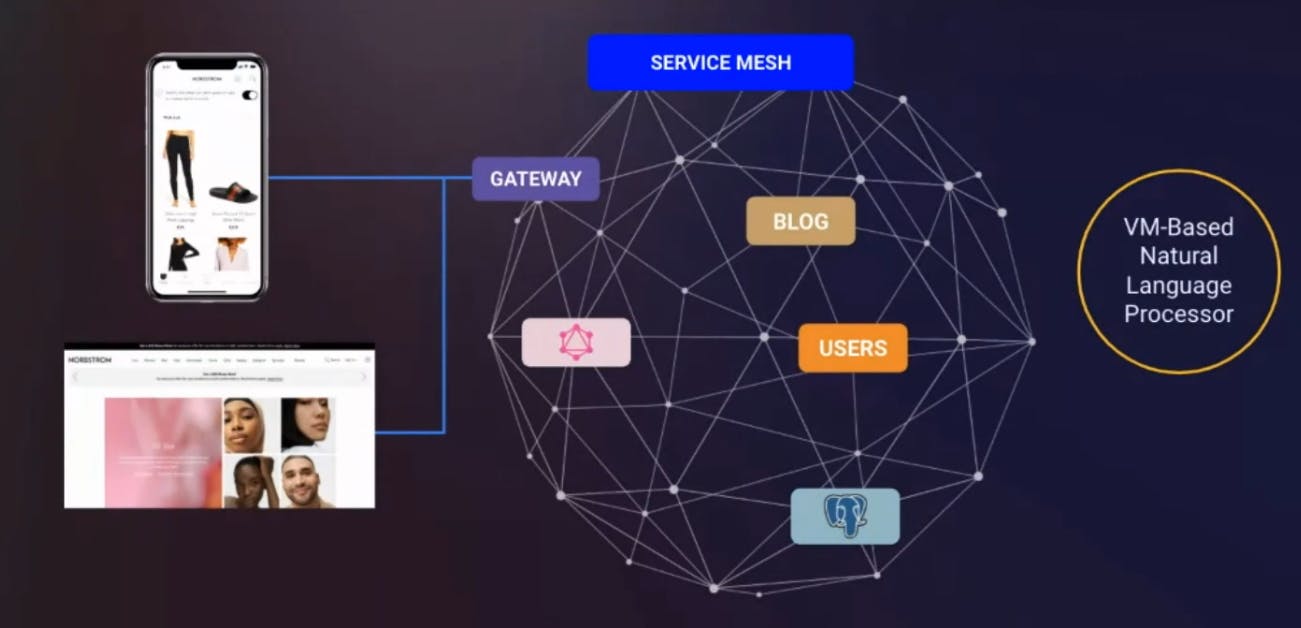

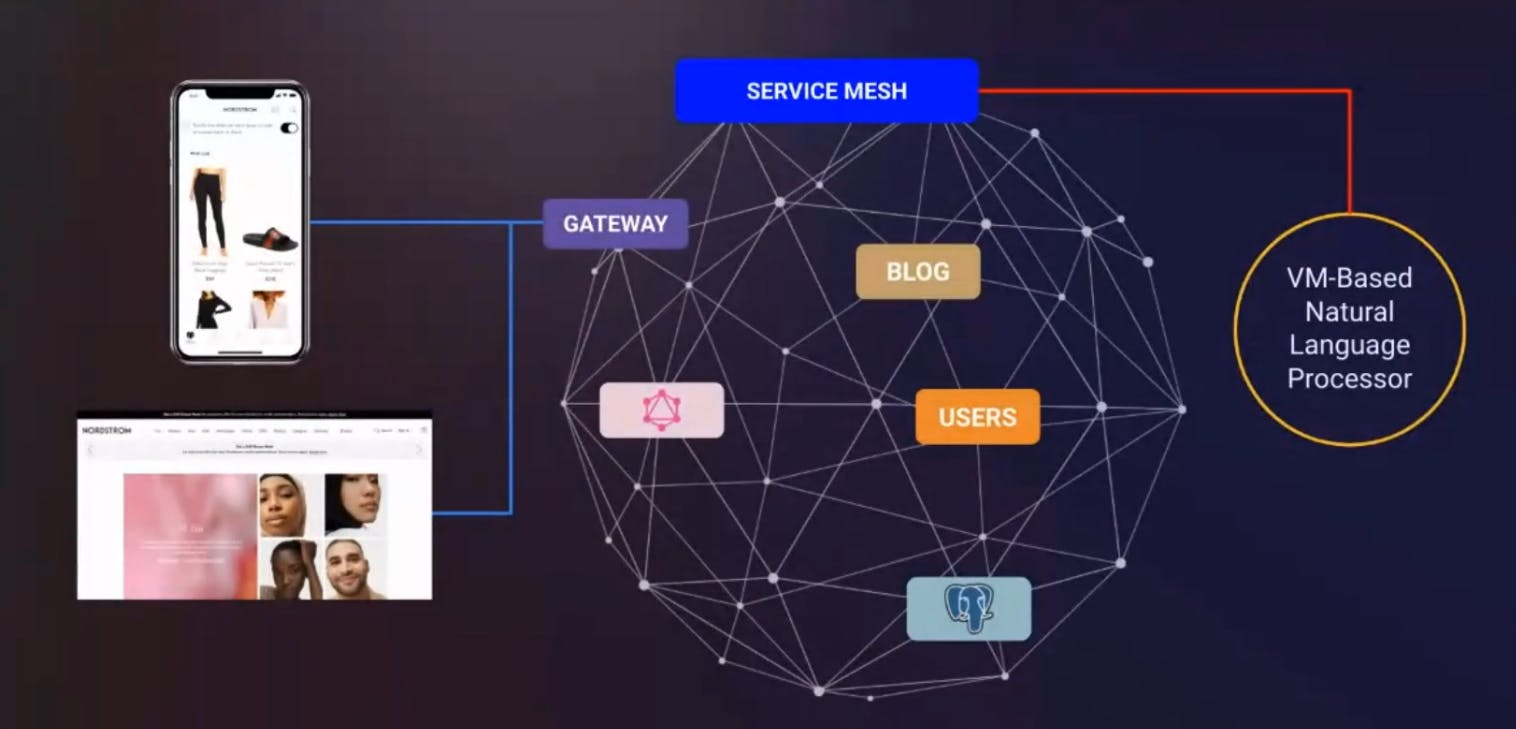

A service mesh like Kuma or Kong Mesh can help you remain agile and scale by providing end-to-end service connectivity across architectures and protocols, from clouds to virtual machines. So whether you work at a retailer trying to connect its old monolith-based inventory or fulfillment systems, or at a bank that can't seem to move off those decades-old servers, it's critical to stay agile across environments.

Let's see how with an example.

Example: Connecting a Legacy Service to a Modern Architecture

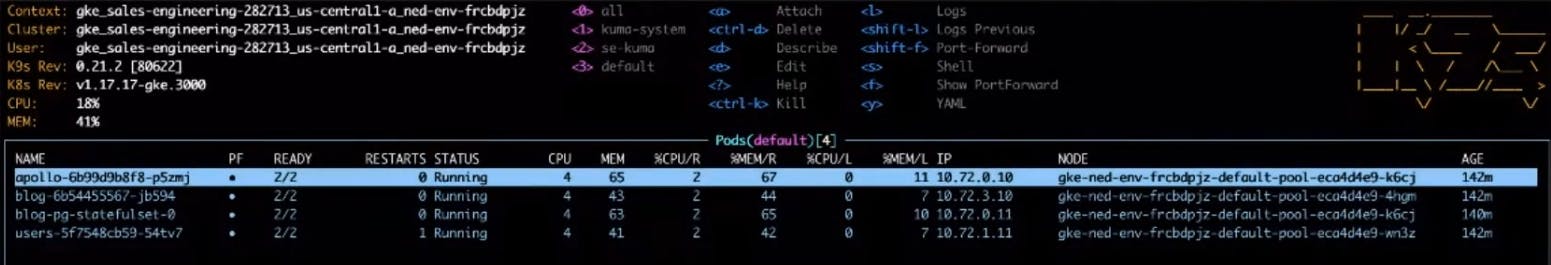

In my example, I have containerized apps in my Kubernetes environment. Many of these were scooped out from legacy monoliths. Unfortunately, it hasn't been possible or sensible to transition all of my applications yet.

Specifically, the current problem is with my VM-based natural language processing (NLP) service. Not being able to connect to this service has stopped my whole solution from working.

In an enterprise with many legacy systems, this could go beyond VMs. It could be a rack server or a bunch of servers in a data center.

With a service mesh, the preferable approach is to get a data plane proxy on that service, but that's not possible right now due to internal politics, so nothing is getting installed on this machine today. I'm going to keep trying to get access, but in the meantime, the connection still needs to work today.

In this scenario, where we don't have access to the VM-based natural language processor, we can define something called an external service in the mesh, which gives us a proxy to it. Doing this is a common first step toward getting connectivity going across services to which you have limited access.

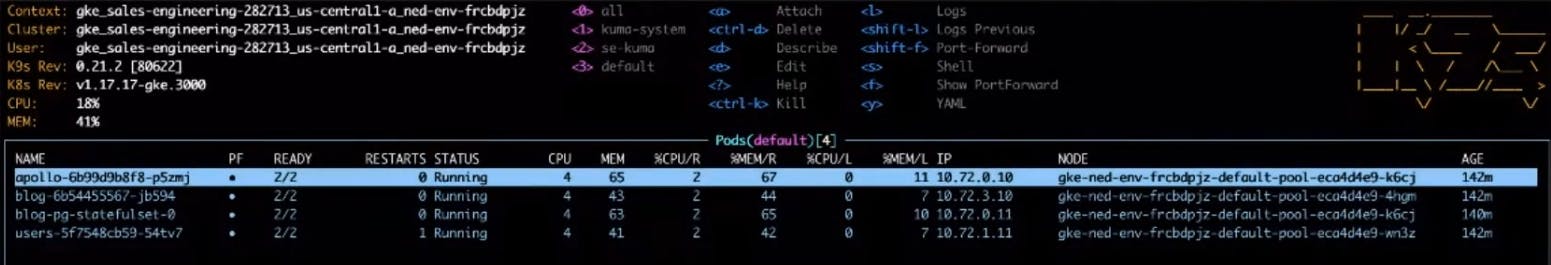

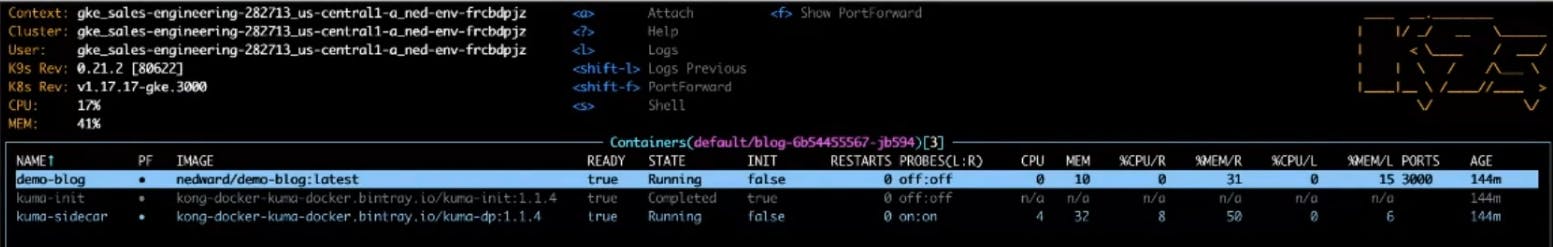

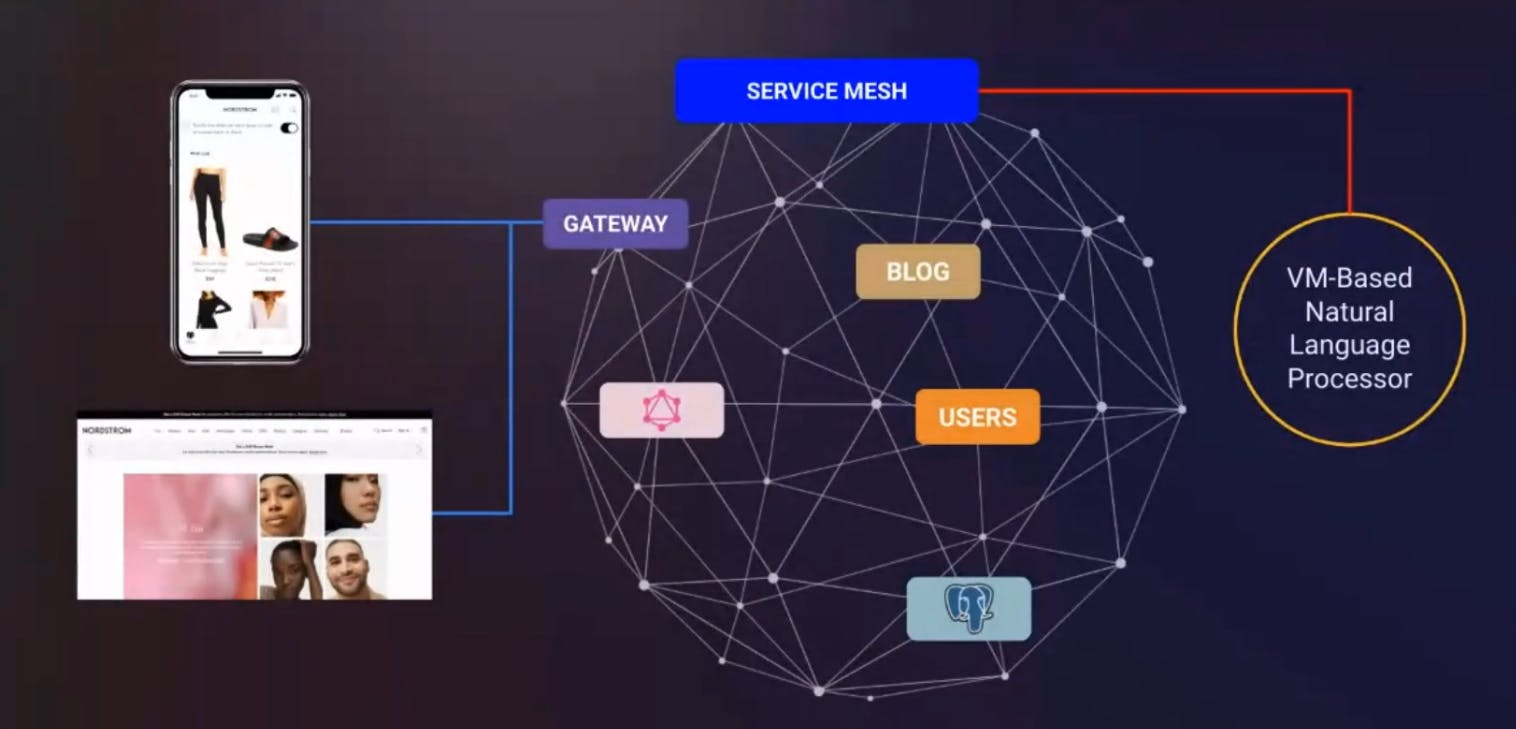

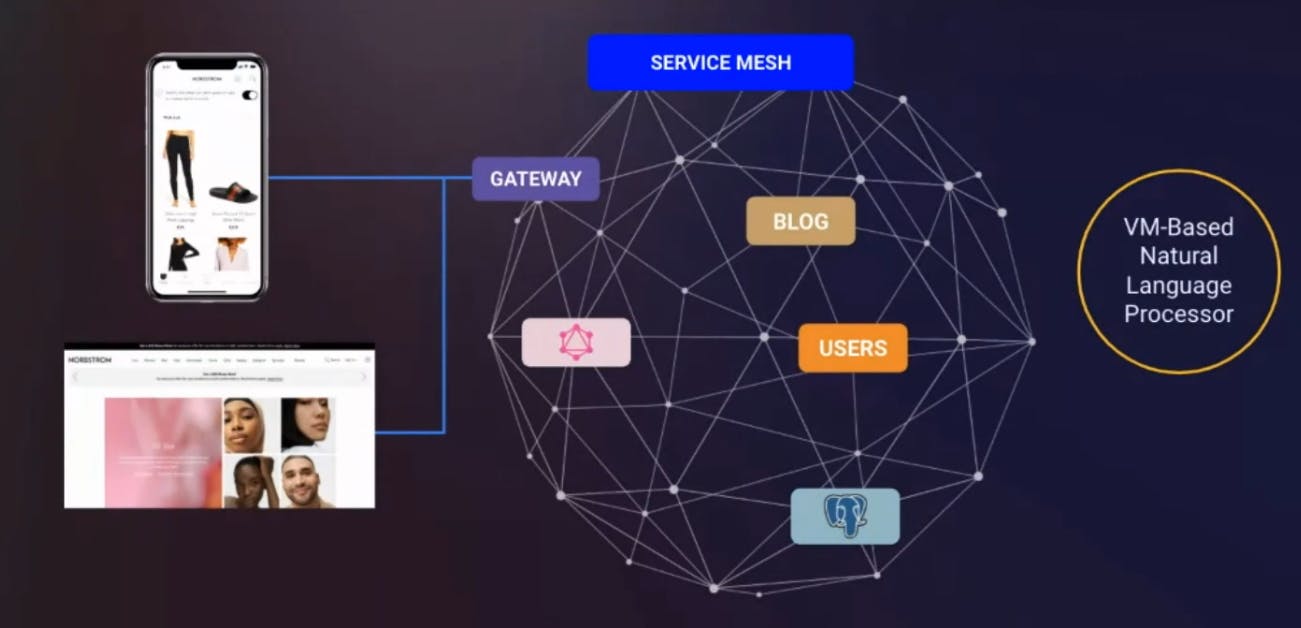

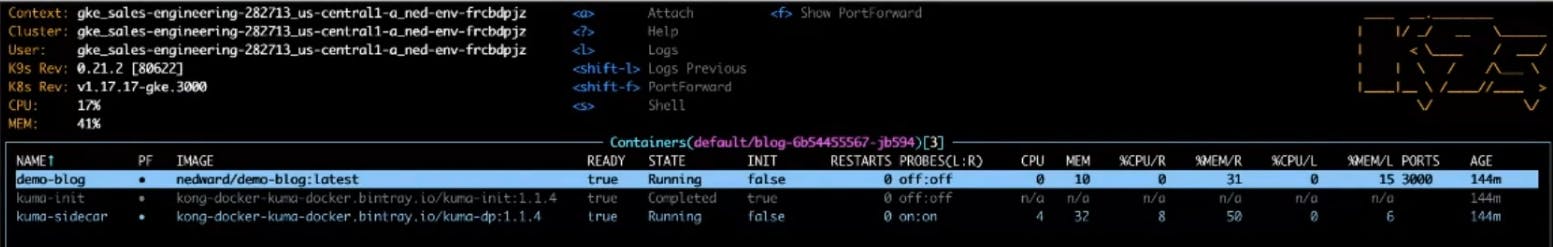

In the below screenshot, you can see my environment. I have the Apollo service (my GraphQL), a blogging service, a user service and Postgres. I'm just missing that natural language processing service to get my application working.

Check the VM Service Connection

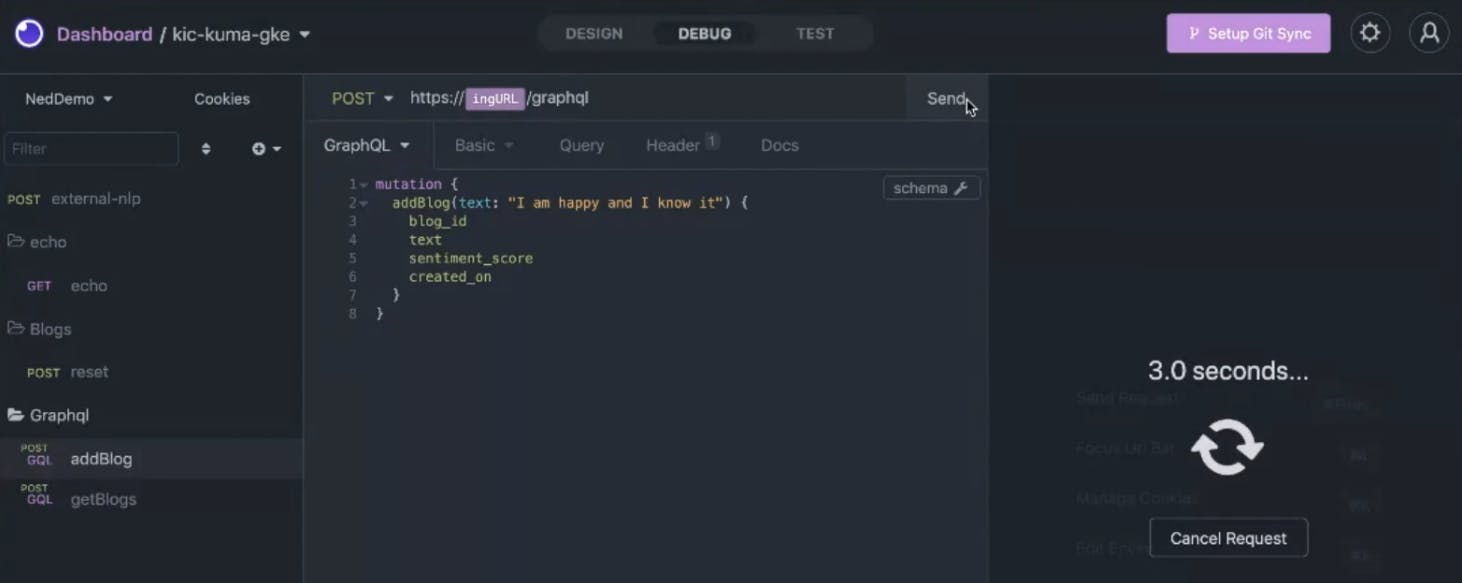

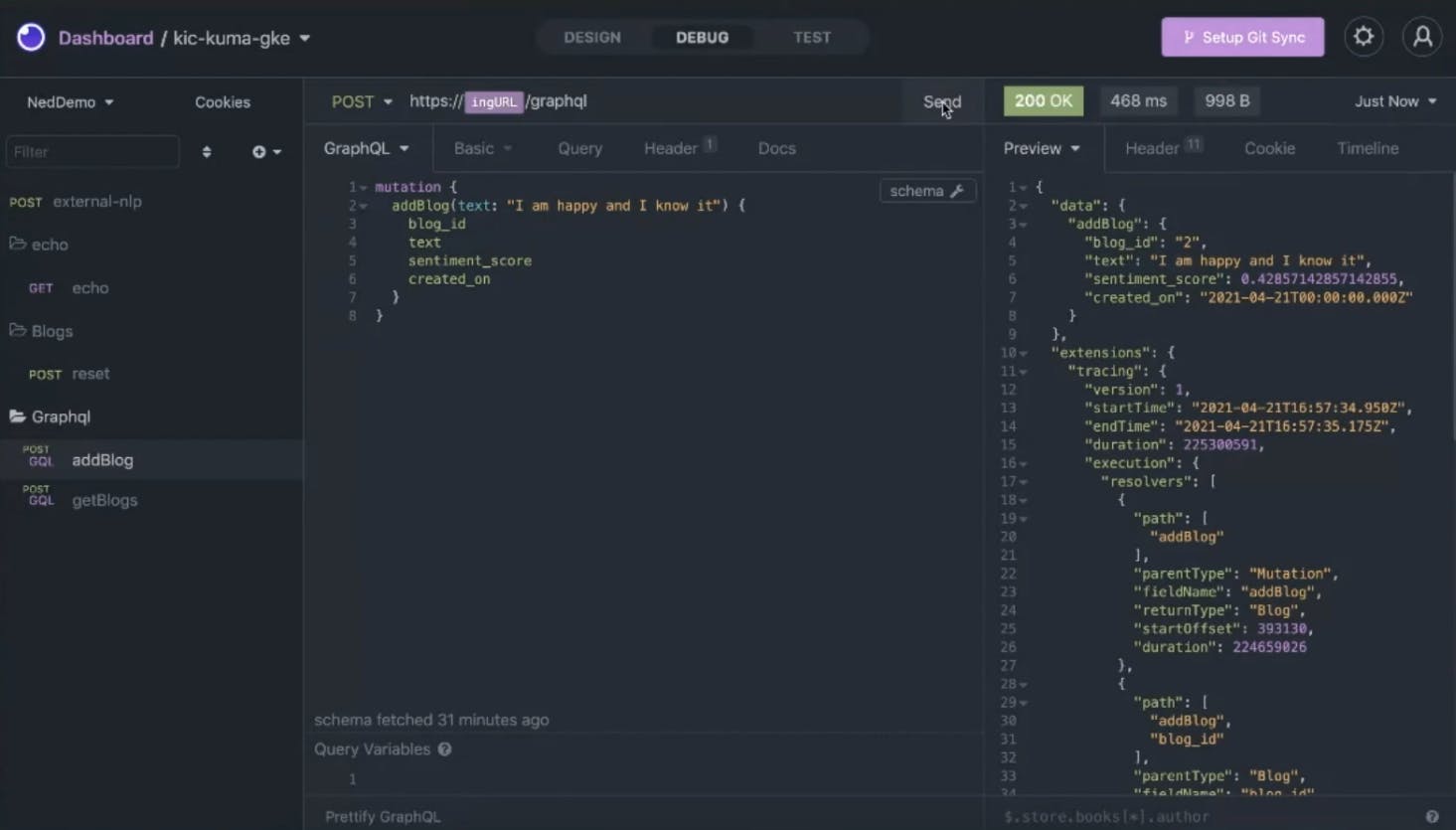

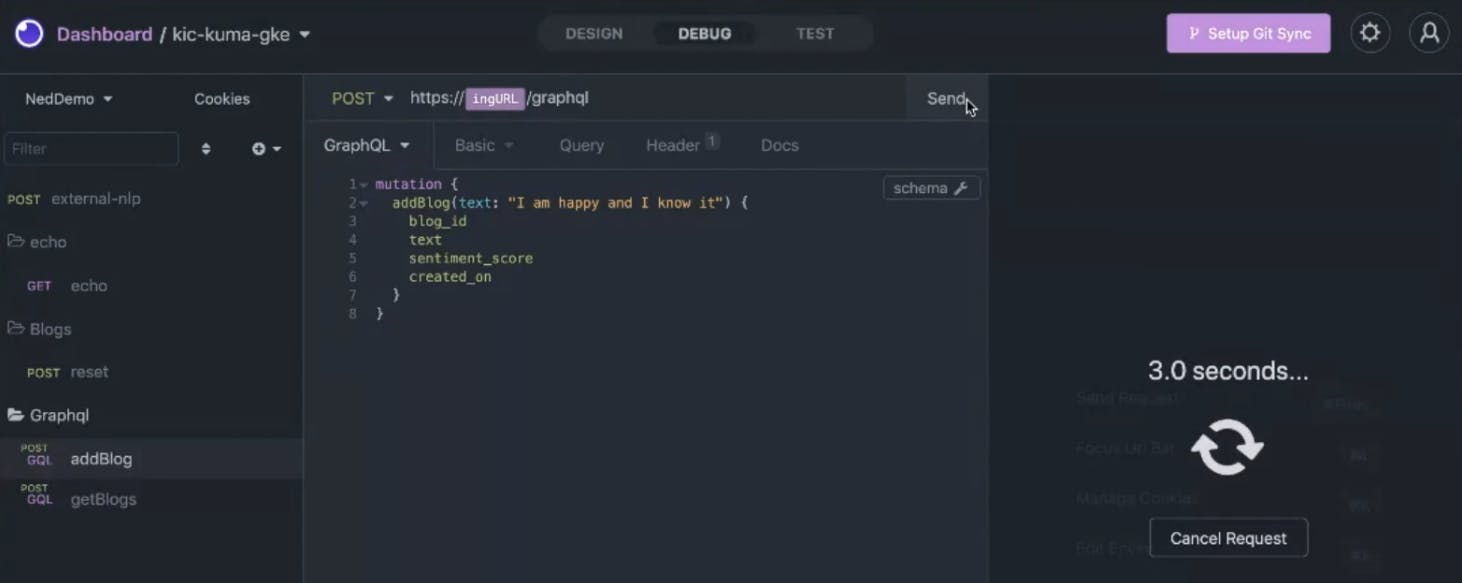

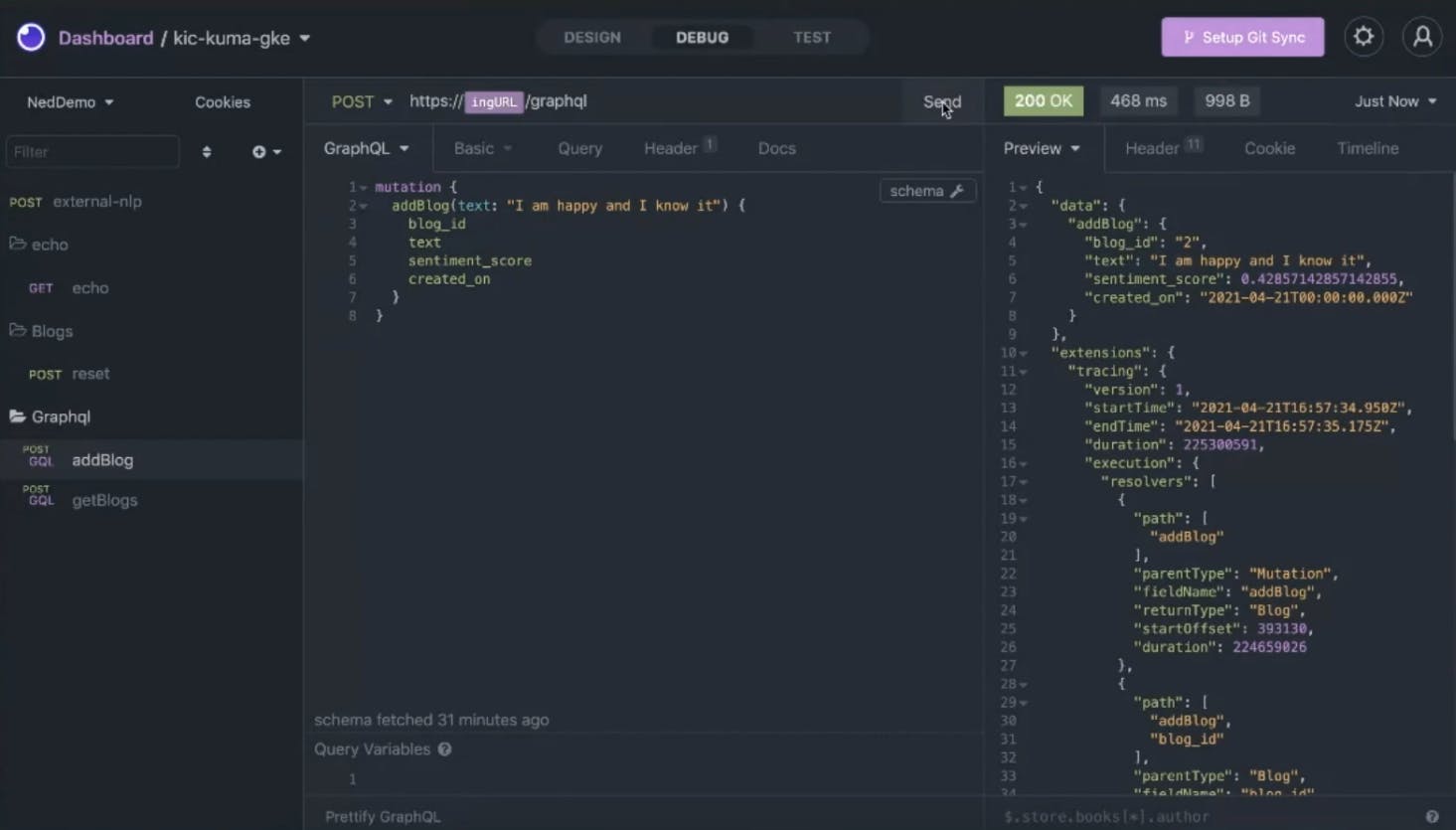

I can test that in Insomnia. When I run the request, it times out after 15 seconds because the natural language processing service is a hard dependency.

Access VM Service with Service Mesh

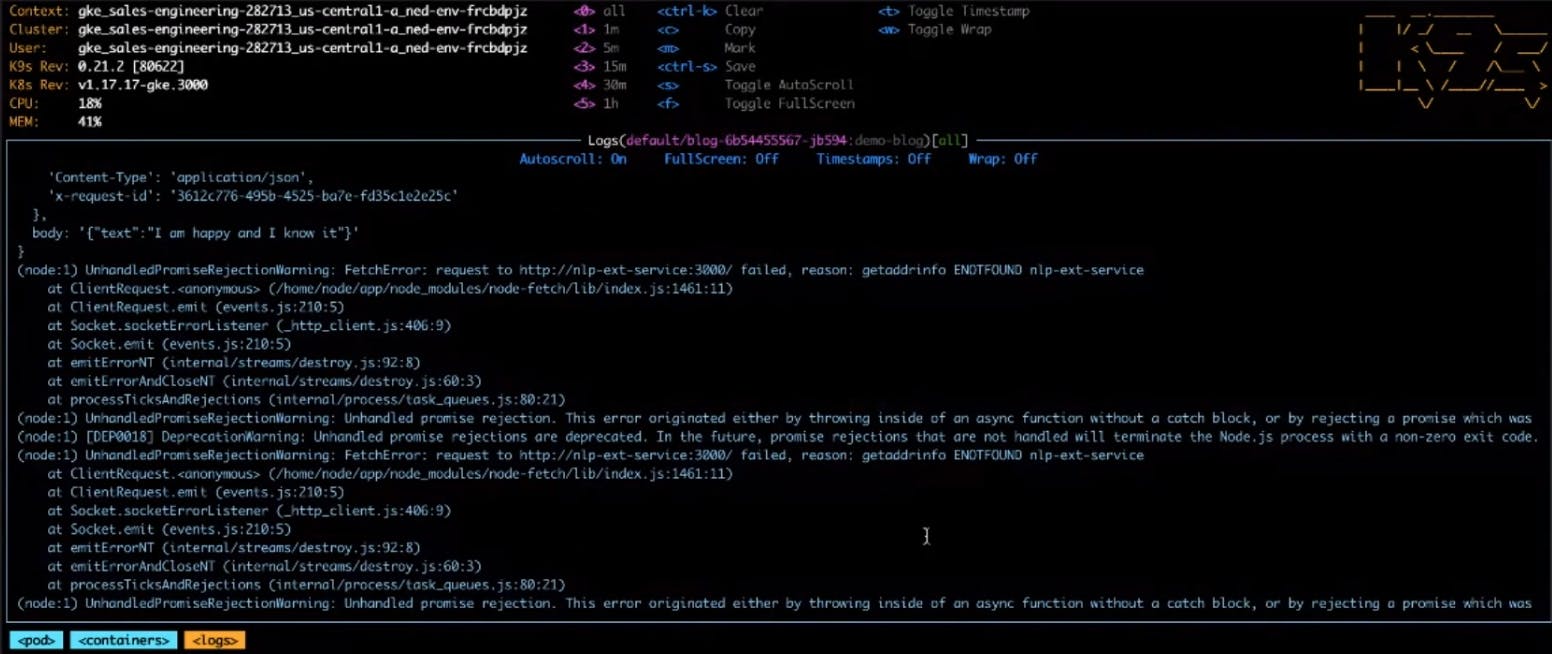

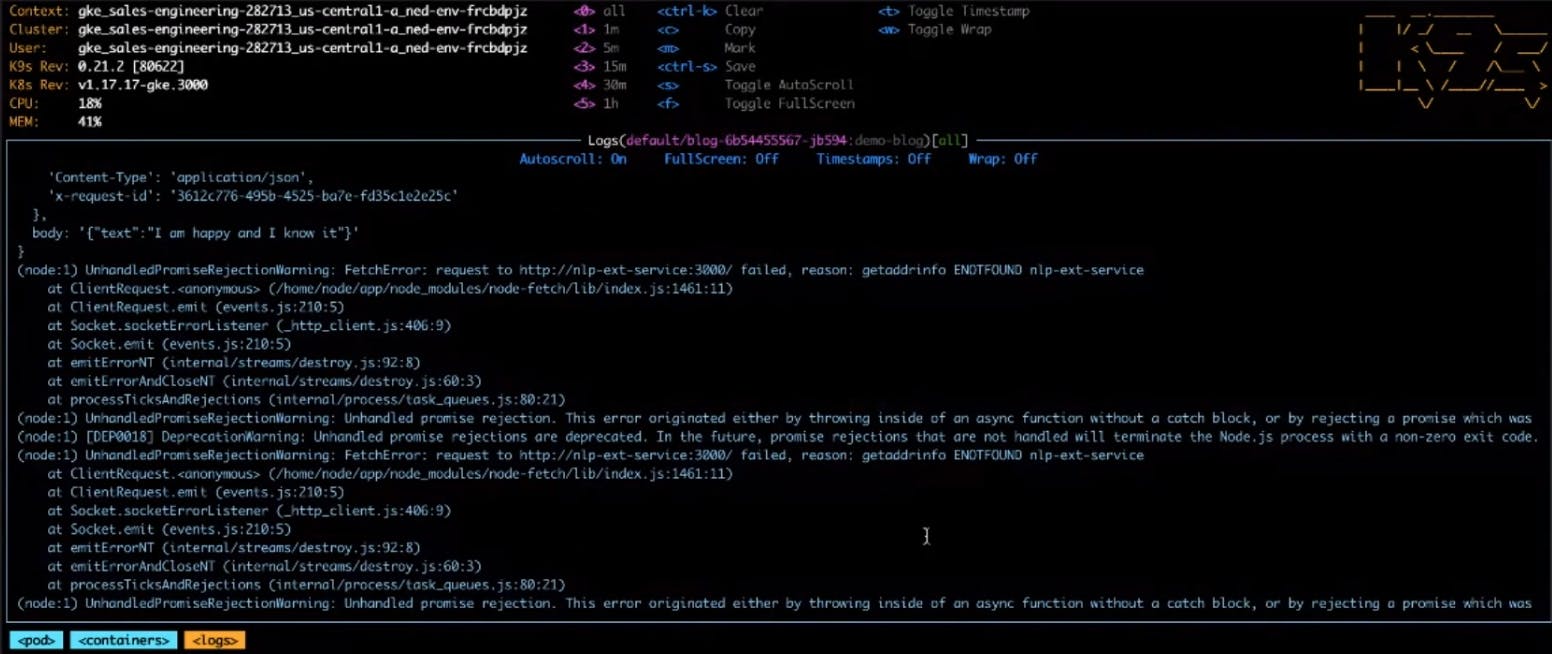

In the Apollo service below, we can see it's asking for the natural language processing service. So how do we get access to this kind of external service and still use our service mesh?

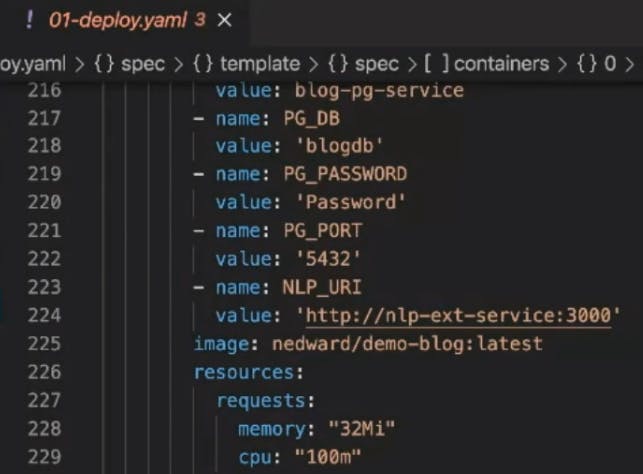

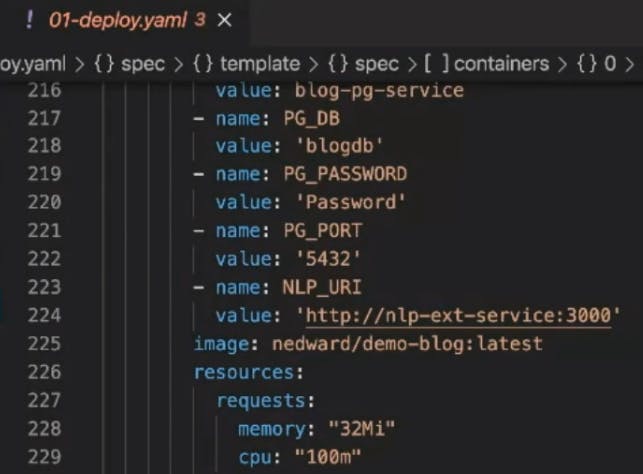

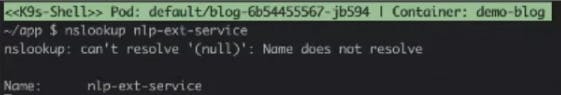

One thing that we can do is build this into our deployment. In my Kubernetes definition, I have my blog service. It has some environment variables, including a value for NLP, which is just a URL. That URL points to this NLP EXT service on port 3000. And that's what's failing, because the connectivity issue isn't resolved yet.
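As a rough sketch of what such a definition might look like, the excerpt below shows a Deployment whose container receives the NLP endpoint as an environment variable. The resource names, the image, the variable name `NLP_URL` and the hostname are all assumptions for illustration; the screenshots show the actual values used in this environment.

```yaml
# Hypothetical excerpt of the blog service Deployment; every name here is illustrative.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blog-service
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blog-service
  template:
    metadata:
      labels:
        app: blog-service
    spec:
      containers:
        - name: blog-service
          image: example.com/blog-service:latest  # placeholder image
          env:
            - name: NLP_URL                        # assumed variable name
              value: "http://nlp-ext.mesh:3000"    # assumed hostname; port 3000 as described above
```

Keeping the endpoint in an environment variable means the application code does not change when the hostname later resolves through the mesh.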

In my service mesh, I'll build an external service policy that points to that external service outside of the service mesh, letting me proxy to it and meet it halfway. That way, I can still apply policies and get visibility. It won't deliver the same benefit as putting a data plane proxy on that server, which would pull it into the service mesh and enforce policy completely.

Nonetheless, it does give some advantages. For instance, I could extend my mutual TLS policy further, or at least cover that first half of the connection.
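For reference, an external service policy in Kuma on Kubernetes could look roughly like the following. This is a minimal sketch rather than the exact policy from this demo: the service name `nlp-ext`, the mesh name `default` and the VM address are placeholders I've assumed.

```yaml
# Minimal Kuma ExternalService sketch; name and address are placeholders.
apiVersion: kuma.io/v1alpha1
kind: ExternalService
mesh: default                      # assumes the default mesh
metadata:
  name: nlp-ext
spec:
  tags:
    kuma.io/service: nlp-ext       # the name the mesh uses to identify the service
    kuma.io/protocol: http
  networking:
    address: nlp.example.internal:3000  # placeholder for the VM's host and port
    tls:
      enabled: false               # plain HTTP from the proxy to the VM in this sketch
```

The policy only tells the mesh where the VM lives. Traffic from the proxy to the VM still crosses the network outside the mesh, which is why this covers only the first half of the connection.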

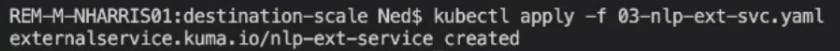

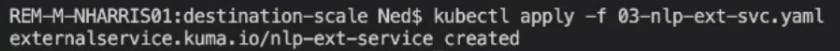

I'll go ahead and apply this.

That created an external service that will now be accessible by my application through the service mesh.

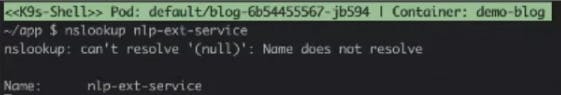

I'll do an nslookup to check. The name now resolves through the mesh's DNS layer, because I built that external service.

Test VM Service Connection Again

If I test this again in Insomnia, I'm back in business. All of this is just exposing an external API to our Kubernetes environment. In reality, that API lives in a whole different Google project and runs on a VM. But again, this could be a bare metal server.

Conclusion

Ideally, this would buy you some time to solve the internal politics and get that data plane proxy on the service. That way, you'll get the complete benefits from the service mesh. But it doesn’t have to be an all-or-nothing proposition.

In this quick example, I took a service that I didn't control and gave the mesh a way to know about it in its DNS space. Then, my application used our normal Kubernetes configurations and manifests to connect to it, business as usual.

Service connectivity is central to making your business more agile. I hope this article gave you some ideas for how to get a quick win on your journey from monolith to microservices.