APIs are the backbone of every modern application that powers our day-to-day lives. As a matter of fact, API traffic today accounts for at least 83% of global internet traffic [1], which underlines the importance of modern API infrastructure that can unlock innovation, agility, fast release cycles, IP reuse and more scalable teams. Most of this innovation is driven by technologies that are open source and platform-agnostic, like Kong Gateway.

Today, the growing importance of APIs has resulted in an explosion of vendors, old and new, that claim to be the next-generation Kong Gateway, but are they? Let's take a deeper look at factual data and Kong's technology, starting with its adoption, to see why Kong is the king of the API jungle.

A deeper look at Kong's adoption

The value of any API that we create is fundamentally driven by its adoption in production: We could build hundreds of APIs in our organization, but if none of them are adopted, then these APIs are just looking at each other. Likewise, the value of our API infrastructure is also driven by its adoption, because adoption is a proxy metric (pun not intended) for the reliability and innovation that the infrastructure provides when running such a critical piece of the stack.

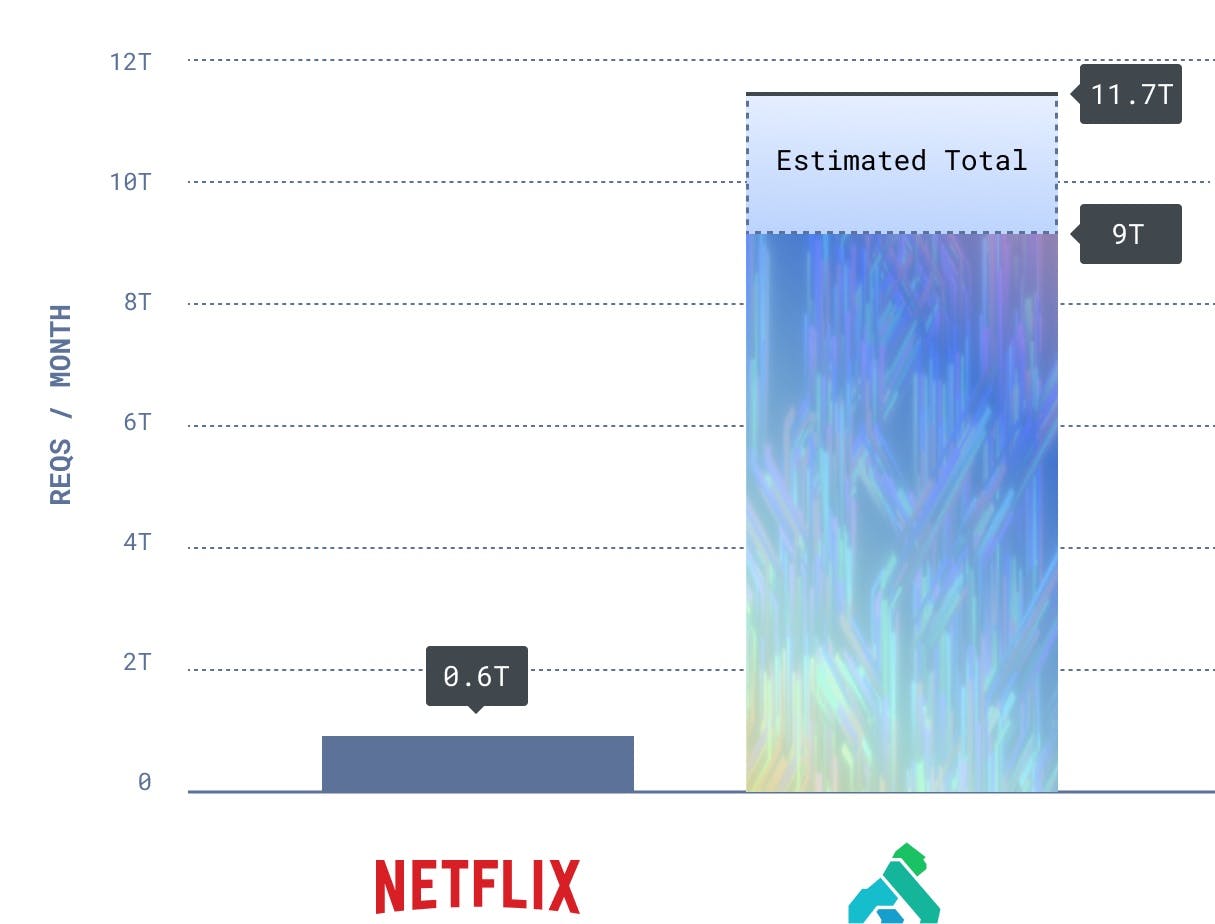

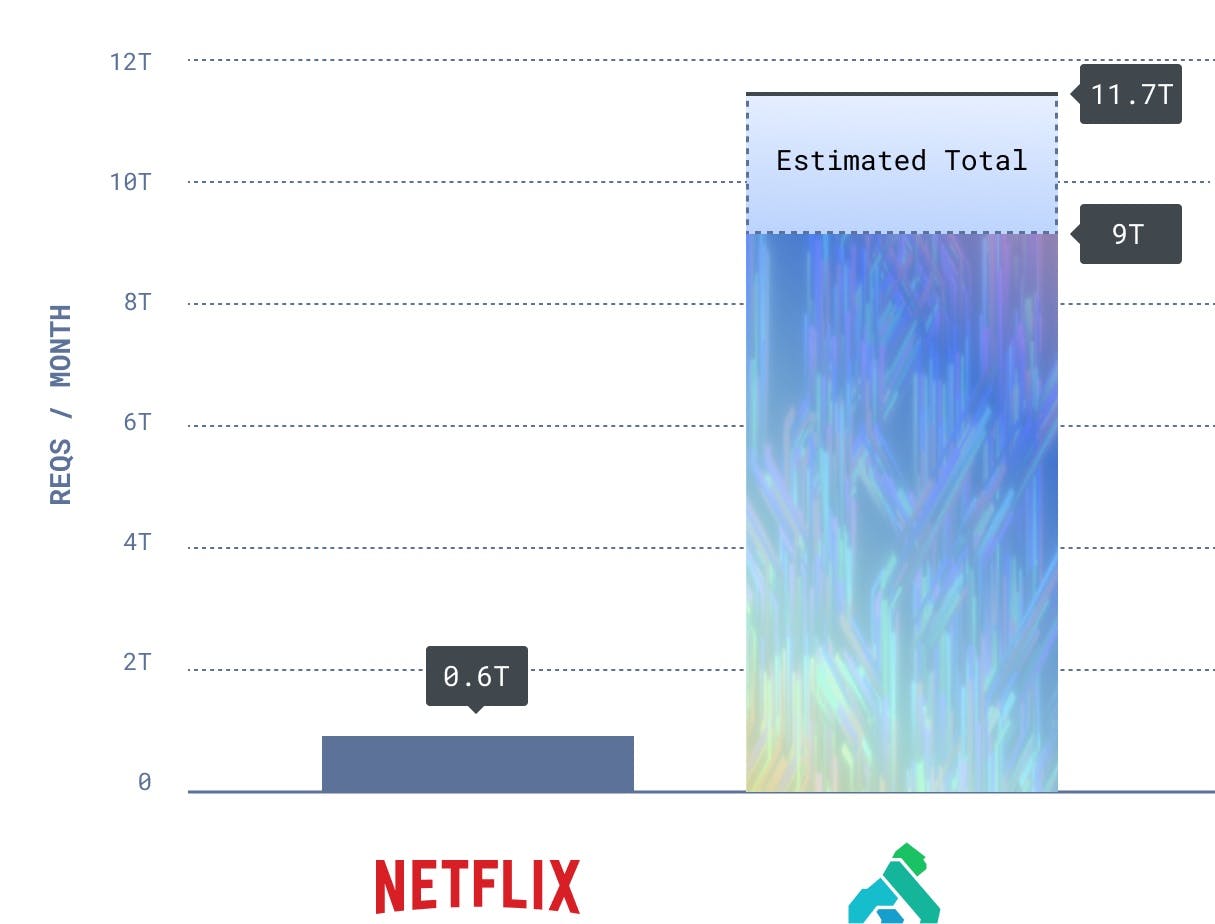

Today, Kong Gateway is by far the most widely used open source API gateway in the world across different metrics. First, Kong today powers the world with approximately 9 trillion requests per month, a number that we derive from the anonymous reports that users can enable (or disable) in their Kong instances. As such, this is a conservative number, as it represents just the subset of nodes that report (we estimate the real number of API requests running through Kong Gateway to be 30% higher, approximately 11.7 trillion requests per month).

To put these numbers in context, let's take a look at Netflix, our industry's favorite example of an API-first organization that runs entirely on APIs. Netflix in 2014 processed 2B API requests per day [2], which is equivalent to approximately 60B requests per month. Netflix has grown considerably since then, so let's multiply this number by 10, to 600B requests per month, and we come up with the following chart:

Kong Gateway today processes traffic estimated at roughly 20 Netflixes worldwide (11.7 trillion / 600 billion ≈ 19.5). Kong is trusted to run a significant part of the world's API infrastructure across every vertical and industry in the world, without ever going down.
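The back-of-the-envelope arithmetic behind these figures can be reproduced in a few lines. The sketch below uses only the numbers quoted above; the variable names and the 30-day month are illustrative assumptions, not part of any Kong tooling.

```python
# Back-of-the-envelope check of the traffic figures quoted in the text.
# Inputs come from the post; the 30-day month is an assumption.

TRILLION = 10**12
BILLION = 10**9

reported_per_month = 9 * TRILLION                        # from anonymous reports
estimated_per_month = reported_per_month * 130 // 100    # +30% for non-reporting nodes

netflix_2014_per_day = 2 * BILLION                       # figure cited in [2]
netflix_2014_per_month = netflix_2014_per_day * 30       # assumed 30-day month
netflix_today_per_month = netflix_2014_per_month * 10    # the 10x growth assumed in the post

ratio = estimated_per_month / netflix_today_per_month

print(f"Estimated Kong traffic: {estimated_per_month / TRILLION:.1f}T requests/month")
print(f"Assumed Netflix traffic: {netflix_today_per_month / BILLION:.0f}B requests/month")
print(f"Ratio: ~{ratio:.1f}x")
```

The ratio comes out at about 19.5, which is where the "~20x" figure comes from.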

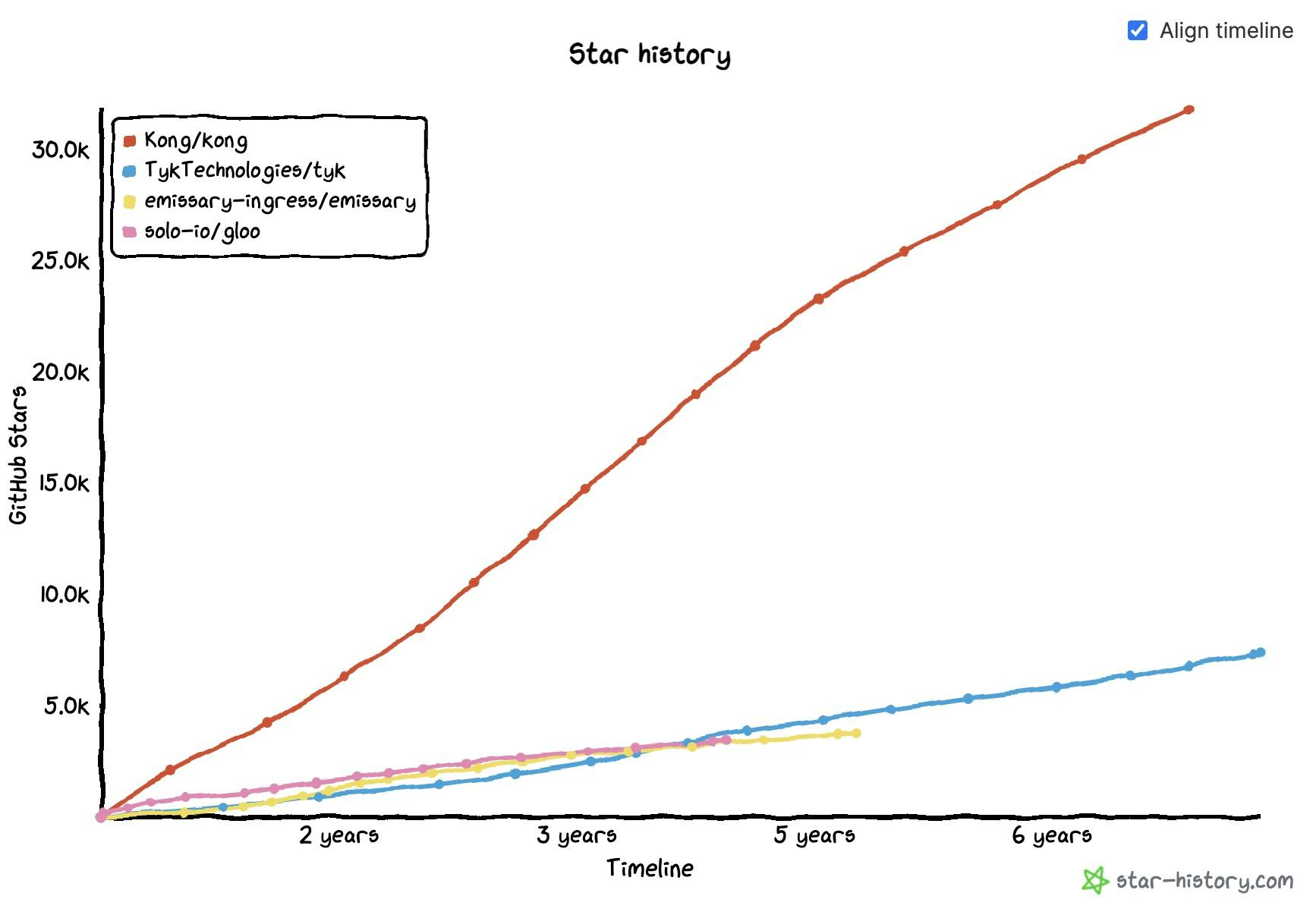

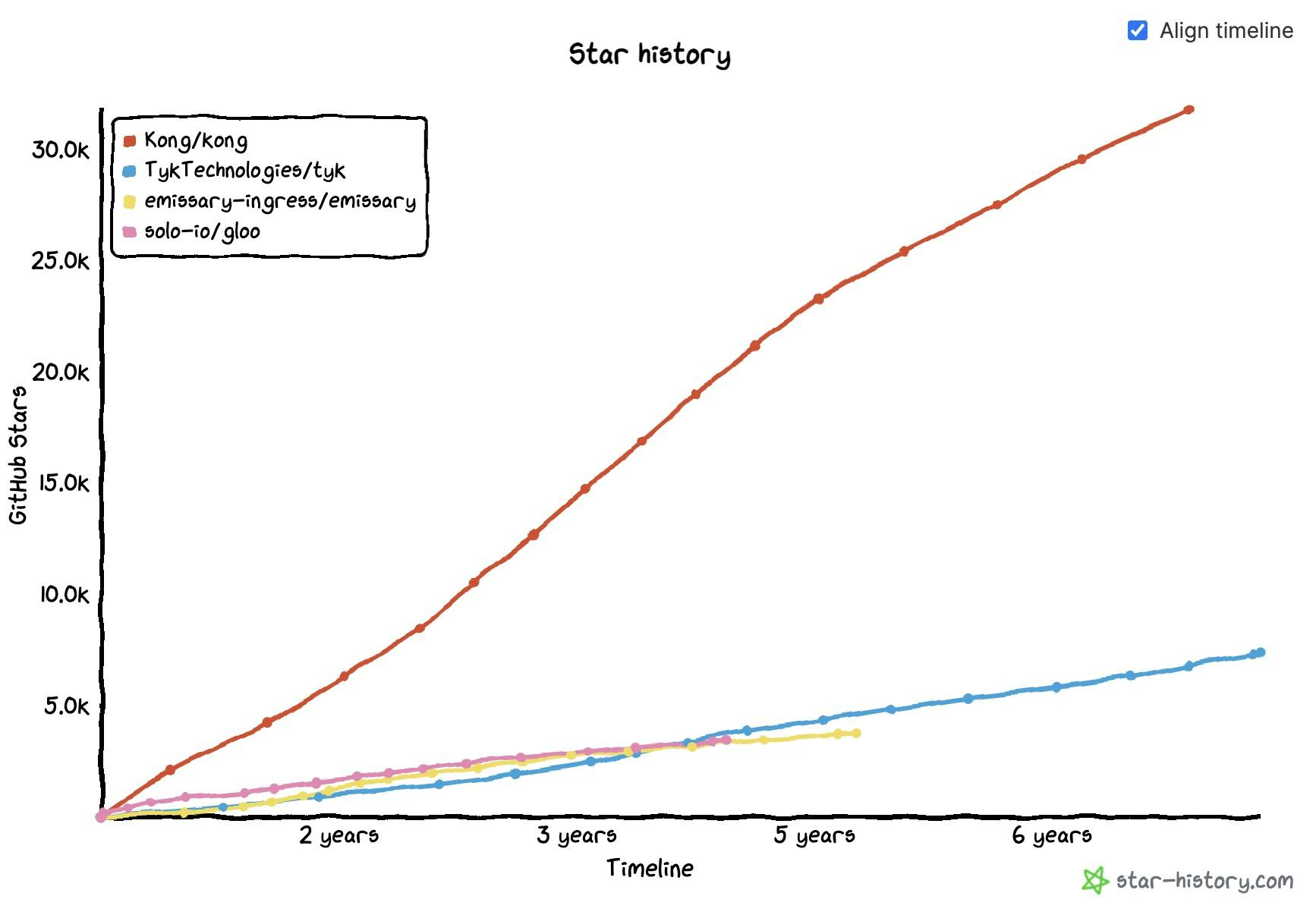

When we take a look at the adoption metrics in the open source community, we can see even more clearly that Kong Gateway leads the pack in developer mindshare and momentum:

Both Kong's absolute adoption and its growth rate are the highest in the open source API ecosystem, and Kong is the #1 API tool on GitHub. Kong invests a significant amount of resources in open source development, with the goal of building the strongest technology for modern cloud native API infrastructure.

Kong Gateway's ecosystem is also quite large, with 1000+ plugins and integrations [3] created by the community that augment the overall capabilities of Kong. In the next section of this blog, we will understand how Kong's technology helped create a thriving ecosystem via its SDKs.

While Kong is a heavy contributor to OSS, it also provides an enterprise platform that helps the company grow - and by doing so, it is able to allocate even more resources to open source innovation. Today, Kong's underlying technology, which goes above and beyond just the API gateway capabilities, is being used by organizations of the caliber of PayPal, Nasdaq, GSK, AppDynamics, Miro, Moderna, RightMove, HSBC, Wayfair, OlaCabs and many more across every industry vertical, including technology, banking and financial services, healthcare, consumer, travel, federal and telecommunications.

Let's take a look at Kong's underlying technology to further understand why Kong is being adopted at this scale.

Kong's Gateway Technology focused on speed

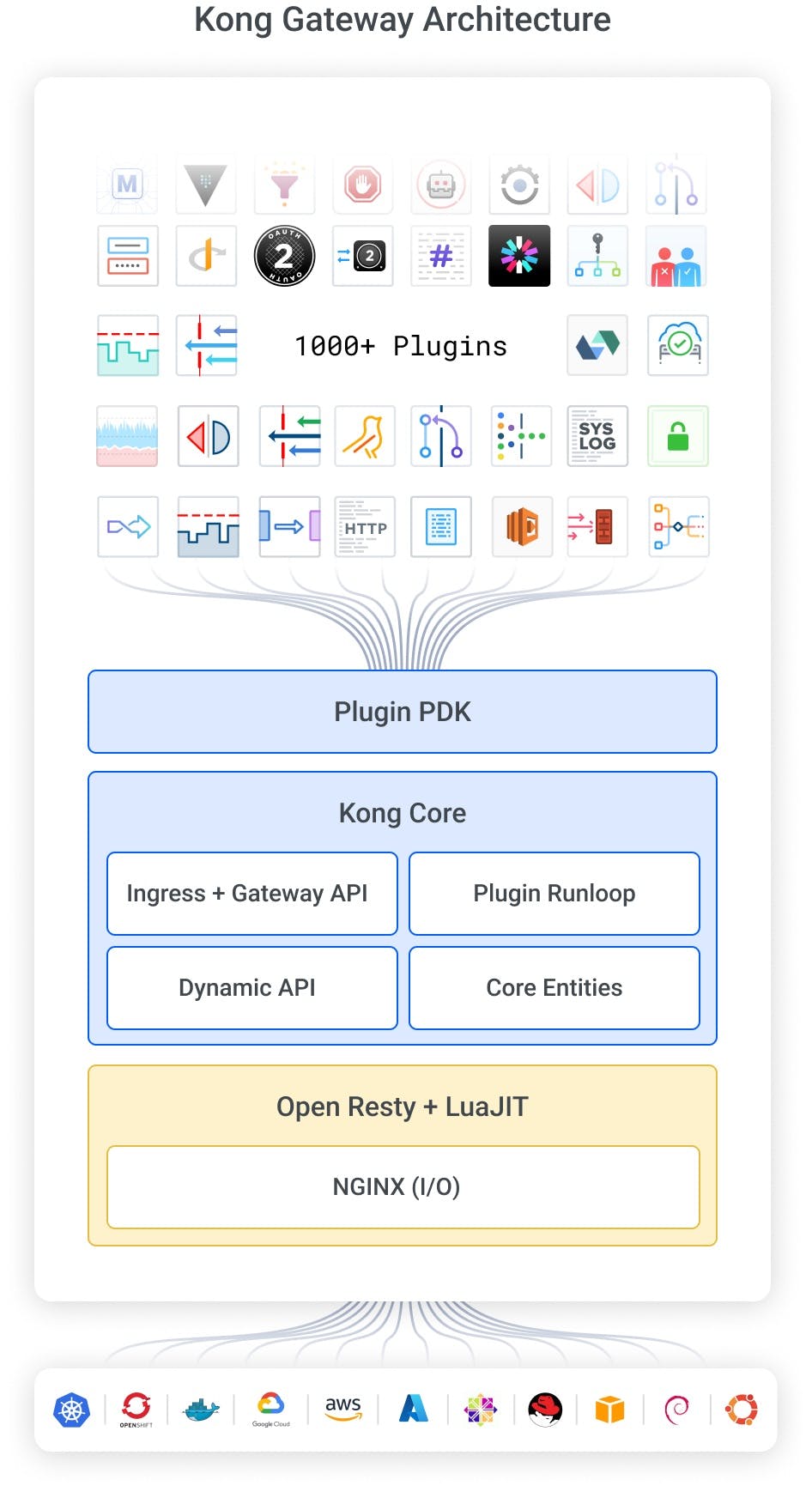

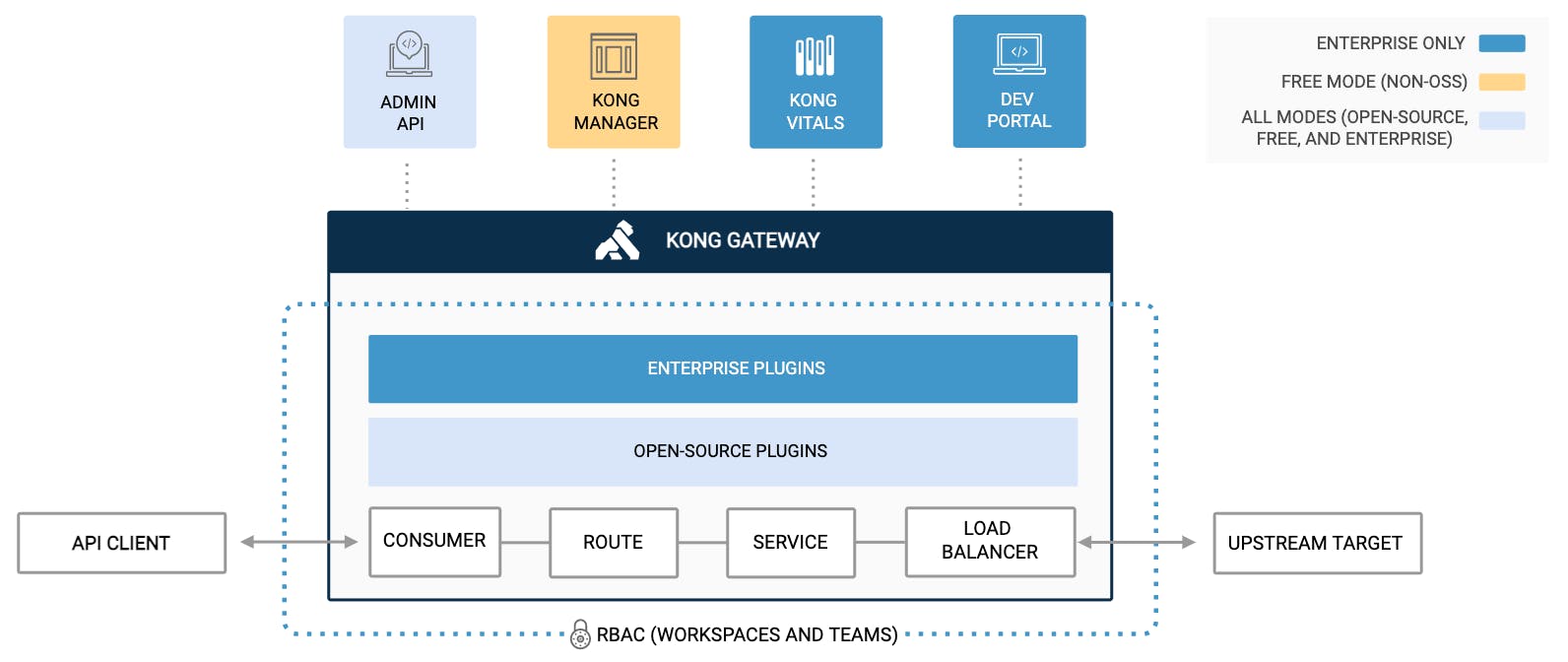

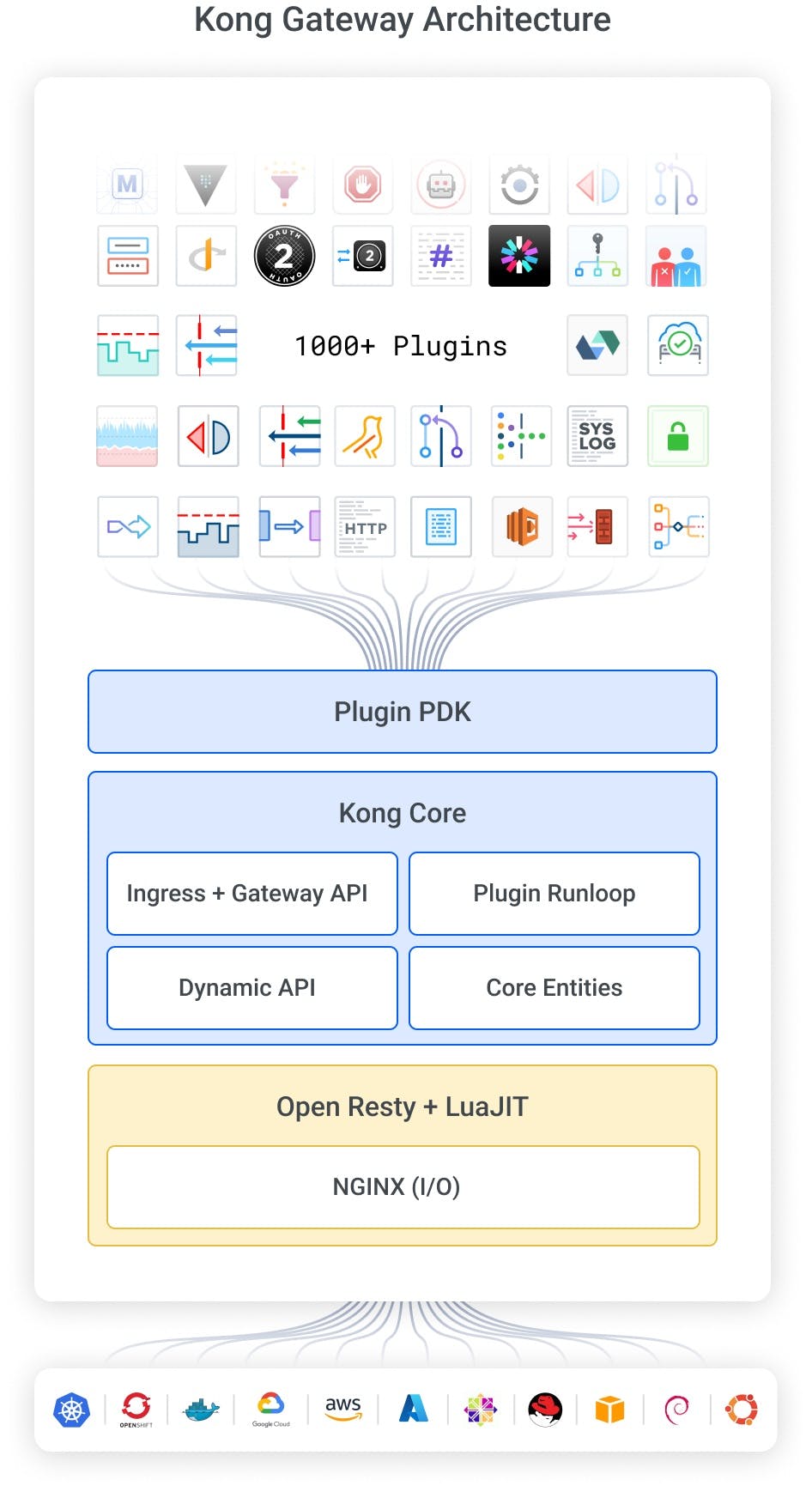

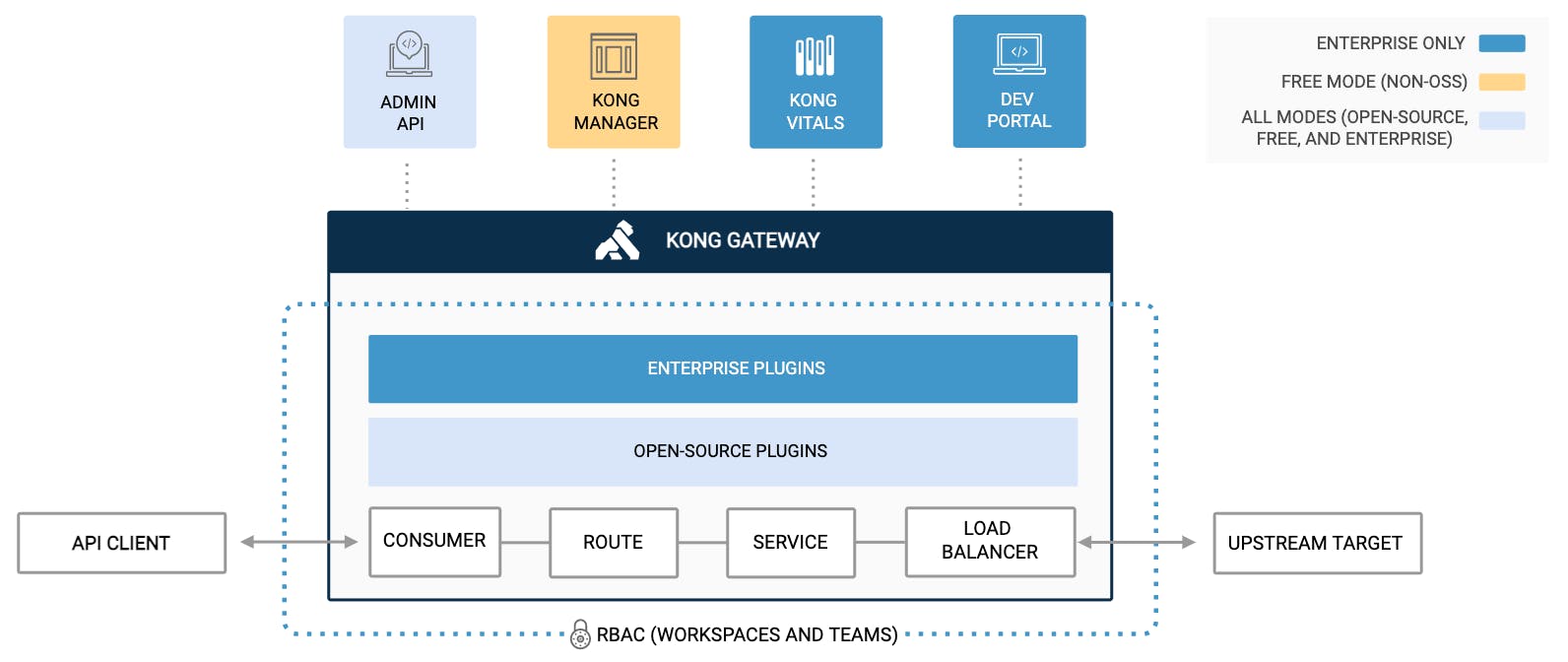

Kong's adoption is being driven - at the core - by Kong's technology, which we will now analyze in detail. We can divide Kong's technology into three layers: the underlying proxy technology (NGINX, via OpenResty), Kong Core and Kong Plugins.

These are the high-performing architectural layers that are powering Kong Gateway, with 1000+ plugins built by Kong and by the ecosystem that users can choose from.

Let's take a deeper look at these three layers to understand why Kong is built the way it is.

The underlying proxy technology that Kong uses is open source NGINX. Within Kong, NGINX is used for a very specific purpose: accepting the incoming requests and managing the I/O of the request/response lifecycle. No more, no less. The actual API management functionality is provided by Kong's own codebase and IP in the second and third layers. These layers are the result of significant investment in R&D and a myriad of features and performance improvements handcrafted over the years, as we will learn shortly.

As such, NGINX is being used in a very specialized way and provides less than 5% of the overall features that Kong delivers; the rest comes from the other layers.

When we decided to build Kong, we wanted to rely on rock-solid I/O for the underlying operations, and NGINX, the most adopted web server in the world and the one processing the largest share of traffic on the Internet [4], was the obvious choice. NGINX has been built in an extremely optimized way, with a level of meticulous detail that is hard to find in other projects and is perhaps closer to that of the Linux kernel. One example: NGINX keeps hash table buckets aligned on the cache line boundary to avoid sub-optimal CPU caching behavior when accessing a bucket.
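To make the cache-line point concrete, here is a small illustrative sketch of the power-of-two rounding that alignment code typically performs, and of why an unaligned record can straddle two cache lines. It is not NGINX code; the 64-byte line size and the function names are assumptions chosen for the example.

```python
# Illustrative only: round a size up to the next multiple of the cache line,
# and count how many cache lines a record touches at a given offset.

CACHE_LINE = 64  # bytes; an assumption, typical for x86-64 CPUs


def align_up(size: int, alignment: int = CACHE_LINE) -> int:
    """Round size up to the next multiple of alignment (a power of two)."""
    if alignment <= 0 or alignment & (alignment - 1):
        raise ValueError("alignment must be a positive power of two")
    return (size + alignment - 1) & ~(alignment - 1)


def lines_touched(offset: int, size: int, alignment: int = CACHE_LINE) -> int:
    """Count the cache lines spanned by `size` bytes starting at `offset`."""
    first = offset // alignment
    last = (offset + size - 1) // alignment
    return last - first + 1


bucket_size = 40  # hypothetical bucket size in bytes

# A second bucket packed right after the first starts at offset 40
# and spills across two cache lines.
print(lines_touched(offset=40, size=bucket_size))

# Starting it at the next aligned offset keeps it inside a single line.
print(lines_touched(offset=align_up(40), size=bucket_size))
```

Reading a bucket that sits inside one cache line costs at most one line fetch, which is the behavior the alignment is meant to guarantee.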

The performance of NGINX is outstanding. For example, Netflix has pushed more than 100 gigabits per second on a single CDN node [5]. Cloudflare is another example of heavy usage of NGINX and uses NGINX to power its global CDN infrastructure that handles 18%+ of the world's internet traffic [6] or about 5M requests per second. NGINX is so fast and performant that it is the technology of choice not only in the cloud and for containers (with the NGINX ingress being the most adopted technology on Kubernetes and maintained by the Kubernetes team) but also on physical infrastructure where providers are limited by rack space and server resources and have to get the most out of them. NGINX is so ubiquitous in our industry that it has become like electricity: it just works.

Kong has deep expertise in the full stack of Kong Gateway, including the NGINX core, which Kong regularly expands with features and capabilities that are not natively available, and Kong has also contributed to upstream NGINX. NGINX provides Kong with the fastest, most lightweight and reliable core engine for managing requests with non-blocking I/O, which Kong can freely manipulate to provide advanced API connectivity capabilities via Kong Core and its plugins.

Over the years, many new underlying proxy technologies emerged with their own strengths and weaknesses, but none have the performance, adoption and reliability of NGINX.

Kong is leveraging NGINX via another technology called OpenResty, one of the top three most used web servers in the world, along with vanilla NGINX and Apache [7]. OpenResty is a fantastic technology that embeds the super-fast LuaJIT into the highly performant NGINX. LuaJIT allows NGINX to be extended with modules created in a higher-level language (Lua). By leveraging LuaJIT, the Kong codebase runs on top of the fastest JIT compiler in the world - faster than V8 [8] - that is being regularly maintained and improved by the OpenResty team. LuaJIT is heavily used in constrained environments like embedded systems and mobile applications because of how fast and lightweight it is.

LuaJIT is extremely performant because of its clever tracing compiler, which can identify optimal trace start points and continuously optimizes the generated assembly for hotspots. The assembly itself is handcrafted for optimal performance on the target processor. Additional optimizations like Static Single Assignment form IR, code sinking using snapshots, sparse snapshots and careful register allocation make LuaJIT operate very close to native speed, and in some cases allow LuaJIT to run faster than static code generated by regular compilers. LuaJIT's interpreter itself is written in assembly to optimize execution at every step, and overall it is regarded as a work of art in our industry.

OpenResty is a vibrant, stable and well-maintained project. Cloudflare, for example, is using OpenResty across its CDN infrastructure, and other users include Shopify, TikTok, Affirm and GrubHub. OpenResty is fundamentally a control flow layer for NGINX's I/O, which embeds the NGINX runtime and allows Kong Gateway to control the request and response life cycle in order to provide its functionality (by doing so, Kong is extending NGINX). Kong also contributes to the OpenResty project as part of our commitment to continually improve the entire stack. For all of these reasons, Kong is leveraging OpenResty to power its API gateway and provide sub-millisecond processing latency to deliver the best performance to APIs and microservices. This makes Kong Gateway the fastest gateway in the world.

Kong Gateway further improves this stack with fully dynamic configuration, a RESTful API, native support for Kubernetes and extensibility via plugins in five different languages (Ruby, Python, Lua, Go and JavaScript) - all innovations that keep driving the incredible number of production deployments of Kong Gateway in the industry.

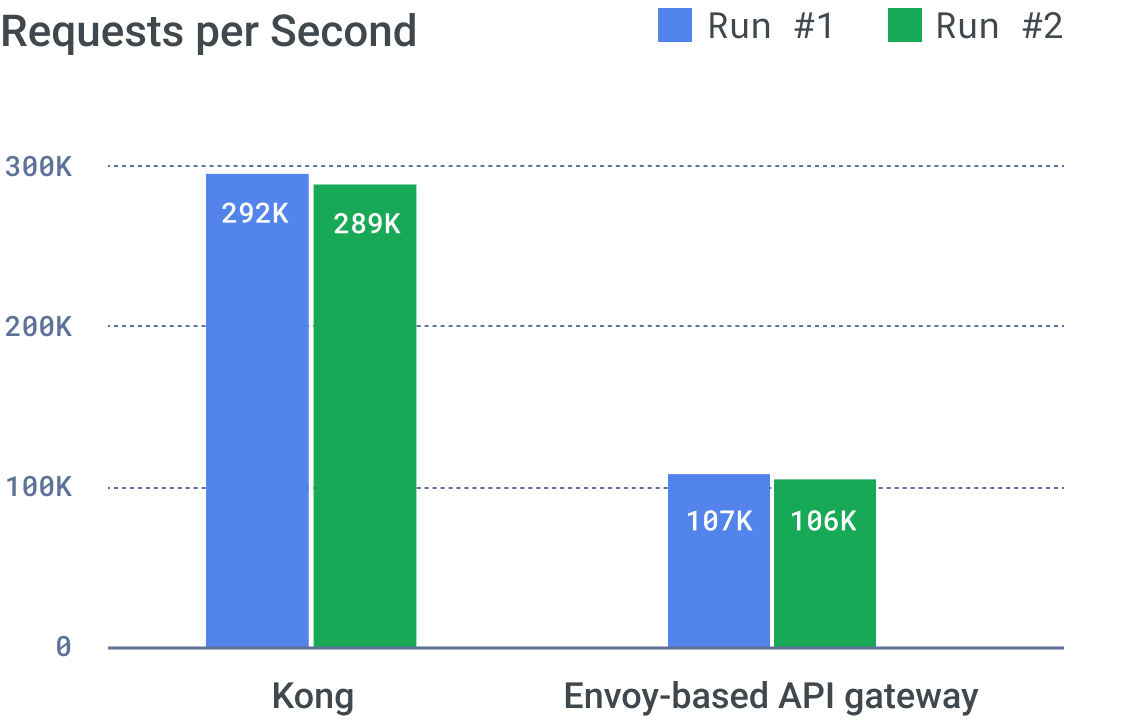

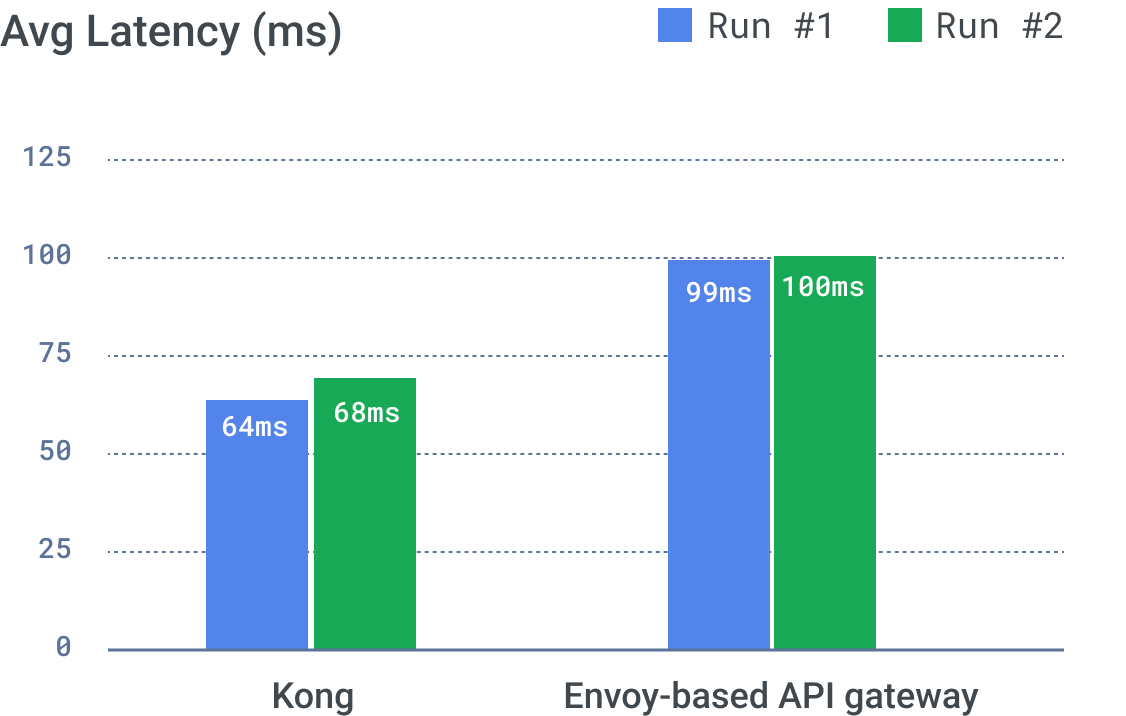

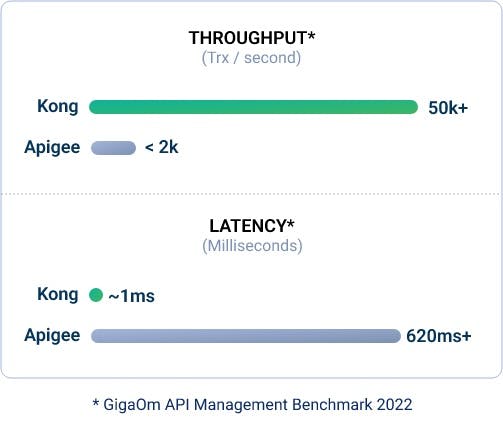

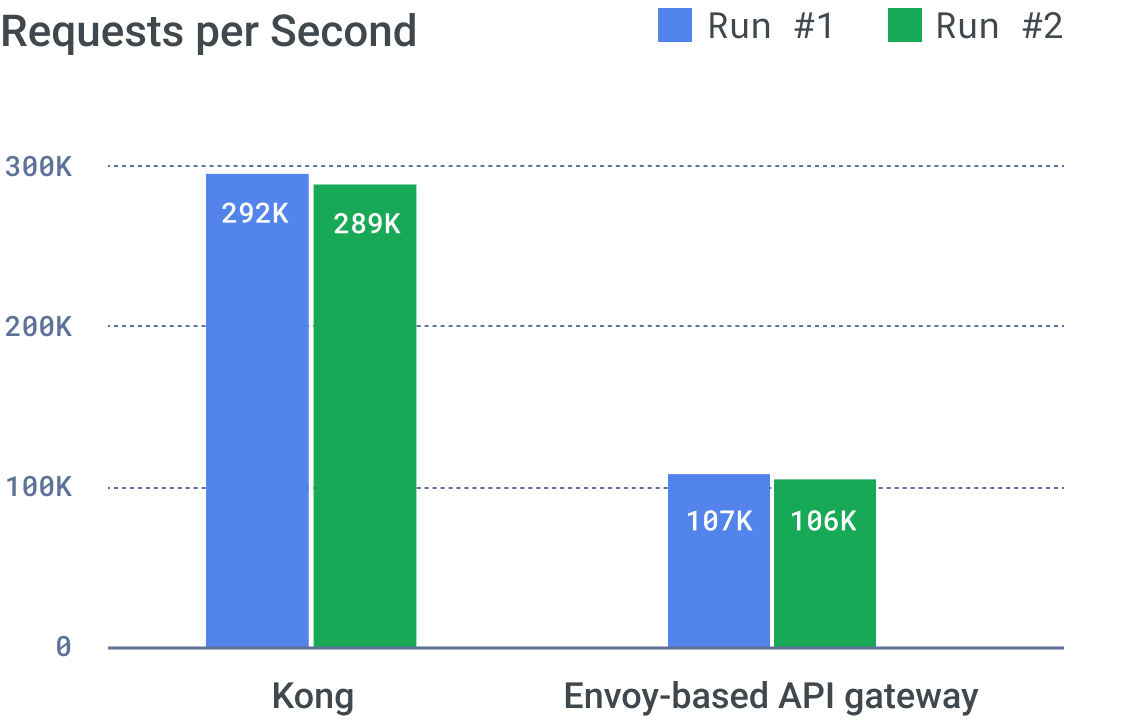

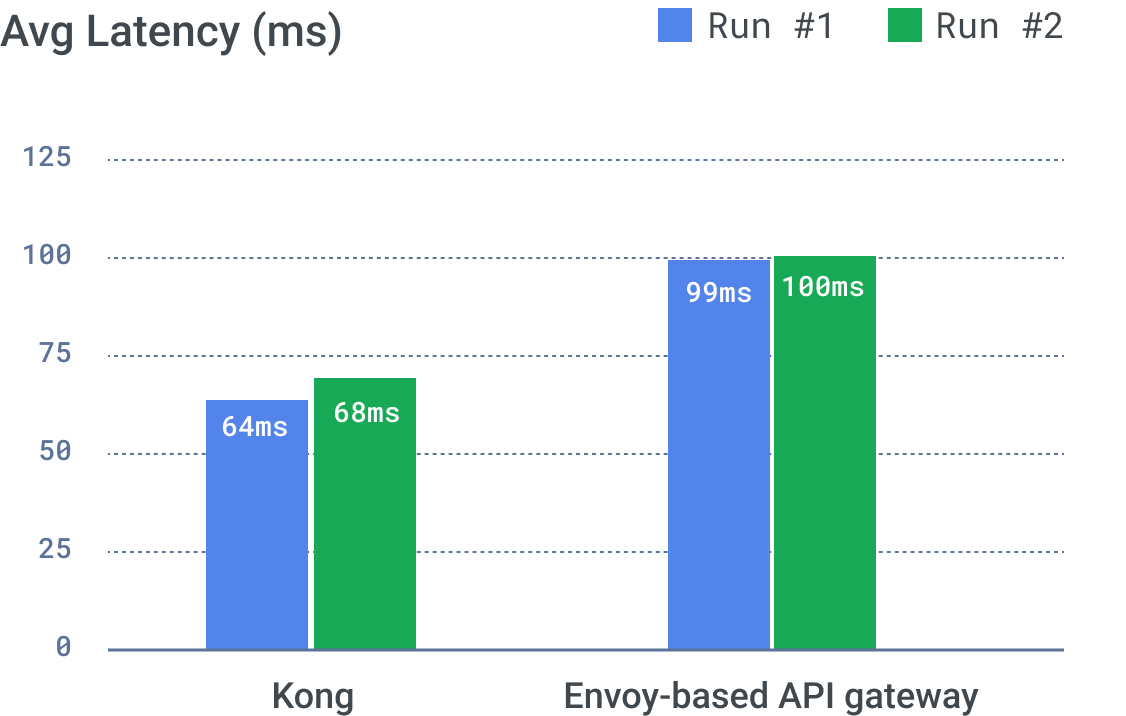

To show that the Kong Gateway technology stack is superior, let's take a look at the following performance benchmarks against another project, based on Envoy proxy, which claims to be "next-generation":

Kong Gateway has been benchmarked against an API gateway built on top of Envoy, built by a vendor that claims to be the "next generation". Kong is both faster and presents less latency in our benchmarks.

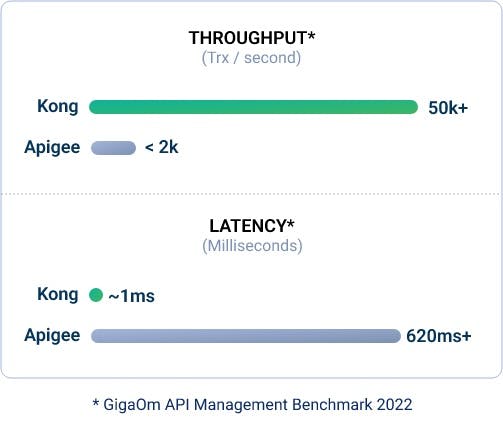

Below, a comparison with a more traditional API gateway instead:

The full report can be downloaded here.


These benchmarks highlight the technology innovation - and hyper-optimization - of the underlying core engine that makes Kong Gateway the most adopted API gateway in the world. Performance is critical in modern microservices architectures, but it's not the only requirement. Let's take a look at everything else that Kong Gateway has to offer.

Kong Core

NGINX is fundamentally a static proxy that notoriously requires reloads for configuration changes, but this is not the case with Kong Gateway. As a matter of fact, Kong adds an incredible range of capabilities to the underlying NGINX core that are fully dynamic and can be configured declaratively via native Kubernetes CRDs or YAML configuration (via decK), or imperatively via a RESTful Admin API. Every operation can be done with no reloads or restarts, and changes are applied instantaneously across the entire cluster.

The RESTful Admin API that Kong created is quite comprehensive, and it is being used both by automated CI/CD pipelines and directly by the APIs and the applications that want to manipulate the state of the gateway programmatically (for example, when provisioning authentication keys at runtime for new users). The Admin API also powers the GUI of Kong Gateway itself (called Kong Manager) and provides extensions for manipulating services, routes, consumers, plugins, etc.
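As a rough illustration of the runtime key provisioning mentioned above, the sketch below builds (but does not send) the two Admin API requests that create a consumer and ask the key-auth plugin for a credential. The address `http://localhost:8001`, the helper names and the username are assumptions for the example; check the Admin API reference for your Kong version before relying on the exact paths and payloads.

```python
import json
import urllib.parse
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumed Admin API address


def admin_request(method: str, path: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON request for the Admin API."""
    return urllib.request.Request(
        url=f"{ADMIN_URL}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )


def provisioning_requests(username: str) -> list:
    """Requests that create a consumer, then a key-auth credential for it."""
    safe_name = urllib.parse.quote(username, safe="")
    return [
        admin_request("POST", "/consumers", {"username": username}),
        # An empty body leaves key generation to the gateway.
        admin_request("POST", f"/consumers/{safe_name}/key-auth", {}),
    ]


if __name__ == "__main__":
    for req in provisioning_requests("alice"):
        print(req.get_method(), req.full_url, req.data.decode())
        # To apply against a running gateway: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the flow easy to test in a CI/CD pipeline without a live gateway.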

Kong Plugins, which we will explore later, can also expand the Admin API with their own custom methods, creating an environment that is extremely customizable to address virtually any need. This has been appreciated by Kong's users and enterprise customers because it gives them full control over the entire API gateway stack.

Kong Gateway is Kubernetes native and can be entirely configured via its Kubernetes Ingress Controller, which supports both the Ingress specification and the new Kubernetes Gateway API specification. As a matter of fact, Kong is one of the founding members of the K8s Gateway API committee that helped release the first set of specifications in the Kubernetes ecosystem.

We also understand that not every user of Kong is going to be running Kubernetes, and that's why Kong can also run on VMs or bare metal, using either a declarative YAML configuration or a PostgreSQL storage backend, which also allows the creation of a global cluster with perfectly synced Kong Gateway nodes. When running with a PostgreSQL backend, any managed database like AWS Aurora, RDS and others also works, as long as it supports a PostgreSQL-compatible interface.
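For readers who have not seen a declarative configuration, the following sketch generates a minimal one from Python and prints it as JSON. The service name, upstream URL, path and rate limit are made up for the example, and the field names reflect our understanding of the declarative format; verify them against the documentation for the Kong version in use.

```python
import json


def declarative_config(service_name: str, upstream_url: str, path: str) -> dict:
    """Build a minimal declarative config: one service, one route, one plugin."""
    return {
        "_format_version": "3.0",
        "services": [
            {
                "name": service_name,
                "url": upstream_url,
                "routes": [
                    {"name": f"{service_name}-route", "paths": [path]},
                ],
                "plugins": [
                    # Hypothetical limit: 60 requests per minute, counted locally.
                    {"name": "rate-limiting",
                     "config": {"minute": 60, "policy": "local"}},
                ],
            }
        ],
    }


config = declarative_config("orders", "http://orders.internal:8080", "/orders")
print(json.dumps(config, indent=2))
```

Because the whole gateway state lives in one document, it can be reviewed and versioned like any other file in a repository.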

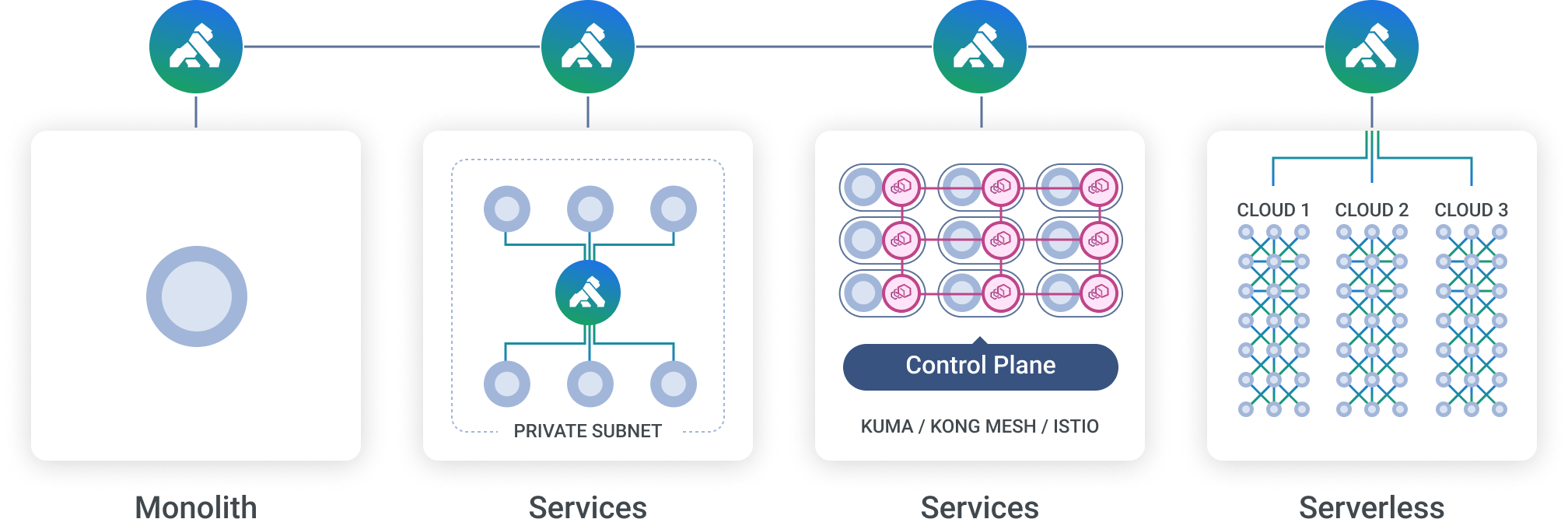

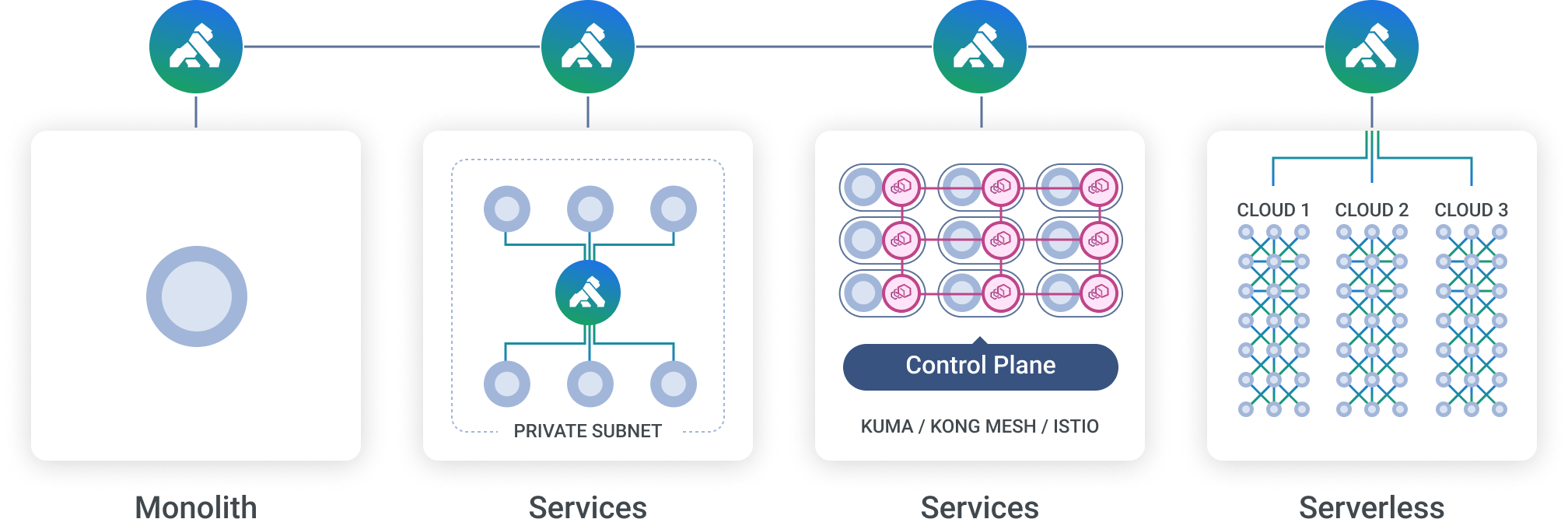

Kong has been built with architectural flexibility in mind, and as such, it supports applications built in a wide variety of ways, including microservices, service meshes, functions as a service (FaaS), containers, VMs, multi-cloud and more.

The Kong Core is essentially a runloop for plugins, and it is hyper-optimized for speed, as demonstrated in the benchmarks earlier.

Kong bundles support for REST, gRPC, WebSockets, GraphQL, Kafka, TCP and UDP as native protocols, in order to support API teams in whatever technology they want to use in their applications. Kong also supports the unique concept of "Consumers", which we originally invented to solve use cases for our own API marketplace - Mashape - of 20,000 APIs and 300,000 API consumers back in the day [9]. Consumers are not supported in other proxy technologies; they allow us to define grouping and management rules for the consumers of our APIs, what tier they belong to, and how to secure and control them.

Kong keeps pushing the performance envelope. Kong uses LMDB, a very fast lock-free in-memory database, to speed up concurrent reads and writes to shared memory. A new core router implemented in Rust is optimized not only for match speed but also for config loading, allowing Kong to pick up new configuration without losing throughput. A new events mechanism allows Kong to scale throughput linearly on machines with more cores without letting IPC become a bottleneck at scale, so Kong performs well on machines of all sizes. A new wheel-based implementation of timers allows Kong to use limited resources judiciously and push more throughput on the same hardware.

All of this is done dynamically with a lightweight, highly performant and scalable runtime.
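To give a feel for what "wheel-based" timers mean, here is a minimal single-level timing wheel. It is a generic textbook sketch, not Kong's implementation; the class name, slot count and tick granularity are assumptions. The point is that each tick inspects only one slot instead of scanning every pending timer.

```python
from typing import Callable, List, Tuple


class TimerWheel:
    """A single-level hashed timing wheel with a fixed tick resolution."""

    def __init__(self, slots: int = 8) -> None:
        self.slots = slots
        self.current_tick = 0
        # Each slot holds (expiry_tick, callback) pairs hashed by expiry.
        self.wheel: List[List[Tuple[int, Callable[[], None]]]] = [
            [] for _ in range(slots)
        ]

    def schedule(self, delay_ticks: int, callback: Callable[[], None]) -> None:
        """Register callback to fire delay_ticks ticks from now."""
        if delay_ticks < 1:
            raise ValueError("delay_ticks must be at least 1")
        expiry = self.current_tick + delay_ticks
        self.wheel[expiry % self.slots].append((expiry, callback))

    def tick(self) -> int:
        """Advance one tick, fire the timers that are due, return how many fired."""
        self.current_tick += 1
        slot = self.current_tick % self.slots
        due, pending = [], []
        for expiry, callback in self.wheel[slot]:
            target = due if expiry <= self.current_tick else pending
            target.append((expiry, callback))
        # Timers further than one revolution away stay in the slot.
        self.wheel[slot] = pending
        for _, callback in due:
            callback()
        return len(due)


fired = []
wheel = TimerWheel(slots=4)
wheel.schedule(2, lambda: fired.append("a"))
wheel.schedule(6, lambda: fired.append("b"))  # same slot as "a", one revolution later
wheel.schedule(3, lambda: fired.append("c"))
for _ in range(6):
    wheel.tick()
print(fired)
```

Scheduling is constant-time, and the per-tick cost depends on the timers in one slot rather than on the total number of timers, which is why the structure suits workloads with many concurrent timers.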

Kong Plugins

And finally, let's talk about Kong plugins, which add functionality on top of the gateway with features like traffic control, security, authN/Z, observability and much more. There is truly a Kong plugin for everything. As a matter of fact, there are about 1000 plugins [10] built by the community that can be used, in addition to the 90+ official plugins available on the plugin hub.

By introducing the concept of plugins, Kong Gateway becomes a modular system that keeps the underlying core very lightweight and fast, while all the API management functionality is delivered by the plugin-oriented architecture. As such, plugins can be installed, removed and configured individually, exposing the end user (like you) to a gradual learning curve, which in turn makes Kong Gateway very easy to use, as its adoption demonstrates. Each plugin also provides its own configuration schema, which is automatically validated by Kong Core. Plugins can extend the functionality of Kong quite significantly while still relying on the non-blocking I/O helpers delivered by the PDK (Plugin Development Kit) to keep performance high.
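The combination of a small core, per-plugin schemas and ordered execution can be pictured with a toy model. The sketch below is not Kong's PDK or its real plugin interface; the `Plugin` class, the `access` handler signature and the two example plugins are hypothetical, and the only ideas borrowed are schema validation by the core and running plugins in priority order.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Plugin:
    name: str
    priority: int                          # higher runs first in this sketch
    schema: dict                           # field name -> expected Python type
    access: Callable[[dict, dict], None]   # handler(config, request)


def validate(plugin: Plugin, config: dict) -> None:
    """Reject configs with unknown, missing or mistyped fields."""
    unknown = set(config) - set(plugin.schema)
    if unknown:
        raise ValueError(f"{plugin.name}: unknown fields {sorted(unknown)}")
    for key, expected in plugin.schema.items():
        if key not in config:
            raise ValueError(f"{plugin.name}: missing field '{key}'")
        if not isinstance(config[key], expected):
            raise TypeError(f"{plugin.name}: '{key}' must be {expected.__name__}")


def run_access_phase(enabled: list, request: dict) -> dict:
    """Validate every (plugin, config) pair, then run handlers by priority."""
    for plugin, config in enabled:
        validate(plugin, config)
    ordered = sorted(enabled, key=lambda pc: pc[0].priority, reverse=True)
    for plugin, config in ordered:
        plugin.access(config, request)
    return request


def check_key(config: dict, request: dict) -> None:
    request["authenticated"] = config["header"] in request["headers"]


def tag_request(config: dict, request: dict) -> None:
    tier = config["value"] if request.get("authenticated") else "anonymous"
    request["headers"]["x-tier"] = tier


auth = Plugin("toy-key-auth", 1000, {"header": str}, check_key)
tagger = Plugin("toy-tagger", 10, {"value": str}, tag_request)

# The list order does not matter: the runloop sorts by priority.
request = run_access_phase(
    [(tagger, {"value": "gold"}), (auth, {"header": "apikey"})],
    {"headers": {"apikey": "secret"}},
)
print(request)
```

The core here knows nothing about authentication or tagging; it only validates configuration and drives the handlers, which is the separation that keeps a plugin-oriented core small.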

The innovation that Kong has delivered with the plugin architecture allowed the project to scale its functionality enormously in a compartmentalized way while at the same time maintaining the core in pristine conditions.

Today, plugins can be built with Lua, Go, Python, Ruby and JavaScript. Also, we are about to release WasmX this year as a public tech preview. WasmX is an NGINX module built by Kong that brings the power of WebAssembly to Kong Gateway and will allow us to build plugins in any WASM-compatible language. WasmX has already been demonstrated at Kong Summit 2021, and we are investing a great deal of time and effort to make sure it's ready for prime time and battle-tested for the most intensive use cases that our users and customers are going to run through it.

Plugins are built using an SDK that Kong Gateway makes available to every developer, which we call the "PDK" (Plugin Development Kit). With the PDK, developers can expand Kong with new functionality that does not exist natively in the product to address edge cases or specific requirements. The number of programming languages supported, the large surface that plugins can cover in their functionality and the expressiveness of the configuration schema provide unmatched flexibility in what Kong Gateway can do.

This type of extensibility has been critical for our largest users and customers because they are always in control of the gateway. In a world where APIs are the backbone of everything digital, the gateway cannot be a black box; it needs to be extended quickly as new requirements arrive.

Conclusion

In this blog post, we have analyzed the core technologies that power Kong Gateway and how they work together to provide a reliable foundation for our APIs. The performance, the scalability, the unmatched extensibility options via plugins and the ease of use are all reasons why Kong today is the most adopted API gateway in the world, with an enterprise platform that supports the full lifecycle management of APIs via other products like API Analytics, API Portal, Kong Mesh (for service mesh) and more.

While this blog post has been fully focused on Kong Gateway, we haven't even scratched the surface of the technology innovations that Kong Inc. delivers across every other product in our stack, like Kong Mesh for a service mesh built on top of CNCF's Kuma and Insomnia for API design and testing. Kong Gateway is a very important product in the bigger picture of API connectivity, but it is not the only one, and each one of our other products is built with the same standard and rigor as Kong Gateway. Our goal is to create products that will power modern API and microservice-oriented architectures: from north-south to east-west, from APIM to service mesh, with one unified stack.

In the world of APIs, there have been cycles of new technologies and new proxies that both emerged and disappeared over the years, and we are proud that Kong Inc. is an adopter of and contributor to both NGINX in Kong Gateway and Envoy Proxy in Kong Mesh, with the goal of providing an API connectivity stack that leverages each proxy runtime where it fits best, while innovating and being in control of every technology layer in the stack.

Try Kong Gateway today at https://konghq.com/install.

References:

[1] – https://www.akamai.com/newsroom/press-release/state-of-the-internet-security-retail-attacks-and-api-traffic

[2] – https://www.slideshare.net/danieljacobson/maintaining-the-front-door-to-netflix-the-netflix-api/19-14000000000Netflix_API_Calls_Per_Day

[3] – https://github.com/search?l=Lua&q=kong&type=Repositories

[4] – https://w3techs.com/technologies/overview/web_server

[5] – https://papers.freebsd.org/2021/eurobsdcon/gallatin-netflix-freebsd-400gbps.files/gallatin-netflix-freebsd-400gbps-slides.pdf

[6] – https://www.wpoven.com/blog/cloudflare-market-share/

[7] – https://www.stackscale.com/blog/top-web-servers/#:~:text=the%20same%20time.-,Most%20popular%20web%20servers,are%20Nginx%2C%20Apache%20and%20OpenResty.

[8] – https://gist.github.com/spion/3049314

[9] – https://stackshare.io/kong/how-mashape-manages-over-15000-apis-and-microservices

[10] – https://github.com/search?q=kong+plugin