Kong Launches AI Gateway 3.11 to Help Cut Token Costs, Unlock Multimodal Innovation and Power Agentic AI

AI Gateway 3.11 includes new multimodal capabilities, AI prompt compression, and support for AWS Bedrock Guardrails

SAN FRANCISCO, July 15, 2025 – Kong Inc., a leading developer of cloud API technologies, has announced Kong AI Gateway 3.11, a major update with several new features critical to building modern, reliable AI agents in production. New updates include expanded multimodal Generative AI (GenAI) APIs, an AI prompt compression plugin to reduce token costs, and support for AWS Bedrock Guardrails. Together, these capabilities help organizations significantly reduce LLM-related infrastructure costs by optimizing token usage, eliminating redundant requests, and enabling scalable multimodal deployments across teams.

“We’re excited to introduce one of our most significant Kong AI Gateway releases to date. With features like prompt compression, multimodal support and guardrails, version 3.11 gives teams the tools they need to build more capable AI systems—faster and with far less operational overhead. It’s a major step forward for any organization looking to scale AI reliably while keeping infrastructure costs under control,” said Marco Palladino, CTO and Co-Founder of Kong Inc.

Key capabilities of Kong AI Gateway 3.11 include:

New GenAI APIs

- Advance AI Innovation: Kong AI Gateway 3.11 introduces more than 10 new GenAI capabilities out of the box, enabling enterprises to build faster, smarter, and more multimodal AI applications. This release supports advanced features such as batch execution of multiple LLM calls, audio transcription and translation, image generation, stateful assistants, enhanced response introspection, and more. Developers can now orchestrate real-time, multilingual, and visually rich AI experiences using leading model providers including Azure, OpenAI, AWS Bedrock, Gemini, Cohere, and Hugging Face.

Prompt Compression

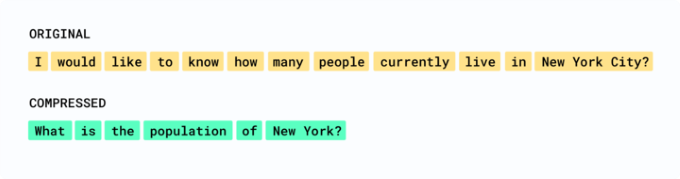

Reduce Token Costs: LLM costs are typically based on token usage, which means the longer the prompt, the more tokens are consumed per request. The prompt compression plugin removes padding and redundant words or phrases while keeping 80% of the intended semantic meaning of the original prompt. With this approach, organizations can achieve up to 5x cost reduction. This feature complements existing cost-saving tools like Semantic Caching, which prevents redundant LLM calls, and AI Rate Limiting, which manages usage limits by application or team.

Fig. 1 GenAI prompt example
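As a rough illustration of why shorter prompts cost less, the minimal sketch below estimates per-request cost from token counts before and after compression. The per-token prices, token counts, and function names are hypothetical placeholders, not Kong or provider pricing or APIs. The sketch also assumes compression shortens only the input prompt while the response length stays the same.

```python
# Hypothetical per-1,000-token prices; real prices vary by provider and model.
INPUT_PRICE_PER_1K = 0.005
OUTPUT_PRICE_PER_1K = 0.015


def request_cost(prompt_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one LLM request from its input and output token counts."""
    return (prompt_tokens / 1000) * INPUT_PRICE_PER_1K + (
        output_tokens / 1000
    ) * OUTPUT_PRICE_PER_1K


def compressed_cost(prompt_tokens: int, output_tokens: int, compression_ratio: float) -> float:
    """Estimate cost after shrinking the prompt by `compression_ratio`
    (e.g. 5.0 keeps one fifth of the prompt tokens).
    Assumption: only input tokens shrink; output tokens are unchanged."""
    return request_cost(round(prompt_tokens / compression_ratio), output_tokens)


if __name__ == "__main__":
    before = request_cost(10_000, 500)
    after = compressed_cost(10_000, 500, 5.0)
    print(f"before: ${before:.4f}  after: ${after:.4f}  savings: {before / after:.2f}x")
```

Because output tokens are not compressed in this model, the overall saving approaches the prompt compression ratio only when prompt tokens dominate a request, which is one reason savings are quoted as "up to" a given factor.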

AWS Bedrock Guardrails Support

- Strengthen Guardrails: The latest AI Gateway update includes support for AWS Bedrock Guardrails to help protect AI applications against a wide range of malicious and unintended outcomes, such as hallucinations and inappropriate content. Users can monitor applications, make incremental quality improvements, and react immediately by adjusting policies without any changes to application code.

Kong AI Gateway is Available as Part of Kong Konnect

Kong Konnect is a unified API platform designed to support every aspect of modern service connectivity. Enterprises can build, run, discover, and govern APIs, microservices, AI models, event streams, and more—all from a single, globally distributed control plane. With support for multiple runtimes and protocols, Kong empowers platform and security teams to implement best practices at scale while enabling developers to innovate faster.

To learn more about Kong AI Gateway, visit: https://konghq.com/products/kong-ai-gateway

About Kong

Kong Inc., a leading developer of cloud API technologies, is on a mission to enable companies around the world to become “API-first” and securely accelerate AI adoption. Kong helps organizations globally — from startups to Fortune 500 enterprises — unleash developer productivity, build securely, and accelerate time to market. For more information about Kong, please visit www.konghq.com or follow us on X @thekonginc.