Embracing the API-First Paradigm

Delivering World-Class Digital Experiences Through a Modern API-Native Infrastructure

The Path to an API-First Operating Model: Enabling Agility, Efficiency, and Competitive Advantage

Access is everything. In our hyper-connected world, access to data — at any time, from any device — is paramount. Whether you’re browsing the weather forecast on your phone, checking into your flight at the airport, booking your next telehealth appointment, or ordering pizza delivery, seamless digital access to data and fast processing for digital transactions are what make great digital experiences.

Organizations small and large have to compete on a new front: the customer experience their audience will encounter through their websites, devices, or kiosks. Across all industries, organizations aim to achieve faster time to market, reduce total cost of ownership (TCO), and create an overall better experience for their customers. And all have now realized that this race can only be won through a better developer experience.

Luckily, we’ve entered a new industrial revolution, one in which software is at the center, and APIs help connect the dots. Think about a huge assembly line on which applications are now built from microservices (components already developed and ready for use), with APIs connecting these microservices to create the finished product. In our current API economy, where more than 80% of all web traffic consists of API calls, organizations are now able to expose their digital services and assets through APIs in a controlled way. The creation and reuse of software components to make up a new digital service has brought agility, efficiency, and a higher-quality customer experience to organizations that have adopted an API-first approach that enables and promotes a better developer experience. And with a better developer experience comes the promise of greater customer digital experiences and greater competitive advantage.

In this paper, we’ll look at the critical steps to building a winning developer experience through an API-first operating model.

The World is Digital

Transitioning to an API-First Infrastructure

The future of software is distributed, and connectivity is the backbone of distributed systems. For some, this future may feel well underway, but in reality, we’re only in the early days of APIs and microservices. Every new API we add or monolith application we decouple into microservices adds another connection. With all of the investment in APIs and microservices, a huge amount of stress is placed on the network. We need to ensure the network is reliable, secure, and performant. More than that, we need to make sure that all APIs are optimized to have the best connectivity possible.

Developing software applications rapidly, efficiently, and effectively is now table stakes for growing businesses. Customers want to quickly develop new products and services to drive new revenue streams, improve the customer experience, engage with customers in a more meaningful way, and constantly find ways to reduce the risk and cost of doing business.

This represents both an opportunity and a risk — the word “digital” is really code for modernization.

Technologies have evolved. What used to be a monolithic and centralized stack has evolved to a decentralized ecosystem with the potential of enabling unprecedented agility and velocity — just like the cloud found rapid adoption because it provided significant agility advantages over on-premises data centers. Initially developers used the cloud for development and testing, but over time, the cloud matured towards running enterprise-scale production systems, including building cloud native applications.

Then came containers as a key technology for microservices and cloud native applications managed through Kubernetes. Along with containers came a shift towards agile approaches — DevOps and CI/CD processes enabled developer teams to promote functionality more quickly into production, with better quality and lower cost. And because the lifetime of a container is very short compared to a virtual machine, the infrastructure itself has become highly dynamic.

There have been two consequences of this decentralization:

- Services became smaller, with the number of APIs continuing to explode while the number of agile teams rapidly increases everywhere.

- Services also became harder to control, with less and less visibility into what is now an API jungle.

An additional transformation was moving from mono-client to disparate clients — we went from browsers to edge clients (e.g., iPhones, machines, kiosks), partner applications, and customer applications. With this evolution, it became critical to be able to consume back-end logic via APIs to enable all front-end clients.

And so it is clear: The key to building a great application is to be able to connect its services. In this new complex world, APIs and their connections are not only enabling decentralization but also are becoming the backbone of enterprise infrastructures.

This is why today API management is a big deal. API management provides a solution that can ensure reliable connections for both the cloud native and legacy workloads — and a solution that can help accelerate the transition for companies embracing modern API-first architectures.

What an API-First Operating Model Looks Like

The API-native operating model puts the developers at the center. The layers between developers and customers/users have vanished, and developers are now in the front row to deliver digital experiences that will make or break their organization’s position in the market.

In his book “Ask Your Developer: How to Harness the Power of Software Developers and Win in the 21st Century,” Twilio CEO and co-founder Jeff Lawson wrote that corporations had to evolve from “build vs. buy” to “build vs. die.” He said that the act of building is the act of listening to your customers. In other terms, build your own software to produce the best digital experience for your customers.

In the early 2000s, we saw a transition where nearly every corporation became a software development organization. What we’re seeing now is that every corporation is becoming an API organization. A decade ago, software was eating the world; now APIs are eating the world in this second act.

But how can corporations equip their developers with the essential tools to get it right? To get it fast into production? To get it secure? To scale and stay compliant as they grow? How can they focus their developers on building applications instead of pipes?

Accelerate your Journey to Decentralization: Increase pace of innovation, gain new revenue streams, and lower TCO.

With so many opportunities come many risks without a strong foundation. This foundation is a modern, cloud native, full lifecycle API management platform.

Full Lifecycle API Management Platform

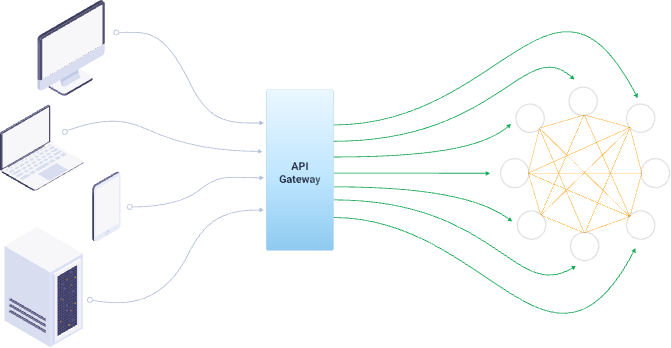

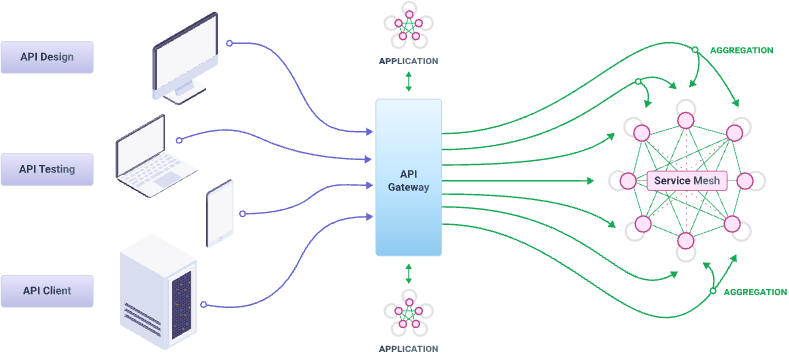

First, at its core, an API management platform will have an API gateway that provides unified ingress control and performs traffic management functions like load balancing, TLS termination, and HTTP/TCP protocol optimizations on inbound traffic before routing it to the correct microservice within a Kubernetes cluster. The API gateway is mostly used for traffic to the edge of the corporation or for internal communication within applications inside the perimeter.
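To make the routing and load-balancing role of a gateway concrete, here is a minimal sketch in Python. It is not Kong Gateway code: the `GatewaySketch` class, the path prefixes, and the upstream addresses are all hypothetical, and TLS termination and protocol optimizations are left out entirely. It only illustrates picking an upstream by longest matching path prefix and rotating through instances round-robin.

```python
from itertools import cycle


class GatewaySketch:
    """Toy model of a gateway: prefix routing plus round-robin load balancing."""

    def __init__(self, routes):
        # routes maps a path prefix to a list of upstream addresses (all hypothetical).
        self._routes = {prefix: cycle(upstreams) for prefix, upstreams in routes.items()}

    def pick_upstream(self, path):
        # Prefer the longest matching prefix so a more specific route wins.
        # Simplification: plain string prefixes, no path-segment awareness.
        matches = [prefix for prefix in self._routes if path.startswith(prefix)]
        if not matches:
            return None  # a real gateway would typically answer 404 here
        best = max(matches, key=len)
        # Rotate through the instances registered for that route.
        return next(self._routes[best])


gateway = GatewaySketch({
    "/orders": ["orders-1:8080", "orders-2:8080"],
    "/payments": ["payments-1:8080"],
})
```

Calling `gateway.pick_upstream("/orders/42")` repeatedly alternates between the two hypothetical `orders` instances, while an unknown path yields `None`.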

API Gateway for Unified Ingress and Control

For Edge and Internal Communication

Second, a comprehensive API management platform will provide a service mesh that enables and controls communication between microservices within an application. A service mesh provides a management layer but also security, high availability (through load balancing), and observability.

Service Mesh Overlay for Security, HA, and Observability

The third component of an API management platform is API design and testing. It provides a collaborative environment for developers to plan and define the architectural decisions made when building an API and then to test APIs to ensure they meet expectations when it comes to functionality, reliability, and security for the application.

Including Development API Testing and Creation

In summary, a full lifecycle API management solution enables developers and DevOps to build, run, and govern all APIs. It enables developers to design and test their APIs and run them through an API gateway or a service mesh.

It also enables DevOps to provide an environment for developers to reduce rework via services cataloging, provide security and control, and lower the cognitive load so developers focus on what they are building. It encourages self-service via a portal, goes past the complexity of complying with one organization’s regulations and governance, and ensures that development — at such a fast pace — does not become the Wild West.

Finally, it enables small teams to work with agility while staying in the guardrails of development required by DevOps for the sake of the organization’s security and governance.

Full Stack API Connectivity

Unlocking the API-First Operating Model

There are several benefits to an API-first operating model that span across the organization. The API-first operating model should deliver the following:

Time to Market

- Fast and easy deployment of applications

- Better customer experience

- Competitive advantage

Developer Experience

- Developer productivity

- Operational efficiency

- Architectural freedom

Scalability

- Performance and full service connectivity

- Extensibility, built for growth

- Low total cost of ownership (API reuse, automation)

Security

- Reliability

- Business continuity

- Batteries included (to focus developers on building apps, not pipes)

Time to market is the ultimate benefit, one that will impact the organization’s competitive advantage and most likely its market share. In Kong’s 2022 API & Microservices Connectivity Report, 75% of technology leader respondents said they fear competitive displacement if they fail to keep pace with innovation — with 4 in 5 predicting they will go under or be absorbed by a competitor within six years if digital innovation lags. These are dramatic statistics that aren’t coming from business leaders but technology leaders.

As we wrote earlier, developers are now at the center of API-first organizations. They have become the “customers.” Providing a world-class developer experience is not just critical; it’s essential. In the same report, technology leaders listed the following as the top three challenges hindering innovation:

- Reliance on legacy and monolithic technologies

- Lack of IT automation

- Complexity of using multiple technologies

And an overwhelming 86% of the same tech leaders think microservices are the future. Obviously.

With these challenges in mind, a full lifecycle API management platform accelerates and simplifies the adoption of the API-first operating model by orchestrating the build, run, and governance stages of APIs.

Full Lifecycle API Management

The Kong Experience and API Platform

We’ve seen hundreds of customers, from top Fortune 500 to smaller cloud native organizations, evolving to a model where applications — especially customer-facing applications — are built internally with an API mindset. All of them had a few critical requirements for their connectivity platforms:

- End-to-end service connectivity (API gateway and service mesh)

- Architectural freedom

- Hybrid and multi-cloud

- Kubernetes native

- Multi-protocol

- Fast, scalable, and secure

- Extensible

End-to-End Connectivity

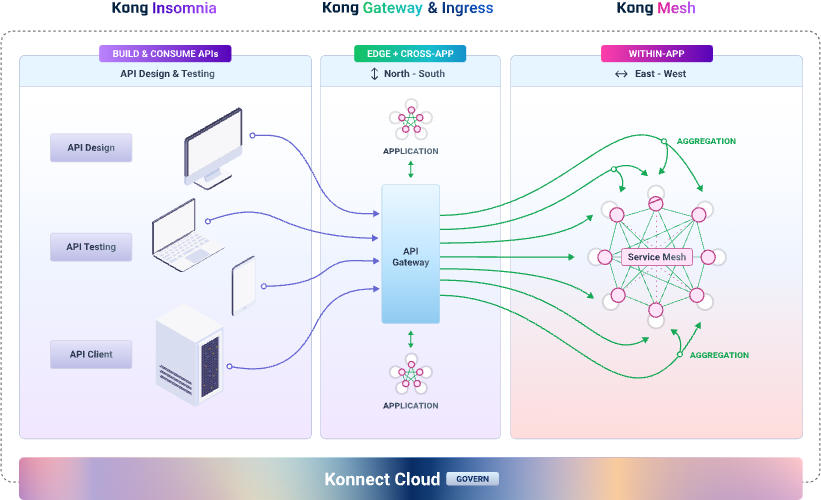

Whether you choose an API gateway, an ingress controller, a service mesh, or a combination of these three API runtime engines, Kong ensures developers can achieve end-to-end connectivity.

Kong enables connectivity across apps, at the edge, and within apps. Kubernetes-native, Kong Gateway is the most adopted API open source project to date and continues to be widely popular with developers as well as with over 500 customers, from Fortune 500 to smaller cloud native, API-first organizations.

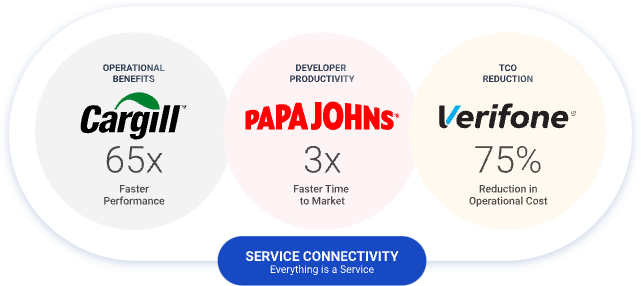

Here are three examples of Kong customers’ outcomes after implementing Kong technology.

Cargill uses Kong to create a unified API platform across legacy and cloud native applications. The challenges Cargill faced were threefold: an increased demand for digital experiences, development friction from siloed legacy IT systems, and difficulty maximizing resource efficiency.

Kong’s platform helped expose APIs for new digital products while providing a unified developer experience across legacy and cloud native systems, as well as horizontal and vertical scaling with Kong and Kubernetes.

The results at Cargill are significant:

- Up to 65x faster deployment with automated validations

- 450+ new digital services created in the past six months

- Dynamic infrastructure that auto-scales up and down with demand

At Papa Johns, Kong enables the full lifecycle of service management in a hybrid environment. Papa Johns had issues rapidly launching new digital services. As a consequence, there was direct revenue loss due to delayed feature launches to digital channels and third-party delivery platforms and aggregators. Papa Johns deployed Kong as an API management platform, including the developer portal and integrations into their CI/CD pipeline. The environment supported is a hybrid one combining on-prem, Google Cloud, and Kubernetes Ingress.

As with Cargill, the Papa Johns results are remarkable:

- Accelerated time to market for new services by 3x

- Achieved over 70% of sales from digital channels, and the strongest month of overall sales and new customer growth in the company’s 35-year history

- The Commerce Platform Team is at the center of the technology strategy at Papa Johns for tapping into innovation wherever it resides, with integrations to various store, digital, and partner channels

Finally, Verifone trusted Kong for its global, omnichannel payments solution after needing to streamline a large-scale, complex global payments system without disrupting services for its global customer and merchant base. Verifone needed an infrastructure to govern and securely expose APIs for applications running in a microservices architecture with high reliability and chose Kong as an API management platform to centrally secure, manage, and monitor all global traffic. Verifone moved from a monolithic to a microservices architecture with the flexibility to manage APIs across on-prem, cloud, and hybrid deployments.

And here are the results:

- Saved development teams hundreds of hours per month via automated generation and maintenance of API contracts with OpenAPI and centralized monitoring of API traffic

Kong API Gateway

Kong Gateway’s large adoption among all types of customers globally derives from strong operational benefits, developer productivity and experience, and reduction of overall total cost of ownership.

Operational excellence through performance, scalability, and extensibility

- Best-in-class performance: 50K+ TPS/node with sub-millisecond latency

- Ultra-lightweight and infinitely scalable: 20MB runtime with horizontal and vertical scaling

- Fully automated: 100% declarative configuration that enables rapid adoption in modern CI/CD deployments

- Rapid deployment to any architecture and infrastructure: single binary for on-prem, multi-cloud, Kubernetes, and serverless
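The idea behind declarative configuration can be sketched in a few lines of Python: the desired state is plain data that can live in version control, and a tool computes the changes needed to reach it. This is an illustration only. The `plan_changes` function and the service definitions are hypothetical and do not reproduce Kong’s configuration format or tooling.

```python
def plan_changes(current, desired):
    """Return the actions needed to move `current` state to `desired` state."""
    actions = []
    # Anything in the desired state that is missing or different must be applied.
    for name, spec in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    # Anything running that is no longer declared must be removed.
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical service definitions, as they might be checked into Git.
current = {
    "orders": {"url": "http://orders:8080"},
    "legacy": {"url": "http://legacy:9000"},
}
desired = {
    "orders": {"url": "http://orders-v2:8080"},
    "payments": {"url": "http://payments:8080"},
}
plan = plan_changes(current, desired)
```

Because the plan is derived purely from data, a CI/CD pipeline can review it before anything is applied, which is what makes the declarative style a good fit for automation.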

API developer productivity and experience

- Simple plugin policies (100+ plugins): Plugin policies are easy to add in for developers and can be created in multiple languages to fit any use case or organizational needs. This provides performance benefits and makes the platform more extensible.

- End-to-end security with comprehensive authentication: Kong’s ability to provide security out-of-the-box reduces risk of configuration issues undermining security. Furthermore, Kong comes packaged with the world’s most comprehensive OpenID Connect Plugin to enable rock-solid authentication for APIs.

- Automated testing: Automating tests and fitting Kong into existing CI/CD workflows minimizes the need for additional testing tools and allows tests to be baked into the CI/CD process.
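As a rough illustration of how plugin policies compose, the sketch below models each policy as a function that either lets a request through or rejects it, and runs the policies in order before the request is proxied. It is written in Python for readability and is not Kong’s plugin API. The names `require_api_key`, `limit_requests`, and `handle`, and the `apikey` header, are assumptions made for this example.

```python
def require_api_key(valid_keys):
    """Build a policy that rejects requests without a known API key."""
    def plugin(request):
        if request.get("headers", {}).get("apikey") not in valid_keys:
            return (401, "invalid or missing API key")
        return None  # None means: let the request continue
    return plugin


def limit_requests(max_requests):
    """Build a policy that allows each consumer at most `max_requests` calls."""
    counts = {}

    def plugin(request):
        consumer = request.get("headers", {}).get("apikey", "anonymous")
        counts[consumer] = counts.get(consumer, 0) + 1
        if counts[consumer] > max_requests:
            return (429, "rate limit exceeded")
        return None
    return plugin


def handle(request, plugins):
    """Run every policy in order; the first rejection short-circuits the chain."""
    for plugin in plugins:
        rejection = plugin(request)
        if rejection is not None:
            return rejection
    return (200, "proxied to upstream")


chain = [require_api_key({"secret"}), limit_requests(2)]
request = {"headers": {"apikey": "secret"}}
```

With this chain, the first two calls to `handle(request, chain)` pass, the third is rejected by the rate limit, and a request without a key never reaches the rate limiter.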

Kong’s business benefits, TCO, and ROI

Kong helps organizations drive revenue through digital experience and reduce costs through more effective hardware, software, and engineering resources.

- Kong allows app development teams to ship faster with higher quality, better discover and re-use existing APIs and microservices, and meet policy requirements easily.

- Kong’s high performance enables SREs and DevOps teams to more easily meet SLAs and SLOs, minimize risk, and move towards zero-trust through security and identity at every level. These teams can better observe their APIs to proactively address issues before they hit customers.

- Finally, Kong allows enterprise architects to simultaneously reduce cost, increase governance, and minimize the risk of lock-in.

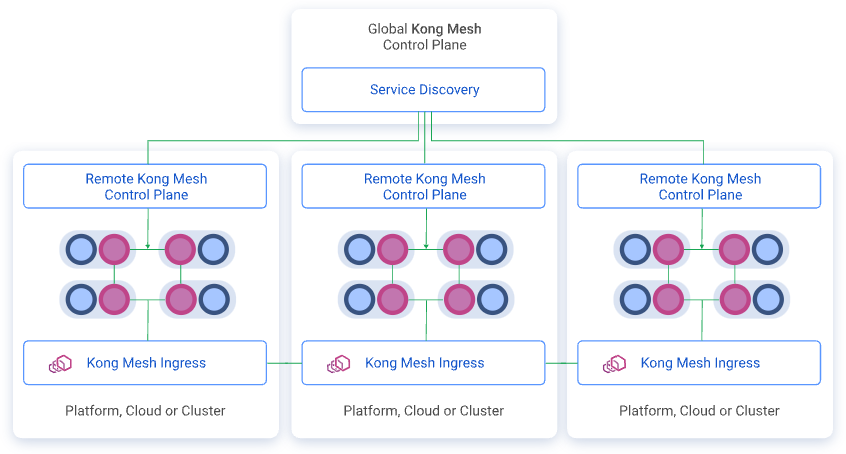

Kong Mesh

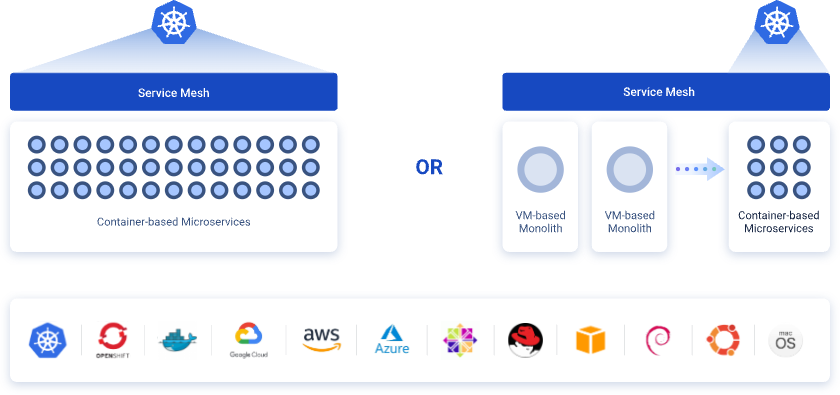

Kong Mesh is built on top of Kuma, an open source service mesh project started by Kong in 2019 and later donated to CNCF. It’s an enterprise-grade service mesh for multi-cloud and multi-cluster on both Kubernetes and VMs.

But first, why a service mesh?

Because of this:

Think of the complexity of these graphs. Each dot represents a microservice. Imagine having to deal with securing the communication between each red dot. Imagine having to spot when a new dot appears on the map. Imagine having to figure out if it is friend or foe. This is exactly what organizations have to deal with today.

Service mesh is a newer, emerging technology, and some customers have asked us: Why should they need a service mesh?

Service mesh deals with connectivity within apps, between microservices. The explosion of APIs is nothing compared to the one happening right now between the components of apps (microservices) that need to be connected. Standardized development, security, and observability deliver instant ROI for any organization building apps based on reusable microservices.

Kong Mesh

Built on top of the Envoy proxy, Kong Mesh is a modern control plane focused on simplicity, security, and scalability.

Start, secure, and scale with ease

- Turnkey universal service mesh with built-in multi-zone connectivity

- One-click deployment and one-click, attribute-based policies

- Multi-mesh support for scalability across the organization

Run Kong Mesh anywhere

- Manage service meshes natively in Kubernetes using CRDs.

- Start with a service mesh in VM environments and migrate to Kubernetes at your own pace.

- Deploy the service mesh across any environment, including multi-cluster, multi-cloud, and multi-platform.

Powered by Envoy Proxy

Envoy-based Kong Mesh, just like Envoy proxies, is fast by design.

- Its lightweight nature removes bloat from each service.

- It reduces performance overhead as the client-side load balancing eliminates hops to a centralized load balancer.

- It utilizes system resources efficiently and routes traffic to services with spare capacity.

- It maximizes up-time by monitoring the health of services to intelligently retry or re-route traffic, and seamlessly scale up or down.
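The points above about client-side load balancing, health monitoring, and retries can be sketched as follows. This is a simplified Python illustration, not Envoy or Kong Mesh code. The `ClientSideBalancer` class, the endpoint names, and the `flaky_send` stub are hypothetical, and real proxies use active health checks and richer balancing policies.

```python
class ClientSideBalancer:
    """Round-robin over known endpoints, skipping those marked unhealthy."""

    def __init__(self, endpoints):
        self._endpoints = list(endpoints)
        self._healthy = set(self._endpoints)
        self._next = 0

    def mark_unhealthy(self, endpoint):
        self._healthy.discard(endpoint)

    def pick(self):
        # Walk the ring at most once, looking for a healthy endpoint.
        for _ in range(len(self._endpoints)):
            candidate = self._endpoints[self._next % len(self._endpoints)]
            self._next += 1
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy endpoints")

    def call(self, send, max_attempts=3):
        # Retry on connection failures, re-routing to the next healthy endpoint.
        last_error = None
        for _ in range(max_attempts):
            endpoint = self.pick()
            try:
                return send(endpoint)
            except ConnectionError as exc:
                self.mark_unhealthy(endpoint)
                last_error = exc
        raise last_error


def flaky_send(endpoint):
    """Stand-in for a network call: the first instance is down."""
    if endpoint == "mesh-a:8080":
        raise ConnectionError("connection refused")
    return f"ok from {endpoint}"


balancer = ClientSideBalancer(["mesh-a:8080", "mesh-b:8080", "mesh-c:8080"])
```

Because the caller chooses the endpoint itself, no request has to pass through a central load balancer, and a failed instance is taken out of rotation as soon as a call to it fails.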

The three main reasons you should consider a service mesh today are availability, security, and observability.

- Ensure service discoverability, connectivity, and traffic reliability/availability: Intelligently route traffic across any platform and any cloud to meet SLAs. With a unified control plane, Kong Mesh helps:

  - Eliminate management complexity by consistently applying policies to services and reducing the risk of misconfigurations that cause transactions to drop

  - Increase developer productivity by instantly adding out-of-the-box policies that eliminate the need to build network functionality into each service

- Achieve zero-trust security: Restrict access and encrypt all traffic by default and only complete transactions when identity is verified.

  - Achieve zero-trust by design by automatically providing mTLS encryption and identity across every single API, microservice, and database through a one-click setup.

  - Inject compliance through fine-grained traffic policies to ensure appropriate connectivity and data privacy for every single API, microservice, and database.

  - Streamline security responses and provide the central IT team with control to rapidly deploy critical security patches across all networks.

- Gain global traffic observability instantly: Gain a detailed understanding of service behavior to increase application reliability and the efficiency of developer teams by accelerating the delivery of performance improvements.

  - Enable distributed tracing into each service to monitor and troubleshoot microservices behavior without introducing any dependencies to the existing code base.

  - Kong Mesh includes 60+ charts, including service map, tracing, and logs.

The benefits of Kong Mesh don’t stop at availability, security, and observability. Kong Mesh is also extremely impactful for organizations looking to streamline DevOps to ship code faster and provide immediate return on investment (ROI). Kong Mesh enables a faster deployment with zero downtime. It improves release management with built-in versioning, feature flagging, as well as canary and blue/green deployments to streamline CI/CD and DevOps workflows. It helps scale developer efficiency by providing fine-grained access to mesh services, including databases, to increase service reuse and eliminate silos across applications and developers.
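A canary deployment, one of the release strategies mentioned above, sends a small and adjustable share of traffic to a new version. The Python sketch below shows one common way to do this deterministically by hashing a request key into a bucket. It is an illustration under stated assumptions, not how Kong Mesh implements traffic splitting. The `choose_version` function and the key format are hypothetical.

```python
import hashlib


def choose_version(request_key, canary_percent):
    """Route roughly `canary_percent` of keys to the canary, the rest to stable."""
    # Hash the key so the same caller always lands in the same bucket.
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"
```

Hashing keeps each caller on the same version across requests, and raising `canary_percent` only ever moves callers from stable to canary, never back, which makes a gradual rollout predictable.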

So API gateway or service mesh, you may ask?

How about end-to-end connectivity? To unlock the API-first operating model and set up your organization for success in competing with the best digital experiences, a full service connectivity approach and platform are needed. Here’s a way to better think about these two connectivity products and how they integrate with each other to deliver a comprehensive solution.

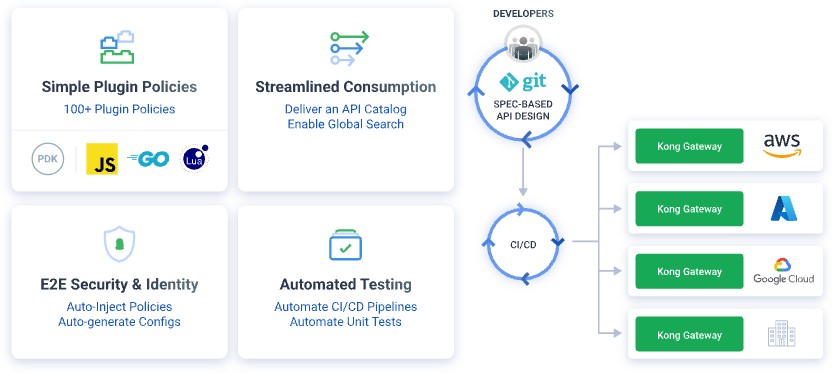

Kong Insomnia: Collaborative Design and Testing of APIs

Kong Insomnia is the leading open source API debugging, design, and testing tool for developers. Widely adopted by developers, Insomnia accelerates API development with standards and collaboration by leveraging Git pipelines and OpenAPI. With more than 10 million downloads, it enables easy debugging of REST, SOAP, GraphQL, and gRPC APIs straight from the Insomnia API client.

With more than 20,000 GitHub stars, Kong Insomnia automates testing and integration in CI/CD processes. It helps developers build out an API testing pipeline with the Insomnia Unit Tests and the Insomnia CLI tooling.

Kong Insomnia accelerates time to market and reduces costs with automation. With Insomnia, developers consuming new APIs can rapidly explore and consume existing services of different protocols (spanning REST, GraphQL, and gRPC). Insomnia’s API consumption capabilities are too vast to list completely here, especially given that Insomnia is extended through more than 300 community-built plugins. Highlights include the generation of client code in 18 different languages/frameworks, handy plugins such as random response value generators, and a popular mocking service. Using Insomnia, users can generate Kong Gateway and Kong Ingress Controller runtime configurations directly from their API specs.

With Insomnia, developers creating new APIs can:

- Rapidly design, publish, and consume services

- Leverage consistent API specs and documentation

- Benefit from an increased level of automation through the CI/CD pipeline

- Automate tests to continuously ensure high-quality APIs

With Insomnia, DevOps engineers can:

- Boost API adoption

- Ensure consistency with GitOps

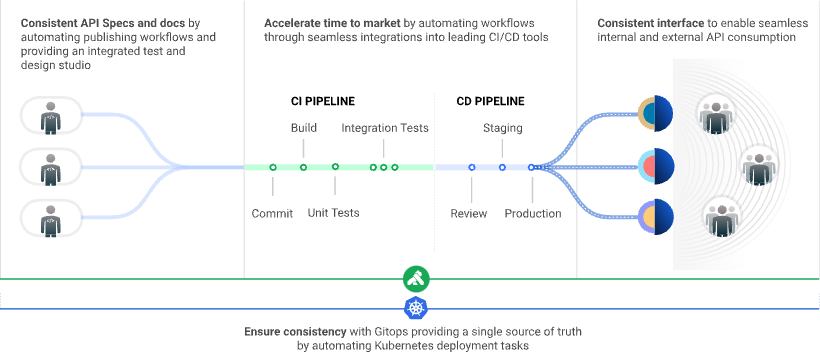

Rapidly Design, Publish, and Consume Services — Accelerate time to market and reduce costs with automation

Kong Insomnia enables spec-based development to help developers provide consistent APIs and docs. This is a significant step forward to make APIs more consumable once they’re published in the developer portal.

With a design-first approach comes the need for a design environment, and once developers have built and tested their spec, they can then push it straight into Git to seamlessly flow into the CI/CD processes. It takes discipline to ensure the spec is always written for an API, especially as we see more and more different teams writing more and more services.

This is when DevOps risks losing organizational governance. Kong Insomnia provides the ability to generate documentation automatically from live traffic. The real challenge in a world of thousands of versions of services — all changing at different times and by different developers — is to make sure all these specs aren’t just written but are kept up-to-date. Kong Insomnia has built-in intelligence to flag any API traffic that doesn’t match its documentation. This critical capability is well-praised by developers and consumers of APIs alike, and it ensures that API docs are always accurate.
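The general idea of flagging traffic that does not match its documentation can be sketched in Python as a comparison between observed calls and the operations a spec declares. This is only an illustration of the concept, not a description of how any Kong product implements it. The `find_undocumented` function, the sample spec, and the observed calls are hypothetical, the spec is modeled loosely on the `paths` object of an OpenAPI document, and templated paths such as `/orders/{id}` are deliberately not handled.

```python
def find_undocumented(spec_paths, observed):
    """Return observed (method, path) pairs that the spec does not declare."""
    documented = {
        (method.upper(), path)
        for path, methods in spec_paths.items()
        for method in methods
    }
    seen = {(method.upper(), path) for method, path in observed}
    return sorted(seen - documented)


# Hypothetical spec fragment and traffic sample.
spec_paths = {
    "/orders": {"get": {}, "post": {}},
    "/health": {"get": {}},
}
observed = [
    ("GET", "/orders"),
    ("DELETE", "/orders"),
    ("GET", "/metrics"),
    ("get", "/health"),
]
undocumented = find_undocumented(spec_paths, observed)
```

Here the `DELETE /orders` and `GET /metrics` calls are reported because neither appears in the spec, which is the kind of drift that keeps documentation from staying accurate.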

Insomnia also ensures specs are automatically integrated into Git and any CI/CD process and that automation can continue with the management and control of those APIs. Kong Insomnia also integrates with GitOps to ensure consistency for any Kubernetes deployment tasks.

Those combined capabilities of Kong Insomnia and its integration into the rest of the Kong product stack help engineering organizations decentralize and achieve architectural freedom.

Kong Konnect: Bringing It All Together in One Single SaaS API Platform

Kong Konnect is the API management platform that brings all Kong technologies together. It’s a SaaS platform that provides end-to-end connectivity, sub-millisecond performance for thousands of transactions per minute, universal deployment, visibility across hybrid and multi-cloud environments, and boundless extensibility with powerful plugins and integrations. More importantly, it helps you transition from the monolithic world of mega applications to the distributed world of microservices.

Kong Konnect was built with end users in mind to streamline the unique workflows of every developer, DevOps engineer, and architect.

Kong Konnect provides DevOps engineers with a single management pane across all of their Kong Gateway clusters, enabling a single point of management across multiple environments, geographies and clouds with a sophisticated yet easy-to-use team-based governance and RBAC model.

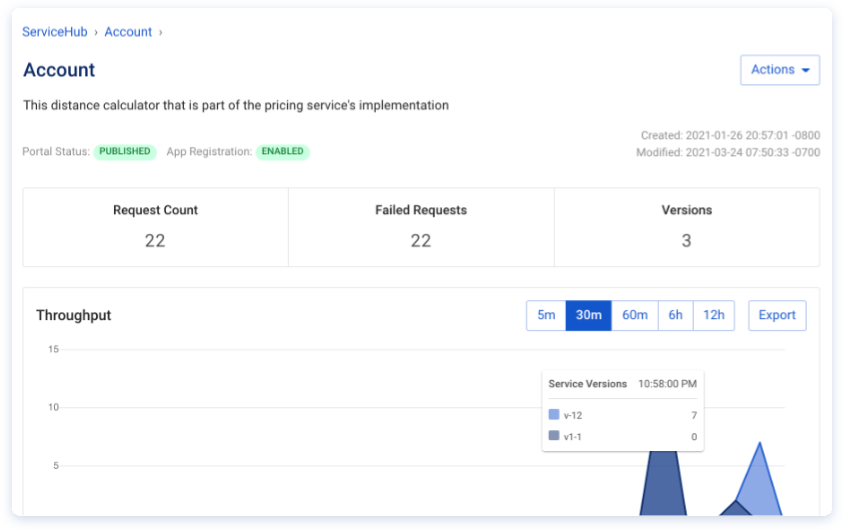

Whether discovered through existing Kong Gateways or cataloged independently, enterprise architects and developers can seamlessly publish their services for discovery on Kong Konnect’s ServiceHub, a single service registry of record to socialize their APIs across the rest of the organization.

Enterprise architects can leverage Kong Konnect for reliable real-time analytics monitoring of their APIs.

Last but not least, Kong Konnect enables API owners to publish their APIs to a customized API portal with the ability for developers to register and manage their applications.

Conclusion

End-to-end connectivity provides the foundation for amazing digital experiences, which is why an API-first operating model is essential for organizations to gain and maintain competitive advantage. The foundation for an API-first operating model is an API management platform.

The right API management platform delivers the end-to-end connectivity that is so critical to provide seamless communication. It provides connectivity that is reliable, secure, and observable across the full stack regardless of the underlying infrastructure.

The right API management platform puts developer experience first. By decoupling the connectivity concern from the application, developers can focus on the application itself instead of spending their time building pipes.

The right API management platform delivers developers architectural freedom — so they can choose the best technology to build a service. They can choose to build or run their service in any cloud, on any platform, using any protocol, or any technology, and the end-to-end API management platform will ensure seamless communication between all the heterogeneous components.

This is the power of an API management platform and the key to a great developer experience, world-class digital experiences, and gaining competitive advantage.

Partner with Kong for API Excellence — Kong supports every step of your journey
