Harness the Combined Power of API Management and GraphQL

Leverage GraphQL for next-generation API platforms with technologies from Kong and Apollo.

Introduction

Kong and Apollo technologies can be used to build, deploy, and operate modern APIs. Learn more in this integrated blueprint with reference architecture, which includes an overview of how a GraphQL request is processed at runtime through the different layers of the architecture.

Reference Architecture for a Modern API Platform

Kong provides an API gateway (Kong Gateway), service mesh (Kong Mesh), and multi-cloud API management (Kong Konnect) for the microservices in your organization. You can preserve existing investments and workflows for your domain APIs and enhance them by weaving a GraphQL layer into your existing architecture. Apollo provides a GraphQL developer platform (GraphOS), which includes developer tooling, a schema registry, a supergraph CI/CD pipeline, and a high-performance supergraph runtime (Apollo Router).

Many aspects of this reference architecture are cross-cutting in nature, including security, traffic shaping, and observability.

Security is often handled with a defense-in-depth or zero-trust approach, where each layer of the stack provides security controls for authentication, authorization, and blocking malicious requests.

Client-side traffic shaping with rate limits, timeouts, and compression can be implemented in the API gateway or supergraph layer, and subgraph traffic shaping (including deduplication) can be implemented at the supergraph layer.

Observability via OpenTelemetry is supported across the stack to provide complete end-to-end visibility into each request via distributed tracing along with metrics and logs.

Build, Deploy, and Operate APIs with a Modern API Platform

Every layer of the modern API platform presents unique challenges for building, deploying, and managing APIs. Kong and Apollo technologies tackle these challenges, promoting developer efficiency and autonomy while ensuring that organizational and design best practices scale seamlessly across multiple teams.

Developer tooling is designed to integrate into your existing software development lifecycle (SDLC) and CI/CD workflows. Developer portals make API service discovery simple, and schema registries make it easier to publish and consume APIs at different layers of the stack. API security and performance policies can be defined and managed at each layer, along with observability capabilities to understand API usage, the impact of breaking changes, and operational concerns like performance and monitoring. Each layer of the stack has tools to make enabling these capabilities easy for platform engineers.

API Gateway

Kong Gateway is the world’s most adopted API gateway. It offers strong performance, scalability, and extensibility. Compatible with various systems, including bare metal, Kubernetes, and other containerized platforms, the gateway accommodates a variety of protocols and can integrate with both traditional and newer technologies.

Kong Gateway is designed to optimize today’s application modernization needs through automation across the full lifecycle of APIs and microservices. With Kong Gateway, developers can instantly add traffic control, security, authentication, and transformation functionality using a wide array of out-of-the-box plugins, which helps ensure best practices are followed without stifling flexibility and productivity. Kong Gateway can also run natively on Kubernetes with Kong Ingress Controller.

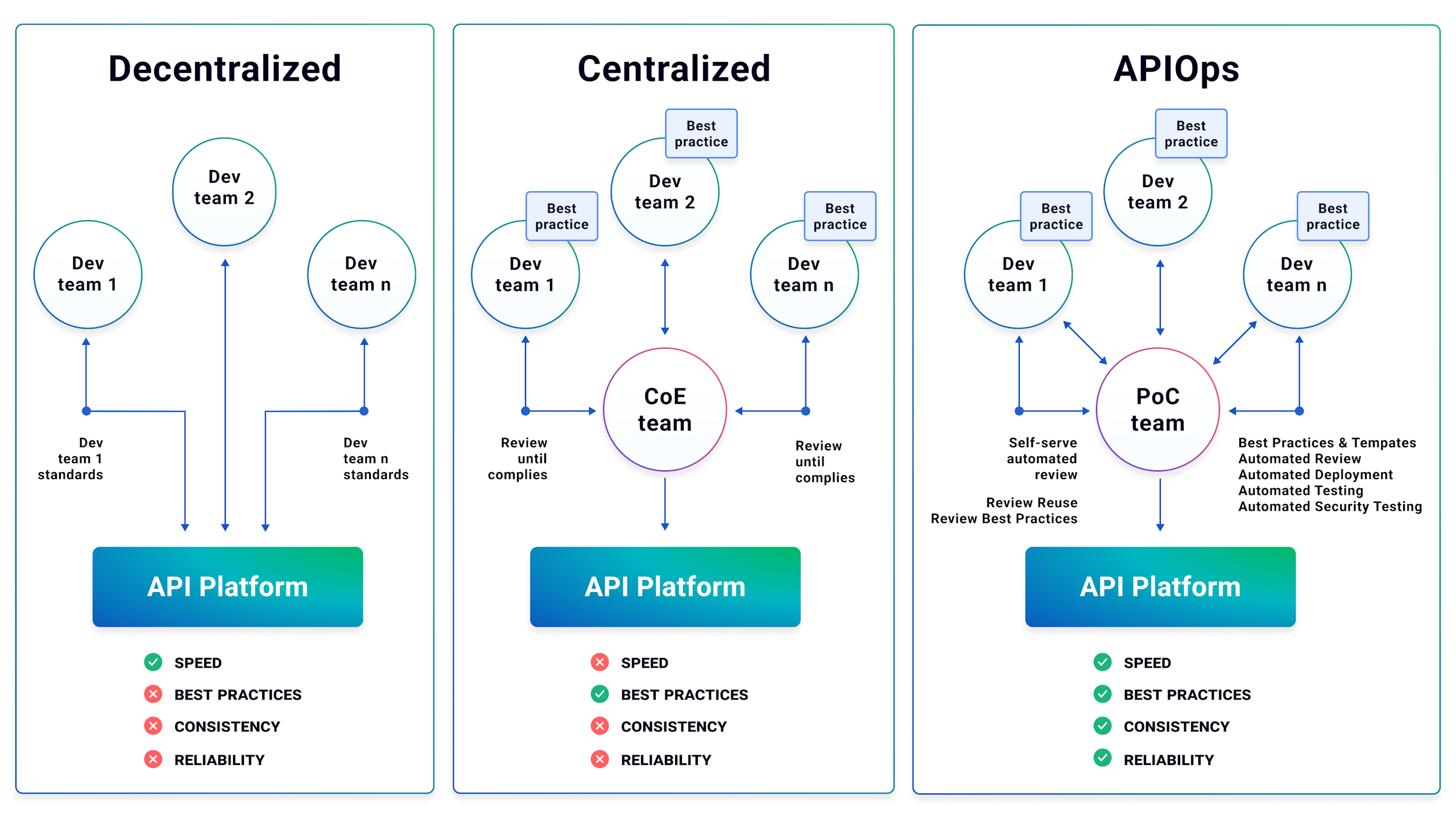

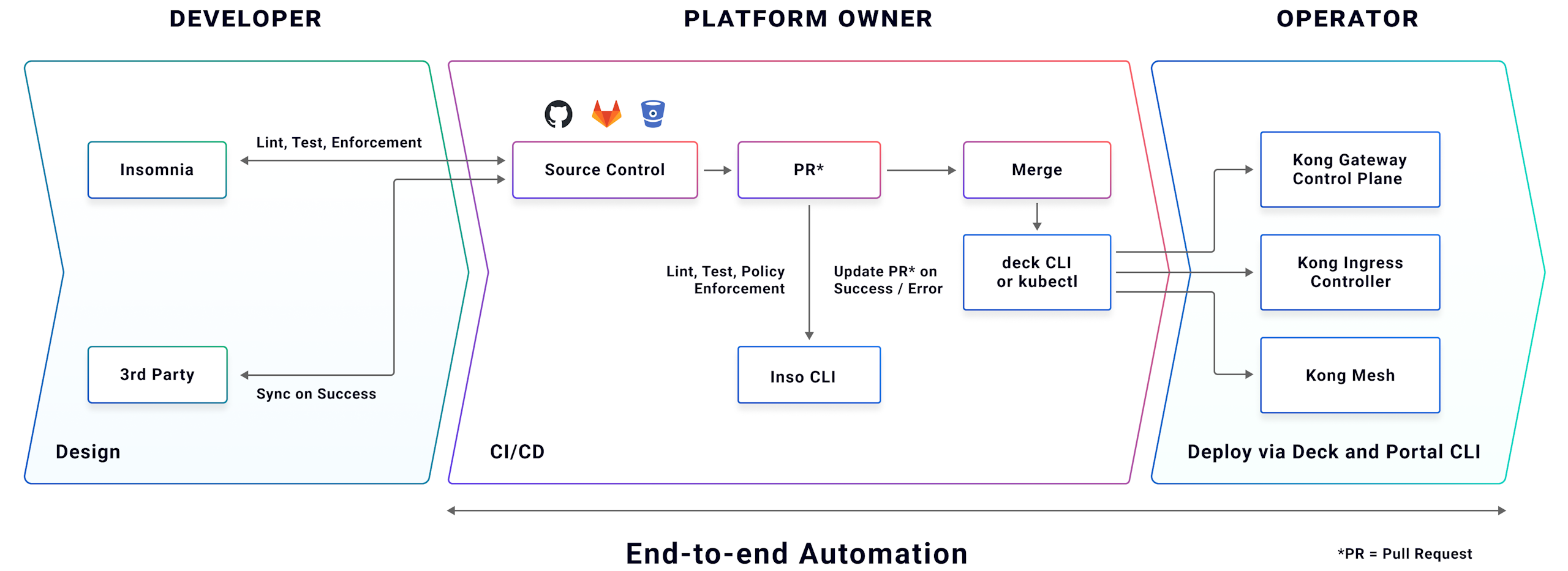

APIOps is a process that takes the proven principles of DevOps and GitOps and applies them to API platform management. Kong provides full support for APIOps automation to ensure reliable and repeatable API delivery. Kong Gateway configuration can be managed using a REST-based API or a modern declarative configuration system including drift detection. Full and partial declarative configurations can be stored in version control systems and assembled, validated, and applied through CI/CD pipelines.

This tooling enables developers to autonomously design and develop APIs, including generating gateway configurations from OpenAPI Specifications (OAS). Federated governance defined by the API platform team is applied to both the individual service and the composed API, ensuring that delivered APIs are consistent, reliable, and secure.
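The drift detection mentioned above can be pictured as a comparison between the declared configuration and what is actually running. The following is a minimal sketch of that idea only, not Kong's implementation: the entity names, the dictionary layout, and the `detect_drift` helper are all hypothetical.

```python
from __future__ import annotations

from typing import Any


def detect_drift(
    desired: dict[str, dict[str, Any]],
    actual: dict[str, dict[str, Any]],
) -> dict[str, list[str]]:
    """Compare declared entities with live ones, keyed by entity name."""
    return {
        # Declared in version control but absent from the running gateway.
        "missing": sorted(desired.keys() - actual.keys()),
        # Present on the gateway but not declared anywhere.
        "unexpected": sorted(actual.keys() - desired.keys()),
        # Present in both places with different settings.
        "changed": sorted(
            name
            for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
    }


# Hypothetical declared state, as it might be stored in version control.
desired_state = {
    "graphql-route": {"paths": ["/graphql"], "hosts": ["example.com"]},
    "orders-route": {"paths": ["/orders"], "hosts": ["example.com"]},
}
# Hypothetical live state, as it might be read back from the gateway.
live_state = {
    "graphql-route": {"paths": ["/graphql"], "hosts": ["example.com"]},
    "orders-route": {"paths": ["/v1/orders"], "hosts": ["example.com"]},
    "debug-route": {"paths": ["/debug"], "hosts": ["example.com"]},
}

drift = detect_drift(desired_state, live_state)
```

In a CI/CD pipeline, a non-empty result would typically fail the job or trigger a re-apply of the declared configuration.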

Supergraph Developer Tooling and CI/CD Pipelines with Policy Controls

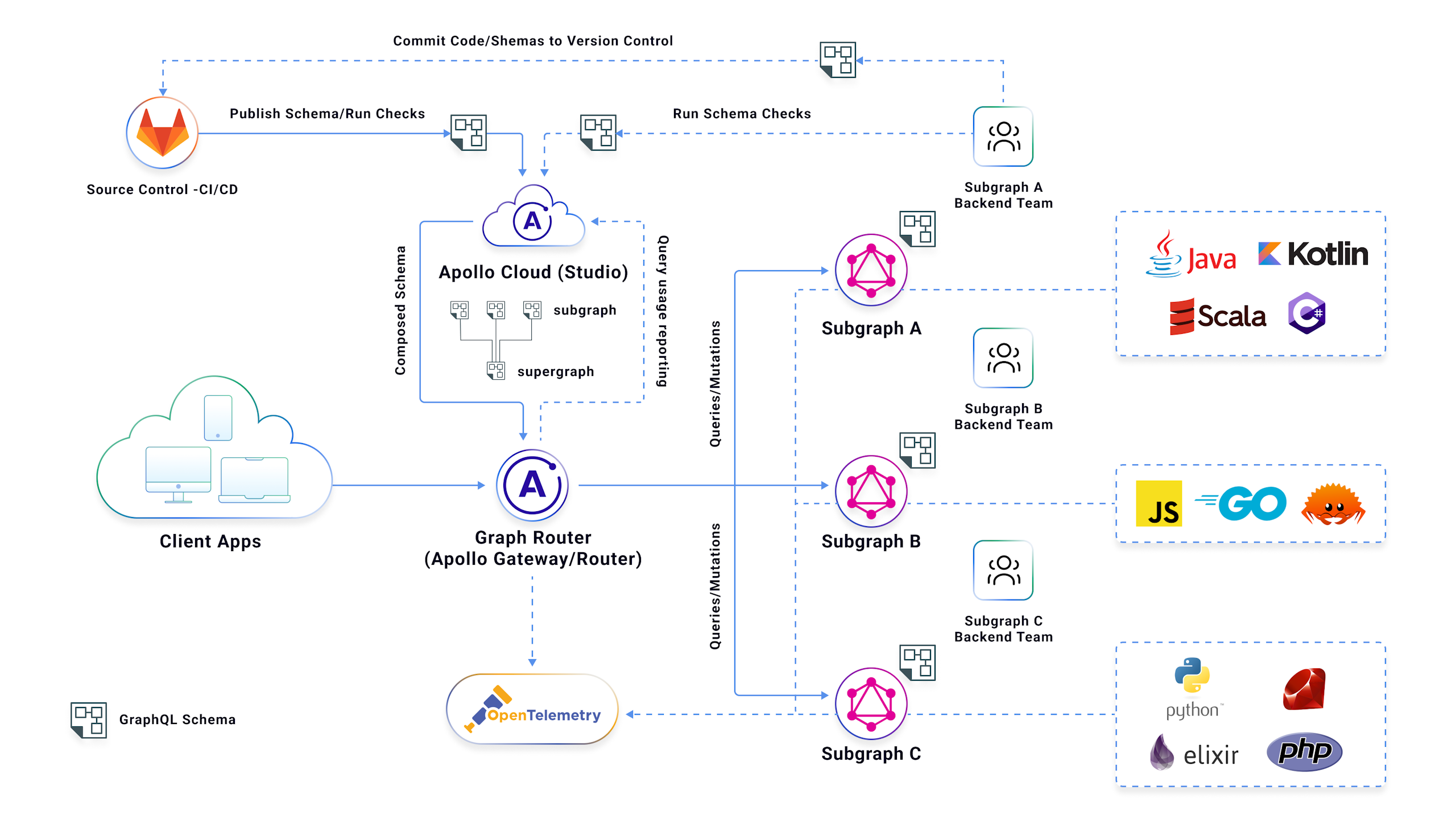

Apollo GraphOS provides everything needed to build and deploy any number of GraphQL changes per day with streamlined multi-team collaboration powered by Apollo’s federated GraphQL architecture. GraphOS provides a schema registry, managed build pipeline with customizable schema linting, graph-native observability with developer decision support, and breaking change prevention to ensure changes can be deployed with speed and safety. GraphOS includes developer tooling for app developers (schema explorer, query builder, docs) as well as subgraph developers (30+ compatible subgraph frameworks, schema insights, Rover CLI for dev laptop and CI/CD pipelines).

A platform team is typically responsible for configuring the supergraph build pipelines in Apollo GraphOS and configuring and operating the supergraph runtime fleet for each environment (dev, test, prod) using Apollo Router. This enables subgraph developers to autonomously own and contribute their slice of the graph without the platform team becoming a bottleneck. A graph variant is created for each environment, and security is configured to restrict who can publish to each supergraph variant.

With the supergraph infrastructure in place, each subgraph team can independently build and deploy their subgraphs, and then make them available in the supergraph by publishing to the Apollo GraphOS Schema Registry. This is typically done once an updated subgraph is ready to accept traffic, either as the last step in a CD workflow or in a post-deploy analysis job if a progressive delivery controller is used.

Once a new subgraph schema is published, GraphOS automatically composes it into a new supergraph schema and runs linting checks defined by the API platform team to ensure best practices. If all checks pass, the router fleet pulls the new supergraph schema from a multi-cloud Apollo Uplink endpoint.

Subgraph teams can also shift left the detection of breaking changes with GraphOS schema checks, using developer tooling (Rover CLI) both on the developer laptop and in the CI workflows for each subgraph. GraphOS provides GraphQL field usage insights that help API developers understand which apps and app versions are using a field, how much traffic each one generates, the potential impact of a breaking change, and which app teams they need to coordinate with.
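The idea behind a usage-aware schema check can be sketched as follows: a removed field only counts as breaking if some client has recently requested it. This is an illustration of the concept rather than how GraphOS implements it; the field names, client identifiers, traffic figures, and the `find_breaking_changes` helper are hypothetical.

```python
from __future__ import annotations


def find_breaking_changes(
    old_fields: set[str],
    new_fields: set[str],
    usage: dict[str, dict[str, int]],
) -> dict[str, dict[str, int]]:
    """Return removed fields that still receive traffic, with per-client counts."""
    removed = old_fields - new_fields
    # Fields with no recorded usage can be removed without coordination.
    return {name: usage[name] for name in sorted(removed) if usage.get(name)}


# Hypothetical schema coordinates before and after a proposed change.
old_schema = {"Product.name", "Product.price", "Product.sku", "Product.legacyId"}
new_schema = {"Product.name", "Product.price"}

# Hypothetical field usage: field -> {client@version: request count}.
field_usage = {
    "Product.name": {"web@5.0": 90_000, "ios-app@2.1": 40_000},
    "Product.sku": {"ios-app@2.1": 1_200},
}

breaking = find_breaking_changes(old_schema, new_schema, field_usage)
```

Here `Product.legacyId` is removed without consequence because nothing requests it, while `Product.sku` is flagged together with the client that would need to migrate first.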

Apollo Router processes all inbound GraphQL requests, plans and executes requests across subgraphs, and enforces graph-native policies for security, performance, and operational concerns. Once the router fleet has loaded the new supergraph schema, it is able to serve traffic, and Apollo GraphOS provides developer documentation with the updated supergraph schema, so application teams can immediately use new fields and types in the GraphQL queries that apps need to power new customer experiences.

Microservices Management with Service Mesh

Microservices bring many benefits, but also disadvantages. The network is unreliable, service discovery is hard, and there are few controls around who is accessing data from each API.

Service meshes abstract these concerns away from developers, reducing the complexity of service development by removing the need to manually build these capabilities directly into service code. This allows development teams to concentrate on building value instead of redundant connectivity code across languages and projects.

Kong Mesh is an enterprise-grade service mesh built on top of Envoy that solves these issues with a focus on simplicity, security, and scalability. Kong Mesh automates service discovery, security, advanced traffic routing, observability, and failover logic using centralized policies. These policies are applied through a REST-based management API or through a kubectl-like declarative resource model.

Kong Mesh additionally supports zones, which allow organizations to model physical network connectivity across their environment. Platform teams can design zones around concepts such as Kubernetes clusters, cloud providers, regions, data centers, or network latency boundaries. Services within zones can communicate directly, and cross-zone communication is handled out of the box. Service meshes can be deployed within or across zones for maximum flexibility.

Kong Mesh can be installed and managed standalone or through Kong Konnect, and it operates on Kubernetes or virtual machine deployments. Meshes can be created per line of business, team, project, or environment thanks to Kong Mesh’s ability to create multiple isolated service meshes within the same cluster.

Ingress traffic to a mesh is handled by an API gateway. Kong Mesh supports many different ingress controllers, but it works best when paired with Kong Gateway via the Kong Ingress Controller. All external traffic passes through the gateway and is subject to any policies defined at the gateway level, similar to those defined in the edge gateway.

The API Request Lifecycle

To help visualize how this architecture works, let’s look at how a GraphQL query request traverses the architecture layers in some detail.

Kong API Gateway

Kong Gateway sits at the edge of the network to proxy all incoming traffic. In a GraphQL request, this is typically an HTTP POST with the GraphQL query in the body of the request along with any required headers.

This example shows an Authorization header containing a JSON Web Token (JWT) and a query to fetch information about the current user and a list of products:
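A request of this shape might look like the following sketch. The operation name, the field names (`me`, `products` and their subfields), and the token placeholder are assumptions chosen for illustration; the URL uses the `example.com` host and `/graphql` path from the routing example. The request is only constructed here, not sent.

```python
import json
import urllib.request

# Assumed endpoint: the host and path used in the routing example.
GRAPHQL_URL = "https://example.com/graphql"
# Placeholder only; a real client would obtain a signed JWT from its identity provider.
JWT_PLACEHOLDER = "<jwt>"

# Hypothetical query for the current user and a list of products.
QUERY = """
query CurrentUserAndProducts {
  me { id name }
  products { id name price }
}
"""

request = urllib.request.Request(
    GRAPHQL_URL,
    # GraphQL over HTTP carries the query as JSON in the POST body.
    data=json.dumps({"query": QUERY}).encode("utf-8"),
    headers={
        "Content-Type": "application/json",
        "Authorization": f"Bearer {JWT_PLACEHOLDER}",
    },
    method="POST",
)

# urllib.request.urlopen(request) would send it; omitted so the sketch has no network dependency.
```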

Service Routing

Kong Gateway evaluates the incoming HTTP request against configured Routes and attempts to find a match. The gateway can match requests on a number of attributes, including HTTP methods, host, headers, request path, and Server Name Indication (SNI). Kong Gateway supports requests over HTTP, TCP, and gRPC protocols.

In this example, a route is configured to match the path /graphql on the host example.com, which matches the above request.
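Route matching of this kind can be pictured with a small sketch. It is deliberately simplified and is not Kong's router, which has its own matching and priority rules: here a route matches on exact host, optional method, and path prefix, and the longest matching prefix wins. The `Route` class, `match_route` function, and route names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    name: str
    hosts: tuple[str, ...]
    paths: tuple[str, ...]
    methods: tuple[str, ...] = ()  # empty means "any method"


def match_route(routes: list[Route], method: str, host: str, path: str) -> Optional[Route]:
    """Return the route with the longest matching path prefix, or None."""
    best: Optional[Route] = None
    best_len = -1
    for route in routes:
        if route.hosts and host not in route.hosts:
            continue
        if route.methods and method not in route.methods:
            continue
        for prefix in route.paths:
            if path.startswith(prefix) and len(prefix) > best_len:
                best, best_len = route, len(prefix)
    return best


routes = [
    Route("graphql", hosts=("example.com",), paths=("/graphql",), methods=("POST",)),
    Route("catch-all", hosts=("example.com",), paths=("/",)),
]

matched = match_route(routes, "POST", "example.com", "/graphql")
```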

Authentication / Authorization

Once the Route and Service are determined, the request is run through all configured plugins, starting with security. In the above GraphQL example, JWT authentication is used. Kong directly supports JWT and dozens of other security and authentication technologies through its extensive plugin ecosystem. Requests are verified to contain valid signatures and claims, and violating requests are immediately rejected.
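To make "valid signatures and claims" concrete, here is a minimal sketch of verifying an HS256-signed JWT with only the Python standard library. It illustrates the check itself and is not Kong's JWT plugin; the shared secret, the claim values, and the helper names are assumptions, and production systems usually rely on a maintained JWT library and often on asymmetric algorithms.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


class InvalidToken(Exception):
    """Raised when a token fails signature or claim checks."""


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments omit padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Build a compact HS256 token; used here only to produce sample input."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if the signature and the `exp` claim are valid."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:
        raise InvalidToken("malformed token") from exc
    # Pin the algorithm so a token cannot choose a weaker one.
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        raise InvalidToken("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(signature, expected):
        raise InvalidToken("bad signature")
    exp = claims.get("exp") if isinstance(claims, dict) else None
    current = time.time() if now is None else now
    if not isinstance(exp, (int, float)) or exp <= current:
        raise InvalidToken("token expired or missing exp")
    return claims


# Hypothetical secret and claims for demonstration.
token = sign_hs256({"sub": "user-1", "exp": 2000}, b"demo-secret")
claims = verify_hs256(token, b"demo-secret", now=1000)
```

A request whose token raises `InvalidToken` would be rejected before any further plugin runs.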

Traffic Management

GraphQL adds new challenges when it comes to traffic management. Not all requests are equal, which makes traditional rate-limiting strategies ineffective. Kong Gateway provides specialized GraphQL plugins that take query complexity into account when implementing rate limiting.
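The difference between counting requests and counting cost can be sketched as below. The cost formula is an assumption made up for this illustration (one point per field, with nested selections multiplied by an expected list size) and is not the formula used by Kong's plugins; the selection encoding and class names are likewise hypothetical.

```python
from __future__ import annotations

from typing import Optional, Tuple

# A selection maps field name -> None for a leaf, or (expected_items, nested selection).
Selection = dict


def query_cost(selection: Selection) -> int:
    """Charge 1 per field, plus nested cost multiplied by the expected item count."""
    total = 0
    for child in selection.values():
        total += 1
        if child is not None:
            expected_items, nested = child
            total += expected_items * query_cost(nested)
    return total


class CostRateLimiter:
    """Fixed-window limiter that spends a cost budget instead of a request count."""

    def __init__(self, budget_per_window: int) -> None:
        self.budget_per_window = budget_per_window
        self.spent: dict[str, int] = {}

    def allow(self, consumer: str, cost: int) -> bool:
        used = self.spent.get(consumer, 0)
        if used + cost > self.budget_per_window:
            return False
        self.spent[consumer] = used + cost
        return True

    def reset_window(self) -> None:
        self.spent.clear()


# Hypothetical query: one user object and an expected 20 products.
selection = {
    "me": (1, {"id": None, "name": None}),
    "products": (20, {"id": None, "name": None, "price": None}),
}
cost = query_cost(selection)  # me: 1 + 1*2 = 3; products: 1 + 20*3 = 61

limiter = CostRateLimiter(budget_per_window=100)
first = limiter.allow("mobile-app", cost)
second = limiter.allow("mobile-app", cost)
```

Under a plain request counter both calls would look identical to a cheap query; with a cost budget, the second expensive query is refused while a small one would still fit.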

As GraphQL is an HTTP request at its core, you can use any of Kong’s other Layer 7 plugins such as proxy caching, request validation, configurable upstream timeouts, and even mocking to unblock the UI team as the subgraph service is being built.

Kong plugins define a logical default processing order. However, operators may dynamically build a plugin dependency graph to define the plugin execution order. A common example used is allowing rate-limiting evaluation to be processed prior to authorizing requests. Requests violating any configured traffic management rules are immediately rejected.

Observability

The OpenTelemetry (OTel) standard has driven observability into the mainstream. Understanding the request lifecycle and where time is being spent is key to building reliable, performant APIs.

Kong Gateway and Kong Mesh both support OTel without any additional dependencies. Information about plugin execution, DNS lookups, and upstream performance is generated and propagated to any OTel protocol (OTLP) compatible server. Along with OTel, Kong supports many popular analytics, monitoring, and streaming platforms including Datadog, AppDynamics, Zipkin, Prometheus, Kafka, and more.

Request Forwarding

Once Kong Gateway has executed all configured plugins, it is ready to forward the request to the upstream service. Kong Gateway supports load-balancing capabilities to distribute requests across a pool of instances of an upstream service. Requests are allocated using a round-robin algorithm by default, but Kong also supports consistent-hashing, least-connections, and latency. The latency algorithm uses peak EWMA (exponentially weighted moving average), which ensures that the balancer selects the upstream target by lowest latency.
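The intuition behind latency-based balancing can be sketched with a simplified peak-EWMA tracker. This is not Kong's implementation: real peak-EWMA balancers typically also decay by elapsed time and account for in-flight requests. The `decay` value, target names, and class name are assumptions.

```python
from __future__ import annotations


class PeakEwmaBalancer:
    """Pick the target with the lowest smoothed latency (simplified peak EWMA)."""

    def __init__(self, targets: list[str], decay: float = 0.5) -> None:
        self.decay = decay
        self.latency_ms = {target: 0.0 for target in targets}

    def record(self, target: str, sample_ms: float) -> None:
        current = self.latency_ms[target]
        if sample_ms > current:
            # "Peak" behaviour: react to a latency spike immediately.
            self.latency_ms[target] = sample_ms
        else:
            # Otherwise recover gradually via an exponentially weighted average.
            self.latency_ms[target] = self.decay * current + (1 - self.decay) * sample_ms

    def pick(self) -> str:
        return min(self.latency_ms, key=self.latency_ms.get)


balancer = PeakEwmaBalancer(["router-a", "router-b"])
balancer.record("router-a", 100.0)
balancer.record("router-b", 20.0)
fastest_initially = balancer.pick()

balancer.record("router-b", 300.0)  # spike: router-b jumps straight to 300
after_spike = balancer.pick()

balancer.record("router-b", 20.0)   # 0.5 * 300 + 0.5 * 20 = 160
balancer.record("router-b", 20.0)   # 0.5 * 160 + 0.5 * 20 = 90
after_recovery = balancer.pick()
```

The asymmetry is the point of the design: a slow target is avoided as soon as it spikes, but it has to prove itself over several fast responses before it is preferred again.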

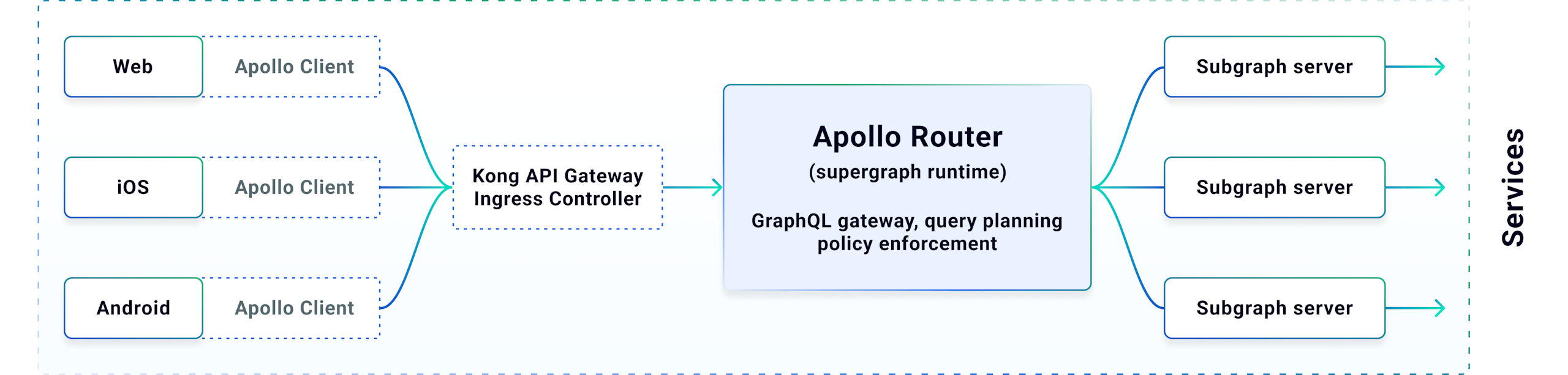

For our example GraphQL request, Kong Gateway forwards the request on to the Apollo Router, which is a high-performance supergraph runtime.

Supergraph Runtime Execution

Apollo Router processes all inbound GraphQL requests and plans and executes requests across subgraphs. Apollo Router is written in Rust for increased throughput, reduced latency, and reduced variance. It also enforces graph-native policies for security, performance, and other operational concerns, which can be configured by platform teams in YAML, Rhai scripting, or their language of choice.

GraphQL Query Parsing & Validation Against the Public API Schema

As a spec-compliant GraphQL server, the router parses and validates each GraphQL request to ensure the query conforms to the GraphQL schema. Apollo Router is powered by the declaratively composed supergraph schema that contains every type and field in your graph and which subgraph(s) they can be fetched from, including various federation directives that define build and runtime policies. The full supergraph schema may internally include fields marked `@inaccessible`, which applications cannot use. These fields are excluded from the public API schema that the router validates GraphQL requests against.

Graph-Native Security and Performance Policy Enforcement

GraphQL-native security and performance policies are enforced early in the request lifecycle to block malicious traffic at the edge of your supergraph and protect the underlying microservices from excessive load. For example, subgraph schema directives like `@authenticated` and `@requiresScopes` enable the Apollo Router to dynamically calculate the required JWT claims to access all the fields in a query and gracefully degrade the response by removing unauthorized fields from the query and returning a suitable field-level error for each. Apollo Router can enforce multiple GraphQL-native security policies, including query depth and height limits, contracts, and a safelist of known queries.
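The graceful degradation described above can be sketched as a filter over the requested field paths. This is a conceptual illustration, not Apollo Router's implementation: it assumes each protected field needs all of a flat set of scopes, whereas the real directives allow richer combinations, and the field paths, scope names, and error shape are hypothetical.

```python
from __future__ import annotations


def authorize_fields(
    requested: list[str],
    required_scopes: dict[str, set[str]],
    token_scopes: set[str],
) -> tuple[list[str], list[dict]]:
    """Split requested field paths into allowed paths and field-level errors."""
    allowed: list[str] = []
    errors: list[dict] = []
    for path in requested:
        missing = required_scopes.get(path, set()) - token_scopes
        if missing:
            # The field is dropped from the query and reported, not the whole request.
            errors.append({"message": "Unauthorized field", "path": path.split(".")})
        else:
            allowed.append(path)
    return allowed, errors


# Hypothetical policy derived from schema directives: field path -> required scopes.
policy = {"me.email": {"profile:read"}}

allowed, errors = authorize_fields(
    requested=["me.id", "me.email", "products.name"],
    required_scopes=policy,
    token_scopes={"catalog:read"},
)
```

The client still receives `me.id` and `products.name`, plus an error entry explaining why `me.email` is absent.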

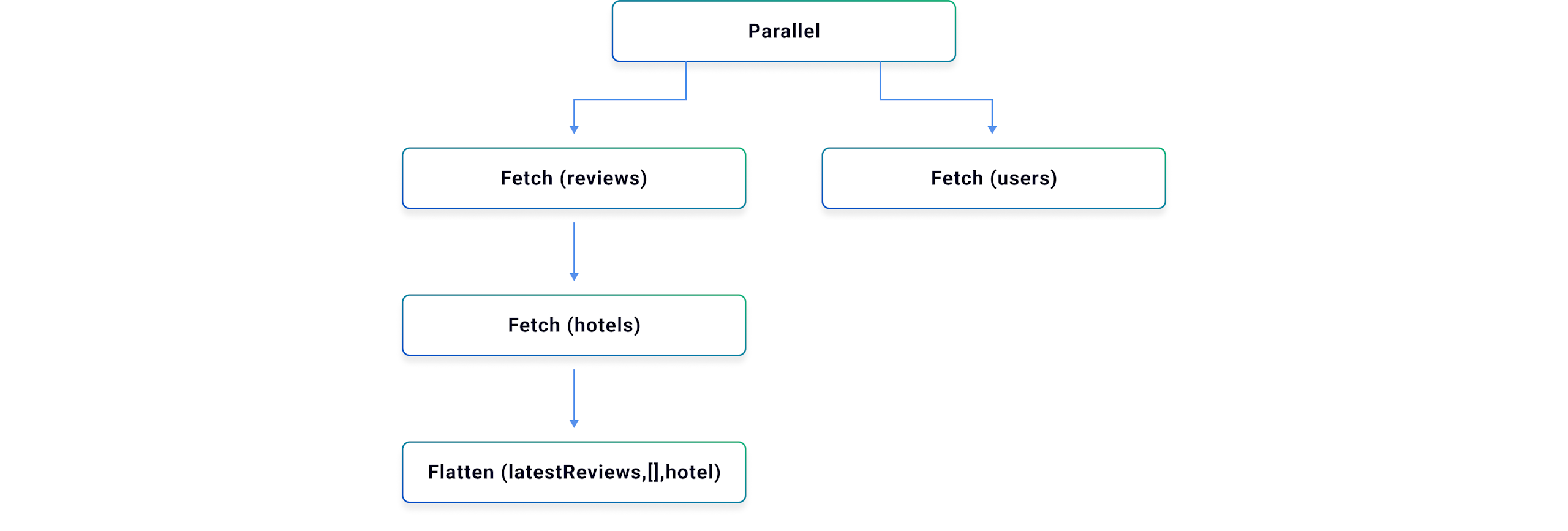

Intelligent Query Planning

Apollo Router creates an optimized query plan for each GraphQL operation (query, mutation, subscription) that minimizes the cost, complexity, and latency for each query, so it can optimally fetch and join data from multiple subgraph APIs into a single unified response for apps to consume.

With Apollo Federation 2, fields can be denormalized across subgraphs for improved performance using the `@shareable` subgraph directive to relax the default single source of truth. Multiple subgraphs can provide the same root query fields, and subgraphs can `@override` a field to migrate it from one subgraph to another. When clients need to defer the slow part of a query, often due to a slow underlying REST API, they can specify the `@defer` directive in a query so the rest of the query can be returned immediately for more responsive UX.

Apollo Router’s query planner is able to take all of the supergraph schema and directives into consideration and generate an optimal query plan. This minimizes the number of subgraph API calls and adheres to the declarative policies defined in the subgraph schemas and composed into the supergraph schema that powers the router.

Query Execution and API-Side Joins

The router then executes the query plan in parallel where possible and in sequence when API-side joins require data to be fetched from multiple subgraphs in sequence. To support API-side joins of GraphQL data (similar to how tables are joined in a database), each of the over 30 subgraph frameworks provides the ability to fetch additional data for a GraphQL entity using the available `@key` fields defined in the subgraph schemas.

The router can then satisfy the client query by orchestrating the subgraph API calls using both the standard GraphQL query, mutation, and subscription fields a subgraph provides, along with the ability to fetch additional entity fields to process API-side joins. The final result is then flattened into the requested query shape and returned to the client.
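An API-side join can be sketched with two in-memory stand-ins for subgraphs. The sketch assumes a `Product` entity keyed by `id`, a products subgraph that owns `name`, and a reviews subgraph that contributes `averageRating`; all of these names and the sample data are hypothetical. The entity lookup mirrors the general shape of a federation entity fetch, where the router sends representations containing `__typename` and the key fields.

```python
from __future__ import annotations

# Hypothetical data owned by two separate subgraphs.
PRODUCTS_SUBGRAPH = {
    "p1": {"id": "p1", "name": "Keyboard"},
    "p2": {"id": "p2", "name": "Monitor"},
}
REVIEWS_SUBGRAPH = {
    "p1": {"averageRating": 4.5},
    "p2": {"averageRating": 3.8},
}


def fetch_products() -> list[dict]:
    """Step 1: root query field served by the products subgraph."""
    return [dict(product) for product in PRODUCTS_SUBGRAPH.values()]


def fetch_review_entities(representations: list[dict]) -> list[dict]:
    """Step 2: entity lookup in the reviews subgraph, keyed by the entity's key field."""
    return [REVIEWS_SUBGRAPH[rep["id"]] for rep in representations]


def execute_plan() -> dict:
    products = fetch_products()
    # Step 2 depends on step 1: the keys come from the first fetch.
    representations = [{"__typename": "Product", "id": p["id"]} for p in products]
    extras = fetch_review_entities(representations)
    # Join on position, then shape the result like the client's query.
    merged = [{**product, **extra} for product, extra in zip(products, extras)]
    return {"data": {"products": merged}}


response = execute_plan()
```

Fetches that do not depend on each other, such as `me` and `products` in the earlier example request, could run in parallel; only the dependent entity fetch has to wait.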

Supergraph Observability

Graph-native telemetry is emitted by the router during request execution, so Apollo GraphOS can power field usage insights and schema checks that assess the impact of potentially breaking changes. This includes query shape, field usage, and optionally select headers — but not the query response or Authorization header.

OpenTelemetry tracing and metrics are also natively supported by Apollo Router to support APM use cases for performance, monitoring, and alerting. Built-in support for Datadog, Jaeger, OpenTelemetry Collector, Zipkin, and Prometheus is also included, along with options for sampling, limits, and custom attributes/resources.

Health checks, commonly used in Kubernetes deployments, are also provided to ensure each Apollo Router instance is ready before Kubernetes sends traffic to that instance.

Supergraph Runtime Extensibility

Apollo Router provides a well-defined extensibility model to hook into the relevant portions of the API request lifecycle, with full access to the GraphQL query and supergraph schema. Rhai scripting, similar to Lua scripting in NGINX and Envoy, enables lightweight in-memory manipulation of headers, cookies, and request context. Co-processors for Apollo Router allow an HTTP sidecar, written in any programming language, to hook into the request lifecycle to support more advanced and bespoke integrations.

Domain-Driven Microservices

Apollo Router makes requests to domain services that live at the microservice layer. At this stage, the subgraph service has transformed the original GraphQL request into a domain service-specific request, which may mean REST, gRPC, or even direct database queries.

API Gateway at the Mesh Edge

These requests pass through Kong Gateway as either Layer 4 or Layer 7 traffic at the mesh edge. As before, Kong’s flexible routing engine checks the incoming requests for matching definitions, and any configured plugins are executed before the request is forwarded to the upstream destination.

Service Discovery / Inter-Service Connectivity

The upstream domain service may require coordination among multiple microservices or database objects to completely fulfill the request. Keeping track of a service’s dependencies is hard in a microservices world, but it can be solved using Service Discovery. Kong Mesh ships with a DNS resolver to provide service naming — a mapping of hostname to virtual IPs of services registered in Kong Mesh. This allows services to communicate using simple DNS names, greatly reducing application-level code and configuration complexity.

Zero-Trust Security

Instead of relying on traditional security methods that grant access based on network location (e.g., inside or outside of a corporate network), zero-trust security operates on the assumption that threats could be anywhere, and as such, no device or service should be automatically trusted, irrespective of where they connect from.

In Kong Mesh, zero-trust security is inherently integrated by using mTLS to encrypt the traffic between services and authenticate the services to each other. By doing so, even if malicious entities gain access to the network, they cannot readily interpret or tamper with the traffic, nor can they pretend to be a valid service without the proper credentials.

Furthermore, the mesh can provide fine-grained control over which services are allowed to communicate with each other via Traffic Permissions, further tightening the security stance.
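The effect of such permissions can be sketched as a default-deny allow list over service identities. This is only a conceptual model, not Kong Mesh's policy format or enforcement path; the service names and the `is_allowed` helper are hypothetical, and in a real mesh the source identity would come from the peer's mTLS certificate rather than a plain string.

```python
from __future__ import annotations

# Hypothetical allow list of (source service, destination service) pairs.
TRAFFIC_PERMISSIONS: set[tuple[str, str]] = {
    ("kong-gateway", "products-subgraph"),
    ("products-subgraph", "inventory-service"),
}


def is_allowed(source: str, destination: str, permissions: set[tuple[str, str]]) -> bool:
    """Default deny: traffic passes only if the pair is explicitly permitted."""
    return (source, destination) in permissions


gateway_to_subgraph = is_allowed("kong-gateway", "products-subgraph", TRAFFIC_PERMISSIONS)
gateway_to_inventory = is_allowed("kong-gateway", "inventory-service", TRAFFIC_PERMISSIONS)
```

Because the check is directional, permitting the gateway to call a subgraph does not let it reach the services behind that subgraph.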

Traffic Management and Observability

Kong Mesh handles a variety of traffic reliability features by applying declarative policies to data plane proxies. Common traffic policies include health checks, retries, circuit breaking, and more. Observability data is collected at each stage of the mesh and forwarded to collection services, and this too is configured via policies applied to the data plane.
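Of the policies listed, circuit breaking is the least obvious, so here is a minimal sketch of the behaviour a data plane proxy provides on a service's behalf. It is not Kong Mesh's or Envoy's implementation; the thresholds, the explicit `now` timestamps, and the class name are assumptions chosen to keep the example deterministic.

```python
from __future__ import annotations

from typing import Optional


class CircuitBreaker:
    """Stop calling an upstream after repeated failures, then retry after a pause."""

    def __init__(self, failure_threshold: int = 3, reset_after_s: float = 30.0) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow_request(self, now: float) -> bool:
        if self.opened_at is None:
            return True  # closed: traffic flows normally
        # Open: block until the pause has elapsed, then let a trial request through.
        return now - self.opened_at >= self.reset_after_s

    def record_failure(self, now: float) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = now

    def record_success(self) -> None:
        # A successful call closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None


breaker = CircuitBreaker(failure_threshold=2, reset_after_s=10.0)
breaker.record_failure(now=0.0)
still_closed = breaker.allow_request(now=1.0)
breaker.record_failure(now=1.0)
blocked = breaker.allow_request(now=2.0)
trial_allowed = breaker.allow_request(now=11.0)
```

With a mesh, this logic lives in the proxy and is switched on by policy, so the service code does not need to carry it.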

Once the request is fulfilled, a response is returned via the gateway to the subgraph. The subgraph's result is then assembled with the results from any other subgraphs into the full response for the original GraphQL request, which is returned to the client.

Powering a Next-Generation API Platform

Kong and Apollo technologies are complementary, and when used in conjunction, they provide everything you need to power a modern API platform. Both companies have built best-in-class SaaS solutions that power the unified API platform.

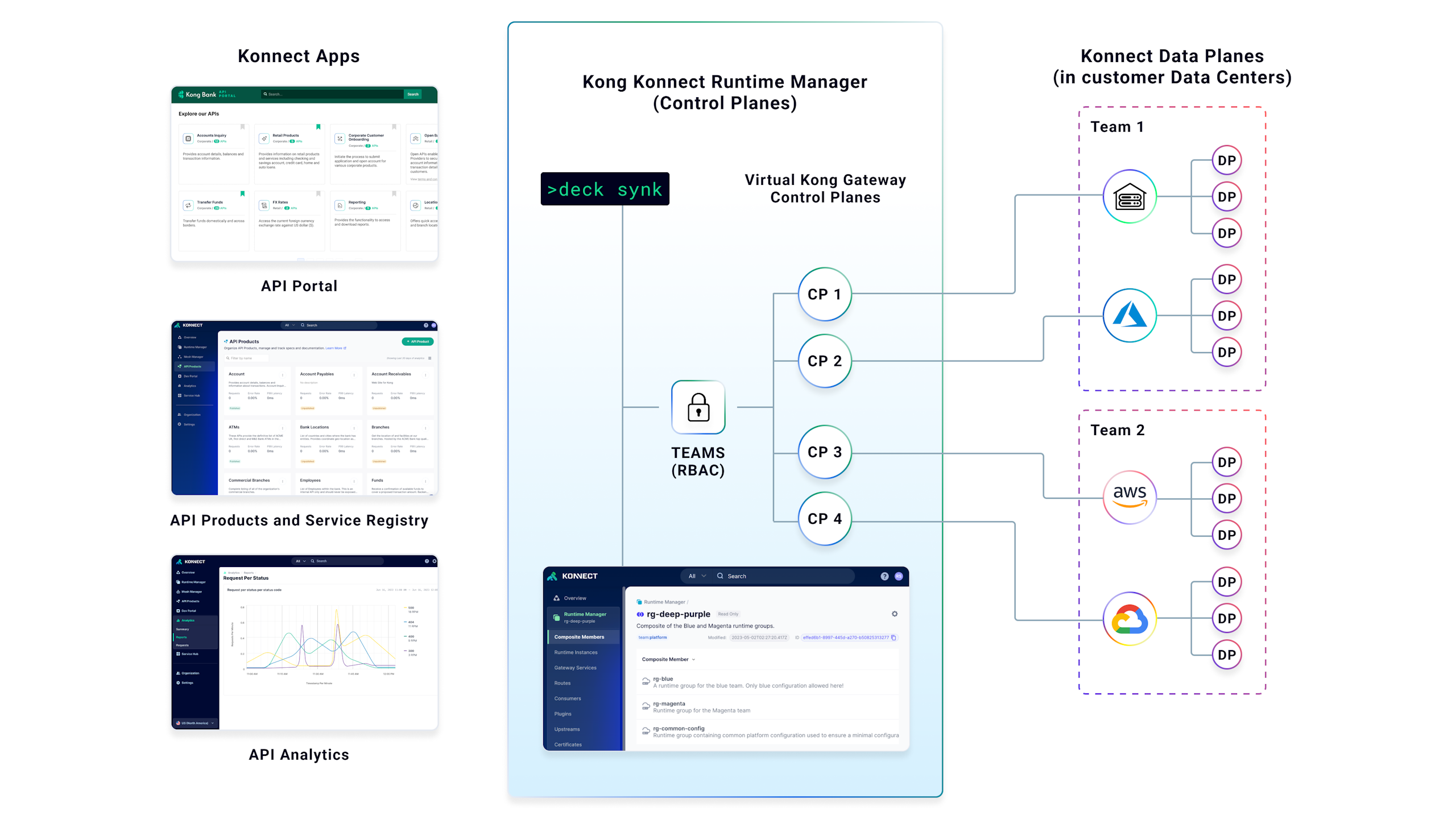

Kong Konnect

Kong Konnect provides a unified API management platform designed to decrease operational complexity and enable scaled federated governance across multiple teams. Kong Konnect enables the management of Kong Gateway, Kong Ingress Controller, and Kong Mesh, offering a single management interface across all Kong runtime technologies.

Kong Konnect is designed to deliver comprehensive API lifecycle management, ensuring adaptability across various clouds, teams, protocols, and architectural designs. It encompasses API configuration, API portals, service catalogs, and deep API analytics functionalities.

Kong Konnect empowers organizations to build and operate the API gateway and domain-driven microservice layers:

Deliver a global API registry — Kong Konnect’s Service Hub ensures every service, regardless of technology, is cataloged and searchable, creating a single source of truth across the organization.

Deliver comprehensive API portals — Developers can navigate APIs, obtain detailed reference documentation, experiment with endpoints, and register applications to consume APIs — all through a single, customizable API portal.

Real-time monitoring and analytics — Kong Konnect provides instantaneous access to vital statistics, monitoring tools, and pattern recognition, allowing businesses to gauge the performance of their APIs and gateways in real time.

Employ modern operational methods — Kong Konnect enables a Kubernetes-centric operational process with the Kong Ingress Controller integration. Declarative configuration and Kong Konnect management APIs enable a DevOps-ready, config-driven API management layer.

Leverage an ecosystem of plugins — Kong Konnect is enabled with an extensive catalog of both community and enterprise plugins. These plugins introduce vital functionalities such as authentication, authorization, rate limiting, and caching — saving critical API developer time and resources.

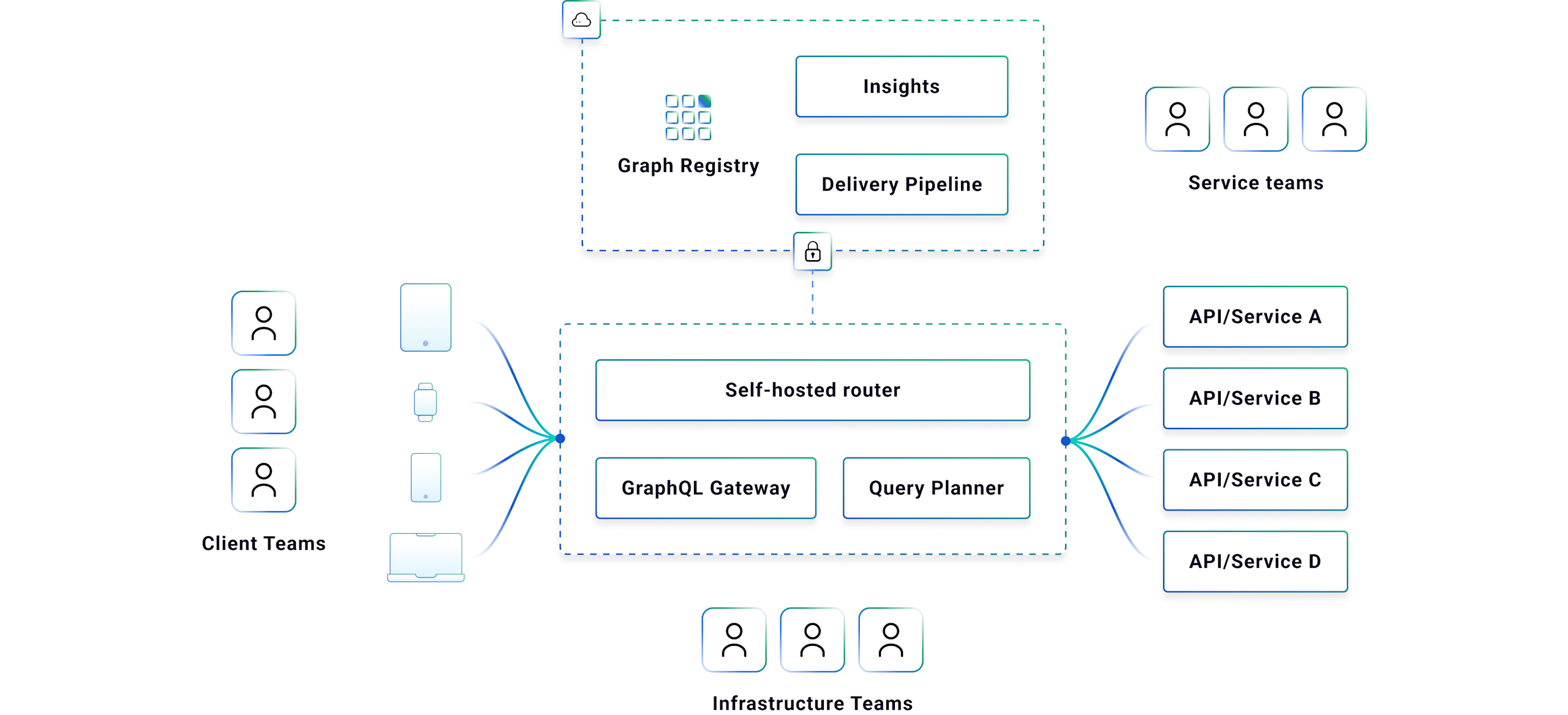

Apollo GraphOS

Apollo GraphOS provides the architecture, infrastructure, and workflows to ship a self-service GraphQL platform. This platform provides an intuitive service access layer for client teams, enabling them to ship features faster and deliver better application performance — regardless of how many underlying services they use.

GraphOS enables organizations to build and operate the supergraph layer on top of existing APIs using:

Modular graph development — Monoliths cause bottlenecks that slow down app development at every scale. With GraphOS, you build your graph on a modular, scalable architecture with subgraphs that link to each other.

Fast, unified query execution — GraphOS links your subgraphs together into the supergraph with a blazing-fast, cloud-native runtime. Access all underlying capabilities with a single GraphQL query and get automatic support for advanced GraphQL features like @defer.

Safe and rapid graph evolution — Modern apps change by the hour, and your API architecture needs to do the same. GraphOS gives you the tools to develop schemas collaboratively with a single source of truth, deliver changes safely with graph CI/CD, and improve performance with field and operation-level observability.

Graph-native security, performance, and governance — GraphOS supports build and runtime policies that can be defined by the appropriate team and enforced by GraphOS at build-time in the GraphOS CI/CD pipeline and at runtime by Apollo Router. Distributed policy ownership and centralized policy enforcement points are key to scaling your graph efficiently, so each team can own their slice of the graph and deploy autonomously with speed and safety.

Advanced runtime options for enterprises — To meet the most demanding enterprise requirements, GraphOS offers a flexible runtime deployment model to give enterprise architecture and operations teams maximum control.

In summary, Apollo GraphOS is engineered to meet the challenges of modern application development head-on, offering scalability, speed, and security while supporting collaborative and autonomous team workflows.

Conclusion

With Kong’s unrivaled API platform and Apollo’s unmatched GraphQL capabilities, organizations are empowered to navigate the intricacies of the modern API landscape.

Combining Kong's strengths in API management with Apollo's expertise in GraphQL, the reference architecture outlined provides organizations with a well-rounded, effective solution. The practical guidance and best practices laid out set a clear standard for what effective API and GraphQL management should look like.

Want to learn more about API management and GraphQL?

Download the joint paper by Kong and Apollo for practical insights, vendor-agnostic best practices, and real-world architectures for a next-generation view of API platforms.