AI & LLM Governance with Kong

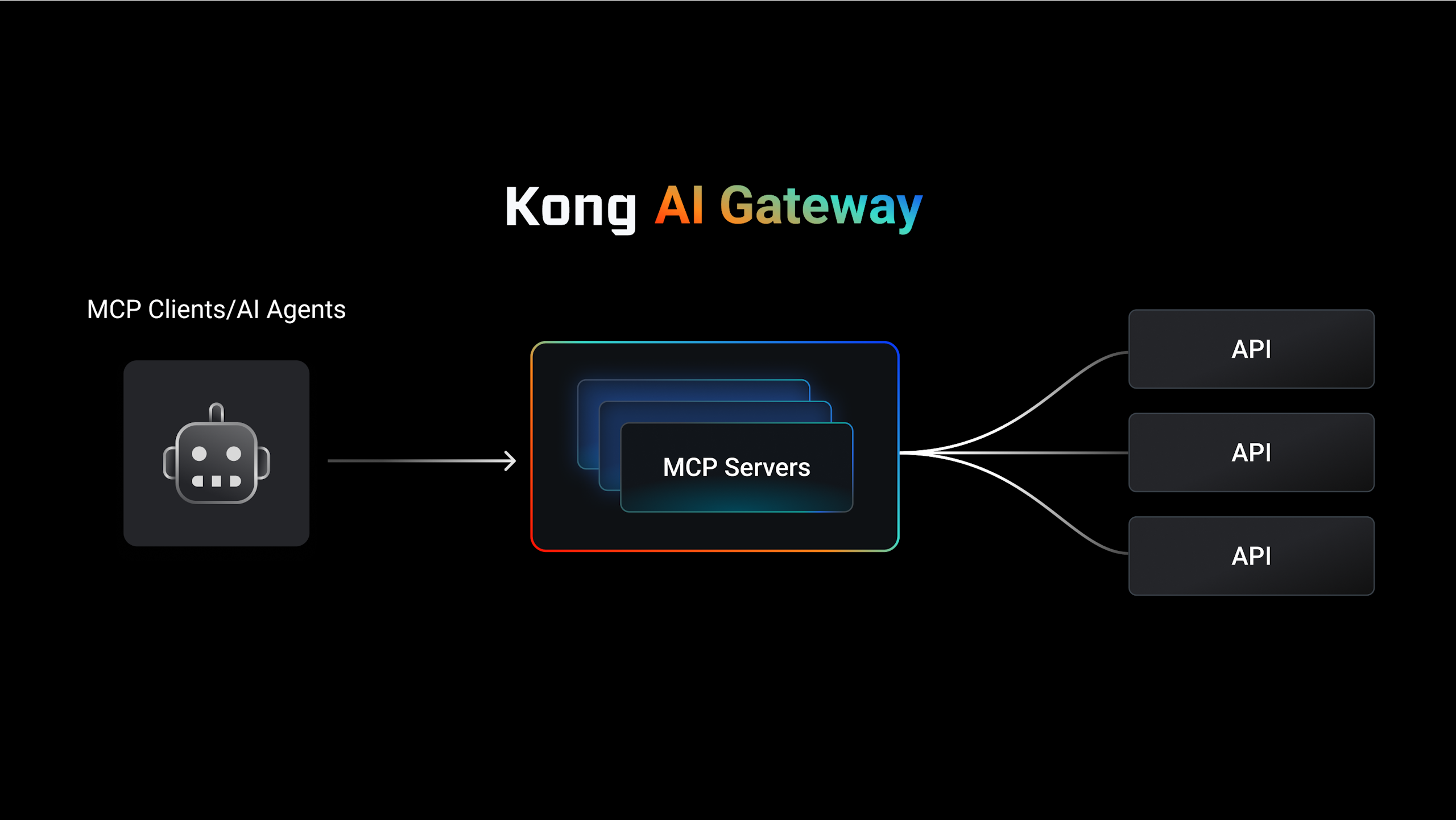

Expand governance and control beyond agentic workflows into the LLM and API layers.

Bring together MCP, AI coding tools, and every agent-critical API, LLM, and eventing resource in a single place.

AI coding tools are the first real promise of the agentic future, but they need access to tools to consume APIs and enable agentic workflows. Until now, this has been messy: many organizations struggle to govern the rapid proliferation of MCP servers, which creates security issues and makes it hard to unify agentic access to APIs, AI resources, and real-time data.

That changes now.

Govern MCP server creation, testing, exposure, security, consumption, and observability — all in one platform.

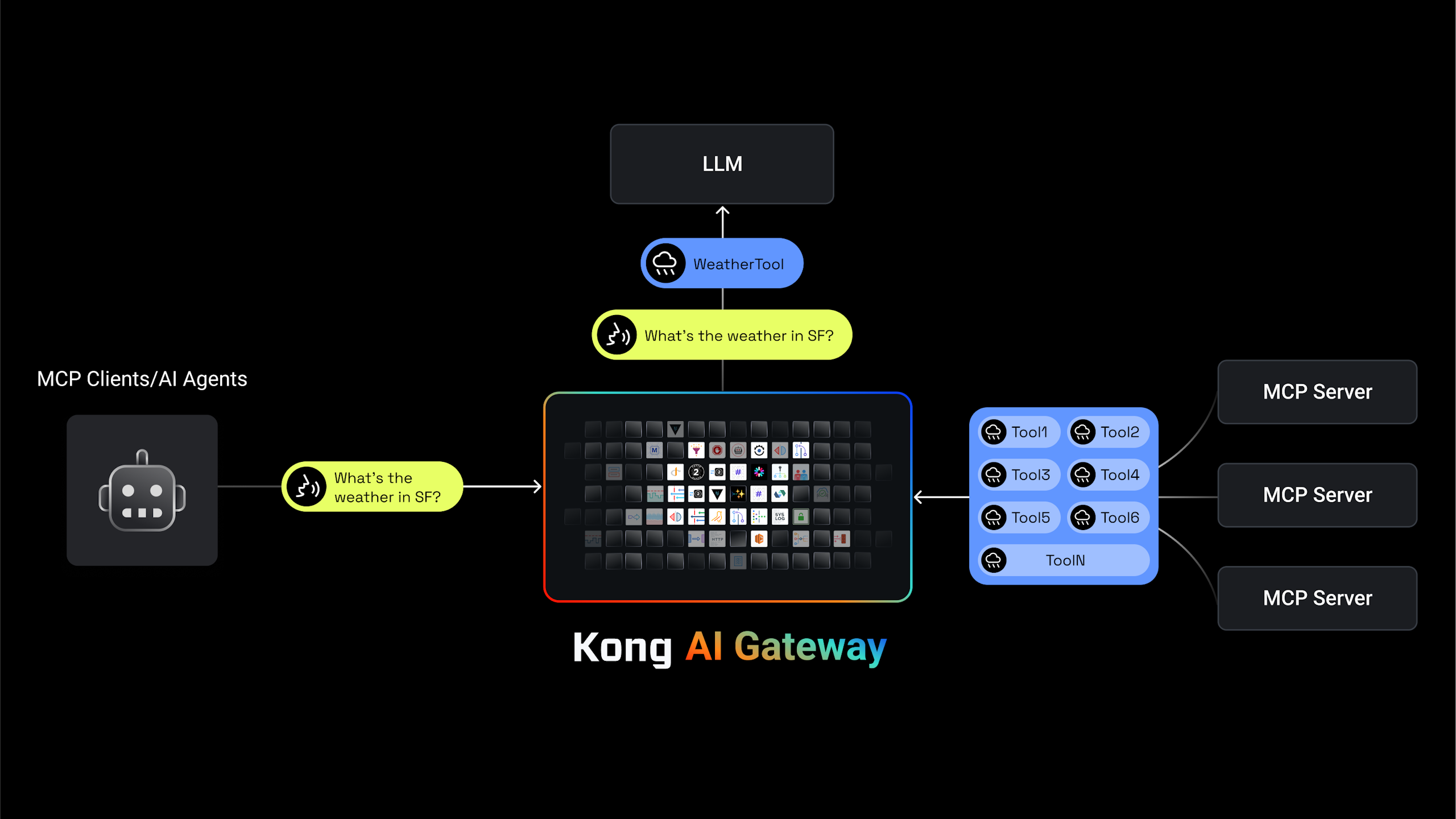

Solve the hard problems around MCP security, server generation, observability, and cost control. And start to plan your MCP monetization strategy, too.

Control and optimize cost structures through token throttling and semantic caching.
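As a rough illustration of what token throttling means in practice, the sketch below enforces a per-consumer budget of LLM tokens over a sliding time window. It is not Kong's implementation or configuration; the `TokenBudget` class, its fields, and the window policy are all hypothetical names chosen for this example.

```python
import time
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class TokenBudget:
    """Sliding-window budget of LLM tokens for one consumer (hypothetical helper)."""

    limit: int               # maximum tokens allowed inside one window
    window_seconds: float    # length of the sliding window
    _events: List[Tuple[float, int]] = field(default_factory=list)  # (timestamp, tokens)

    def _used(self, now: float) -> int:
        # Drop usage records that have aged out of the window, then sum the rest.
        cutoff = now - self.window_seconds
        self._events = [(t, n) for t, n in self._events if t > cutoff]
        return sum(n for _, n in self._events)

    def try_consume(self, tokens: int, now: Optional[float] = None) -> bool:
        """Record the usage and return True if it fits the budget, else return False."""
        now = time.monotonic() if now is None else now
        if self._used(now) + tokens > self.limit:
            return False  # a gateway would typically answer HTTP 429 here
        self._events.append((now, tokens))
        return True


budget = TokenBudget(limit=100, window_seconds=60)
print(budget.try_consume(60, now=0.0))   # fits within the 100-token budget
print(budget.try_consume(50, now=1.0))   # would exceed the budget, so it is rejected
```

Counting tokens rather than requests ties the limit to what actually drives LLM cost, since a single request can be very small or very large.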

Offload MCP server generation to your MCP Gateway to ensure consistent and rapid MCP server generation on top of existing API and event resources.

Use Kong APIOps and Gateway policies to protect every MCP server with standardized security policies that are purpose-built for MCP and LLM use cases.

Secure all MCP servers in one place with Kong’s dedicated MCP authentication plugin. Acting as an OAuth 2.0 resource server, Kong centralizes security and applies consistent security policies across every Kong-managed MCP server automatically.
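To make the resource-server role concrete, here is a minimal sketch of the checks an OAuth 2.0 resource server typically applies to a bearer token before letting a request through. It is not the Kong plugin's code: `check_bearer`, the `claims_for` lookup (a stand-in for real signature verification or token introspection), and the audience and scope values are assumptions for illustration. The status codes and `WWW-Authenticate` error values follow RFC 6750.

```python
import time
from typing import Mapping, Optional, Tuple


def check_bearer(
    authorization: Optional[str],
    claims_for: Mapping[str, dict],  # hypothetical: token string -> verified claims
    audience: str,
    required_scope: str,
    now: Optional[float] = None,
) -> Tuple[int, dict]:
    """Return (status, response_headers) for a request to a protected MCP server."""
    now = time.time() if now is None else now

    # No credentials, or not a bearer token: challenge the client.
    if not authorization or not authorization.startswith("Bearer "):
        return 401, {"WWW-Authenticate": "Bearer"}

    # Unknown, expired, or wrong-audience tokens are invalid.
    # (Simplification: "aud" is treated as a single string here.)
    claims = claims_for.get(authorization[len("Bearer "):])
    if claims is None or claims.get("exp", 0) <= now or claims.get("aud") != audience:
        return 401, {"WWW-Authenticate": 'Bearer error="invalid_token"'}

    # Valid token that lacks the scope this operation needs.
    if required_scope not in claims.get("scope", "").split():
        return 403, {"WWW-Authenticate": 'Bearer error="insufficient_scope"'}

    return 200, {}
```

Running these checks once at the gateway, rather than in each MCP server, is what keeps the policy consistent across servers.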

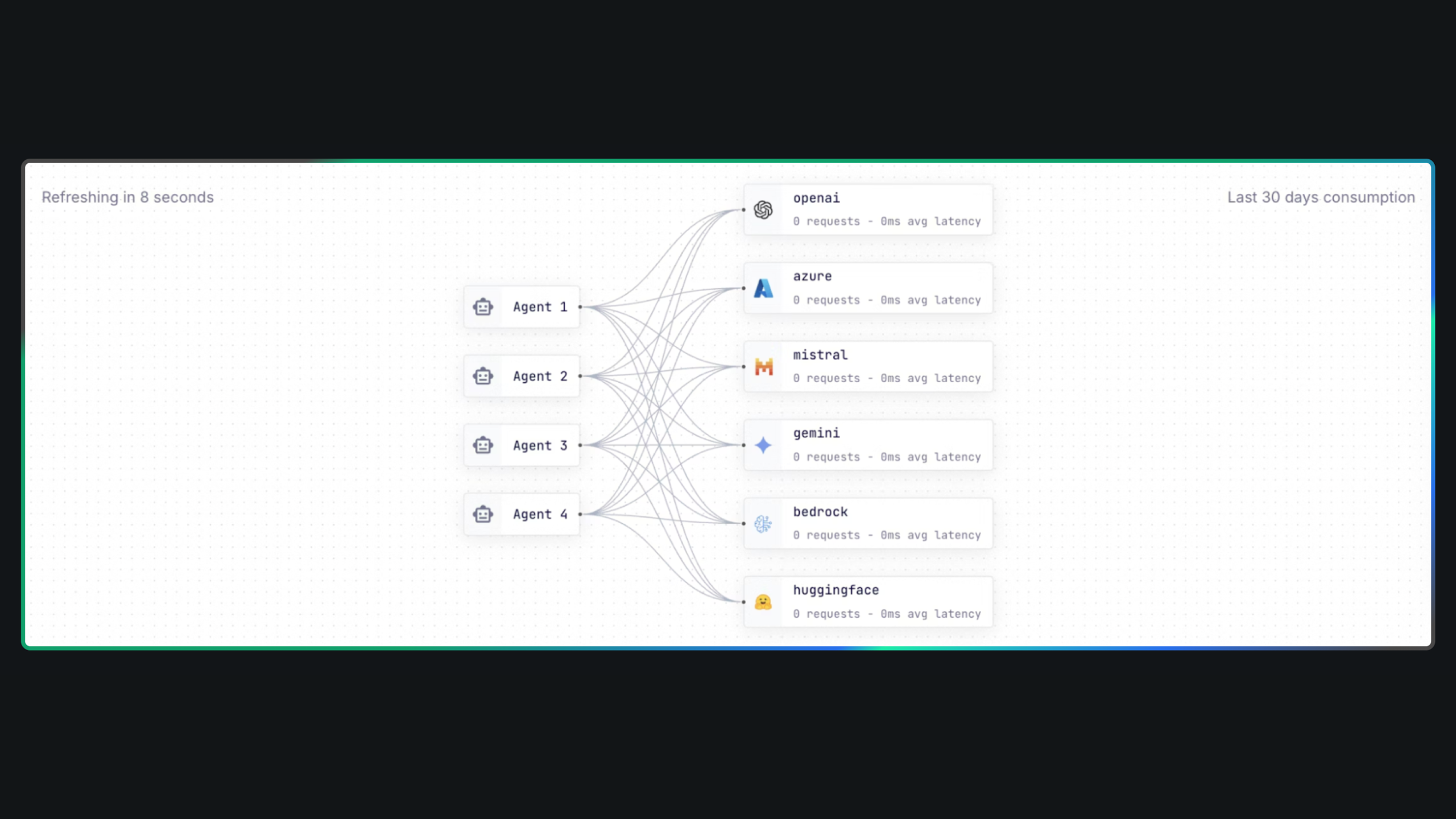

Capture information about the tools, workflows, prompts, and other elements that make up interactions between MCP clients and servers. Combined with the dashboards and advanced analytics in Kong Konnect, teams get a single-pane-of-glass view into all API, eventing, LLM, and MCP performance and behavior.

Configure your AI coding tools to consume the Developer Portal MCP server and deliver seamless, secure API access for agentic development workflows.

Design elegant metering and billing controls that sit between agents and the MCP servers that serve your APIs, event streams, and data sources, all within a unified agentic monetization solution.

Leverage Kong’s semantic intelligence to deliver only the tools relevant to the prompt to the LLM. Keeping irrelevant tool definitions out of the context improves LLM performance and reduces the overall cost of agentic workflows.
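The general idea of prompt-based tool selection can be sketched as ranking tool descriptions by similarity to the prompt and forwarding only the best matches. The example below is an illustration under stated assumptions, not Kong's algorithm: `embed` is a toy word-count stand-in for a real embedding model, and `select_tools`, `top_k`, `min_score`, and the tool names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_tools(prompt: str, tools: Dict[str, str], top_k: int = 2, min_score: float = 0.1) -> List[str]:
    """Return up to top_k tool names whose descriptions best match the prompt."""
    p = embed(prompt)
    scored = [(cosine(p, embed(desc)), name) for name, desc in tools.items()]
    scored = [(s, n) for s, n in scored if s >= min_score]  # drop unrelated tools
    scored.sort(key=lambda item: (-item[0], item[1]))       # best score first, name as tie-break
    return [name for _, name in scored[:top_k]]


# Hypothetical tool catalog: tool name -> description.
tools = {
    "get_order_status": "look up the status of a customer order",
    "refund_payment": "refund a payment to a customer",
    "get_weather": "current weather forecast for a city",
}
print(select_tools("What is the status of order 1234?", tools))
```

Only the selected tool definitions would then be included in the request to the LLM, which keeps the context shorter than sending the full catalog.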

Kong named a Magic Quadrant™ Leader for API Management, plus positioned furthest for Completeness of Vision.

Make your AI & LLM initiatives secure, reliable, and cost-efficient by governing every step with the Kong AI Gateway.

Build an API strategy that’s AI-ready and roll out your projects with confidence.

Contact us today and tell us more about your configuration, and we can offer details about features, support, plans, and consulting.