Power OpenAI applications with Kong

Unleash the full potential of your OpenAI applications: Achieve scalability, security, insights and cost efficiency.

Introduction

ChatGPT has been taking the world of development by storm since OpenAI introduced it in November 2022. ChatGPT is an artificial intelligence chatbot whose ease of use made it the fastest-adopted application of all time, with over 100 million users in January 2023, just two months after its release.

There is a lot to like about ChatGPT, as it helps with many tasks that are (or used to be) time-consuming. Examples include helping writers develop a blog, students write a term paper, marketers translate product descriptions, developers write, debug, and document code, designers create a new brand, composers generate new music, and much more.

As a result, many organizations are looking for ways to take advantage of ChatGPT.

OpenAI models, plugins and APIs

OpenAI provides a variety of services for building applications with artificial intelligence capabilities, of which ChatGPT is the most prominent. These capabilities include chat, text search, classification, completion and comparison, as well as image generation and speech-to-text transcription and translation. OpenAI services are accessible through a web user interface and through APIs.

Diagram 1: OpenAI products and services as of June 2023.

OpenAI also accepts plugins for the ChatGPT service, which its AI models may then call. Plugins are available to ChatGPT Plus customers and developers on the plugin marketplace. Plugins submitted to OpenAI must be accessible over APIs.

Governing consumption of OpenAI APIs

OpenAI's rapid adoption is also fueling AI adoption in the enterprise. In a Gartner survey, 45% of business executives were looking into enterprise AI.

While it is generally prudent to apply sound governance practices when consuming APIs or making them consumable, in the case of AI APIs it is crucial.

Here are some practical examples of how Kong can help make OpenAI API interactions more secure, reliable, and observable.

Control and visibility over ChatGPT model usage

OpenAI APIs are priced neither per request nor by flat subscription, but per token, where tokens measure the amount of text a model processes (both the prompt it receives and the output it generates). API consumers must submit credentials to use the APIs, and their organizations are billed for that consumption.

The cost per token varies by model: more complex models give better results at a higher cost. API consumers can quickly run up a large bill for their organization if they do not pay attention to which models they use and how much they ask of them, or if they neglect to cap the number of tokens a model may use for a task.

Diagram 2: OpenAI pricing for a few language models
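To make the effect of token limits concrete, here is a minimal Python sketch (not an OpenAI or Kong API) that computes an upper bound on the cost of a single completion request. It assumes three things: the prompt is billed once, each of the `n` requested completions may generate up to `max_tokens` tokens, and prompt and completion tokens share one price. The model names and the `PRICE_PER_1K_TOKENS` values are illustrative assumptions chosen to reflect the roughly 10x Davinci/Curie price gap. Check OpenAI's current pricing for real figures.

```python
# Illustrative USD prices per 1K tokens (assumption: check OpenAI's current
# pricing; only the roughly 10x Davinci/Curie ratio matters for this example).
PRICE_PER_1K_TOKENS = {
    "text-davinci-003": 0.02,
    "text-curie-001": 0.002,
}


def worst_case_cost(model: str, prompt_tokens: int, max_tokens: int, n: int = 1) -> float:
    """Upper bound, in USD, on the cost of one completion request.

    Assumes the prompt is billed once and each of the n completions may
    generate up to max_tokens tokens at the same per-token price.
    """
    price = PRICE_PER_1K_TOKENS[model]
    billable_tokens = prompt_tokens + n * max_tokens
    return billable_tokens / 1000 * price


if __name__ == "__main__":
    # Same request shape: 500-token prompt, 4 completions of up to 1,500 tokens each.
    print(round(worst_case_cost("text-curie-001", 500, 1500, n=4), 4))    # 0.013
    print(round(worst_case_cost("text-davinci-003", 500, 1500, n=4), 4))  # 0.13
```

The bound grows linearly with both `max_tokens` and `n`. Capping those two parameters at the gateway therefore caps the worst-case spend per request.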

Organizations would likely benefit from limiting which models are accessible, how heavily they may be used, who uses them, and what data is exposed to them. Collecting telemetry on API usage is also prudent, with reports and alerts when usage meets defined conditions. We illustrate some of these use cases below.

Practical policy applications

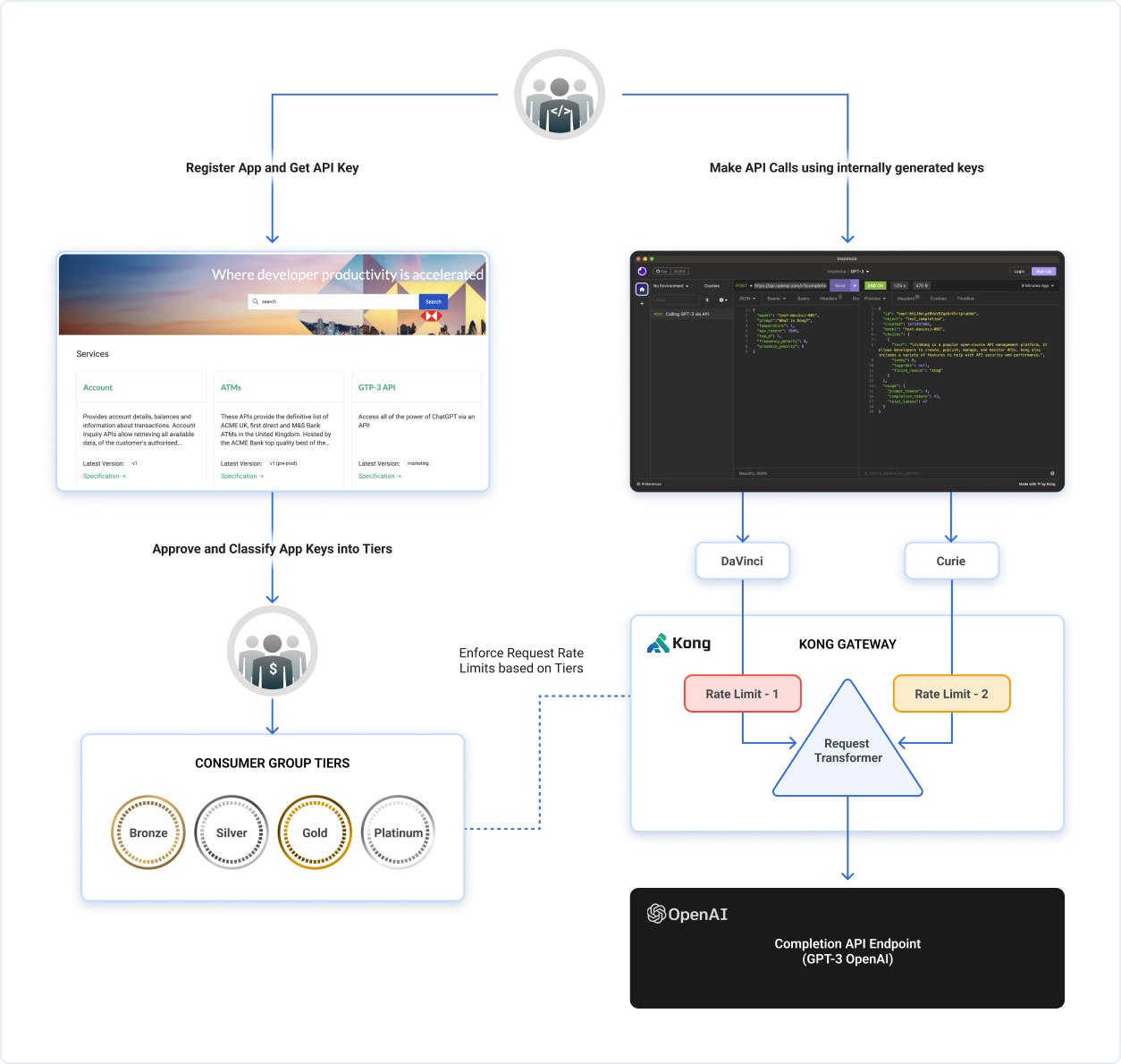

Instead of issuing each developer an OpenAI API key, a consuming organization can have the gateway attach the organization's API key to requests sent to OpenAI. It can then rely on internal authentication and authorization, perhaps via OIDC, to limit which services and models each developer may use. In the diagram below, Kong proxies two AI models to consumers, Davinci and Curie, and each developer may use only the models their permissions allow.

Diagram 3: Kong Gateway routes requests to OpenAI while applying internal Authentication and Authorization
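The authorization decision in this flow can be sketched in Python as follows. This is not Kong's plugin interface. The group names, the `ALLOWED_MODELS` table, and the `authorize` function are hypothetical, and in a real deployment the caller's groups would come from the validated OIDC token. The only OpenAI-specific detail is that its API expects the key in an `Authorization: Bearer` header. The gateway adds that header, so developers never hold the key themselves.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from internal groups (e.g. claims in a validated OIDC
# token) to the OpenAI models members of that group may call.
ALLOWED_MODELS = {
    "all-developers": {"text-curie-001"},
    "critical-apps": {"text-curie-001", "text-davinci-003"},
}


@dataclass
class Decision:
    allowed: bool
    reason: str
    upstream_headers: dict = field(default_factory=dict)


def authorize(groups: list[str], body: dict, org_api_key: str) -> Decision:
    """Allow the request only if one of the caller's groups may use the model.

    Developers never hold the OpenAI key: on success the gateway attaches
    the organization's key to the upstream request.
    """
    model = body.get("model")
    permitted = set().union(*(ALLOWED_MODELS.get(g, set()) for g in groups))
    if model not in permitted:
        return Decision(False, f"model {model!r} is not permitted for groups {groups}")
    return Decision(True, "ok", {"Authorization": f"Bearer {org_api_key}"})


if __name__ == "__main__":
    request = {"model": "text-davinci-003", "prompt": "Summarize our Q2 roadmap"}
    print(authorize(["all-developers"], request, "sk-placeholder").allowed)  # False
    print(authorize(["critical-apps"], request, "sk-placeholder").allowed)   # True
```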

Further refinements are possible. For example, the gateway can transform requests that set an excessively high token limit (the `max_tokens` parameter) or that ask the model for a large number of completions (the `n` parameter).
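Here is a minimal Python sketch of such a transformation. It assumes a JSON request body that uses OpenAI's `max_tokens` and `n` parameters. The ceilings (`MAX_TOKENS_CAP`, `MAX_COMPLETIONS_CAP`, `DEFAULT_MAX_TOKENS`) and the `cap_request` function are hypothetical. In Kong, this logic would live in a request-transformation or custom plugin rather than in standalone code.

```python
import copy

# Hypothetical organization-wide ceilings; tune them to your own budget.
MAX_TOKENS_CAP = 512
MAX_COMPLETIONS_CAP = 2
DEFAULT_MAX_TOKENS = 256


def cap_request(body: dict) -> dict:
    """Return a copy of an OpenAI completion request body with limits enforced.

    - max_tokens is set to DEFAULT_MAX_TOKENS when absent, then clamped.
    - n (number of completions, 1 by default in the OpenAI API) is clamped.
    The caller's dict is not modified.
    """
    capped = copy.deepcopy(body)
    capped["max_tokens"] = min(int(capped.get("max_tokens", DEFAULT_MAX_TOKENS)), MAX_TOKENS_CAP)
    capped["n"] = min(int(capped.get("n", 1)), MAX_COMPLETIONS_CAP)
    return capped


if __name__ == "__main__":
    greedy = {"model": "text-curie-001", "prompt": "Hello", "max_tokens": 4000, "n": 10}
    print(cap_request(greedy))
    # {'model': 'text-curie-001', 'prompt': 'Hello', 'max_tokens': 512, 'n': 2}
```

Clamping values instead of rejecting the request keeps existing clients working. Returning an error instead is the stricter alternative.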

The following diagram illustrates a more comprehensive API consumption flow, and introduces a mechanism for developers to provision internal credentials via the Kong Developer Portal.

Diagram 4: Rate limits applied to consumer groups

In this example, rate limits are applied to developers based on their membership in Consumer Groups. Because the Davinci model costs 10 times more than the Curie model, organizations may reserve the high-cost model for critical applications. The rate limiting plugin is one example of the controls organizations can apply to OpenAI usage within their development teams. Access can therefore be controlled outright, as well as by volume or accrued cost.
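To illustrate the mechanics, here is a fixed-window rate limiter in Python where each developer's limit depends on their consumer group. It is a stand-in for the gateway's rate limiting plugin, not its implementation. The group names, limits, and the `FixedWindowLimiter` class are hypothetical, and counters live in process memory rather than in a shared store.

```python
import time
from collections import defaultdict

# Hypothetical per-group limits: requests each developer may make per window.
GROUP_LIMITS = {"davinci-users": 10, "curie-users": 100}
WINDOW_SECONDS = 60


class FixedWindowLimiter:
    """Per-developer fixed-window counter whose limit depends on the developer's group.

    Counters are kept in memory and old windows are never evicted; a real
    gateway would use a shared store with expiry.
    """

    def __init__(self, limits: dict[str, int], window: int, clock=time.time):
        self.limits = limits
        self.window = window
        self.clock = clock
        self.counts: dict[tuple[str, int], int] = defaultdict(int)

    def allow(self, developer: str, group: str) -> bool:
        limit = self.limits.get(group, 0)  # unknown groups get no access
        key = (developer, int(self.clock() // self.window))
        if self.counts[key] >= limit:
            return False
        self.counts[key] += 1
        return True


if __name__ == "__main__":
    now = [0.0]  # fake clock so the example is deterministic
    limiter = FixedWindowLimiter(GROUP_LIMITS, WINDOW_SECONDS, clock=lambda: now[0])
    results = [limiter.allow("alice", "davinci-users") for _ in range(11)]
    print(results.count(True))  # 10: the 11th Davinci request in this window is rejected
    now[0] = 61.0  # next window: the counter starts again
    print(limiter.allow("alice", "davinci-users"))  # True
```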

Data protections

Data can be integrated with a model either by creating fine-tuned models or by including the data in GPT prompts. Safeguarding proprietary data when using models requires stringent control over both channels. One way to secure data is to ensure that only specific, approved models are used, which can be enforced by vetting incoming requests at the gateway. This helps prevent unapproved models from gaining access to sensitive information.

In addition, prompts sent to OpenAI can be scanned for confidential information to prevent unintentional data leakage, and logged for later auditing and analytics. Such policies can be implemented with a combination of out-of-the-box and custom Kong plugins. Although logging does not enable real-time validation, it makes any data leaks detectable and auditable after the fact.

Note that validating, and potentially blocking, requests within the request path with a custom plugin may add significant latency to each request. Whether that trade-off is acceptable depends on the level of security required.
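Here is a minimal Python sketch of the scan-then-log-or-block decision, using regular expressions as detectors. The patterns (including the `PROJECT-` codename scheme) and the `scan_prompt` and `enforce` functions are illustrative assumptions, not a Kong plugin or a complete data-loss-prevention ruleset. With `block=False`, the request always goes through and only the audit log records findings, which corresponds to the logging-only approach.

```python
import logging
import re

log = logging.getLogger("prompt-audit")

# Illustrative detectors only; a production ruleset would be broader and vetted.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "internal_codename": re.compile(r"\bPROJECT-[A-Z]{3,}\b"),  # hypothetical naming scheme
}


def scan_prompt(prompt: str) -> list[str]:
    """Return the names of all sensitive patterns found in the prompt."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)]


def enforce(prompt: str, consumer: str, block: bool) -> bool:
    """Audit-log every scan; reject only when block is True and something matched.

    Returns True if the request may be forwarded to OpenAI.
    """
    findings = scan_prompt(prompt)
    log.info("consumer=%s findings=%s", consumer, findings)
    return not (block and findings)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    risky = "Email the PROJECT-ATLAS plan to alice@example.com"
    print(scan_prompt(risky))                  # ['email', 'internal_codename']
    print(enforce(risky, "bob", block=True))   # False: blocked in the request path
    print(enforce(risky, "bob", block=False))  # True: forwarded, but logged for audit
```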

Telemetry and analytics

Because Kong integrates with a variety of metrics and logging systems, show-back and chargeback arrangements are possible. Each developer can receive a report on their usage and accrued cost, either monthly or on request. The same is possible at the organizational level, since the token count, and therefore the cost, of each API request is known.
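The chargeback calculation itself is simple arithmetic. Here is a minimal Python sketch. It assumes that, for each request, the gateway logs the consumer name, a Unix timestamp, the model, and the `usage` object that OpenAI returns in its response, whose `total_tokens` field counts prompt and completion tokens. The record layout, the `monthly_chargeback` function, and the prices are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Illustrative USD prices per 1K tokens (assumption: use OpenAI's current list prices).
PRICE_PER_1K_TOKENS = {"text-davinci-003": 0.02, "text-curie-001": 0.002}


def monthly_chargeback(records: list[dict]) -> dict[tuple[str, str], float]:
    """Sum the accrued cost per (consumer, YYYY-MM) from gateway log records.

    Each record is assumed to contain the consumer name, a Unix timestamp,
    the model, and the `usage` object copied from the OpenAI response.
    """
    totals: dict[tuple[str, str], float] = defaultdict(float)
    for rec in records:
        month = datetime.fromtimestamp(rec["timestamp"], tz=timezone.utc).strftime("%Y-%m")
        tokens = rec["usage"]["total_tokens"]  # prompt + completion tokens
        totals[(rec["consumer"], month)] += tokens / 1000 * PRICE_PER_1K_TOKENS[rec["model"]]
    return dict(totals)


if __name__ == "__main__":
    logs = [
        {"consumer": "alice", "timestamp": 1685620000, "model": "text-davinci-003",
         "usage": {"prompt_tokens": 400, "completion_tokens": 600, "total_tokens": 1000}},
        {"consumer": "alice", "timestamp": 1685630000, "model": "text-curie-001",
         "usage": {"prompt_tokens": 1200, "completion_tokens": 800, "total_tokens": 2000}},
        {"consumer": "bob", "timestamp": 1685640000, "model": "text-curie-001",
         "usage": {"prompt_tokens": 300, "completion_tokens": 200, "total_tokens": 500}},
    ]
    for (consumer, month), cost in sorted(monthly_chargeback(logs).items()):
        print(f"{consumer} {month}: ${cost:.4f}")
    # alice 2023-06: $0.0240
    # bob 2023-06: $0.0010
```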

Kong Konnect provides an out-of-the-box reporting mechanism that gives a bird's-eye view of the usage of various APIs. Reports can be filtered by a variety of parameters for a better understanding of, and visibility into, API usage.

Diagram 5: Sample report showing daily API usage

By leveraging Kong to govern the consumption of OpenAI APIs, organizations can control the usage and scope of ChatGPT capabilities and protect confidential data.

In summary, Kong can provide many controls that make using OpenAI, and ChatGPT specifically, smooth and secure for organizations. These controls include:

- Overage protections for the OpenAI services

- Streamlined authentication and authorization

- Rate limiting

- Metrics and alerts

- Dynamic, context-sensitive data protections

- Logging of prompts

Governing consumption of APIs by OpenAI

At the time of writing (May 2023), OpenAI accepts plugin submissions for use by its models. Plugins cannot be monetized yet, and their usage may be unpredictable once they are made available to ChatGPT Plus customers. For this reason, it is prudent to apply some guardrails to how OpenAI uses them, such as:

- Applying some level of telemetry (metrics and logging) to understand the volume and frequency of requests, when and how the ChatGPT service uses the plugin, and how the plugin may be improved

- Applying rate limiting, especially if the API has constrained resources
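For an API with constrained resources, a token bucket is a common way to absorb short bursts from the ChatGPT service while capping the sustained request rate. Here is a minimal Python sketch. The "tokens" here are rate-limiting credits, not OpenAI tokens. The `TokenBucket` class, its capacity, and its refill rate are hypothetical values to tune for your API. In practice, a gateway rate limiting plugin would enforce this in front of the plugin's API.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests and a sustained `rate` per second.

    Here "tokens" are rate-limiting credits, unrelated to OpenAI model tokens.
    """

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is accepted
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    now = [0.0]  # fake clock for a deterministic demo
    bucket = TokenBucket(capacity=5, rate=1.0, clock=lambda: now[0])
    print(sum(bucket.allow() for _ in range(8)))  # 5: a burst of 8 calls, 5 admitted
    now[0] = 2.0
    print(sum(bucket.allow() for _ in range(8)))  # 2: two seconds refill two credits
```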

Conclusion

Today's rapid adoption of OpenAI in application development calls for control over, and visibility into, the usage of ChatGPT services. Bi-directional APIs (applications calling OpenAI, and OpenAI calling plugins) are bound to play a large role in the usage and further adoption of OpenAI products and services. An API platform like Kong Konnect can help control and regulate OpenAI usage, based on each organization's policies and sound API practices. It can also prevent overuse and data leaks by OpenAI API consumers and plugin developers.


Questions about optimizing cost and securing your OpenAI applications?

Contact us today and tell us more about your configuration, and we can offer details about features, support, plans, and consulting.