Reduce Kafka costs by virtualizing partitions and clusters

Expose real-time data from Kafka to more consumers while lowering your overall Kafka and infrastructure costs.

Kafka is great, but it can get (unnecessarily) expensive

Event and message brokers are great for streaming large volumes of critical data, but safely sharing data across teams and services can be expensive and operationally complex.

Kafka’s limited support for multi-tenancy and client isolation often forces teams to duplicate clusters, topics, and data to meet diverse client needs, which drives up cost. It doesn’t have to be that way.

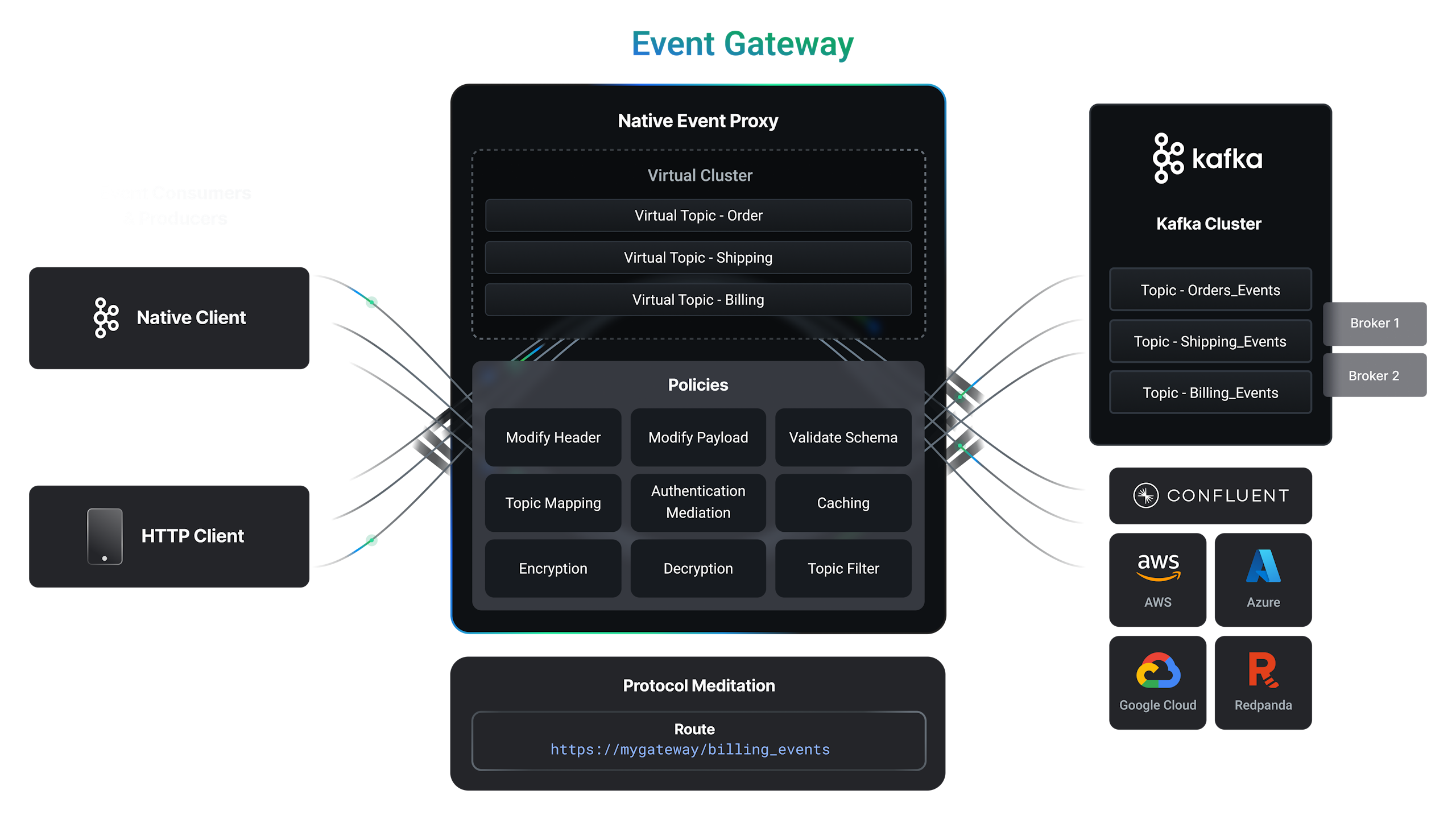

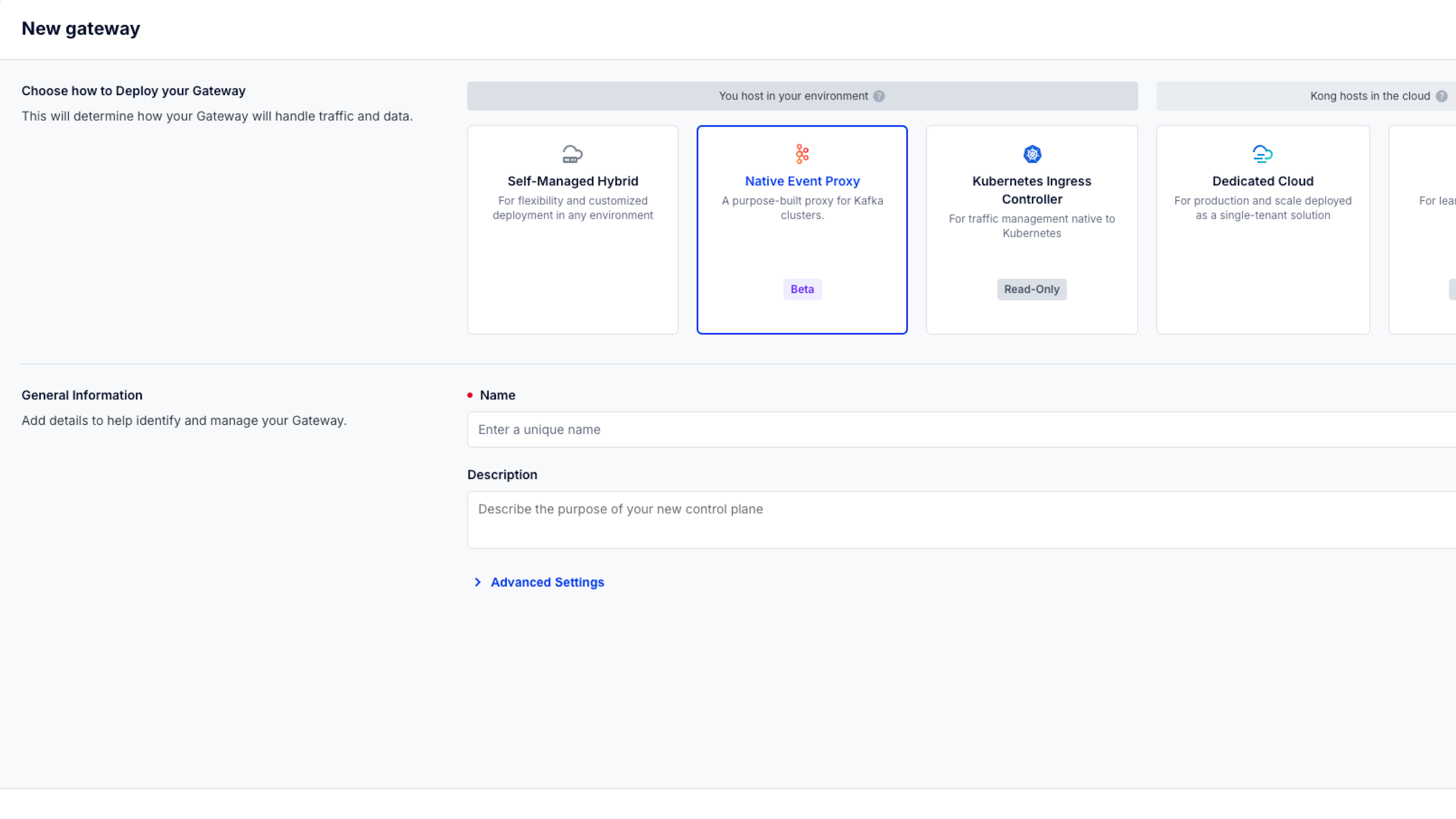

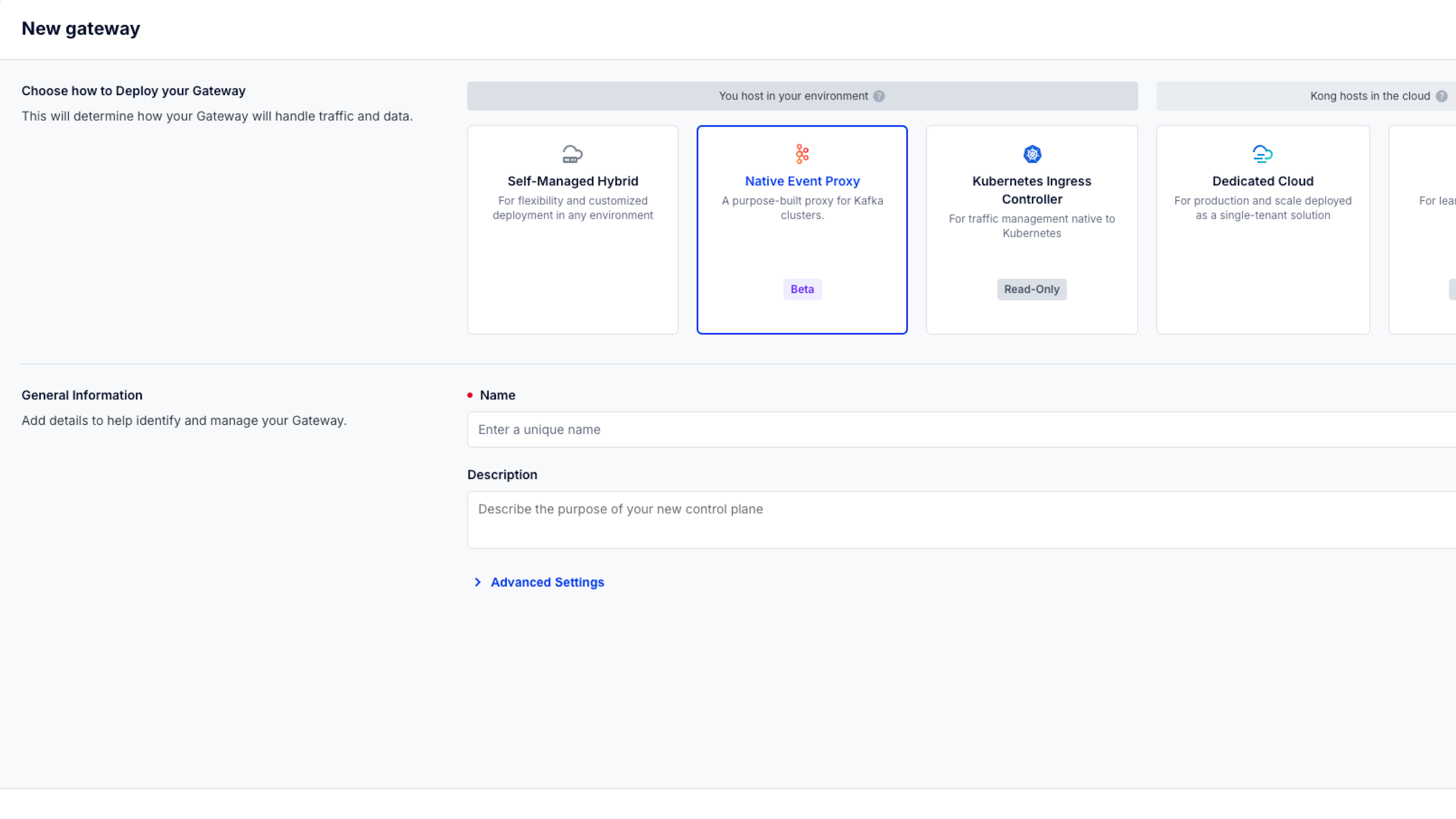

Kong’s Event Gateway helps reduce Kafka cost and complexity by virtualizing Kafka infrastructure as you expand your event streaming program.

Don’t replace Kafka. Cut Kafka costs and increase ROI through optimized infrastructure patterns.

Every physical Kafka topic, cluster, and partition adds cost. Virtualize redundant infrastructure to lower your overall infrastructure costs.

Open up the value of real-time Kafka data to more internal and external clients and consumers, without sacrificing security or the benefits of the Kafka protocol.

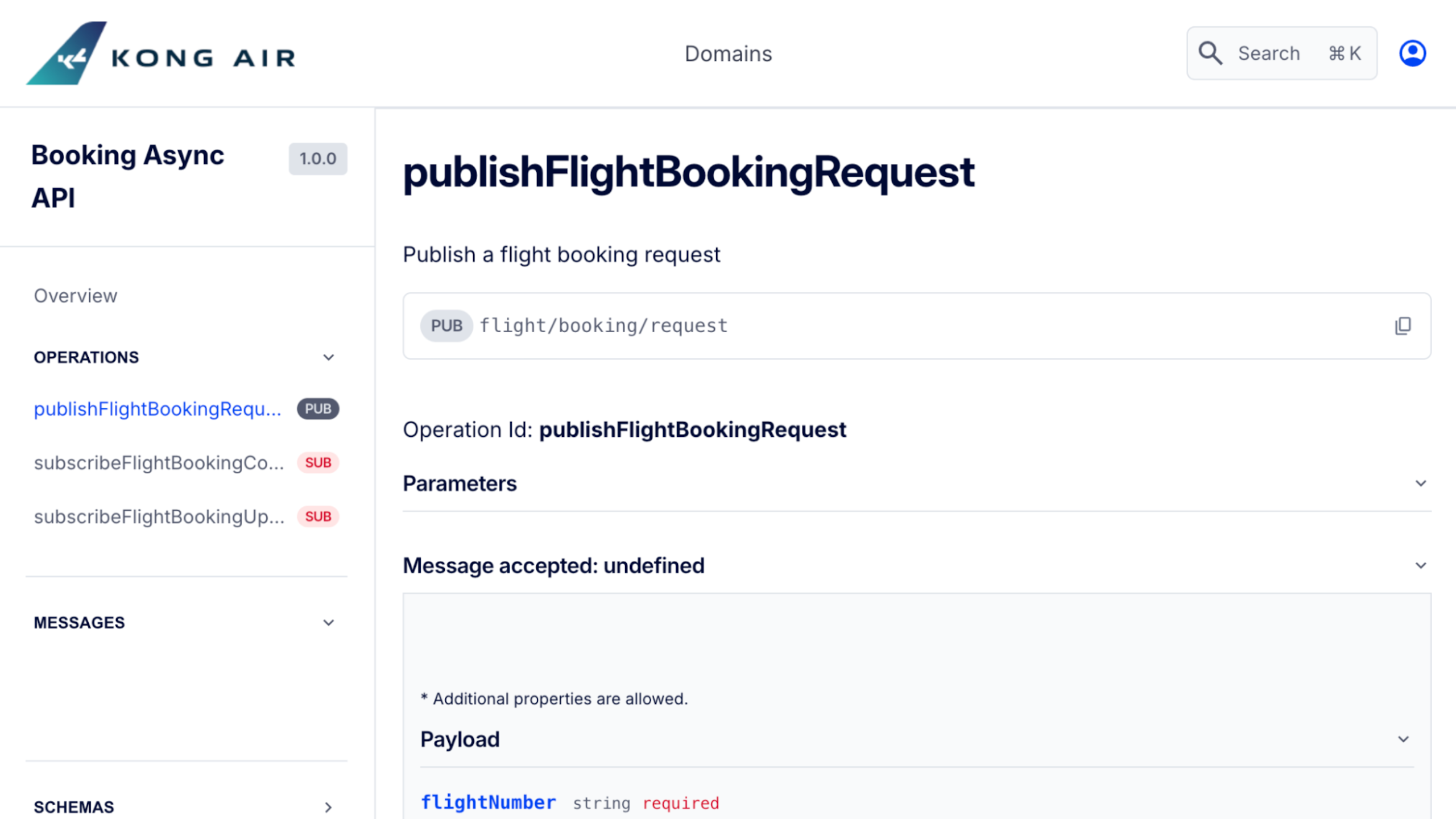

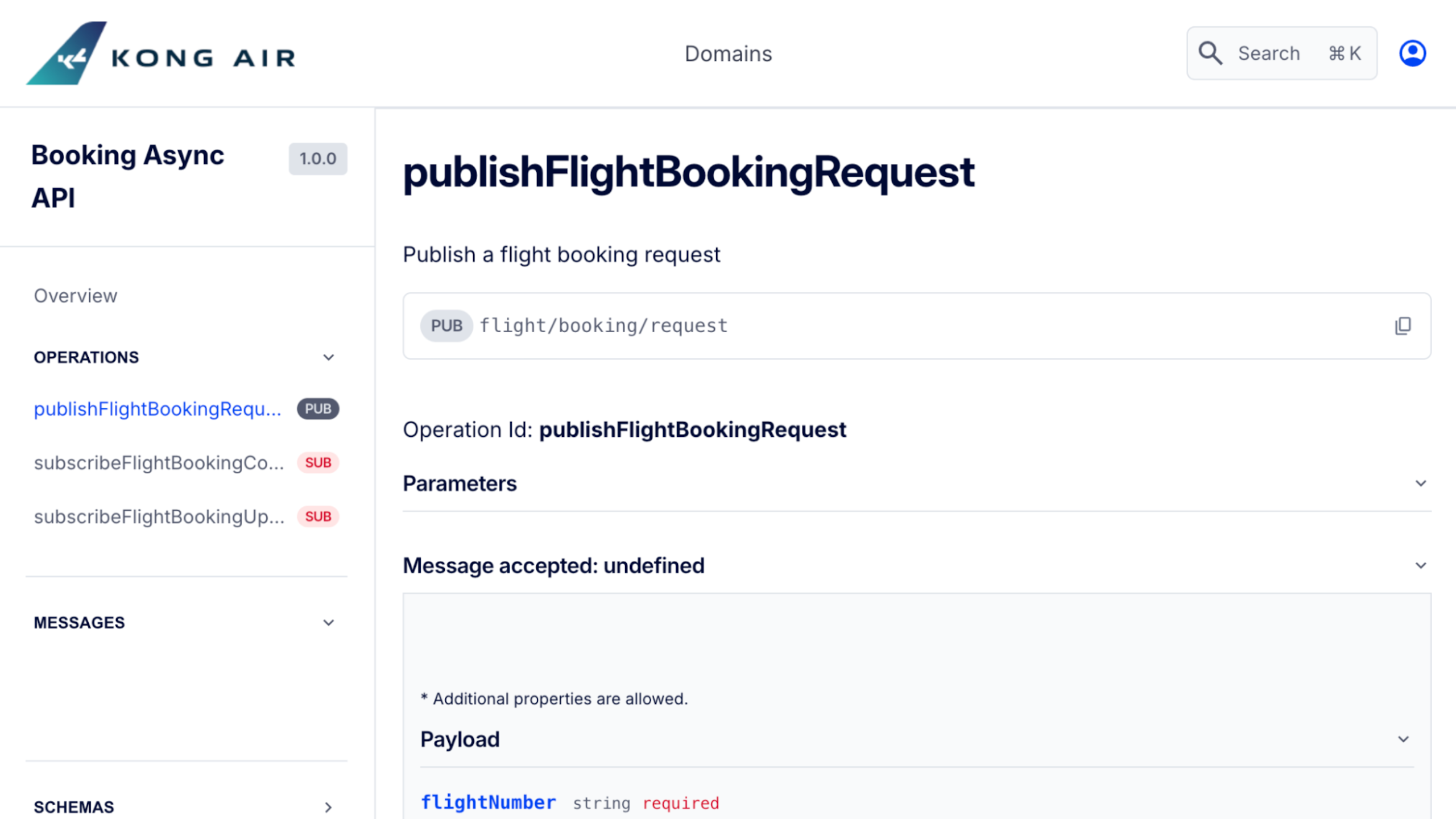

Publish and monetize Kafka data as self-serve event API products in a unified API catalog for APIs, events, LLM APIs, and more.

All-in on Kafka? We’ll help you cut Kafka costs and drive ROI.

Unify the API and eventing developer experience

Ship real-time customer experiences faster by giving engineers a single, unified platform to build, run, discover, and govern both traditional APIs and access to real-time data in Kafka.

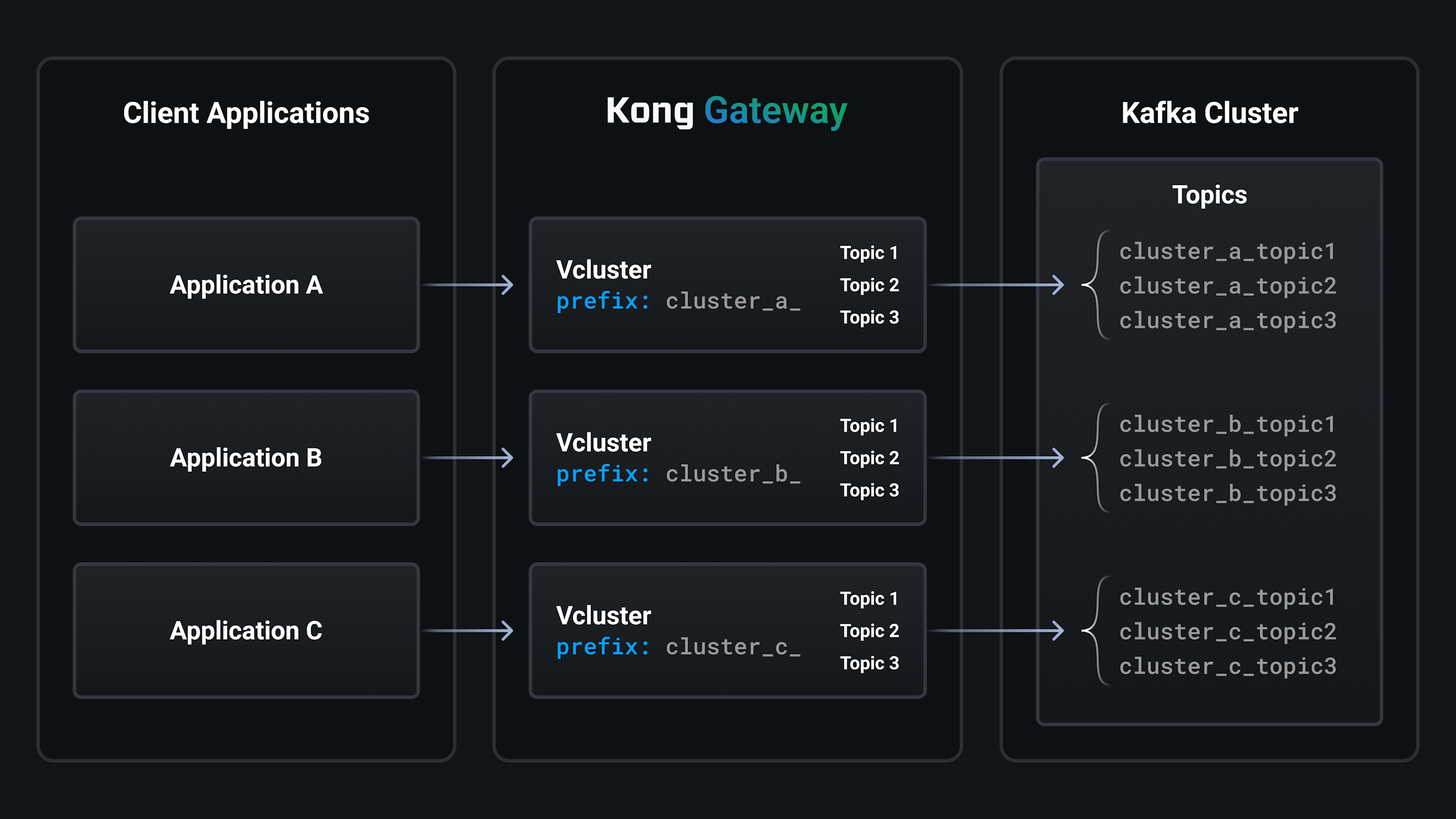

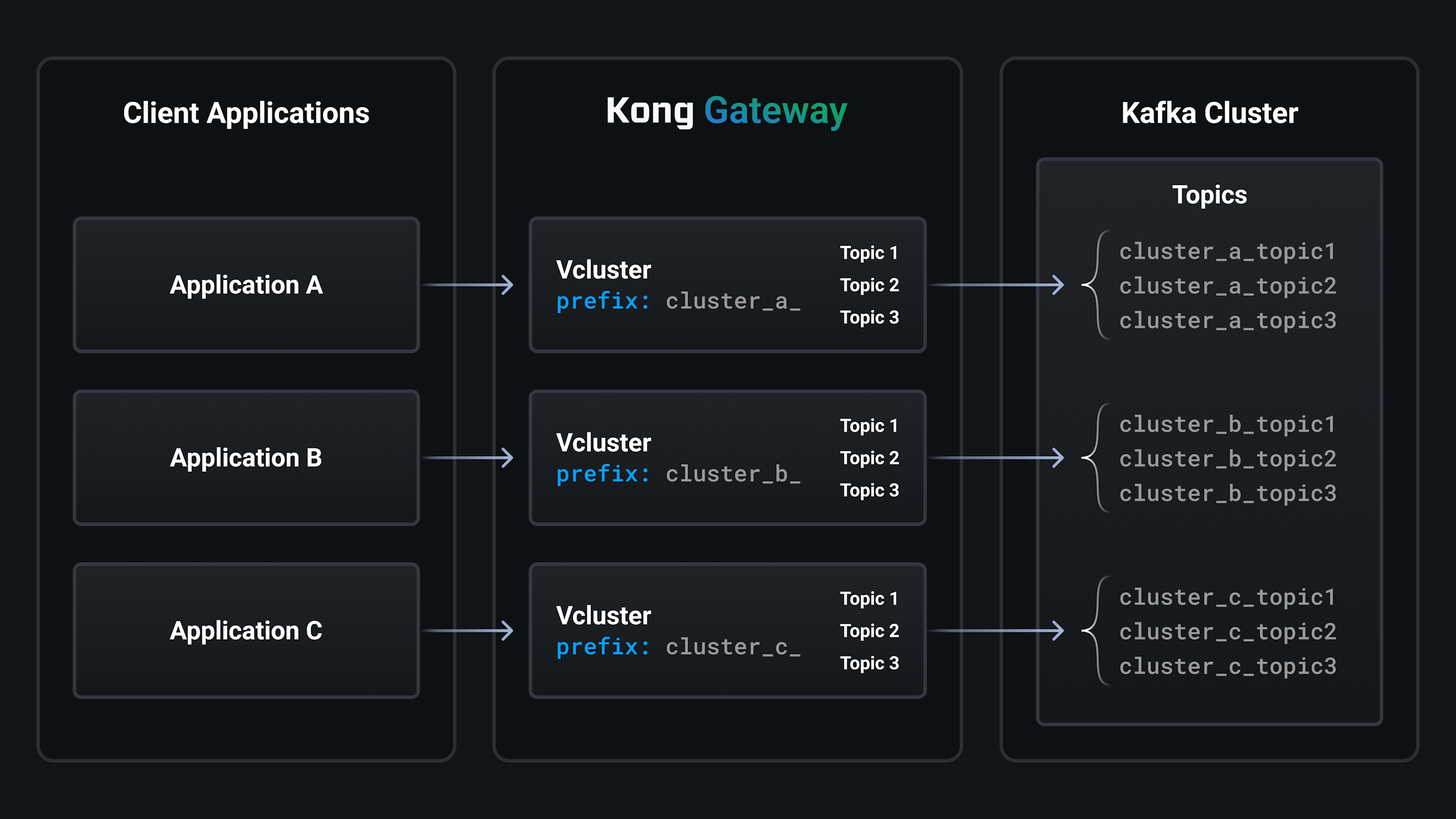

Virtualize clusters to make Kafka feel multi-tenant without adding costs

Virtual clusters give teams the flexibility and isolation of dedicated physical clusters while keeping the resource efficiency of shared infrastructure. Each team can work in what looks like its own Kafka environment without paying for a separate cluster.
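To make the idea concrete, here is a minimal Python sketch of one way a virtual cluster can work: each tenant's topic names are mapped onto prefixed names in a single shared physical cluster. The `VirtualCluster` class and the `tenant.` prefix scheme are hypothetical illustrations, not Kong's documented implementation.

```python
class VirtualCluster:
    """Hypothetical tenant view over one shared physical Kafka cluster.

    Tenant-visible topic names map to physical names by prefixing them
    with the tenant's namespace (an illustrative scheme only).
    """

    def __init__(self, tenant: str, physical_topics: set[str]):
        self.prefix = f"{tenant}."
        self.physical_topics = physical_topics  # shared by every tenant

    def to_physical(self, topic: str) -> str:
        # Every name a tenant uses is rewritten into its own namespace,
        # so it can never address another tenant's topic.
        return self.prefix + topic

    def list_topics(self) -> list[str]:
        # Expose only this tenant's topics, with the prefix stripped.
        return sorted(
            t[len(self.prefix):]
            for t in self.physical_topics
            if t.startswith(self.prefix)
        )


# One physical cluster, two teams that each see "their own" cluster.
shared = {"payments.orders", "payments.refunds", "search.clicks"}
payments = VirtualCluster("payments", shared)
search = VirtualCluster("search", shared)
print(payments.list_topics())          # ['orders', 'refunds']
print(search.list_topics())            # ['clicks']
print(payments.to_physical("orders"))  # payments.orders
```

Because isolation comes from name rewriting rather than separate brokers, adding a team in this sketch costs a namespace, not a cluster.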

Virtualize topics to meet client isolation needs — without the extra bill

Store related data in centralized physical topics and offload client isolation and data segmentation to virtual topics managed by Kong. This means you only need to add, and pay for, additional partitions when load and throughput requirements dictate it.
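As a rough illustration, a virtual topic can be modeled as a named, filtered view over one physical topic. The sketch below assumes records carry a `region` field used for segmentation; `Record`, `VirtualTopic`, and the filter predicates are hypothetical names, not Kong's API.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Record:
    partition: int  # partitions belong to the physical topic only
    offset: int
    value: dict


@dataclass
class VirtualTopic:
    """Hypothetical virtual topic: a filtered view of one physical topic."""

    name: str
    physical_topic: str
    predicate: Callable[[dict], bool]

    def consume(self, records: Iterable[Record]) -> Iterator[Record]:
        # Yield only the records this client is allowed to see.
        return (r for r in records if self.predicate(r.value))


# One physical "orders" topic with 3 partitions holds every region's data.
physical_orders = [
    Record(0, 0, {"region": "eu", "id": 1}),
    Record(1, 0, {"region": "us", "id": 2}),
    Record(2, 0, {"region": "eu", "id": 3}),
]
eu_orders = VirtualTopic("eu-orders", "orders", lambda v: v["region"] == "eu")
us_orders = VirtualTopic("us-orders", "orders", lambda v: v["region"] == "us")
print([r.value["id"] for r in eu_orders.consume(physical_orders)])  # [1, 3]
print([r.value["id"] for r in us_orders.consume(physical_orders)])  # [2]
```

Here a new client segment is just another `VirtualTopic` definition; the partition count stays a property of the single physical topic and only changes when throughput demands it.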

Build more diverse real-time customer experiences

Make it easier for non-Kafka experts to consume real-time data in Kafka through protocol mediation. HTTP-based applications can produce data to and consume data from Kafka using ordinary HTTP APIs.
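The core of protocol mediation is translating HTTP requests into produce and fetch operations. The minimal sketch below shows that translation against an in-memory, single-partition log standing in for Kafka. The `/topics/{topic}` route shape, `InMemoryLog`, and `handle_http` are hypothetical and do not describe Kong's actual endpoints; a real gateway would call a Kafka client instead.

```python
import json
from collections import defaultdict
from urllib.parse import parse_qs, urlsplit


class InMemoryLog:
    """Stand-in for Kafka topics (hypothetical; one partition per topic)."""

    def __init__(self):
        self.topics = defaultdict(list)

    def produce(self, topic, value):
        self.topics[topic].append(value)
        return len(self.topics[topic]) - 1  # offset of the new record

    def fetch(self, topic, offset):
        return self.topics[topic][offset:]


def handle_http(log, method, path, body=b""):
    """Map a hypothetical REST shape onto produce/fetch:

    POST /topics/{topic}          -> produce the JSON body, return its offset
    GET  /topics/{topic}?offset=N -> return records from offset N onward
    """
    url = urlsplit(path)
    parts = url.path.strip("/").split("/")
    if len(parts) != 2 or parts[0] != "topics":
        return 404, {"error": "not found"}
    topic = parts[1]
    if method == "POST":
        offset = log.produce(topic, json.loads(body))
        return 200, {"topic": topic, "offset": offset}
    if method == "GET":
        start = int(parse_qs(url.query).get("offset", ["0"])[0])
        return 200, {"topic": topic, "records": log.fetch(topic, start)}
    return 405, {"error": "method not allowed"}


log = InMemoryLog()
print(handle_http(log, "POST", "/topics/orders", b'{"id": 1}'))
print(handle_http(log, "POST", "/topics/orders", b'{"id": 2}'))
print(handle_http(log, "GET", "/topics/orders?offset=1"))
```

The HTTP client never sees Kafka concepts beyond a topic name and an offset, which is what lets non-Kafka teams participate.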

Productization and monetization

Build a consumer-facing marketplace where both traditional API products and event data products live as self-serve, monetizable assets.

Questions about eventing with Kong?

We’re here to help. Contact us today, tell us more about your use cases, and we’ll walk you through our API platform for event streaming.