Why AI guardrails matter

It's natural to consider the necessity of guardrails for your sophisticated AI implementations. The truth is, much like any powerful technology, AI requires a set of protective measures to ensure its reliability and integrity. These guardrails aren't just a good idea; they are fundamental for operating AI safely, efficiently, and within your established parameters.

Here's a closer look at their critical importance:

- Prevent harm: A primary objective is to ensure your AI does not inadvertently generate or propagate harmful, biased, or inappropriate content. Guardrails actively filter content at both the input and output stages, preserving brand reputation and user trust.

- Protect sensitive data: In an era of heightened data security concerns, preventing information leakage is paramount. Guardrails act as a critical defense, ensuring that sensitive information, such as Personally Identifiable Information (PII), is appropriately redacted or sanitized before it can be exposed.

- Manage costs: The operational expenses associated with consumption-based LLM APIs can escalate rapidly. Guardrails provide mechanisms like intelligent rate limiting and token consumption caps, giving you precise control over your budget and resource allocation.

- Maintain compliance: Navigating the complex landscape of internal policies, ethical guidelines, and regulations (particularly AI projects in highly regulated environments) is a continuous challenge. AI guardrails facilitate programmatic enforcement of organizational policies, establishing a repeatable and auditable AI governanceframework.

- Ensure stability: Critical AI services demand uninterrupted availability. Guardrails fortify your AI APIs against various threats, including injection attacks, misuse, and overloading, thereby maintaining service stability and reliability.

Categories of AI guardrails

OK, so we've talked about why AI guardrails are a big deal. Now, let's get into the nitty-gritty of what they actually look like. It's not a one-size-fits-all situation. There are different flavors of guardrails, each with its own superpower in keeping your AI in check. Understanding these categories is key to building a robust and comprehensive safety net for your AI integration.

Let's explore the main types of AI guardrails you'll encounter:

1. Content moderation and prompt safety

First up, let's talk about keeping things clean and safe in the conversation between users and your AI. This category is all about making sure the inputs your AI receives aren't problematic and that the outputs generated are appropriate. It's like having a really good editor and a vigilant bouncer working together to maintain a respectful, secure interaction. Here's how it breaks down:

- Input filtering: This is your first line of defense. Before a prompt even gets to your AI, clever techniques like pattern matching and semantic analysis can help catch anything that looks unsafe or goes against your rules. It's about nipping potential issues in the bud, right at the start.

- Output moderation: Sometimes, even with careful input, an AI might generate a response that needs a little tweaking. Output moderation is about reviewing and adjusting those responses to remove sensitive bits or content that violates your policies. It's the final check before the AI's response is delivered.

- PII protection: Protecting personal data is non-negotiable. This involves using specialized tools to automatically spot and remove or anonymize sensitive information, like names or addresses, in both the prompts and the AI's replies. It's a critical step in maintaining privacy and compliance.

2. Rate limiting and cost controls

Next, let's tackle the practical side of managing your AI resources, especially when it comes to keeping costs in check. This category focuses on setting boundaries for how often and how much your AI is used. It's essential for preventing unexpected bills and ensuring fair usage across your applications and users. Here's what you need to know:

- Token-aware rate limits: It's not just about how many requests are made, but also how much "work" the AI is doing, measured in tokens. Applying limits based on token consumption helps prevent a single user or application from consuming excessive resources and driving up costs.

- Granular token usage control: To balance a smooth user experience with preventing unintended or excessive consumption, we provide precise control over how tokens are utilized. This allows you to set consistent limits that ensure predictable resource consumption and manage costs effectively across all interactions with your AI endpoints.

3. Access control and authentication

Security is paramount, and this category is all about ensuring only the right people and applications can access your AI services. It involves setting up robust systems to verify identities and define what each user or application is allowed to do. Think of it as the security detail for your AI endpoints.

- Strong authorization: Industry-standard methods, like OpenID Connect/OAuth 2.0 and other authentication techniques, confirm the identity of anyone trying to access your AI. This is about building a strong wall around your services.

- Role-based access: Not everyone needs the same level of access. Role-based access allows you to grant permissions based on a user's team, role, or project. This "least privilege" approach minimizes potential risks by ensuring users only have access to what they absolutely need.

4. Prompt management and injection prevention

This category dives into the art and science of crafting effective prompts for your AI, while also protecting against malicious attempts to manipulate its behavior. It's about ensuring consistency in how your AI is instructed and safeguarding it from "injection attacks" that could compromise its responses or leak sensitive information.

- Centralized prompt templates: Instead of having individual users or applications construct prompts from scratch, managing templates centrally ensures that your AI receives consistent and well-structured instructions. This reduces errors and promotes predictable behavior.

- Input sanitization: Just like cleaning data before processing, input sanitization for prompts involves removing or escaping characters and tokens that could be used in an injection attack. It's a critical step in preventing malicious actors from hijacking your AI's responses.

5. Observability and monitoring

So, you've got your guardrails in place, but how do you know they're doing their job? And what do you do when something unexpected happens? That's where observability and monitoring come in. This category is all about having the right tools and systems to see what's happening with your AI services in real-time, track down issues, and ensure everything is operating as it should. Think of it as having a high-tech health check for your AI.

- Tracing and metrics: This is where we get granular. By collecting detailed traces of AI requests, along with metrics like latency and error rates, you gain deep insights into performance and potential bottlenecks. It's like following the breadcrumbs to understand exactly how your AI is processing information and where things might be going sideways.

- Audit logs: For compliance and security, you need a clear record of who did what and when. Audit logs capture API interactions and any policy violations, providing a historical account that's invaluable for investigations, audits, and understanding patterns of usage or misuse. It's your AI's official diary.

6. Governance and compliance

Last, but certainly not least, we have governance and compliance. This category is the backbone of responsible AI integration. It's about establishing clear rules, processes, and accountability for your AI guardrails themselves. How do you define them? How do you update them? How do you ensure they align with your organization's broader policies and external regulations? This is the framework that keeps everything in order.

- Declarative policies: To avoid confusion and ensure consistency, we define guardrails not just as abstract rules, but as code or configurations. Keeping these under version control means you have a reproducible and auditable record of your guardrail setup. It's like having a blueprint for your AI's safety features.

- Change management: The world of AI is constantly evolving, and so should your guardrails. This involves having a process for regularly reviewing and updating your guardrails based on new business needs, emerging threats, and advancements in AI capabilities. It's about making sure your safety net is always up-to-date and effective.

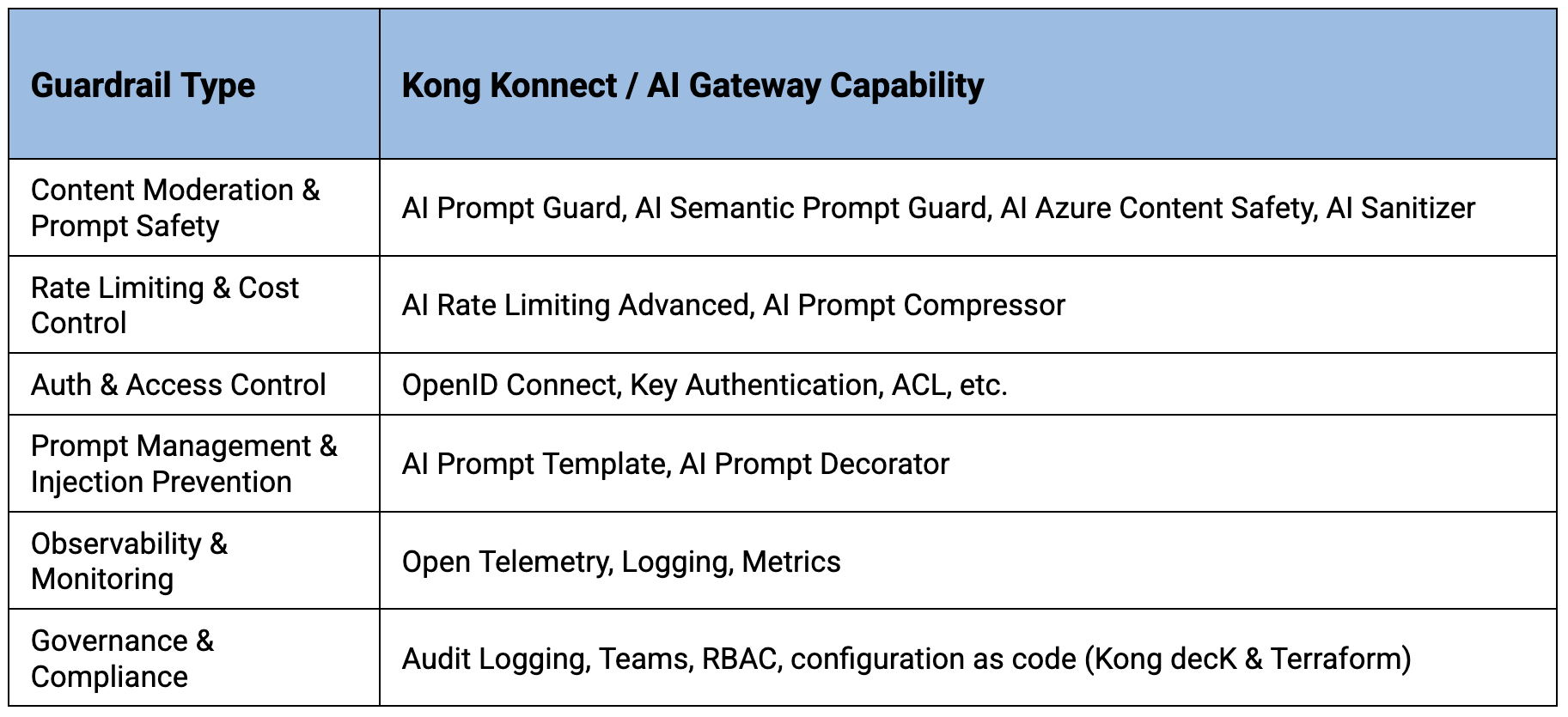

Implementing AI guardrails with Kong

Alright, let's get down to brass tacks and talk about putting these AI guardrails to work. Knowing about AI guardrails is great, but actually implementing them? That's where the rubber meets the road. For this, we're going to look at how Kong Konnect, and more specifically the Kong AI Gateway, can be your best friend in this process.

Kong offers a powerful set of tools (think of them as your AI safety toolkit) to help you implement these guardrails at scale. It's all about using the right plugins and patterns to build that robust safety layer we've been talking about.

Kong’s AI Gateway offers a powerful toolkit of plugins and patterns to implement these guardrails at scale.