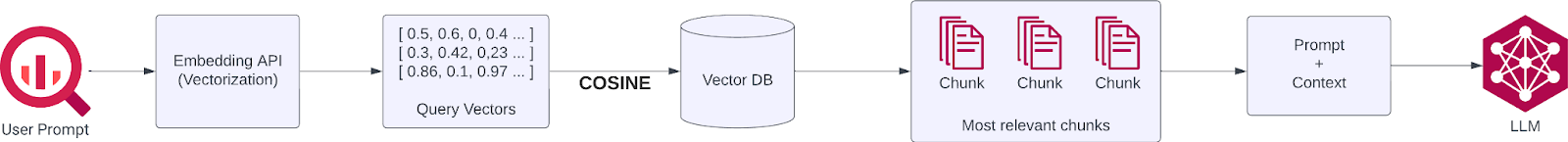

How retrieval works

When a user makes a request:

- An embedding of the user prompt is created using the same embedding API used during ingestion.

- This prompt vector is then compared against the stored vectors in the vector database.

- The vector database returns the most relevant chunks.
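The three steps above can be sketched in a few lines of Python. This is a toy in-memory illustration, not how Kong AI Gateway or the vector database implements retrieval: `embed` is a hypothetical stand-in for the real embedding API (it only counts words from a tiny fixed vocabulary), and the `store` list plays the role of the vector database.

```python
import math

# Hypothetical stand-in for the real embedding API: a bag-of-words count
# over a tiny fixed vocabulary. Real embeddings are dense model outputs.
VOCAB = ["kong", "gateway", "plugin", "redis", "vector", "prompt"]

def embed(text):
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)

def retrieve(prompt, store, top_k=2):
    # 1. Embed the prompt with the same function used at ingestion time.
    query = embed(prompt)
    # 2. Compare the prompt vector against every stored vector.
    scored = [(cosine_similarity(query, vec), chunk) for chunk, vec in store]
    # 3. Return the most relevant chunks first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]

# Ingestion: each chunk is stored next to its embedding.
chunks = [
    "kong gateway plugin overview",
    "redis vector index setup",
    "prompt compression plugin",
]
store = [(chunk, embed(chunk)) for chunk in chunks]

top_chunks = retrieve("how do I set up a redis vector index", store, top_k=1)
print(top_chunks)
```

A real vector database replaces the linear scan in `retrieve` with an index built for nearest-neighbor search, but the inputs and outputs stay the same.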

“Can you explain how the similarity is calculated and measured?”

We use Nearest Neighbor algorithms with:

- Cosine Similarity: Measures the angle between vectors. This is ideal for semantic similarity, making it the preferred method for text comparison in most RAG applications.

- Euclidean Distance: Measures the actual distance between points. It’s more appropriate for use cases involving coordinate or pixel-based data.

In short: Cosine Similarity is generally the best choice when dealing with text-based embeddings in RAG.
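To make the difference concrete, here is a small sketch with made-up three-dimensional vectors (real embeddings have hundreds or thousands of dimensions). The vector names are illustrative only, and the code assumes non-zero vectors. `long_doc` points in the same direction as `short_doc` but is twice as long, while `off_topic` points elsewhere but happens to sit nearby.

```python
import math

def cosine_similarity(a, b):
    # Angle-based: depends only on direction, not on vector length.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def euclidean_distance(a, b):
    # Straight-line distance between the two points.
    return math.dist(a, b)

short_doc = [1.0, 2.0, 3.0]
long_doc = [2.0, 4.0, 6.0]    # same direction, twice the magnitude
off_topic = [3.0, 1.0, 1.0]   # different direction, but close in space

for name, vec in [("long_doc", long_doc), ("off_topic", off_topic)]:
    print(
        name,
        "cosine:", round(cosine_similarity(short_doc, vec), 3),
        "euclidean:", round(euclidean_distance(short_doc, vec), 3),
    )
```

Cosine similarity ranks `long_doc` as the better match because it ignores magnitude, whereas Euclidean distance ranks `off_topic` as closer. For text, where direction carries the meaning, the first ranking is usually the one you want.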

Inject the chunks into the prompt

Once the relevant chunks are retrieved, they can be included in the LLM request to provide context.

“Should I include the RAG context as a system message or user message?”

That’s a great question. It depends on your use case:

- System messages offer strong guidance to the LLM, making them ideal for injecting context. But be cautious: malicious chunks can lead to prompt injection, and the consequences are more severe when those chunks sit in a system message.

- User messages keep the context closer to the question. This placement can be slightly less influential than a system message but is generally safer in terms of prompt injection risk.

With Kong AI Gateway, you can choose the injection method easily by configuring the plugin’s "Inject As Role" field, allowing flexibility between system, user, or assistant roles.
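As a rough illustration of what the role choice changes in the request, the sketch below builds an OpenAI-style chat message list with the retrieved chunks placed under a configurable role. The function name `build_messages`, the message wording, and the message format are assumptions made for this example. It is not the plugin's actual implementation.

```python
ALLOWED_ROLES = ("system", "user", "assistant")

def build_messages(question, chunks, inject_as_role="user"):
    """Place retrieved chunks in a message with the chosen role,
    followed by the user's original question."""
    if inject_as_role not in ALLOWED_ROLES:
        raise ValueError(f"unsupported role: {inject_as_role}")
    context = "\n\n".join(chunks)
    context_message = {
        "role": inject_as_role,
        "content": "Context retrieved for this question:\n" + context,
    }
    return [context_message, {"role": "user", "content": question}]

messages = build_messages(
    "Which plugin compresses prompts?",
    ["The AI Prompt Compressor compresses prompts before they reach the LLM."],
    inject_as_role="system",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Switching `inject_as_role` to `"user"` keeps the same content but lowers the authority the model gives to it, which is the trade-off described above.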

Any pro tips?

Yes, there's more to consider! While the ingestion and retrieval steps are essential, we strongly recommend adding security and optimization layers to improve both performance and safety.

Several policies are worth applying, such as:

- Validate incoming prompts

- Sanitize retrieved content

- Monitor for abuse or injection patterns

- Compress the prompt to reduce cost

And guess what?

All of this is available using Kong AI Gateway!

Let’s explore the Kong AI Prompt Compressor plugin to compress retrieved chunks before sending them to the LLM. This helps reduce prompt size, improve latency, and stay within token limits.

AI Prompt Compression

The AI Prompt Compressor is a Kong plugin designed to compress prompts before they are sent to an LLM. It leverages the LLMLingua library to perform intelligent compression while preserving the semantic meaning of the text.

To use this plugin, you'll need to deploy the Compress Service (available as a Docker image) close to your Kong Data Plane. Configuration is done easily through the Konnect UI.

How does it work?

The AI Prompt Compressor plugin compresses your final prompt before it's sent to the LLM.

- Uses LLMLingua 2 for fast, high-quality compression.

- Can compress by ratio (e.g., reduce to 80% of the original length) or by target token count (e.g., compress to 150 tokens).

- Lets you define compression ranges, for example: compress prompts under 100 tokens with a 0.8 ratio, or compress them to 100 tokens.

- Supports selective compression using <LLMLINGUA>...</LLMLINGUA> tags to target specific parts of the prompt.
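The range and tag behavior can be pictured with the sketch below. It does not call LLMLingua and does not reproduce the plugin's configuration schema: the `RANGES` table, its field names, and both helper functions are hypothetical, and treating an untagged prompt as a single segment is an assumption. It only shows how a token count could map to a compression target and how tagged spans could be selected.

```python
import re

# Hypothetical range table: each rule covers min_tokens <= count < max_tokens.
RANGES = [
    {"min_tokens": 0, "max_tokens": 100, "mode": "ratio", "value": 0.8},
    {"min_tokens": 100, "max_tokens": 1000, "mode": "target_tokens", "value": 100},
]

def target_length(token_count, ranges):
    """Return the token count the prompt should be compressed to."""
    for rule in ranges:
        if rule["min_tokens"] <= token_count < rule["max_tokens"]:
            if rule["mode"] == "ratio":
                return round(token_count * rule["value"])
            return min(token_count, rule["value"])
    return token_count  # no matching range: leave the prompt as-is

TAG = re.compile(r"<LLMLINGUA>(.*?)</LLMLINGUA>", re.DOTALL)

def segments_to_compress(prompt):
    """Return only the tagged spans, or the whole prompt if none are tagged
    (the untagged fallback is an assumption of this sketch)."""
    tagged = TAG.findall(prompt)
    return tagged if tagged else [prompt]

print(target_length(50, RANGES))    # ratio rule applies
print(target_length(500, RANGES))   # target-token rule applies
print(segments_to_compress(
    "Answer briefly. <LLMLINGUA>long retrieved context here</LLMLINGUA>"
))
```

Wrapping only the retrieved chunks in the tags is a natural fit for RAG, since it leaves the user's question and any instructions untouched.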

Why does it matter?

When used alongside the AI RAG Injector, which retrieves the relevant chunks, this plugin helps ensure your final prompt stays within reasonable limits and avoids oversized requests that can lead to:

- Increased latency

- Token limit errors

- Unexpected bills from your LLM provider

For our setup, we chose LLMLingua 2 over LongLLMLingua. Why?

- In a RAG context, chunks are already filtered for relevance.

- LLMLingua 2 offers a better trade-off between latency and compression quality.

- It's task-agnostic, fast, and uses advanced NLP techniques to preserve meaning while reducing token count.

Key benefits

- Faster responses

- Lower latency

- Potentially improved LLM output for models with context length limitations

- Lower token usage, which means lower cost

In short: "Compress your prompts without sacrificing performance or quality."

And of course… the best part: smaller prompt = smaller bill 😄

Now, let’s see it in action!

Prepare the Kong Environment

Before diving into the configuration, let’s set up the Kong infrastructure using Konnect and Docker Compose.

You will need access to our Cloudsmith repository to pull the Compress service image. Please contact Kong Sales to obtain the necessary credentials.

Step 1: Create your control plane in Konnect

- Go to your Konnect account: https://cloud.konghq.com/

- Create a new Control Plane.

- When prompted to configure a Data Plane, choose the Docker option.

- Download or copy the following:

  - The cluster certificate (cluster.crt)

  - The cluster key (cluster.key)

  - Your Control Plane Endpoint ID (used in environment variables)

Step 2: Clone the project repository

$ git clone https://github.com/AntoineJac/ai-compress-demo

$ cd ai-compress-demo

Step 3: Add credentials and update the Docker compose file

- Save the downloaded cluster.crt and cluster.key to the /certs directory in the cloned project.

- Open the docker-compose.yaml file.

- Replace all instances of {$CP_ID} with your actual Control Plane Endpoint ID.
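If you prefer to script the replacement rather than edit the file by hand, a minimal sketch could look like this. The helper names and the sample line are made up for illustration, and the real docker-compose.yaml in the repository may look different. Only the `{$CP_ID}` placeholder comes from the step above.

```python
from pathlib import Path

PLACEHOLDER = "{$CP_ID}"

def fill_control_plane_id(compose_text, cp_id):
    """Replace every placeholder occurrence with the real endpoint ID."""
    return compose_text.replace(PLACEHOLDER, cp_id)

def update_compose_file(path, cp_id):
    """Rewrite the compose file in place (call this from the project root)."""
    compose_path = Path(path)
    updated = fill_control_plane_id(compose_path.read_text(), cp_id)
    compose_path.write_text(updated)

# In-memory demo with a made-up line and a made-up ID:
sample = "KONG_CLUSTER_CONTROL_PLANE: {$CP_ID}.example:443"
print(fill_control_plane_id(sample, "abc123"))
```

To apply it to the project, you would call `update_compose_file("docker-compose.yaml", "<your endpoint ID>")` once from the cloned directory.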

Step 4: Deploy the architecture

Start all containers with:

$ docker compose up -d

What this setup includes

- A custom Docker network to allow seamless communication between services

- A Kong Data Plane connected to your Konnect Control Plane

- A Redis Stack Server acting as the Vector Database

- A Compressor Service used by the AI Prompt Compressor plugin

Performance tip

For optimal performance of the compression service, it is strongly recommended to run it on a host with NVIDIA GPU support.

For this demo, we run on a GPU-enabled AWS EC2 instance:

- Image: Deep Learning Base OSS NVIDIA Driver GPU AMI (Ubuntu 24.04)

This is convenient because CUDA drivers are pre-installed on this image.

- Instance Type: g4dn.xlarge

Make sure your instance supports CUDA drivers.
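One quick way to check this from the host is to ask `nvidia-smi`, the command-line tool that ships with the NVIDIA driver. The sketch below is only a convenience wrapper (the function name is our own) and returns an empty list when the tool is missing or fails.

```python
import shutil
import subprocess

def list_nvidia_gpus():
    """Return one line per visible GPU, or an empty list if none are usable."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools are not installed on this host
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []  # the tool exists but could not talk to a GPU
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]

gpus = list_nvidia_gpus()
print(gpus if gpus else "No NVIDIA GPU detected")
```

An empty result on a GPU instance usually points to a missing or mismatched driver rather than missing hardware.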

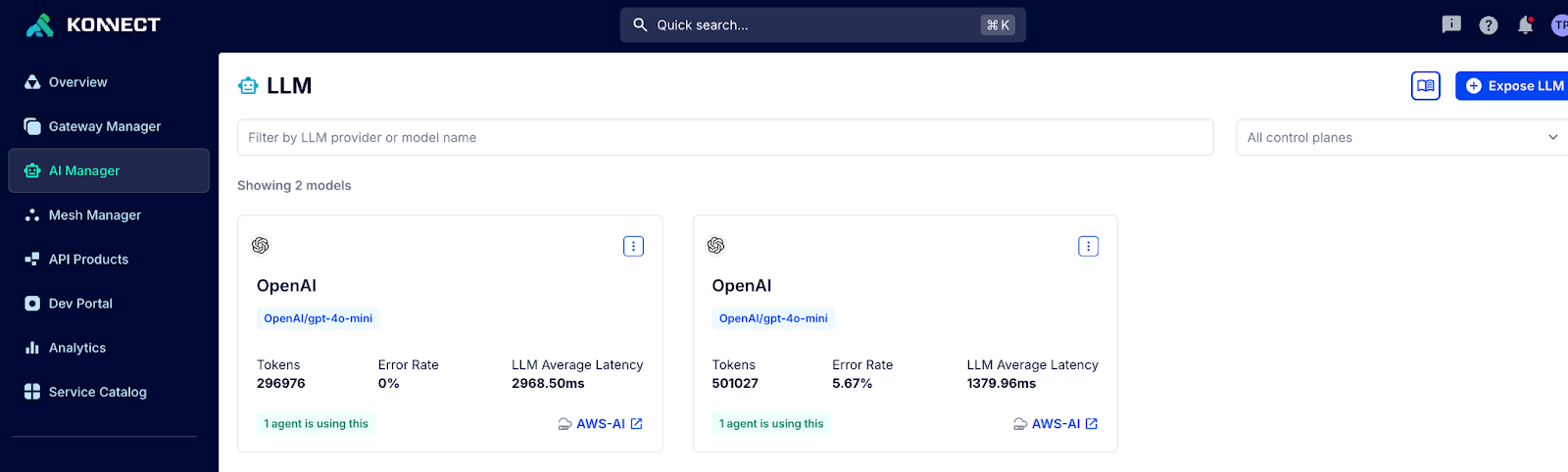

Configure your Kong setup

We will use the new AI Manager tool for this: