Stop me if you’ve heard this one before, but there’s a lot of data out there, and the amount is only growing. Estimates typically put data growth at a compound annual rate of roughly 20%. Capturing, storing, analyzing, and acting on data is at the core of digital applications, and it’s critical both for day-to-day operations and for detecting trends that feed reporting, forecasting, and planning.

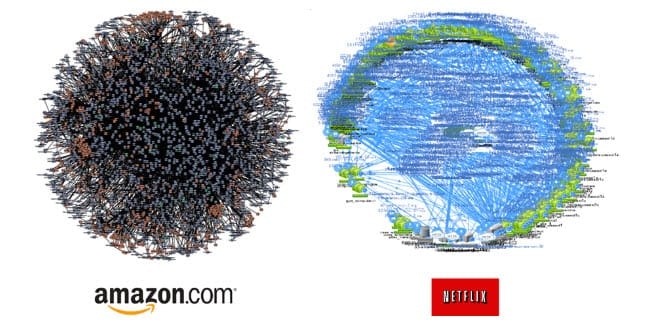
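To make the roughly 20% compound growth figure concrete, here is a minimal Python sketch. The function names and the starting volume of 100 units are illustrative assumptions, not figures from any particular estimate.

```python
import math


def projected_volume(initial: float, annual_rate: float, years: int) -> float:
    """Volume after `years` of compound growth at `annual_rate` (0.20 means 20%)."""
    return initial * (1 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Years needed for the volume to double at a constant compound rate."""
    return math.log(2) / math.log(1 + annual_rate)


if __name__ == "__main__":
    # Hypothetical starting point: 100 units of data (TB, PB, whatever applies).
    for year in (0, 5, 10):
        print(f"year {year:>2}: {projected_volume(100, 0.20, year):.1f} units")
    print(f"doubling time: {doubling_time(0.20):.1f} years")
```

At that rate, the volume doubles a little under every four years and grows about sixfold in a decade.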

Both centralized and decentralized approaches to data management exist, each with natural tradeoffs to be weighed. Increasingly, the decentralized approach is favored, but not without challenges to consider and address.

Regardless of the approach, the objective is to keep up with the volume, variety, and velocity of data while ensuring privacy and security, so that all relevant stakeholders can both contribute and receive data in a timely (and, ideally, painless) manner.

Challenges of centralized data sharing

In a centralized data sharing model, stakeholders agree to have a central entity collect, transform, cleanse, and organize data in a unified environment.

This may take a few forms, such as a data warehouse, a data lake, or the hybrid of the two, a data lakehouse. Any data or APIs introduced into the system must go through the processes and governance model that the centralized system uses.

While a centralized system can be considered efficient and consistent given that there is no data replication, it also introduces a few challenges.

Privacy and security risks

Centralized systems must be tightly controlled and monitored because a security breach can lead to data theft or loss. Security measures must therefore be taken to ensure that centralized data and API platforms are properly protected and regularly monitored.

Arguably, centralizing the data and APIs and strongly protecting them ensures consistent and standardized protection mechanisms. While this may be true, it doesn’t remove the risk that a single breach exposes or corrupts all of the central data. The well-known idiom “don’t put all your eggs in one basket” comes to mind. When the data is centralized, the impact of a breach can be catastrophic.

Lack of control

As a consequence of the strong security measures in centralized deployments, access to and control of the platform are typically provisioned conservatively. This intentional approach limits opportunities for innovation, cooperation, and competition.

Participants who use the platform will find they have less flexibility and control. Central platform owners and operators may also become a bottleneck, since users of the platform rely on them and cannot self-serve. As a result, innovation is reduced, and potential use cases are either not fully met or not met at all.

Walled gardens

Centralized systems have another downside. Because a single owner typically normalizes the data, participants cannot enrich or alter the data they work with for their own use cases. They have no control over exporting or altering the central data, so that data is not portable.

There is a single model, and it is kept hard to change so that it stays stable for a wide variety of teams. This “walled garden” with limited data portability constricts the ability of teams to build their own models. The central model becomes a slow-moving least common denominator.

Benefits of decentralized data exchange

Decentralized data exchange with APIs is favored for building agile, scalable applications.

Decentralization gives stakeholders more direct control and access, which increases autonomy and innovation. And because there’s no centralized data exchange or access point, there’s also no single point of failure, which improves scalability and reliability.

However, decentralization has costs of its own. With data replicated into multiple locations, consistency must be ensured. And with more data and API platforms, integration and governance can become complex.
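One common way to check consistency between replicas is to compare content fingerprints of each record. The sketch below is illustrative only: the record layout, the keys, and the `find_drift` helper are assumptions made for this example, not part of any specific platform.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Stable SHA-256 digest of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(source: dict[str, dict], replica: dict[str, dict]) -> list[str]:
    """Return keys whose records are missing on one side or differ in content."""
    drifted = []
    for key in sorted(source.keys() | replica.keys()):
        left, right = source.get(key), replica.get(key)
        if left is None or right is None or fingerprint(left) != fingerprint(right):
            drifted.append(key)
    return drifted


if __name__ == "__main__":
    # Hypothetical customer records held by two teams.
    source = {
        "c1": {"name": "Ada", "tier": "gold"},
        "c2": {"name": "Grace", "tier": "silver"},
        "c3": {"name": "Alan", "tier": "bronze"},
    }
    replica = {
        "c1": {"tier": "gold", "name": "Ada"},     # same content, different key order
        "c2": {"name": "Grace", "tier": "gold"},   # content has diverged
    }
    print(find_drift(source, replica))
```

A check like this only detects drift. Deciding which copy wins is a separate governance question that each set of teams has to settle.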

Finally, with data distributed across more locations, the attack surface for accessing that data also grows. It’s therefore important to address or mitigate these challenges in order to take full advantage of the benefits of decentralization.

Enhanced privacy

With decentralization, the ownership and administration of data are organically assigned to the stakeholders who work with that data in their own environment. Access to the local data is limited by default. As such, the data is tailored and accessible to those who need it, where it fits their bespoke use cases. And while the data may be available to other teams, each team manages its own access control and any local alterations.
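The idea of access that is limited by default and managed by the owning team can be sketched as a deny-by-default dataset wrapper. The `TeamDataset` class, the team names, and the field names below are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TeamDataset:
    """A dataset owned by one team; other teams see nothing unless granted."""
    owner: str
    records: dict[str, dict]
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, team: str, fields: set[str]) -> None:
        """The owner decides which fields another team may read."""
        self.grants.setdefault(team, set()).update(fields)

    def read(self, team: str, key: str) -> dict:
        record = self.records[key]
        if team == self.owner:
            return dict(record)  # the owner sees the full record
        allowed = self.grants.get(team)
        if allowed is None:
            # Deny by default: no explicit grant means no access.
            raise PermissionError(f"{team} has no access to {self.owner}'s data")
        return {name: value for name, value in record.items() if name in allowed}


if __name__ == "__main__":
    orders = TeamDataset(
        owner="sales",
        records={"o1": {"customer": "Ada", "total": 120.0, "margin": 0.31}},
    )
    orders.grant("finance", {"customer", "total"})
    print(orders.read("finance", "o1"))  # margin is withheld from finance
```

Each team holds its own grants, so changing who can see what does not require a request to a central operator.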

User control

In a decentralized data exchange environment, stakeholders and application participants have more freedom and flexibility to decide how best to store, structure, analyze, replicate, or propagate their data. This autonomy increases their ability to innovate and to better meet the requirements of their use cases. Teams don’t just hold a replica of the data; they may also have local enhancements or enrichments, or their own mix of relevant and necessary data.

Data interoperability

In a decentralized model, teams eventually diversify their data and may reach new insights or build functionality that becomes useful to other teams. Given the free movement of data, different teams can exchange information via APIs as needed.