In his most recent blog post, Marco Palladino, our CTO and co-founder, went over the difference between API gateways and service meshes. I highly recommend reading his blog post to see how API management and service mesh are complementary patterns for different use cases, but to summarize in his words, "an API gateway and service mesh will be used simultaneously." We maintain two open source projects, Kong and Kuma, that work together to cover both sets of use cases.

So, in this how-to blog post, I'll cover how to combine Kong for Kubernetes and Kuma on Kubernetes. Please have a Kubernetes cluster ready in order to follow along with the instructions below. We will also be using the `kumactl` command-line tool, which you can download from the official installation page.

Step 1: Installing Kuma on Kubernetes

Installing Kuma on Kubernetes is fairly straightforward, thanks to the `kumactl install [..]` command. You can install the control plane with a single command by piping the output of `kumactl install control-plane` into `kubectl apply -f -`.

After everything in the `kuma-system` namespace is up and running, let's deploy our demo marketplace application:

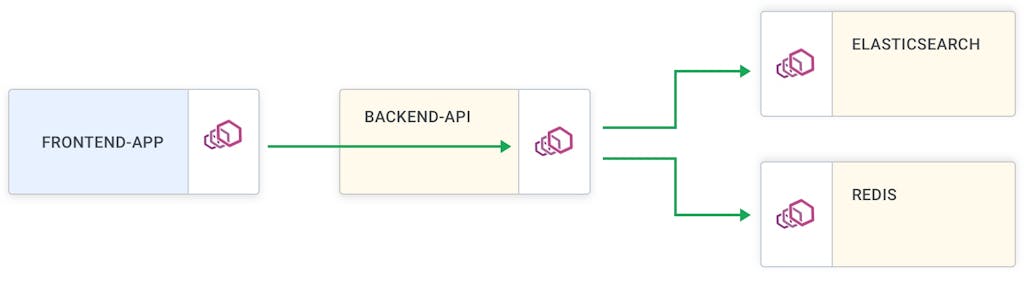

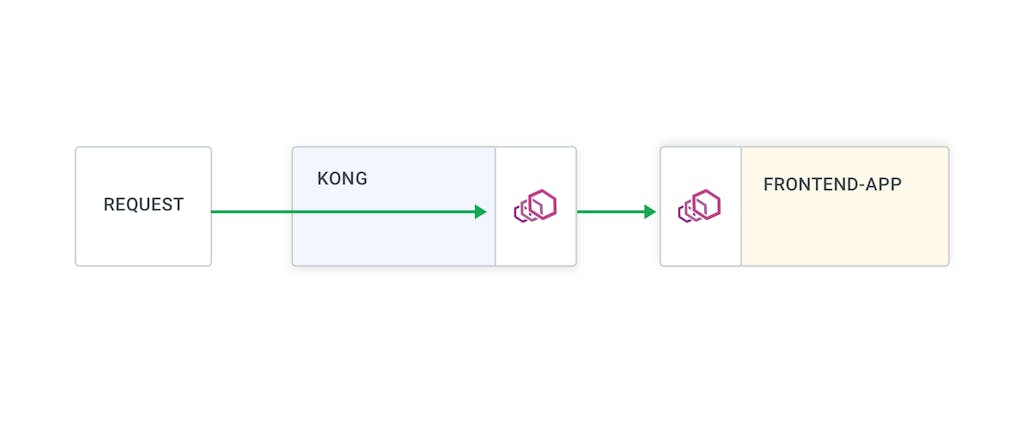

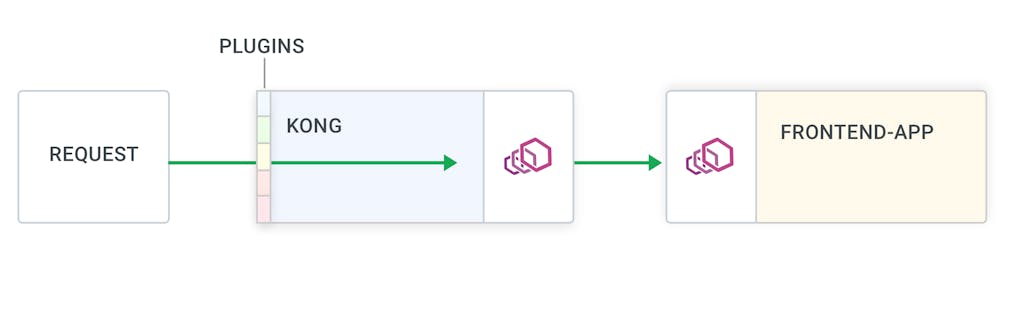

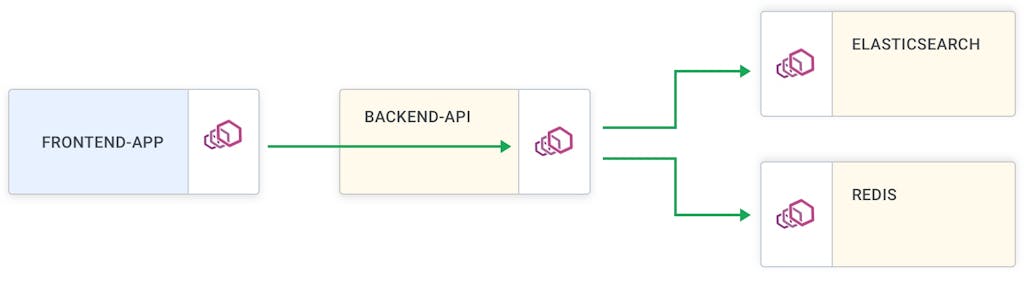

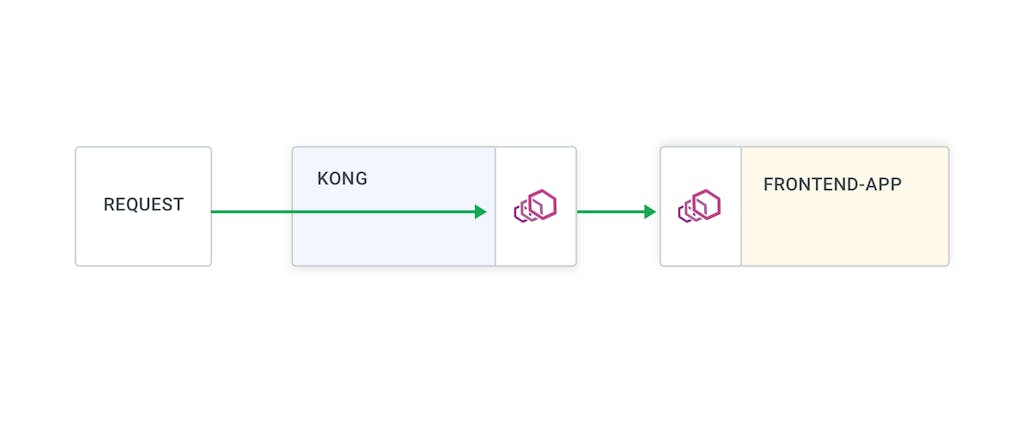

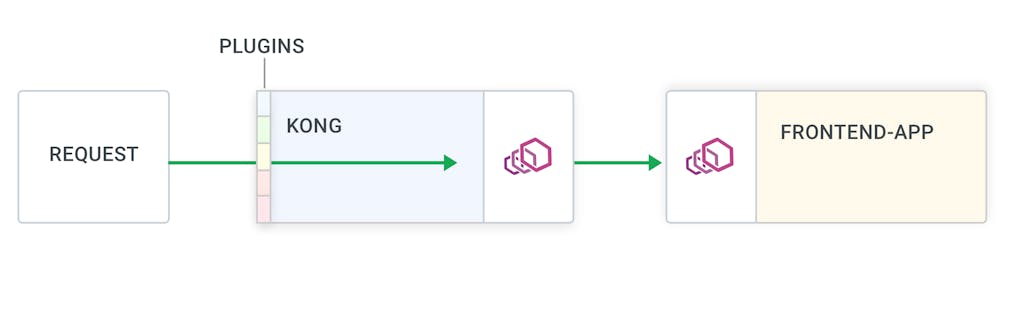

The application is split into four services with all the traffic entering from the frontend app service. If we want to authenticate all traffic entering our mesh using Kong plugins, we will need to deploy the gateway alongside the mesh. Once again, to learn more about why having a gateway and mesh is important, please read Marco's blog post.

Step 2: Deploying Kong for Kubernetes

Kong for Kubernetes is an ingress controller based on the open source Kong Gateway. You can quickly deploy it by applying our `kuma-demo-kong.yaml` manifest with `kubectl apply -f`.

On Kubernetes, Kuma `Dataplane` entities are generated automatically. For Kuma to inject a gateway `Dataplane`, the API gateway's pod needs to carry the `kuma.io/gateway: enabled` annotation.

Our `kuma-demo-kong.yaml` already includes this annotation, so you don’t need to do this manually.
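For a gateway deployment that does not already carry the annotation, a minimal sketch of adding it might look like the following. It writes a small patch that places `kuma.io/gateway: enabled` on the pod template. The file name `kong-gateway-patch.yaml` is hypothetical and chosen only for illustration.

```shell
# Write a patch that adds the gateway annotation to the pod template.
# The file name is a hypothetical choice; any path works.
cat > kong-gateway-patch.yaml <<'EOF'
spec:
  template:
    metadata:
      annotations:
        kuma.io/gateway: enabled
EOF
```

The patch could then be applied with `kubectl patch deployment <name> -n <namespace> --patch "$(cat kong-gateway-patch.yaml)"`, substituting the deployment name and namespace of your own Kong installation. Because the annotation sits on the pod template, the deployment rolls out new pods that Kuma can treat as a gateway.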

After Kong is deployed, export the proxy IP:
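One way to look up the address is sketched below. It assumes the proxy is exposed as a `LoadBalancer` service named `kong-proxy` in a `kuma-demo` namespace; both names are assumptions, as is the helper name `proxy_ip`, so adjust them to match your deployment.

```shell
# Print the external IP of the Kong proxy Service.
# Assumptions: a LoadBalancer Service called kong-proxy in the kuma-demo
# namespace. Change both values if your deployment differs.
proxy_ip() {
  kubectl get service kong-proxy --namespace kuma-demo \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

With the helper defined, `export PROXY_IP="$(proxy_ip)"` stores the address for later steps. On clusters without a load balancer (a local minikube cluster, for example) this field stays empty, and the address has to be obtained another way.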

Then check that the proxy IP has been exported by running `echo $PROXY_IP`.

Sweet! Now that we have Kong for Kubernetes deployed, go ahead and add an ingress rule to proxy traffic to the marketplace frontend service.
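A sketch of such an ingress rule is shown below. The resource name `marketplace`, the `kuma-demo` namespace, the `frontend` service name, port `8080`, and the `kong` ingress class are all assumptions about the demo setup, and the `networking.k8s.io/v1` API requires Kubernetes 1.19 or later. Check them against your cluster before applying.

```shell
# Write an Ingress that routes every path to the frontend Service.
# Names, namespace, port, and ingress class are assumptions for illustration.
cat > marketplace-ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: marketplace
  namespace: kuma-demo
spec:
  ingressClassName: kong
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 8080
EOF
```

Running `kubectl apply -f marketplace-ingress.yaml` would then create the rule.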

By default, the ingress controller distributes traffic amongst all the pods of a Kubernetes service by forwarding the requests directly to pod IP addresses. One can choose the load-balancing strategy to use by specifying a KongIngress resource.

However, in some use cases, load-balancing should be left to kube-proxy or, in service mesh deployments, to a sidecar component. For us, load-balancing should be left to Kuma, so the `ingress.kubernetes.io/service-upstream: "true"` annotation has been included in our frontend service resource.

Remember to add this annotation to the appropriate services when you deploy Kong with Kuma.
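For services of your own, a small helper along these lines could add the Kong ingress controller's `ingress.kubernetes.io/service-upstream` annotation. The function name `use_service_upstream` is hypothetical, and the service and namespace are passed in as arguments rather than assumed.

```shell
# Ask Kong to send traffic to the Service address rather than to pod IPs,
# leaving load balancing to the Kuma sidecar.
# Usage: use_service_upstream <service> <namespace>
use_service_upstream() {
  kubectl annotate service "$1" --namespace "$2" \
    'ingress.kubernetes.io/service-upstream=true' --overwrite
}
```

For example, `use_service_upstream frontend kuma-demo` would annotate a `frontend` service in a `kuma-demo` namespace, if those are the names in your cluster.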

Step 3: Adding a Policy

With both Kong and Kuma running on our cluster, all that is left to do is add a traffic permission policy that allows Kong to reach the frontend service:
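A sketch of what such a policy might look like follows. The policy name `kong-to-frontend`, the `default` mesh, and especially the two service tag values are assumptions: the tag key and the naming scheme for services differ between Kuma releases, so compare them with the tags on your own `Dataplane` resources before applying.

```shell
# Write a TrafficPermission that allows Kong to call the frontend service.
# The service tag values below are assumptions; their format depends on
# the Kuma version and on the namespace and ports used in your cluster.
cat > kong-to-frontend.yaml <<'EOF'
apiVersion: kuma.io/v1alpha1
kind: TrafficPermission
mesh: default
metadata:
  name: kong-to-frontend
spec:
  sources:
    - match:
        kuma.io/service: kong-proxy_kuma-demo_svc_80
  destinations:
    - match:
        kuma.io/service: frontend_kuma-demo_svc_8080
EOF
```

Once the tag values match your cluster, `kubectl apply -f kong-to-frontend.yaml` would create the policy.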

That's it! Now, if you visit `$PROXY_IP`, you will land on the marketplace application, proxied through Kong. From here, you can enable any of the plugins that Kong has to offer to work alongside the Kuma policies.

Thanks for following along 🙂