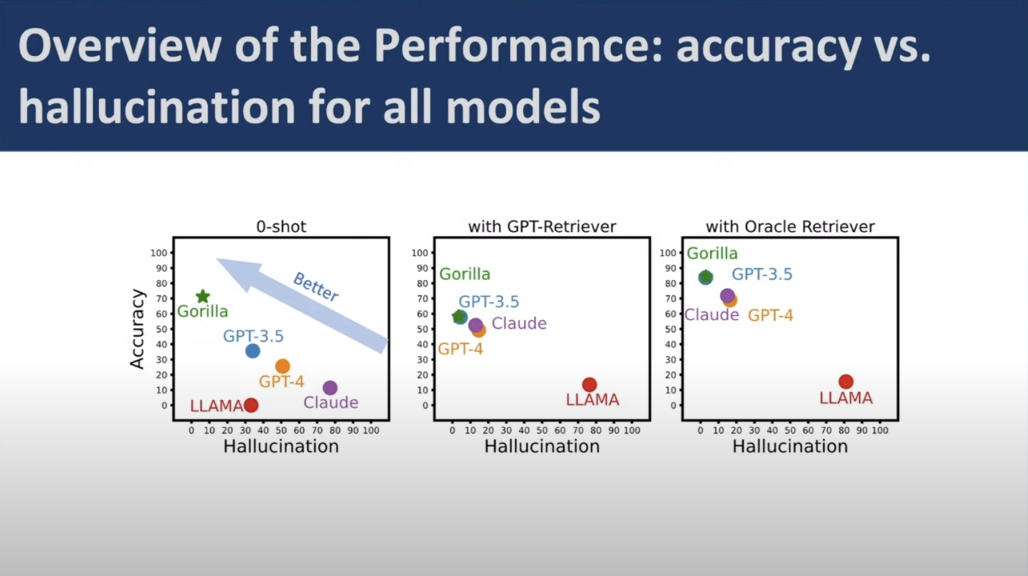

Shishir says that, for LLMs using zero-shot learning (ZSL), Gorilla excels at cutting down hallucination and improving accuracy.

At his API Summit 2023 session, Shishir described a hypothetical scenario in which a user wants to find an A100 GPU in the eastern United States. With a system like Gorilla, the appropriate APIs are selected for the task to maximize the accuracy of the outcome. Those APIs then query the current state of the world, relay that information back to Gorilla, and Gorilla ultimately returns a comprehensive result to the user. Gorilla would be able to find capacity with a suitable GPU to handle the request, as well as the quota required to allocate the requested cloud resources. Seems pretty helpful, doesn’t it?
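To make the selection step more concrete, here is a minimal sketch of retrieving candidate APIs for a request like the A100 example. It assumes a tiny hand-written catalog (`CATALOG`, with made-up API names such as `compute.list_gpu_zones`) and ranks entries by simple keyword overlap. This toy scoring is a stand-in for illustration only, not Gorilla’s actual retriever.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass
class ApiDoc:
    name: str         # hypothetical API identifier
    description: str  # short natural-language description used for matching


# Hypothetical catalog: these names are illustrative, not real cloud APIs.
CATALOG = [
    ApiDoc("compute.list_gpu_zones", "list zones and regions with available gpu accelerators such as a100"),
    ApiDoc("quota.check_gpu_quota", "check remaining gpu quota for a project in a region"),
    ApiDoc("storage.create_bucket", "create a storage bucket for objects"),
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, catalog: List[ApiDoc], k: int = 2) -> List[ApiDoc]:
    """Return up to k APIs whose descriptions share the most tokens with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc.description)), doc) for doc in catalog]
    # Drop APIs with no overlap at all; they are clearly irrelevant.
    scored = [pair for pair in scored if pair[0] > 0]
    # sort() is stable, so ties keep their catalog order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


for doc in retrieve("find an A100 GPU in East US with enough quota", CATALOG):
    print(doc.name)
```

For that query, `retrieve` returns the GPU-zone and quota APIs and skips the unrelated storage API. The parameter `k` is the "how wide to search" knob discussed in the next section; a production retriever would use a stronger ranking method than raw token overlap.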

Not only does this model take much of the stress off the user, but it also makes already important APIs even more significant. In essence, AI and the existing technology of APIs can come together in a mutually beneficial relationship. Instead of building separate tools to add to the user’s arsenal, existing tools can be combined to create something even more useful.

Challenges to overcome

These ideas are promising, but there are hurdles to overcome along the way. LLMs could be wired into APIs today, but the outcomes they produce would have serious problems. As in Goldilocks, the right search is neither too narrow nor too broad, but just right. But how do we find that sweet spot?

When you search for an answer online, the first result isn’t always the one that best suits your question, and a search that is too narrow won’t yield the result you were looking for. The same idea translates directly to LLMs and how AI can best respond to a user’s request. If the AI merely looked at the first answer it found online, its response might not be as accurate as the user hopes.

Conversely, when an LLM casts too wide a net, it risks AI hallucinations.

Finding this balance is similar to focusing a camera lens. A lens can focus on both near and distant subjects, but it must be adjusted to bring the intended subject into sharp focus. Using new concepts, code, and algorithms, the Gorilla project proposes solutions to these obstacles.

What is the Gorilla project?

An LLM trained to invoke APIs

The Gorilla project aims to create an LLM that is capable of selecting and using the correct APIs for a given task. To achieve this, the LLM has to be trained in a specific way so that it chooses the optimal API.

Gorilla’s approach to this is Retriever-Aware Training, or RAT. In this approach, the LLM is fine-tuned with retrieved information in its prompt and learns to sift through results that may be correct or incorrect. The benefit of this training is that the model becomes, as Shishir describes it, “robust to low-quality” results in the retrieval process.
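The training idea can be sketched as a data-preparation step. The snippet below is a simplified illustration, loosely modeled on the idea of appending retrieved documentation to each training prompt; the tuple format of `pairs`, the `noise_rate` parameter, and the helper names are hypothetical. Some prompts deliberately receive the wrong documentation while the target stays the correct call, which is what pushes a model to judge retrieved text rather than copy it blindly.

```python
import random
from dataclasses import dataclass


@dataclass
class TrainingExample:
    prompt: str
    target: str  # the correct API call the model should learn to produce


def build_rat_examples(pairs, docs, noise_rate=0.3, seed=0):
    """Build fine-tuning examples with retrieved docs attached to each prompt.

    pairs: list of (instruction, api_name, api_call) tuples (hypothetical format).
    docs: dict mapping api_name -> documentation text.
    With probability noise_rate, a wrong document is attached on purpose.
    """
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    examples = []
    for instruction, api_name, api_call in pairs:
        doc_name = api_name
        if rng.random() < noise_rate:
            # Swap in a distractor document to simulate a low-quality retrieval.
            distractors = [name for name in docs if name != api_name]
            if distractors:
                doc_name = rng.choice(distractors)
        prompt = f"{instruction}\nUse this API documentation for reference: {docs[doc_name]}"
        # The label never changes: the correct call is always the target.
        examples.append(TrainingExample(prompt=prompt, target=api_call))
    return examples


docs = {"gpu.start": "Start a GPU VM.", "bucket.create": "Create a bucket."}
pairs = [("Start my GPU VM in East-US", "gpu.start", "gpu.start(region='east-us')")]
for example in build_rat_examples(pairs, docs, noise_rate=1.0):
    print(example.prompt)
```

With `noise_rate=1.0` every prompt carries a mismatched document, yet the target is still the correct call; in practice the rate would be a tuning choice.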

Current evaluations of Gorilla are very positive. In the zero-shot setting, Gorilla excels at minimizing hallucination while increasing accuracy. Compared with GPT-3.5 in zero-shot mode, Gorilla is around twice as accurate and hallucinates about 60% less. When paired with specialized retrievers such as Oracle and GPT-Retriever, other LLMs compete more closely with Gorilla, but in the zero-shot setting Gorilla is proving dramatically more effective than the alternatives.

Gorilla is currently available on GitHub, where users can download it and try it out themselves. Across all downloads, Gorilla has already surpassed 300,000 invocations. This reach is crucial, since the technology will only get better the more it is used.

Ongoing research challenges

As they continue refining Gorilla, Shishir and his team are adapting to new challenges and anticipating others. Although the product is fully functional as is, several open problems still need to be addressed on the way to perfecting the technology.

When the LLM calls the correct API, all’s well. But what happens when the API it calls doesn’t work for the intended purpose? APIs can be fairly intricate, and they are often composed with one another in nuanced ways to serve specific goals. Teaching Gorilla to understand how APIs compose will make it better at choosing the right one for a given task. And if an API does fail when Gorilla calls it, there must be an effective protocol for what happens next. The developers behind the Gorilla project anticipate this problem and are working on a fallback mechanism.
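One possible shape for such a protocol is sketched below, under the assumption that the model produces a ranked list of candidate calls: try each in order and move to the next when one fails. The function names and the simulated API calls are hypothetical; this is an illustrative pattern, not the Gorilla project’s fallback mechanism, which the talk describes as still in progress.

```python
from typing import Callable, Sequence, Tuple


class AllCandidatesFailed(Exception):
    """Raised when every candidate API call has failed."""

    def __init__(self, errors):
        super().__init__(f"all {len(errors)} candidate API calls failed")
        self.errors = errors  # list of (api_name, exception) pairs


def call_with_fallback(candidates: Sequence[Tuple[str, Callable[[], object]]]):
    """Try ranked (api_name, call) pairs in order and return the first success."""
    errors = []
    for api_name, call in candidates:
        try:
            return api_name, call()
        except Exception as exc:  # a real system would catch narrower error types
            errors.append((api_name, exc))
    raise AllCandidatesFailed(errors)


# Hypothetical stand-ins for real API calls.
def start_vm_primary():
    raise RuntimeError("quota exceeded in east-us")  # simulated failure


def start_vm_backup():
    return "vm-started-in-east-us-2"  # simulated success


print(call_with_fallback([
    ("compute.start_vm", start_vm_primary),
    ("compute.start_vm_alt_region", start_vm_backup),
]))
```

Collecting every error, rather than only the last one, lets the system explain to the user why each option was rejected if nothing succeeds.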

Maintaining state is another key capability the team is looking to add to Gorilla. In Shishir’s example, a user asks Gorilla to "start my GPU VM in East-US". Later, when the user wants to shut the VM down, they write "shut down that instance". People can deduce what "that" refers to, but the program has to be taught to make that connection. Typing "GPU VM" instead of "that" would work, but the experience would be more seamless if the AI could infer what the user means. This is especially hard when other conversation or commands come between the two requests: how can Gorilla take the second command and recall what was previously started with minimal context? These features aim to improve the user experience and make the program as intuitive as possible.
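A minimal sketch of the kind of state tracking this requires is shown below. It assumes the session keeps a simple history of created resources and that a small hand-written alias table (`ALIASES`, hypothetical) maps words like "instance" to resource kinds. Real reference resolution is much harder than this keyword lookup; the point is only to show why the session must remember what was started earlier.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical mapping from words a user might say to resource kinds.
ALIASES = {"instance": "vm", "vm": "vm", "machine": "vm", "bucket": "bucket"}


@dataclass
class SessionState:
    # (kind, resource_id) pairs in the order they were created.
    history: List[Tuple[str, str]] = field(default_factory=list)

    def record(self, kind: str, resource_id: str) -> None:
        self.history.append((kind, resource_id))

    def resolve(self, utterance: str) -> Optional[str]:
        """Map a phrase like 'that instance' to the most recent matching resource."""
        words = re.findall(r"[a-z]+", utterance.lower())
        kinds = {ALIASES[w] for w in words if w in ALIASES}
        # Walk backwards so the most recently created match wins,
        # even if unrelated commands happened in between.
        for kind, resource_id in reversed(self.history):
            if not kinds or kind in kinds:
                return resource_id
        return None


state = SessionState()
state.record("vm", "gpu-vm-east-us")     # after "start my GPU VM in East-US"
state.record("bucket", "training-logs")  # an unrelated command in between
print(state.resolve("shut down that instance"))  # prints: gpu-vm-east-us
```

If the utterance names no known kind (for example "delete it"), this sketch simply falls back to the most recent resource, which is exactly the kind of guess a real system would want to confirm with the user.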

In its current state, Gorilla can invoke the API, but the user has to execute it. This makes sense from a security and verification standpoint, but future plans call for Gorilla to select, invoke, and execute the proper API entirely on its own.
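The split between proposing a call and executing it can be illustrated with a simple human-in-the-loop gate. In this hypothetical sketch, the model’s output is a shell-style command string, `approve` stands in for the user’s confirmation, and `execute` stands in for whatever actually runs the command; none of these names come from Gorilla itself.

```python
import shlex
from typing import Callable, List


def review_and_execute(generated_command: str,
                       approve: Callable[[str], bool],
                       execute: Callable[[List[str]], str]) -> str:
    """The model only proposes a command; a human decides whether it runs."""
    argv = shlex.split(generated_command)  # split the way a POSIX shell would
    if not argv:
        raise ValueError("the model produced an empty command")
    if not approve(generated_command):
        return "not executed"
    return execute(argv)


# Stand-in executor so the sketch runs without touching a real system.
def fake_execute(argv: List[str]) -> str:
    return "ran: " + " ".join(argv)


print(review_and_execute("gcloud compute instances list", lambda cmd: True, fake_execute))
```

Fully autonomous execution would amount to replacing `approve` with a function that always returns `True`, which is why the approval step is where security and verification checks naturally live.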

“This will unlock the next paradigm where you can have LLMs just talk to each other and talk to the world, and you as a user are only going to look at the end state,” Shishir said.

Again, the goal is to have the LLM do all of the heavy lifting and produce one clear, cohesive outcome for the user.

Conclusion

AI and LLMs are already having a positive impact on the way users interact with technology. While we may only be scratching the surface of AI’s seemingly endless possibilities, Gorilla is a giant leap in the right direction. Having LLMs invoke APIs is the next step, and the Gorilla project is helping shape where AI goes next.

The more people download and use Gorilla, the better it can become. Shishir and his team rely on feedback from any and all users to shape Gorilla into the best service it can be. (This is where you come in.)

You can try out Gorilla and find more information from Shishir and his team here.