With the 1.3 release, Kong is now able to natively manage and proxy gRPC services. In this blog post, we'll explain what gRPC is and how to manage your gRPC services with Kong.

What is gRPC?

gRPC is a remote procedure call (RPC) framework, initially developed at Google and open-sourced in 2015, that has seen growing adoption in recent years. Built on HTTP/2 for transport and using Protobuf as its Interface Definition Language (IDL), gRPC offers capabilities that traditional REST APIs struggle with, such as bidirectional streaming and efficient binary encoding.

Kong has supported TCP streams since version 1.0 and can therefore proxy any protocol built on top of TCP/TLS. Even so, we felt that native gRPC support would allow a growing user base to manage their REST and gRPC services uniformly with Kong, including with some of the same plugins they already use for their REST APIs.

Native gRPC Support

What follows is a step-by-step tutorial on setting up Kong to proxy gRPC services in two scenarios. In the first, a single Route in Kong matches all gRPC methods of a service. In the second, each method has its own Route, which allows, for example, applying different plugins to specific gRPC methods.

Before starting, install Kong Gateway if you haven’t already.

As gRPC uses HTTP/2 for transport, you need to enable HTTP/2 proxy listeners in Kong. To do so, add the following property to your Kong configuration:

Alternatively, you can configure the proxy listener through environment variables:
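As a minimal sketch, assuming the ports used throughout this guide (9080 for cleartext HTTP/2 and 9081 for HTTP/2 over TLS), the Python below renders the listener value both as a kong.conf line and as the KONG_PROXY_LISTEN environment variable. It relies on Kong's convention of reading any configuration property from an environment variable named KONG_ plus the upper-cased property name. The start_kong helper is illustrative only and assumes the kong CLI is on your PATH with its database already prepared.

```python
import os
import subprocess
from typing import Dict

# Listener value assumed for this guide: 9080 = HTTP/2 cleartext, 9081 = HTTP/2 over TLS.
PROXY_LISTEN = "0.0.0.0:9080 http2, 0.0.0.0:9081 http2 ssl"


def kong_conf_line(value: str = PROXY_LISTEN) -> str:
    """Render the proxy_listen property as it would appear in kong.conf."""
    return f"proxy_listen = {value}"


def kong_env(value: str = PROXY_LISTEN) -> Dict[str, str]:
    """Copy the current environment and add KONG_PROXY_LISTEN.

    Kong maps each configuration property to an environment variable named
    KONG_ followed by the upper-cased property name.
    """
    env = dict(os.environ)
    env["KONG_PROXY_LISTEN"] = value
    return env


def start_kong() -> None:
    # Illustrative only: needs the `kong` CLI on PATH and a prepared database.
    subprocess.run(["kong", "start"], env=kong_env(), check=True)
```

Either form configures both listeners at once; the ssl flag is what makes port 9081 expect TLS.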

In this guide, we will assume Kong is listening for HTTP/2 proxy requests on port 9080 and for secure HTTP/2 on port 9081.

We will use the gRPCurl command-line client and the grpcbin collection of mock gRPC services.

Case 1: Single Service and Route

We begin with a simple setup with a single gRPC Service and Route; all gRPC requests sent to Kong’s proxy port will match the same route.

Issue the following request to create a gRPC Service (assuming your gRPC server is listening on localhost, port 15002):

Issue the following request to create a gRPC Route:
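The two Admin API calls above can also be sketched in Python with only the standard library. This assumes the Admin API is on its default address, http://localhost:8001. The Service name grpc matches the one used later in this post, while the Route name catch-all is a hypothetical label. Building the requests is kept separate from sending them so the payloads can be inspected first.

```python
import json
import urllib.parse
import urllib.request

ADMIN_URL = "http://localhost:8001"  # Kong Admin API default address (assumed unchanged)


def admin_post(path: str, fields: dict) -> urllib.request.Request:
    """Build (but do not send) a form-encoded POST to the Kong Admin API."""
    body = urllib.parse.urlencode(fields).encode()
    return urllib.request.Request(ADMIN_URL + path, data=body, method="POST")


def send(req: urllib.request.Request) -> dict:
    """Send a request and decode the JSON entity Kong returns."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


# The gRPC Service, pointing at the upstream gRPC server on localhost:15002.
create_service = admin_post("/services", {
    "name": "grpc",
    "protocol": "grpc",
    "host": "localhost",
    "port": 15002,
})

# A catch-all Route: every gRPC request path starts with "/", so it matches all methods.
create_route = admin_post("/services/grpc/routes", {
    "name": "catch-all",
    "protocols": "grpc",
    "paths": "/",
})
```

Against a running Kong node, send(create_service) followed by send(create_route) issues both calls in order.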

Using gRPCurl, issue the following gRPC request:
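If you prefer to script the call, the sketch below builds a grpcurl command line and runs it with subprocess. It assumes Kong's cleartext HTTP/2 listener on localhost:9080, a grpcbin server that supports reflection (so no .proto file is passed), a method symbol of hello.HelloService.SayHello, and a request message with a greeting field; the greeting text itself is arbitrary. Extra request headers are passed with grpcurl's -H flag, one flag per header.

```python
import json
import subprocess
from typing import Dict, List, Optional


def grpcurl_argv(method: str, payload: Dict[str, str],
                 target: str = "localhost:9080",
                 headers: Optional[Dict[str, str]] = None) -> List[str]:
    """Build a verbose, plaintext grpcurl invocation against Kong's HTTP/2 port."""
    # -v prints response headers (where Kong's via/x-kong-* headers appear).
    argv = ["grpcurl", "-v", "-plaintext", "-d", json.dumps(payload)]
    for name, value in (headers or {}).items():
        argv += ["-H", f"{name}: {value}"]  # one -H flag per request header
    argv += [target, method]  # address and method symbol come last
    return argv


def run(argv: List[str]) -> str:
    # Needs grpcurl on PATH and both Kong and grpcbin running.
    return subprocess.run(argv, capture_output=True, text=True, check=True).stdout
```

For example, print(run(grpcurl_argv("hello.HelloService.SayHello", {"greeting": "Kong 1.3!"}))) issues the request and prints grpcurl's verbose output; passing headers={"kong-debug": "1"} adds the debugging header used later in this post.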

The response should resemble the following:

Notice that Kong response headers, such as via and x-kong-proxy-latency, were inserted in the response.

Case 2: Single Service, Multiple Routes

Now we move on to a more complex use case, where requests to separate gRPC methods map to different Routes in Kong, allowing for more flexible use of Kong plugins.

Building on the previous example, let’s create a few more Routes for individual gRPC methods. The "HelloService" gRPC service used in this example exposes several methods, as its Protobuf definition (obtained from the gRPCbin repository) shows:

We will create individual routes for its "SayHello" and "LotsOfReplies" methods.

Create a Route for "SayHello":

Create a Route for "LotsOfReplies":
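Each Route's path follows from how gRPC maps calls onto HTTP/2: a call to a method is sent as a POST to /<package>.<Service>/<Method>. The sketch below derives the two paths and builds the matching Admin API requests. It assumes the proto declares package hello, the Admin API is at http://localhost:8001, and the Service is named grpc; the Route names say-hello and lots-of-replies are hypothetical.

```python
import urllib.parse
import urllib.request
from typing import Dict

ADMIN_URL = "http://localhost:8001"  # assumed default Admin API address


def grpc_path(package: str, service: str, method: str) -> str:
    """gRPC over HTTP/2 sends each call to /<package>.<Service>/<Method>."""
    return f"/{package}.{service}/{method}"


def method_route(name: str, method: str) -> urllib.request.Request:
    """Build (but do not send) a Route creation request for one HelloService method."""
    fields: Dict[str, str] = {
        "name": name,  # hypothetical Route name
        "protocols": "grpc",
        "paths": grpc_path("hello", "HelloService", method),  # package "hello" assumed
    }
    return urllib.request.Request(
        f"{ADMIN_URL}/services/grpc/routes",
        data=urllib.parse.urlencode(fields).encode(),
        method="POST",
    )


say_hello = method_route("say-hello", "SayHello")
lots_of_replies = method_route("lots-of-replies", "LotsOfReplies")
```

Passing each request to urllib.request.urlopen against a running Kong node creates the Routes.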

With this setup, gRPC requests to the "SayHello" method will match the "SayHello" Route, while requests to "LotsOfReplies" will be routed to the "LotsOfReplies" Route.

Issue a gRPC request to the "SayHello" method:

(Notice that we are sending a kong-debug header, which makes Kong insert debugging information into the response headers.)

The response should look like:

Notice that the Route ID refers to the "SayHello" Route we just created.

Similarly, let’s issue a request to the "LotsOfReplies" gRPC method:

The response should look like the following:

Notice that the kong-route-id response header now carries a different value: the ID of the "LotsOfReplies" Route.
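To compare kong-route-id values across calls without reading the output by eye, a small parser can pull the response headers out of grpcurl's verbose output. This is a sketch that assumes grpcurl -v prints a "Response headers received:" line followed by one name: value line per header, ending at the first blank line; check it against the output of your grpcurl version.

```python
from typing import Dict


def response_headers(verbose_output: str) -> Dict[str, str]:
    """Collect headers listed after 'Response headers received:' in grpcurl -v output."""
    headers: Dict[str, str] = {}
    in_block = False
    for line in verbose_output.splitlines():
        if line.strip() == "Response headers received:":
            in_block = True
            continue
        if in_block:
            if not line.strip():
                break  # a blank line ends the header block
            # Split on the first colon only, so values such as "kong/1.3.0" survive.
            name, _, value = line.partition(":")
            headers[name.strip().lower()] = value.strip()
    return headers
```

With the kong-debug header set, response_headers(output)["kong-route-id"] should differ between the "SayHello" and "LotsOfReplies" calls.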

Note: gRPC reflection requests are still routed to the first Route we created (the "catch-all" Route), since they match neither the SayHello nor the LotsOfReplies Route.
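The routing described above behaves like longest-prefix matching on the request path. The sketch below is a simplified model of that behavior, not Kong's router: the Route names are hypothetical, and the reflection path is one example of what a reflection client may request.

```python
from typing import Dict

# Hypothetical route table mirroring this tutorial: Route name -> path prefix.
ROUTES: Dict[str, str] = {
    "catch-all": "/",
    "say-hello": "/hello.HelloService/SayHello",
    "lots-of-replies": "/hello.HelloService/LotsOfReplies",
}


def match(request_path: str, routes: Dict[str, str] = ROUTES) -> str:
    """Return the Route whose path is the longest prefix of request_path."""
    candidates = [(len(prefix), name) for name, prefix in routes.items()
                  if request_path.startswith(prefix)]
    if not candidates:
        raise LookupError(f"no route matches {request_path}")
    return max(candidates)[1]  # the longest matching prefix wins
```

Because "/" is a prefix of every gRPC path, the catch-all Route keeps receiving any method without a more specific Route, which is why reflection traffic lands there.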

Logging and Observability Plugins

As mentioned earlier, gRPC services in Kong 1.3 can use some of the same plugins as REST APIs, including logging and observability plugins. For example, let’s try out the File Log and Zipkin plugins with gRPC.

File Log

Issue the following request to enable File Log on the "SayHello" route:
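A Python sketch of the same Admin API call, assuming the "SayHello" Route was created with the name say-hello and the Admin API is at http://localhost:8001. The log path /tmp/grpc-say-hello.log is hypothetical; it must be writable by Kong's worker processes. config.path is the File Log setting that names the output file.

```python
import urllib.parse
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumed default Admin API address
LOG_PATH = "/tmp/grpc-say-hello.log"  # hypothetical log file location


def enable_file_log(route: str = "say-hello", path: str = LOG_PATH) -> urllib.request.Request:
    """Build (but do not send) the request that attaches File Log to one Route."""
    fields = {"name": "file-log", "config.path": path}
    return urllib.request.Request(
        f"{ADMIN_URL}/routes/{route}/plugins",
        data=urllib.parse.urlencode(fields).encode(),
        method="POST",
    )
```

Scoping the plugin to the Route rather than the Service means only "SayHello" calls are logged.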

Follow the output of the log as gRPC requests are made to "SayHello":
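tail -f on the log file is enough to follow it. As a sketch, the Python below does the same and condenses each entry, assuming File Log writes one JSON object per line containing request.method, request.uri, and a latencies object with kong, proxy, and request timings in milliseconds.

```python
import json
import os
import time
from typing import Iterator, TextIO


def follow(f: TextIO, poll: float = 0.5) -> Iterator[str]:
    """Yield complete lines appended to an open file, similar to `tail -f`."""
    f.seek(0, os.SEEK_END)  # skip entries already in the file
    partial = ""
    while True:
        chunk = f.readline()
        if not chunk:
            time.sleep(poll)  # nothing new yet; wait and poll again
            continue
        partial += chunk
        if partial.endswith("\n"):  # only yield once the entry is complete
            yield partial
            partial = ""


def summarize(line: str) -> str:
    """Condense one File Log JSON entry to method, URI, and latencies."""
    entry = json.loads(line)
    req = entry.get("request", {})
    lat = entry.get("latencies", {})
    return (f"{req.get('method')} {req.get('uri')} "
            f"kong={lat.get('kong')}ms proxy={lat.get('proxy')}ms request={lat.get('request')}ms")
```

Opening the configured log file and looping with for line in follow(log): print(summarize(line)) prints one line per gRPC call; since gRPC calls travel as HTTP POSTs, the method field should read POST.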

Notice that the gRPC requests were logged with information such as the URI, HTTP method, and latencies.

Zipkin

Start a Zipkin server:

Enable the Zipkin plugin on the grpc Service:
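One common way to start the server is Zipkin's official Docker image (docker run -d -p 9411:9411 openzipkin/zipkin), which serves Zipkin on port 9411. The sketch below builds the plugin request, assuming the Admin API at http://localhost:8001, the Service named grpc, and Zipkin's v2 span collection endpoint at http://localhost:9411/api/v2/spans.

```python
import urllib.parse
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumed default Admin API address
ZIPKIN_SPANS = "http://localhost:9411/api/v2/spans"  # Zipkin v2 span collection endpoint


def enable_zipkin(service: str = "grpc", sample_ratio: float = 1.0) -> urllib.request.Request:
    """Build (but do not send) the request that attaches the Zipkin plugin to a Service."""
    fields = {
        "name": "zipkin",
        "config.http_endpoint": ZIPKIN_SPANS,
        # 1.0 samples every request; a lower ratio limits tracing overhead.
        "config.sample_ratio": sample_ratio,
    }
    return urllib.request.Request(
        f"{ADMIN_URL}/services/{service}/plugins",
        data=urllib.parse.urlencode(fields).encode(),
        method="POST",
    )
```

Sampling every request suits a demo like this one; production setups usually trace only a fraction.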

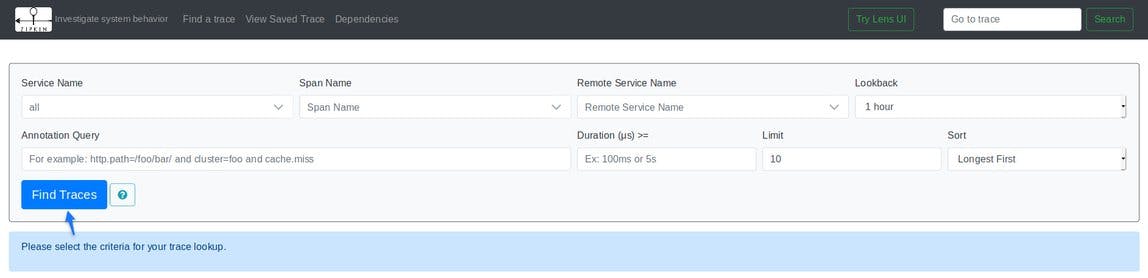

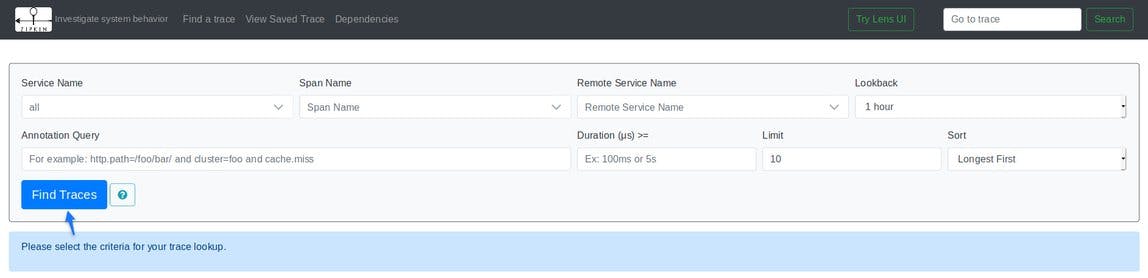

As requests are proxied, new spans are sent to the Zipkin server and can be visualized through the Zipkin index page, which by default is at http://localhost:9411/zipkin:

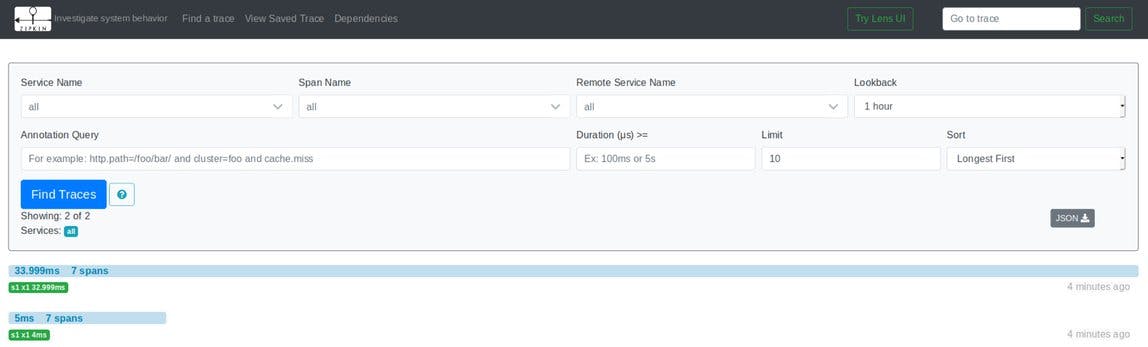

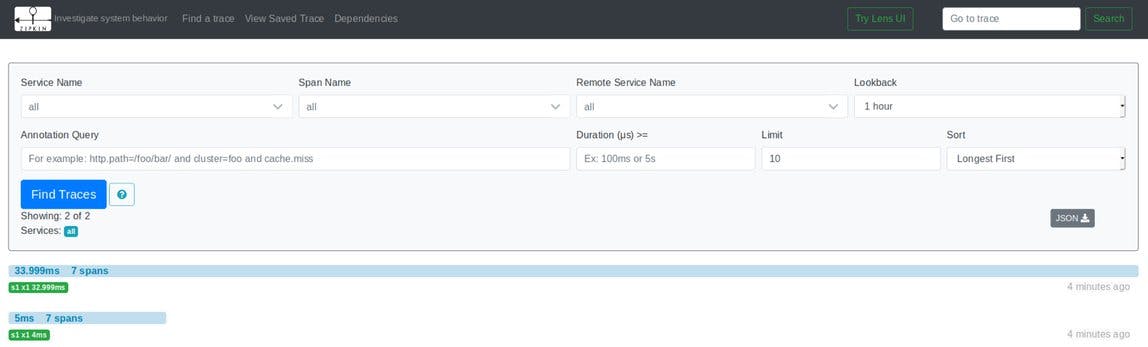

To display traces, click "Find Traces", as shown above. The following screen lists all traces matching the search criteria:

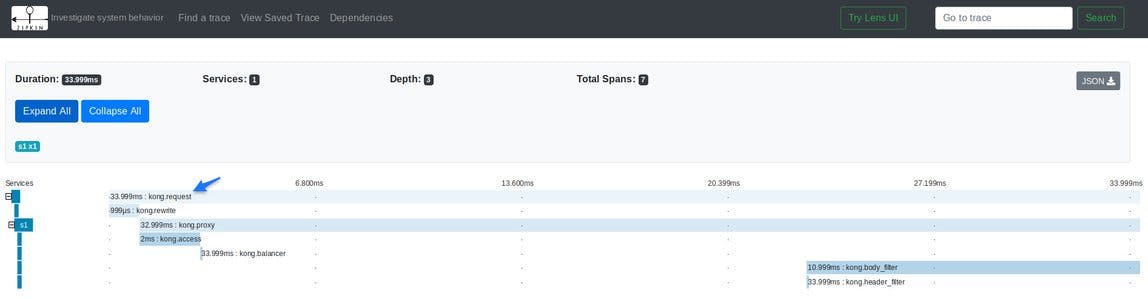

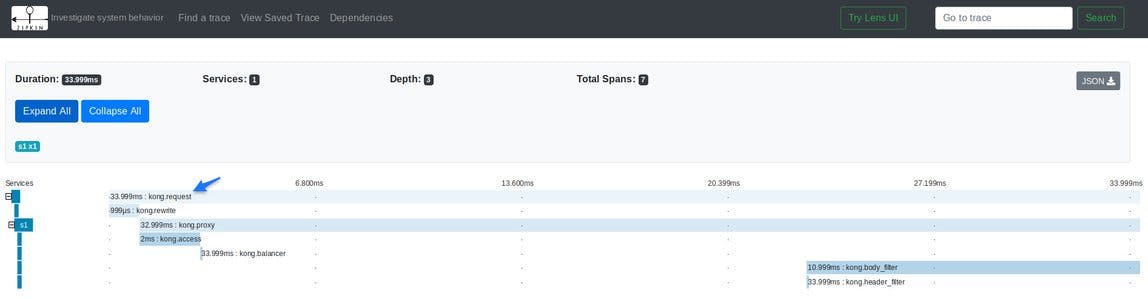

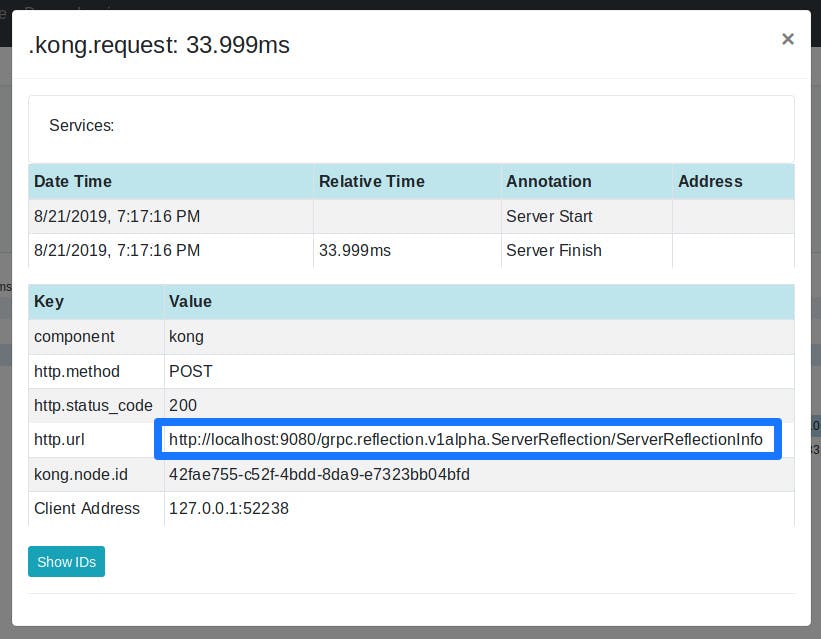

A trace can be expanded by clicking on it:

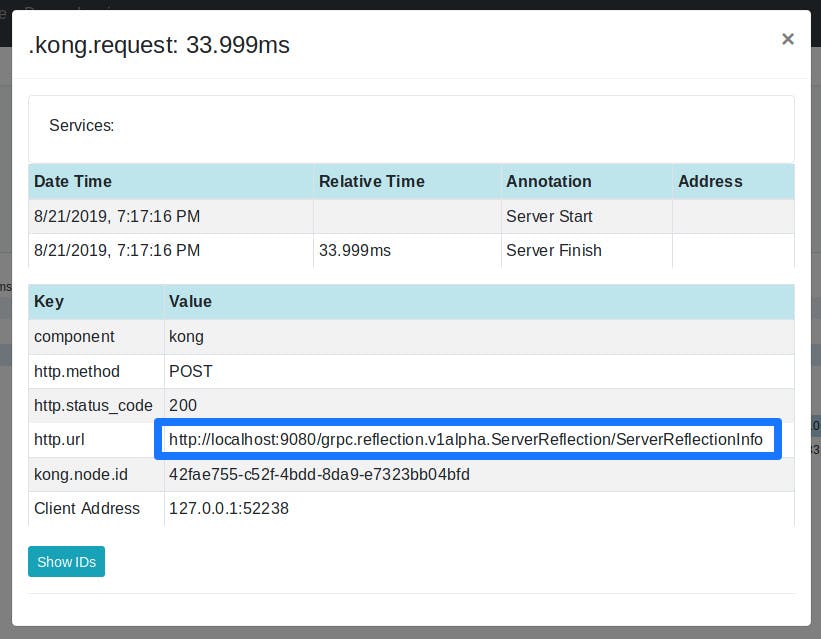

Spans can also be expanded, as shown below:

Notice that, in this case, it’s a span for a gRPC reflection request.

What’s Next for gRPC support?

Future Kong releases will include support for natively handling Protobuf data, making gRPC compatible with more plugins, such as the Request Transformer and Response Transformer plugins.
