Rather than take the manual approach, let's work with an infrastructure supported by Kong Konnect. We'll outline the steps for getting set up with Kong Konnect, but remember, you only need to do this at the start. After you are up and running with Kong Konnect, adding new services or adding monitoring or logging solutions is simple.

Initial Kong Konnect Setup

First, make sure that you have a Kong Konnect account.

Kong Konnect requires a deployed Kong Gateway runtime. When Kong Gateway starts up, it reaches out to Kong Konnect and establishes a connection for updates. We're running our runtime on a local Ubuntu machine, using Kong Gateway Enterprise (in free mode) at version 2.4.1.1.

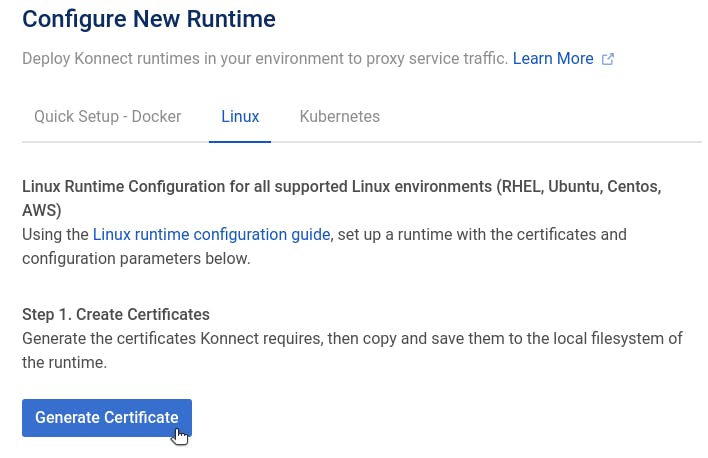

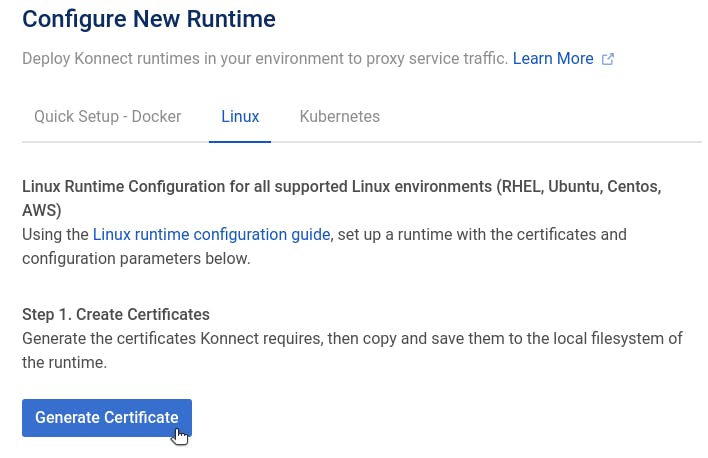

Generate Certificates

We need to configure Kong Gateway with certificates for authentication to Kong Konnect. After logging in to your Konnect account, click on Runtimes and find the environment where you will deploy your Kong Gateway. For our Linux environment, we click on Generate Certificates to get the files we need.

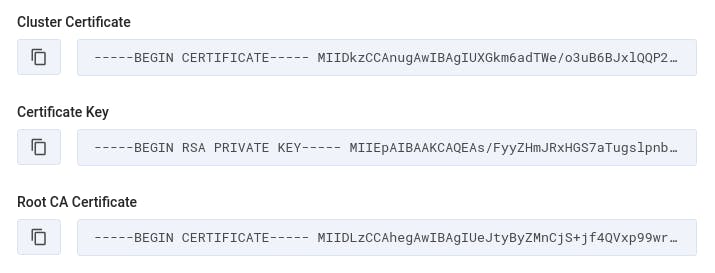

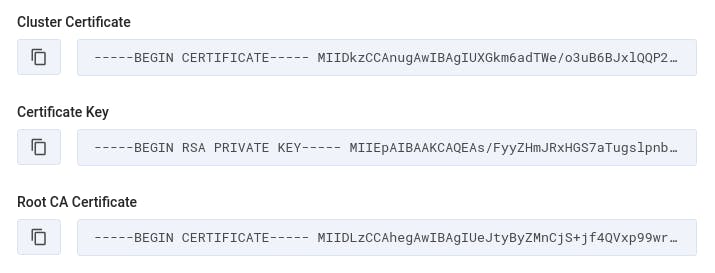

We immediately see three strings that we need to copy to files on our local machine.

The "Cluster Certificate" should be copied to a file called tls.crt. We should copy the "Certificate Key" to a file called tls.key. Lastly, the "Root CA Certificate" should be copied to a file called ca.crt.
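If you would rather script this step than paste by hand, a minimal Python sketch might look like the following. The file names come from the text above; the function name `write_cert_files` and the target directory are hypothetical, and the PEM strings are whatever Konnect showed you.

```python
import os
from pathlib import Path

# File names from the text above, mapped to the Konnect field each one holds.
CERT_FILES = {
    "tls.crt": "Cluster Certificate",
    "tls.key": "Certificate Key",
    "ca.crt": "Root CA Certificate",
}


def write_cert_files(target_dir, pem_by_filename):
    """Write each PEM string to its file and return the absolute paths."""
    target = Path(target_dir).resolve()
    target.mkdir(parents=True, exist_ok=True)
    paths = {}
    for filename in CERT_FILES:
        path = target / filename
        # Normalize to exactly one trailing newline.
        path.write_text(pem_by_filename[filename].strip() + "\n")
        if filename.endswith(".key"):
            # Restrict the private key to its owner. The user that runs
            # Kong Gateway still needs to be able to read it.
            os.chmod(path, 0o600)
        paths[filename] = str(path)
    return paths
```

The returned paths are absolute, which is the form the Kong Gateway configuration expects in the next step.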

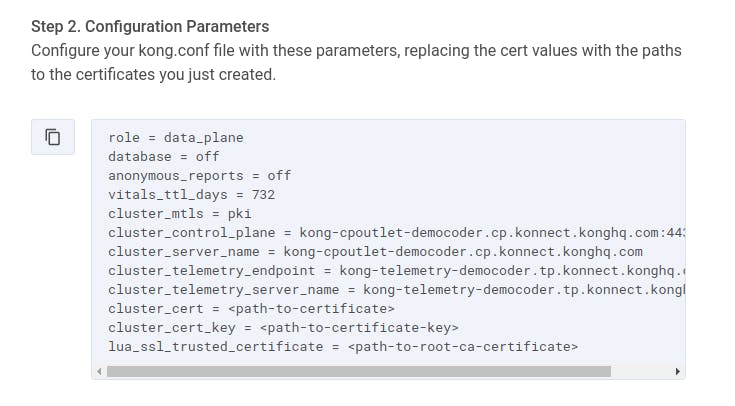

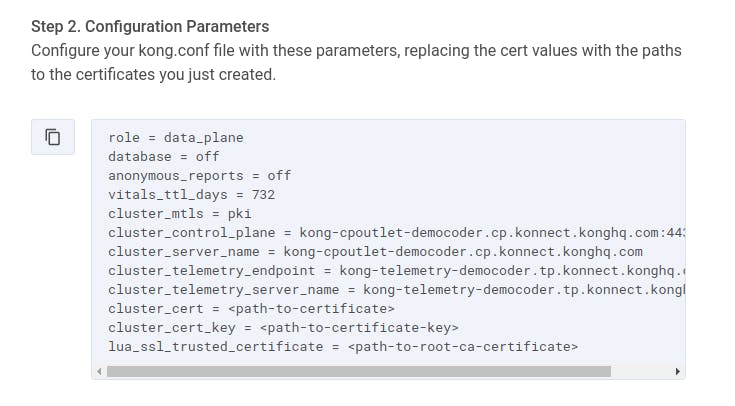

Next, Konnect displays a list of configuration parameters that we need to add to the Kong Gateway startup configuration file (typically located at /etc/kong/kong.conf):

For the last three lines of these parameters, you'll need to enter the absolute path to the three certificate-related files you just created.

In addition to the above parameters, we also want to set the status_listen parameter so that Kong's Status API will be exposed for a monitoring tool (like Prometheus) to receive metrics. This parameter is at around line 517 of our kong.conf file, and we set it to 0.0.0.0:8001.
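As a rough sketch of the lines we control ourselves, the Python helper below renders the three certificate settings plus `status_listen` and rejects relative paths. It assumes the three certificate parameters are Kong's `cluster_ca_cert`, `cluster_cert` and `cluster_cert_key`; take the exact names, order and remaining parameters from what Konnect displays. The function name and the example paths are hypothetical.

```python
from pathlib import PurePosixPath


def render_kong_conf_lines(ca_cert, cert, cert_key, status_listen="0.0.0.0:8001"):
    """Return kong.conf lines for the certificate paths and the Status API."""
    # Assumed parameter names; confirm them against the list Konnect shows.
    settings = {
        "cluster_ca_cert": ca_cert,    # ca.crt
        "cluster_cert": cert,          # tls.crt
        "cluster_cert_key": cert_key,  # tls.key
    }
    for name, path in settings.items():
        if not PurePosixPath(path).is_absolute():
            raise ValueError(f"{name} must be an absolute path, got {path!r}")
    # Value used in this walkthrough for exposing the Status API.
    settings["status_listen"] = status_listen
    return [f"{name} = {value}" for name, value in settings.items()]
```

For example, `render_kong_conf_lines("/etc/kong/certs/ca.crt", "/etc/kong/certs/tls.crt", "/etc/kong/certs/tls.key")` returns four `name = value` lines that can be appended to kong.conf. The `/etc/kong/certs` directory is only an illustration.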

With our parameters in place, we run the command kong start.

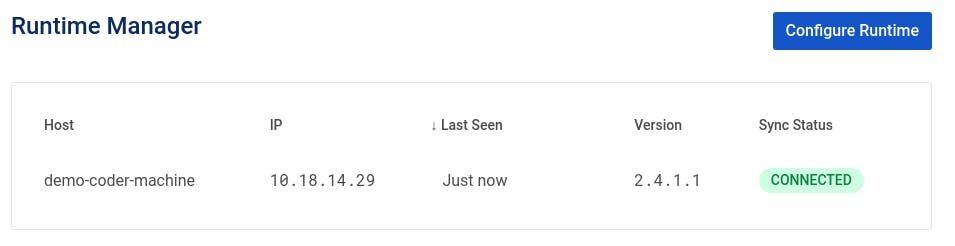

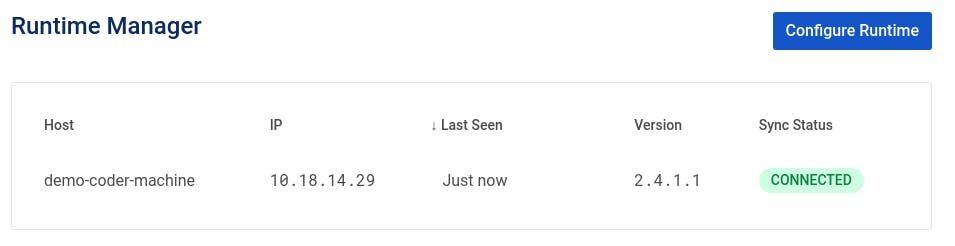

Verify Connection in Runtime Manager

After a few moments, our local Kong Gateway runtime will connect to Kong Konnect, and the Runtime Manager on Kong Konnect will show the established connection:

Now that we're connected, we can begin adding services.

Add Services to Kong Konnect

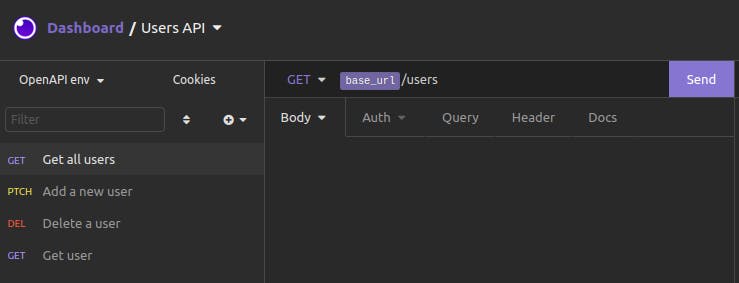

In the ServiceHub for Konnect, we click on Add New Service. We'll start by adding the Users service that is running at GCP.

We set a name, version and description for our service:

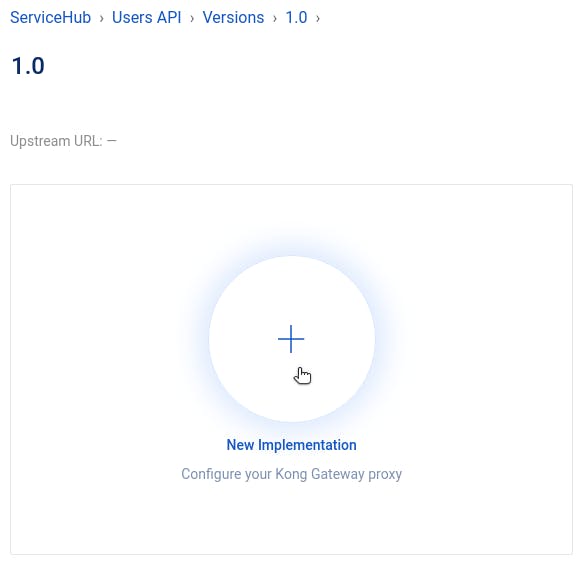

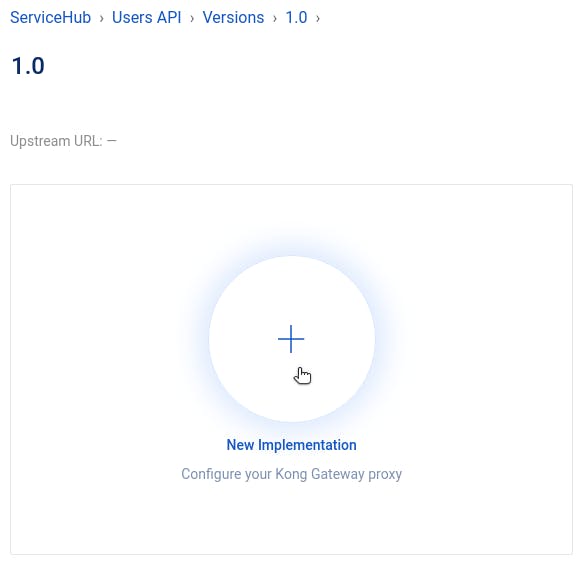

With our service created, we navigate to our 1.0 version to create a new implementation.

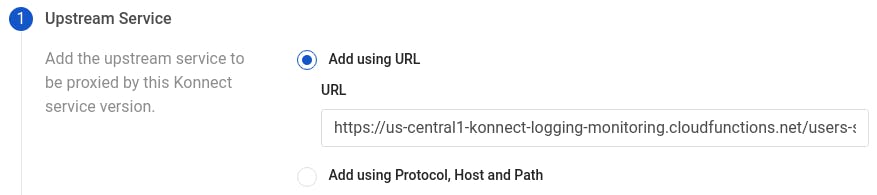

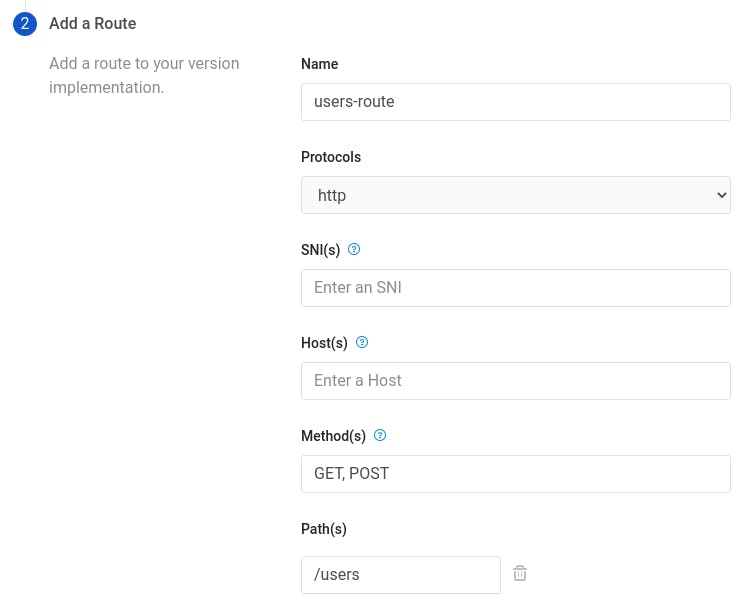

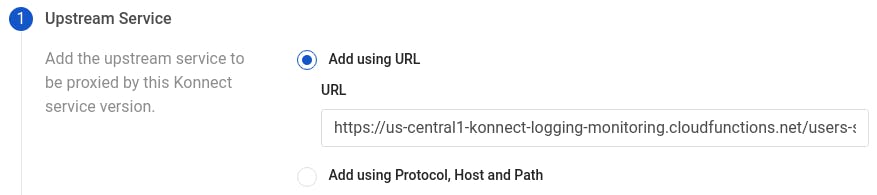

This is where we create an upstream service and an associated route. We add a URL for our upstream service, using the GCP Cloud Function URL where we deployed our service.

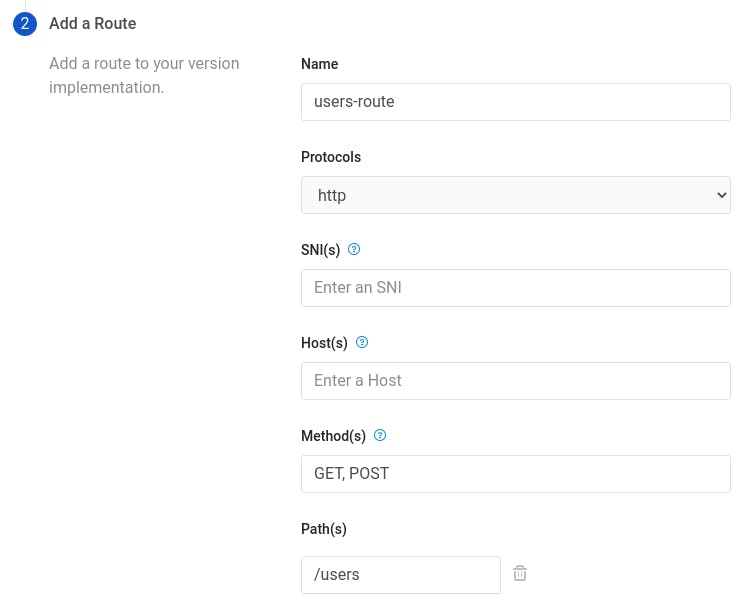

Then, we click on Next to configure the route for our service. We set a name for our route, choose the HTTP protocol (since we are running Kong Gateway locally), enter methods to listen for, and add a path.

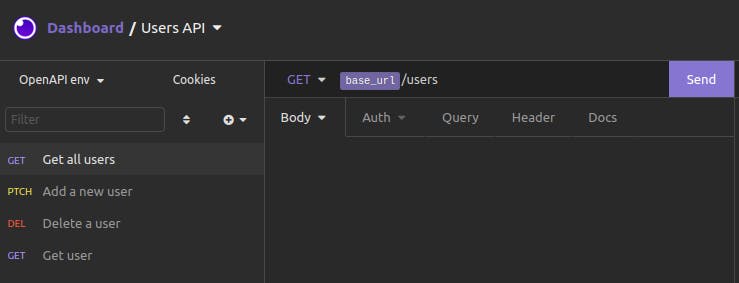

Now, when we send a curl request to http://localhost:8000/users, this is what we see:
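The same request can be made from a script. The sketch below uses only the Python standard library and the proxy address from the text; the helper names `proxy_url` and `fetch` are hypothetical.

```python
import urllib.request
from urllib.parse import urljoin

# Kong Gateway proxy address used in this walkthrough.
PROXY_BASE = "http://localhost:8000"


def proxy_url(path):
    """Build the full proxy URL for a route path such as "/users"."""
    return urljoin(PROXY_BASE + "/", path.lstrip("/"))


def fetch(path, timeout=5):
    """Send a GET request through Kong and return (status, body text)."""
    # urlopen raises urllib.error.HTTPError for 4xx and 5xx responses.
    with urllib.request.urlopen(proxy_url(path), timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")
```

With the gateway running, `fetch("/users")` should return the status code and the body that the Users Cloud Function produced.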

The steps for adding the Orders and Products services are identical to what we've done above. After we've done that, curl requests to localhost are proxied by Kong to reach out to our GCP Cloud Functions:

Now, we're all set up with Kong Konnect. That may seem like a lot of steps, but we've just completed the setup for our entire microservices architecture and connectivity solution! If we need to modify a service—perhaps to point to a different Cloud Function URL or listen on a different path—it's simple to make those changes here in a central place, Kong Konnect. Whenever we need to add or remove a service, we do that here, too.

Adding Microservices Monitoring

Now, the big question is: What's the level of effort for adding a microservices monitoring solution to each of our services? The level of effort is "a couple of clicks." Let's walk through it. Don't blink, or you'll miss it.

Adding the Prometheus Plugin

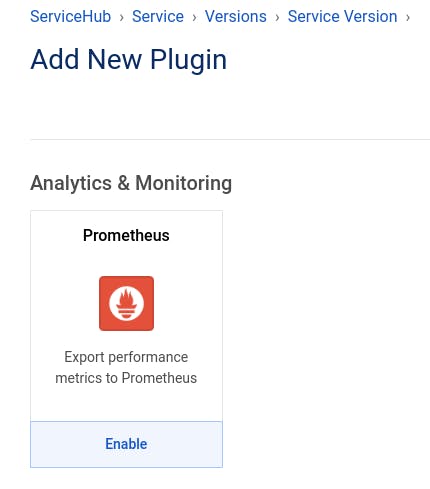

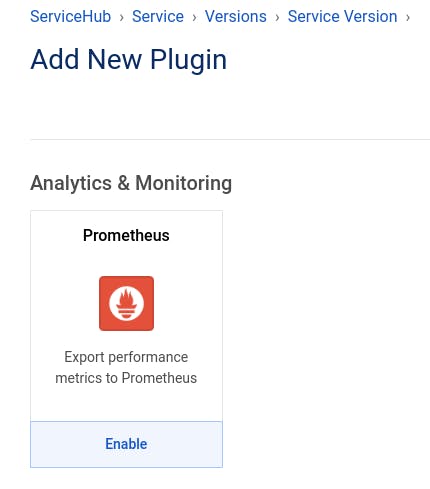

In our Users API service in Kong Konnect, we navigate again to our 1.0 version. At the bottom of the page, we click on New Plugin. We search for the Prometheus plugin. When we find it, we click on Enable.

We enter a tag so that metrics associated with this service are all grouped together. Then, we click on Create.

And… we're done. Yes, that was it.

We do the same for our Orders API service and our Products API service.

When reviewing our manual approach, you'll recall that we needed to reconfigure the scrape_configs in Prometheus, adding a target for each microservice exposing metrics. In this Kong Konnect approach, only one location exposes metrics: our Kong Gateway, at port 8001. Whether you have one service or three or a hundred, Kong Gateway serves as the single target for scraping all Prometheus metrics. Our prometheus.yml file looks like this:
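A minimal sketch of such a single-target file, generated from Python, might look like the following. The target `localhost:8001` comes from the `status_listen` setting above; the job name `kong` and the 15-second scrape interval are assumptions, and the sketch relies on Prometheus's default `/metrics` path.

```python
def render_prometheus_yml(target="localhost:8001", job_name="kong", scrape_interval="15s"):
    """Return the text of a prometheus.yml with one scrape target."""
    return "\n".join([
        "global:",
        f"  scrape_interval: {scrape_interval}",  # assumed value
        "",
        "scrape_configs:",
        f"  - job_name: '{job_name}'",            # assumed name
        "    static_configs:",
        # One target no matter how many services sit behind the gateway.
        f"      - targets: ['{target}']",
        "",
    ])
```

Writing the result of `render_prometheus_yml()` to prometheus.yml gives a configuration with exactly one entry under `scrape_configs`, and that entry does not change as services are added.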

With our configuration set, we start our Prometheus server and our Grafana server.

Configuring Grafana

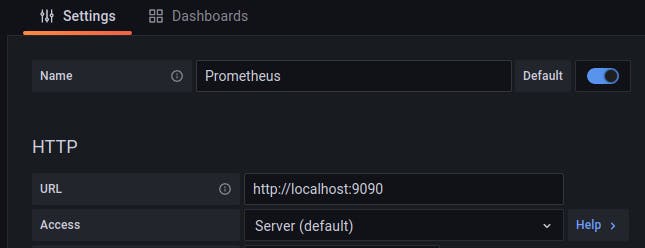

With the Grafana server up and running, we can log in and add our data source:

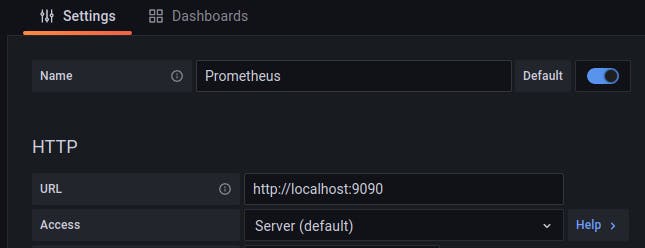

We add Prometheus as a data source, using the URL for the Prometheus server (http://localhost:9090).
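The same data source can also be registered through Grafana's HTTP API rather than the UI. This sketch only builds the request, assuming basic authentication and a Grafana server at its default address of http://localhost:3000. The function name, data source name and credentials are placeholders.

```python
import base64
import json
import urllib.request


def build_datasource_request(grafana_url, user, password,
                             prometheus_url="http://localhost:9090"):
    """Build a POST request that registers Prometheus as a Grafana data source."""
    payload = {
        "name": "Prometheus",   # assumed display name
        "type": "prometheus",
        "url": prometheus_url,  # Prometheus server URL from the text
        "access": "proxy",      # Grafana's backend queries Prometheus
    }
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` sends it to Grafana. The UI flow described above gives the same result and needs no credentials in a script.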

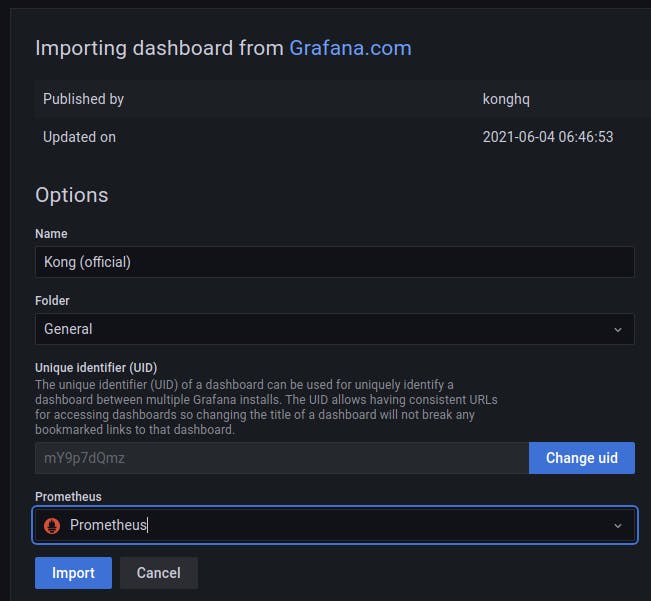

After we've added Prometheus as a data source, we could use a dashboard for some visualizations. Fortunately, Kong has an official dashboard for Grafana, designed especially to show Kong-related metrics!

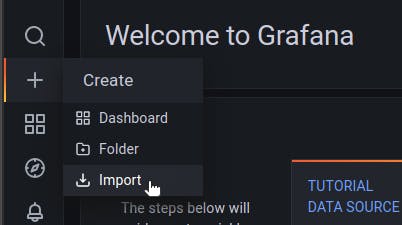

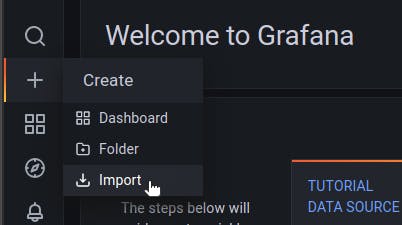

To import the Kong dashboard, click on Import in the side navbar:

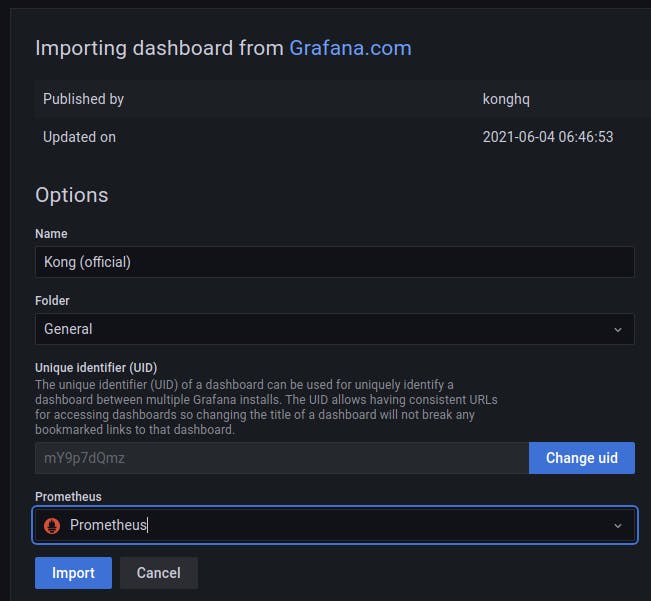

In the field for a Grafana dashboard ID, enter 7424:

Configure the Kong dashboard to use the Prometheus data source, and then click on Import.

Now, Grafana is set up to look to Prometheus for its data and then display visualizations using Kong's dashboard.

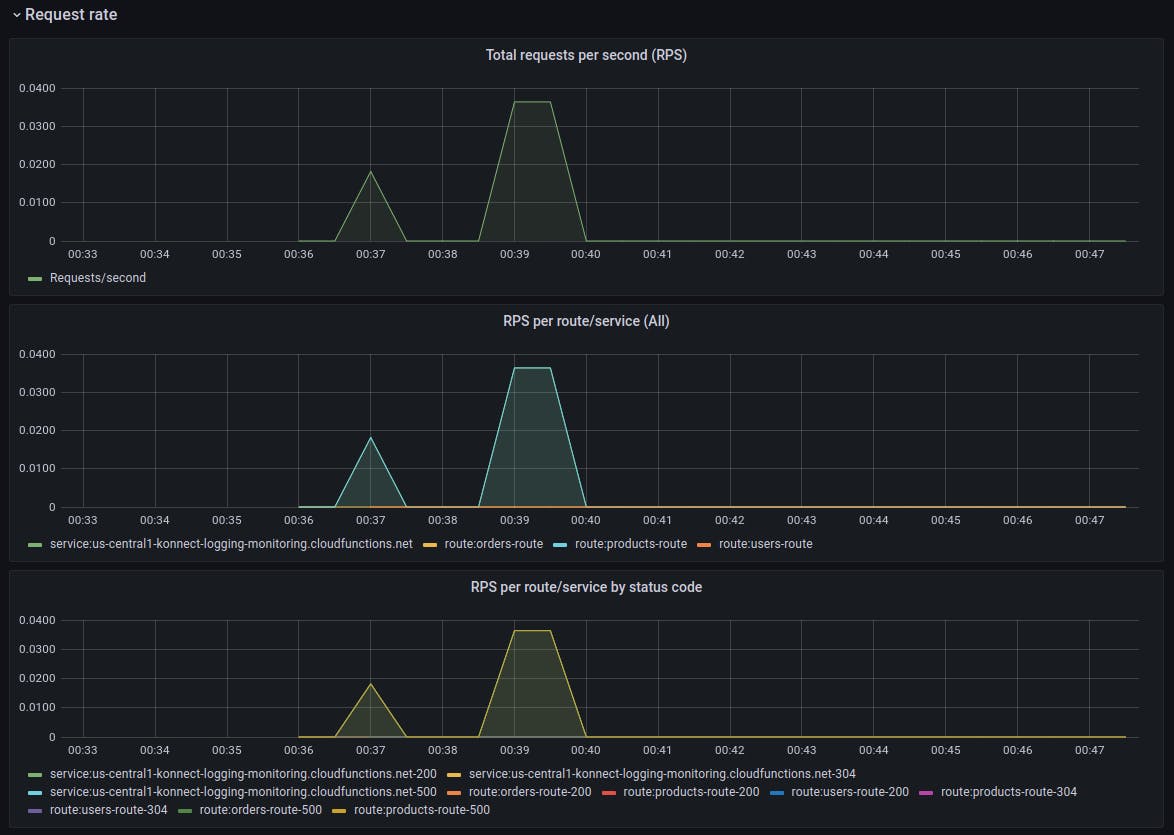

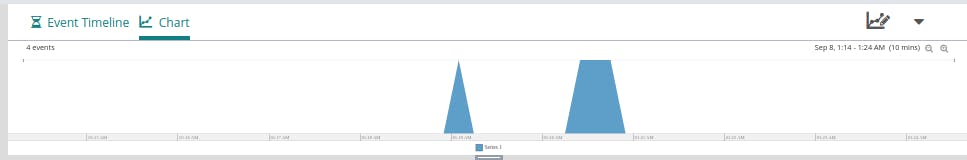

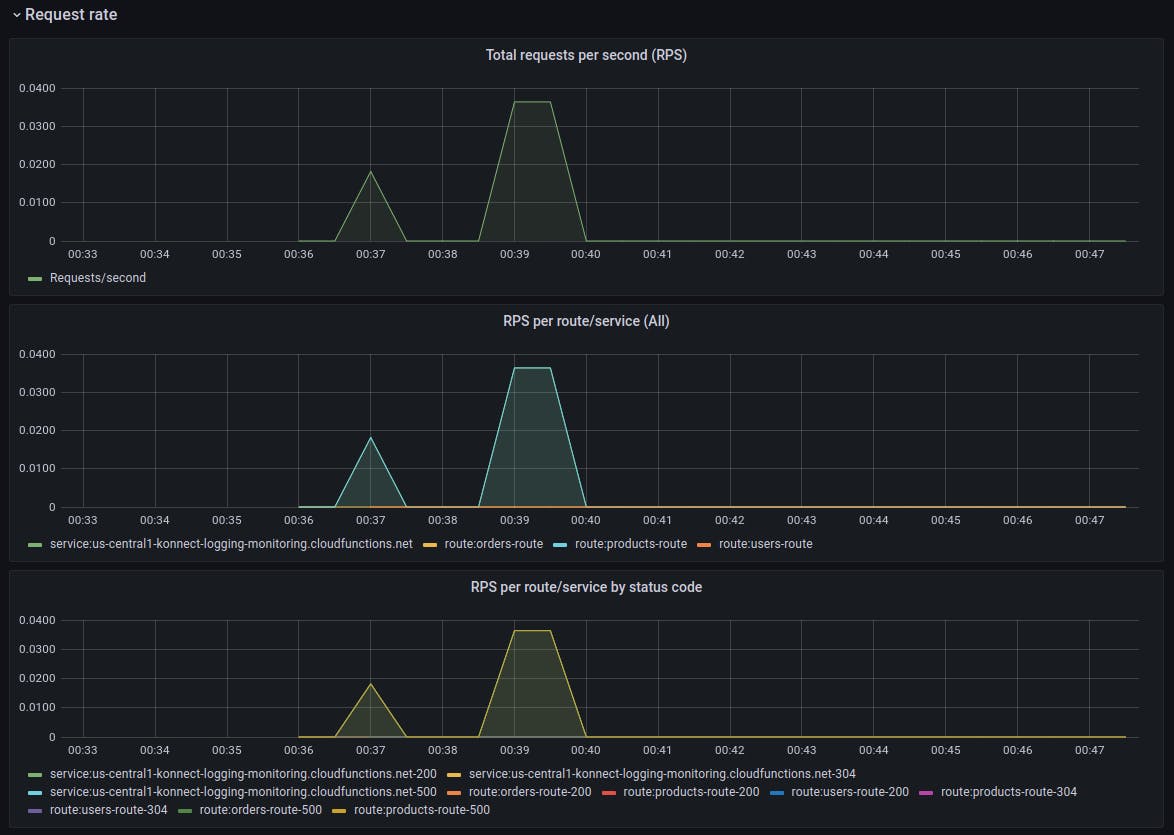

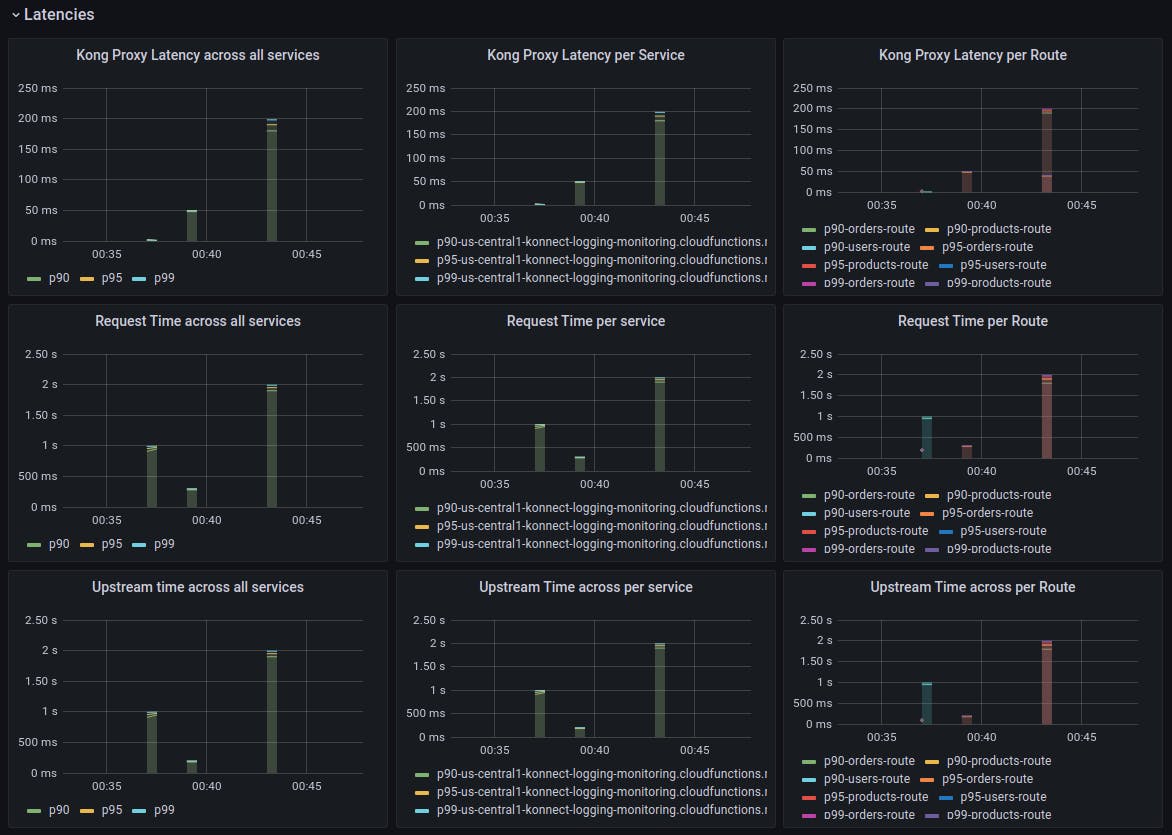

Here are some beautiful visualizations of monitoring metrics from our three services, managed by Kong Konnect and accessed through Kong Gateway:

Microservices monitoring and metrics—done! However, let's not forget that we still need to hook our three services into a logging solution.

Adding Logging

In Kong Konnect, we add the Loggly plugin to our services just like we did for Prometheus. First, we navigate to our service and version, and then we click on New Plugin. We search for the Loggly plugin and enable it.

We can add a tag to our log entries for better grouping. For Config.Key, we make sure to enter the Customer Token associated with our Loggly account.

With the plugin added, we're done!

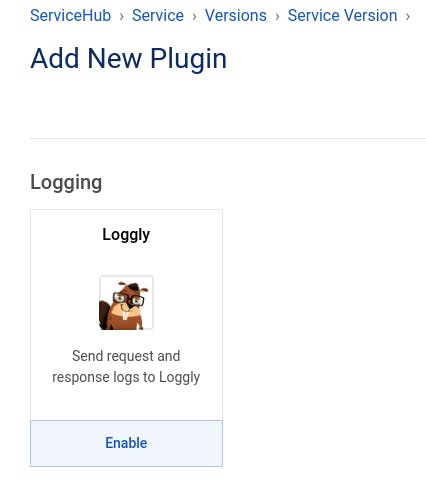

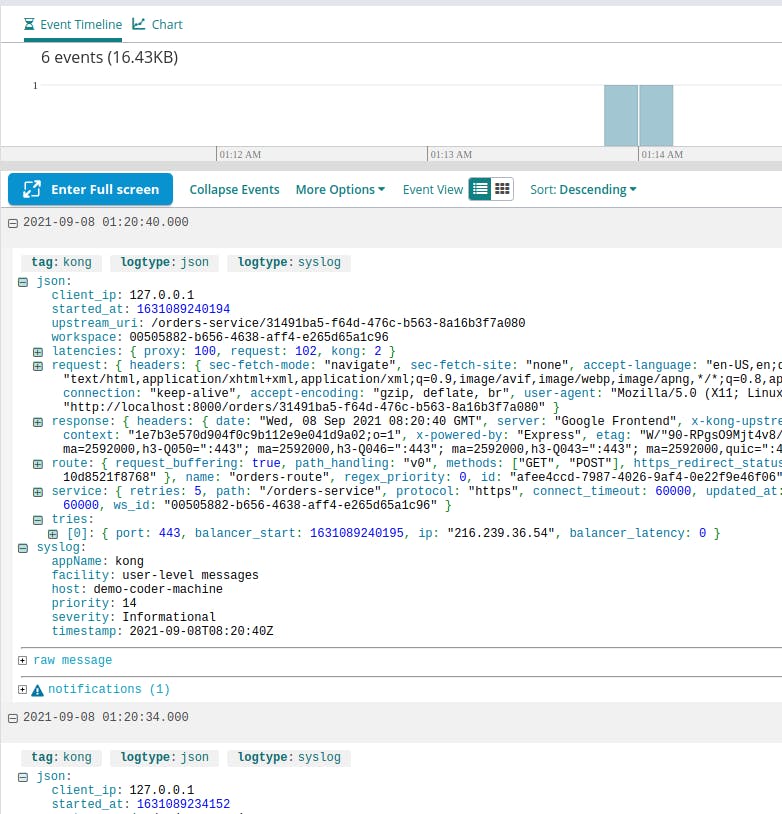

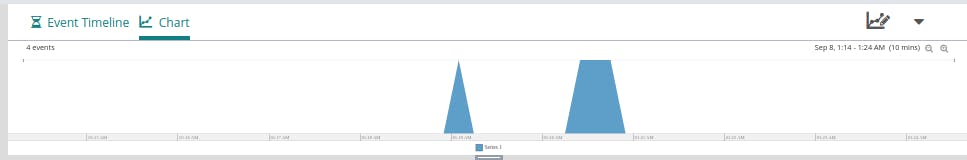

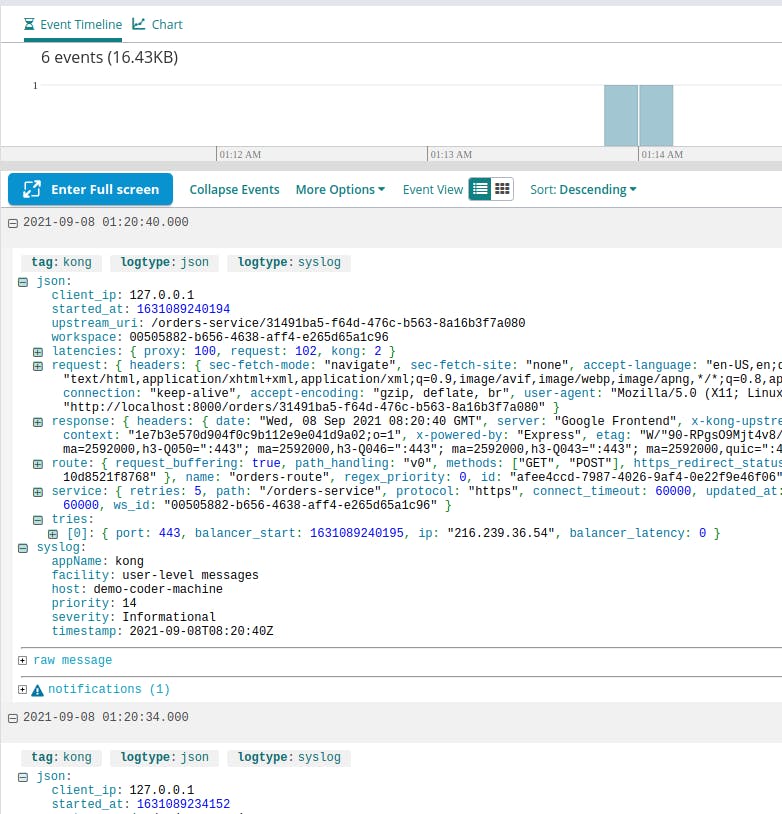

After making a few requests to our services, we can check the Log Explorer at Loggly to see what has shown up. We see new entries from our recent requests:

The Easy Path to Microservices Monitoring and Logging

If your organization values high-velocity and high-quality software development, this means moving away from error-prone and time-consuming manual processes. You shouldn't leave simple must-haves like microservices monitoring and l