Almost every aspect of business is moving to digital, internet-based solutions. It’s happening from the ground up, starting with developers. You're building applications for your organization, and you're racing to get software and services to market faster. The faster your company moves, and the more you build, the more likely you are to need an API gateway governance strategy.

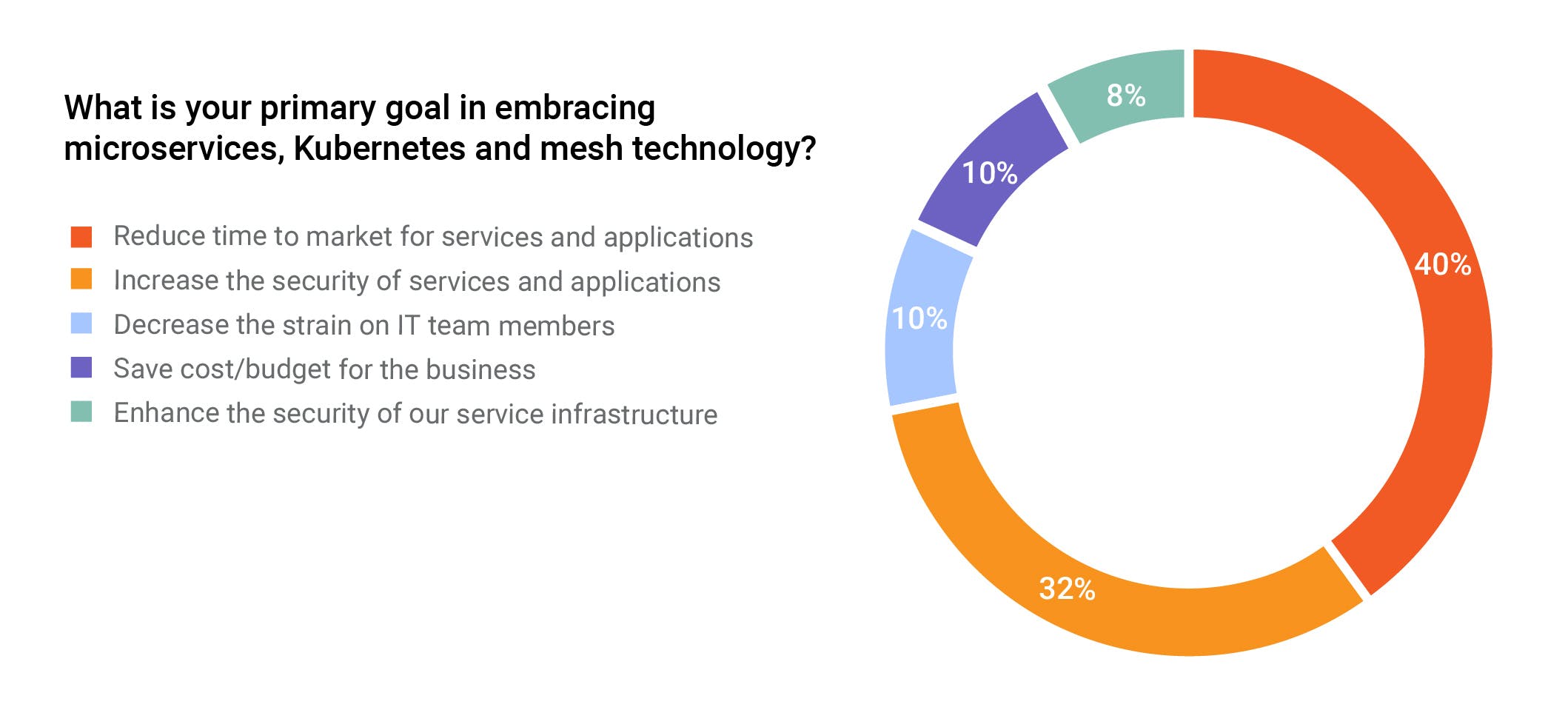

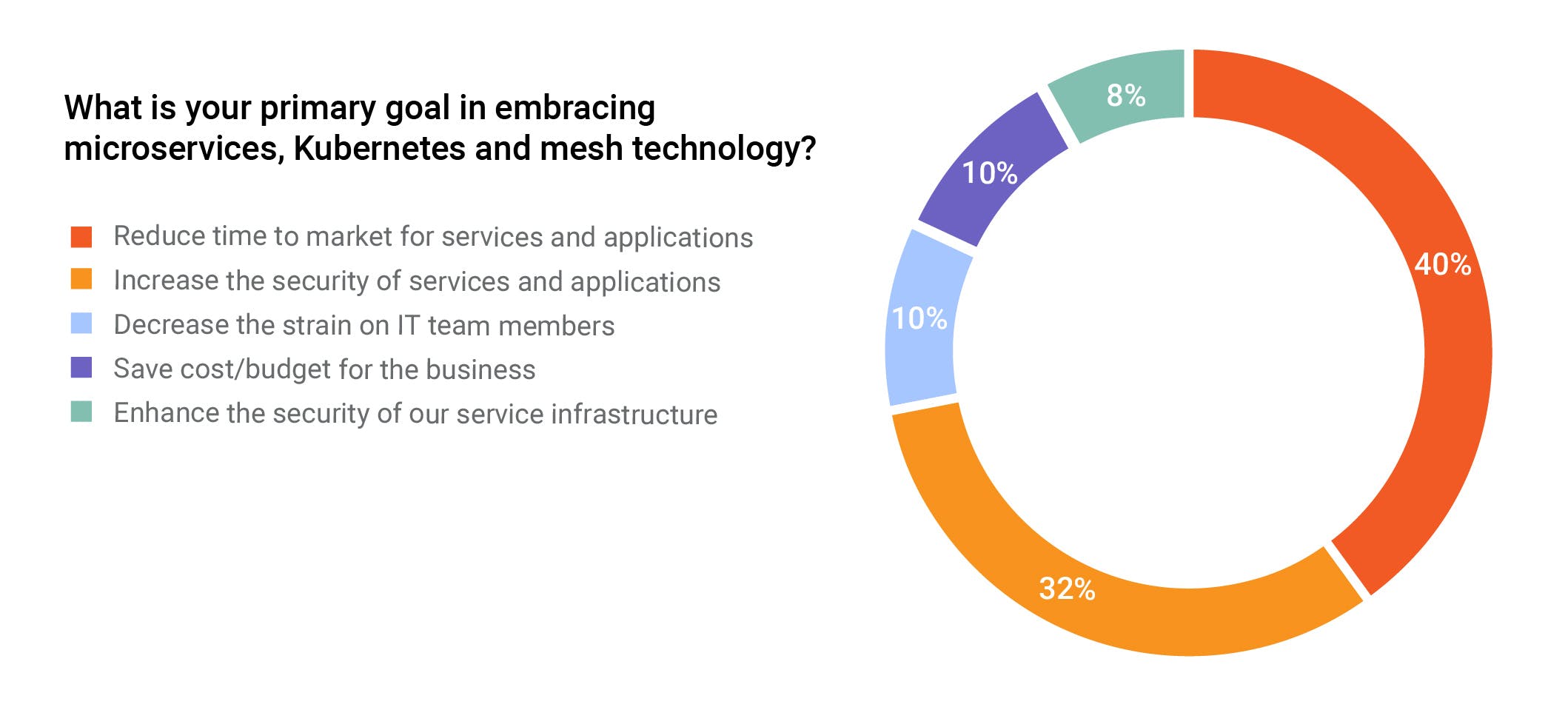

Forty percent of attendees at our Destination: Zero-Trust virtual event said their primary reason for embracing microservices, Kubernetes and service mesh technology was to reduce the time it takes to get products to market.

Companies move faster by making more and more services.

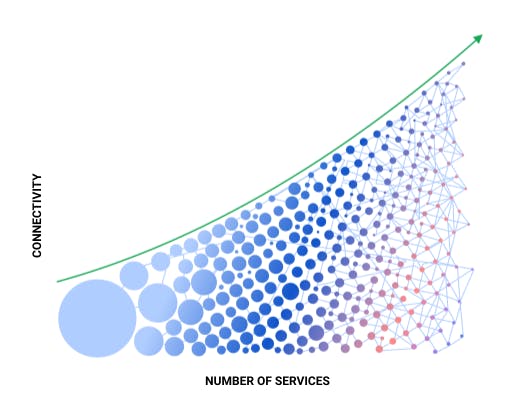

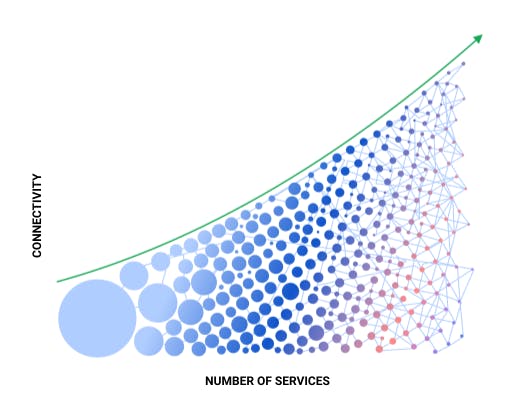

You can see this visualized in the diagram. As you move to the right, the circles get smaller and more numerous, representing more services. That’s because you can build services faster and deploy them in a more distributed manner to add resiliency and features.

As you move to the right, your control and visibility go down. It's as if you're juggling the small number of balls on the left versus the large number of balls on the right. It’s going to be much more challenging to handle the paradigm on the right. And that’s what a lot of customers and businesses are finding out. API governance needs to be compatible with this new paradigm.

It’s also rare for an API to be perfect on day one. Companies are finding that they need to apply API governance to each iteration so that their APIs become consumable as quickly as possible.

Suppose you’re building APIs as an interface to the microservices on the right-hand side of the above diagram. In that case, your API gateway needs to give you the capability to govern all the circles on the right-hand side of this picture. And it has to be able to deploy alongside your application code using automation to enable the creation of a consumable API.

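Furthermore, the lines connecting the circles in this picture represent the connections between your services. The number of possible connections grows much faster than the number of services: n services can have up to n(n-1)/2 pairwise connections, so each new set of microservices adds disproportionately more links to govern.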
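To put rough numbers on that growth, here is a minimal sketch that counts the upper bound on pairwise connections. It assumes every service could talk to every other service, which real architectures rarely do, so treat the result as a worst case rather than a measurement.

```python
def max_connections(service_count: int) -> int:
    """Upper bound on distinct service-to-service links among service_count services."""
    if service_count < 0:
        raise ValueError("service_count must be non-negative")
    # Each pair of services can share one link: n * (n - 1) / 2 pairs.
    return service_count * (service_count - 1) // 2


for n in (5, 10, 50):
    print(n, "services ->", max_connections(n), "possible connections")
```

Going from 5 to 50 services multiplies the service count by 10 but the possible connections by more than 100, which is why per-connection, hand-built governance stops scaling.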

Connectivity Types

As your microservices grow in quantity, you’re going to end up with three types of connections.

- Edge Connectivity: Providing an API to your external customers and partners

- Cross-App Connectivity: Letting other internal teams and applications reuse your API's functionality, so no one is reinventing the wheel

- In-App Connectivity: Running service-to-service traffic where there may not be a published API, but your developers still need a reliable, trusted network to make service-to-service calls securely

In all three of these scenarios, your organization will want to automate standards so developers can build their API authentication and authorization, network reliability, failover and other types of "standard" API governance requirements without taking away the autonomous nature of APIOps.

How Kong Helps Organizations With API Gateway Governance

1) Automation

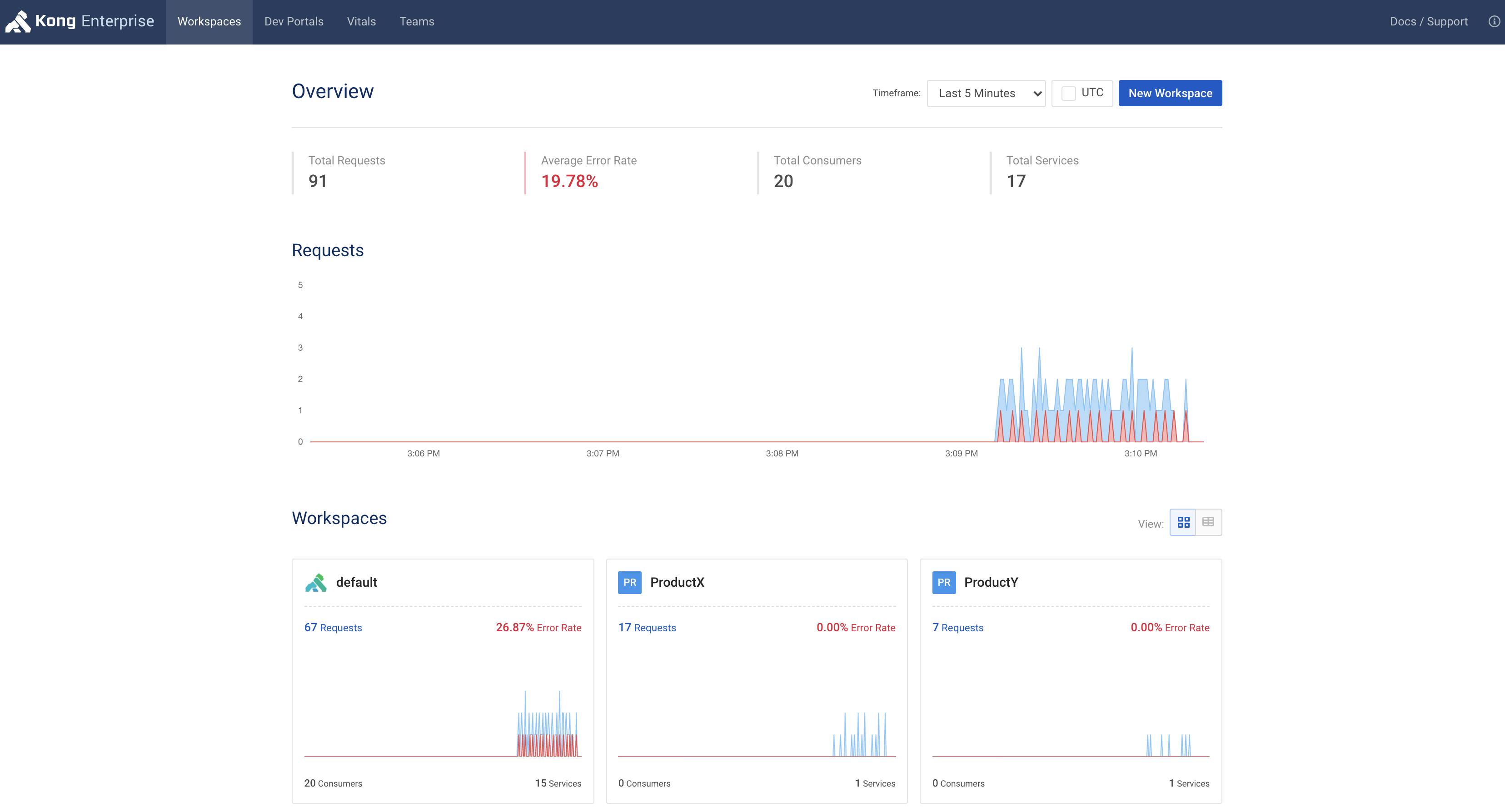

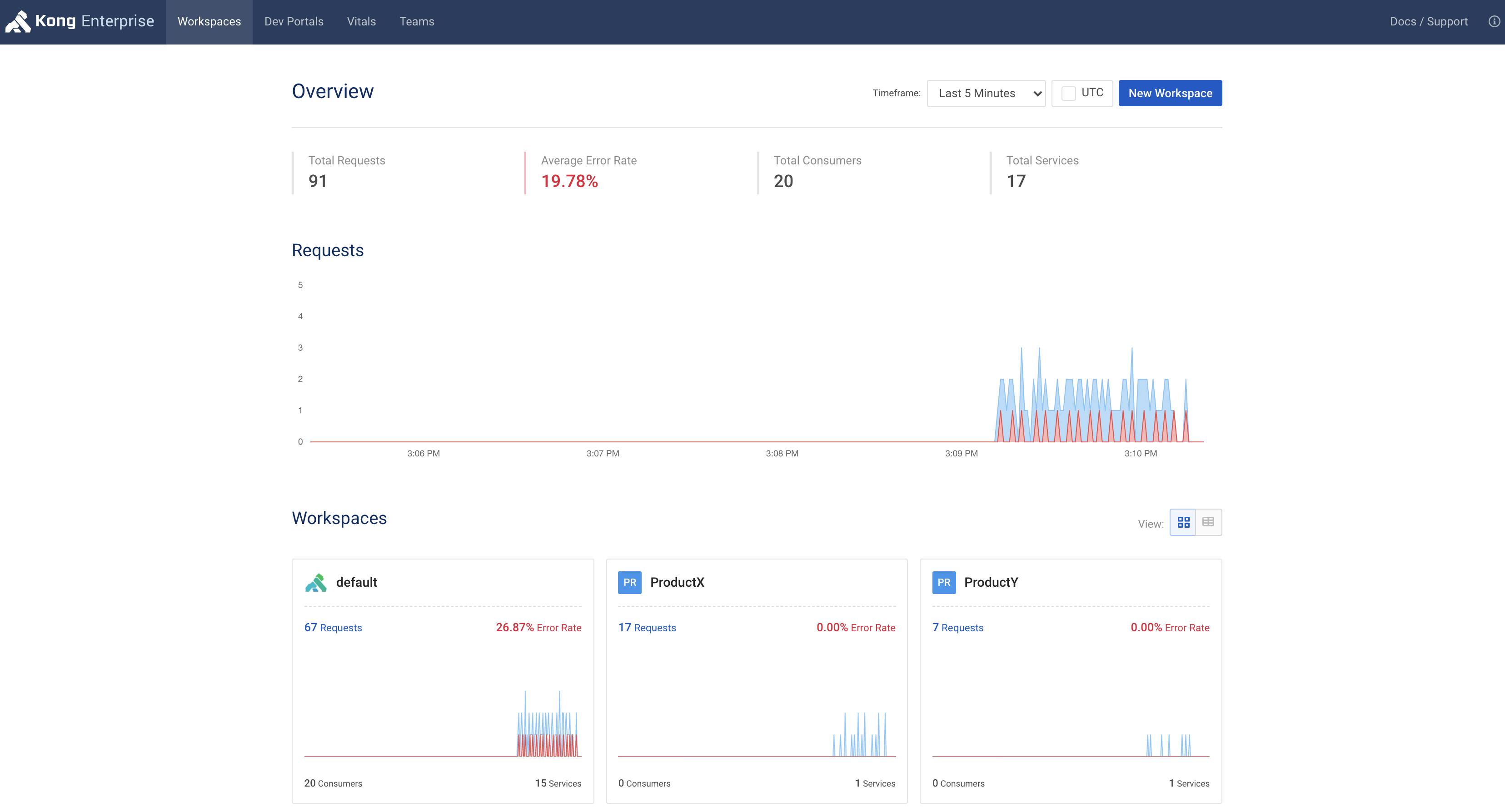

You should govern access controls to your API gateway's configuration. The Kong admin API empowers your developers to quickly deploy policy alongside their code using automated scripts, declarative configuration and the GUI. Kong enforces role-based access control (RBAC) to ensure that your developers can only change the API service definitions and policies for specific workspaces for which they have permissions.

Kong-Admin-API

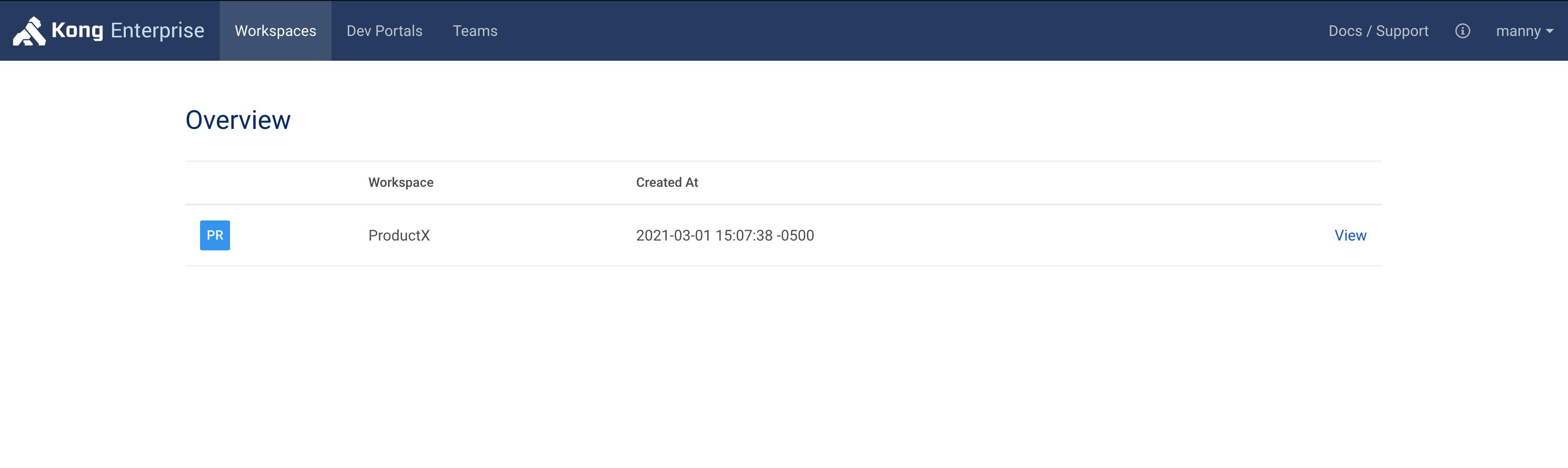

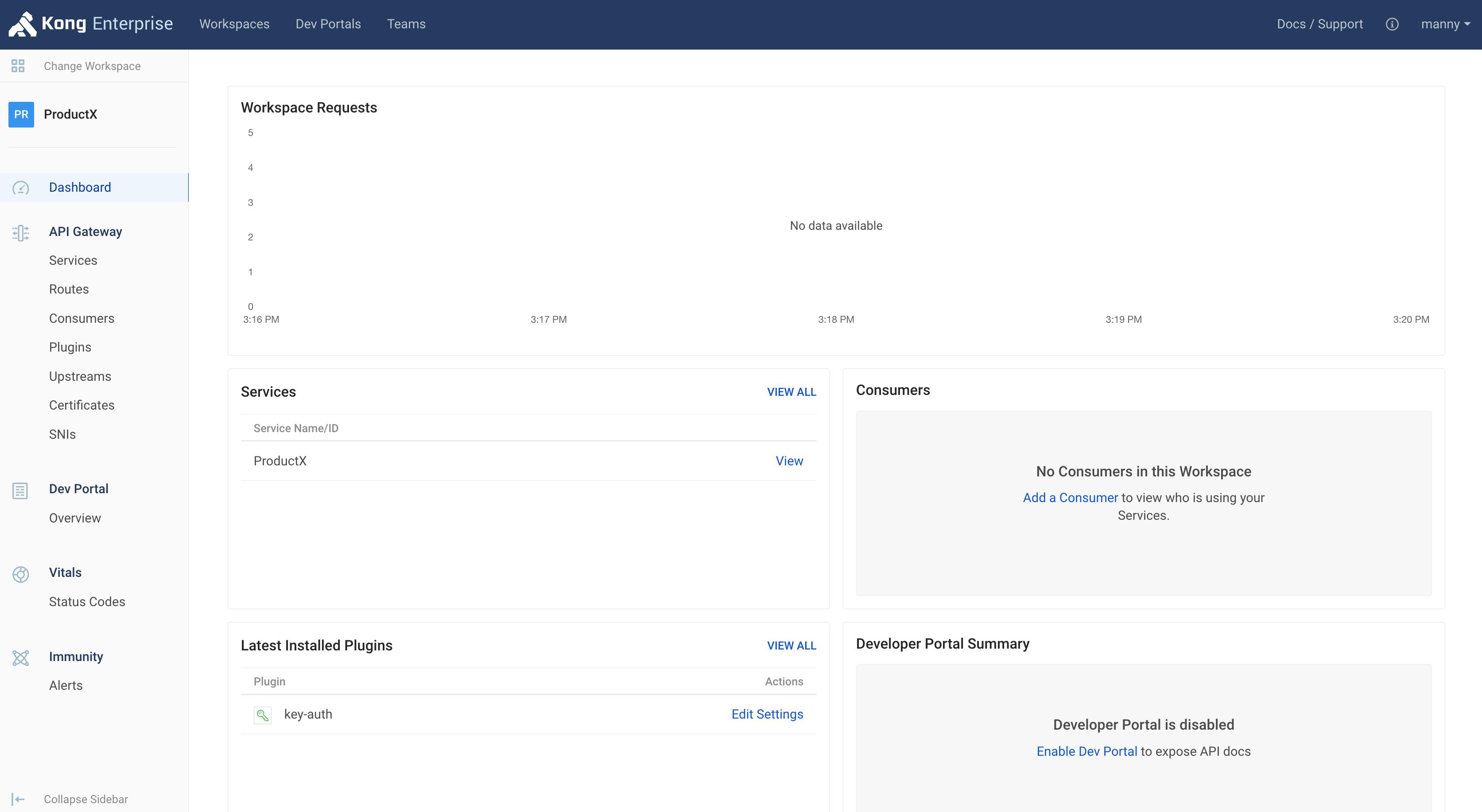

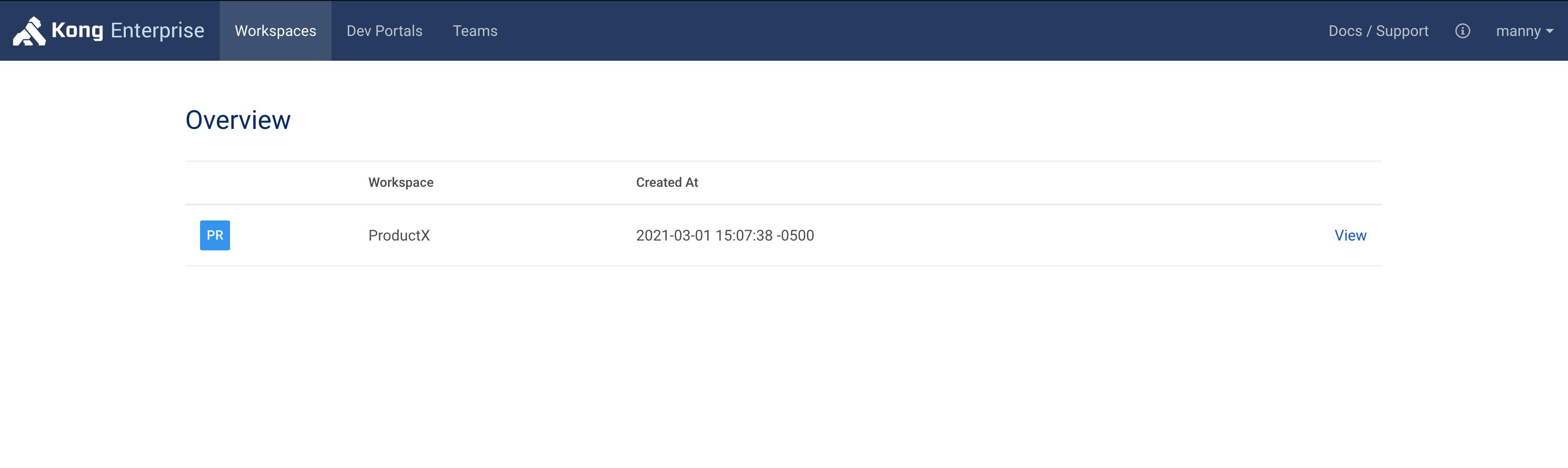

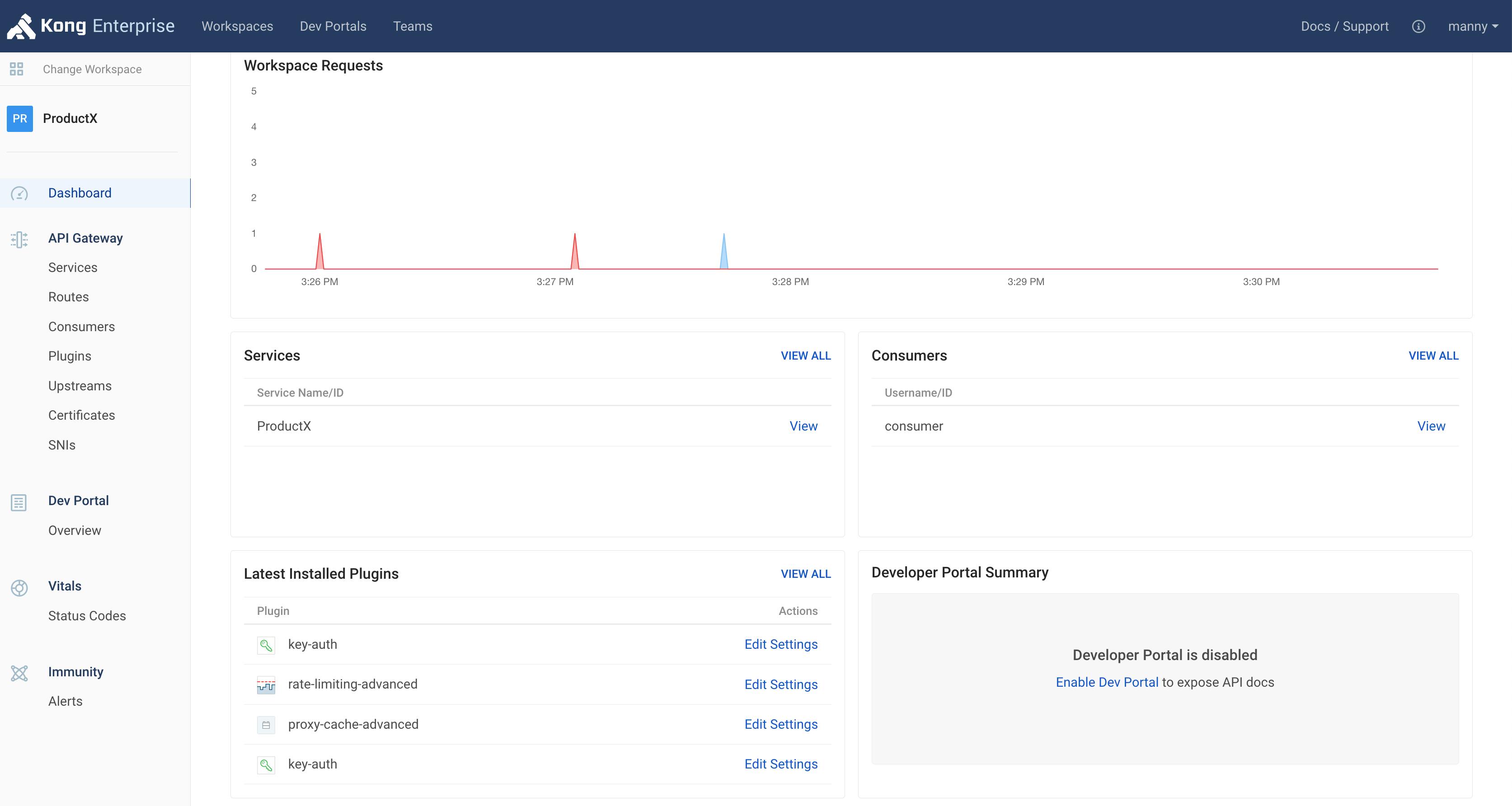

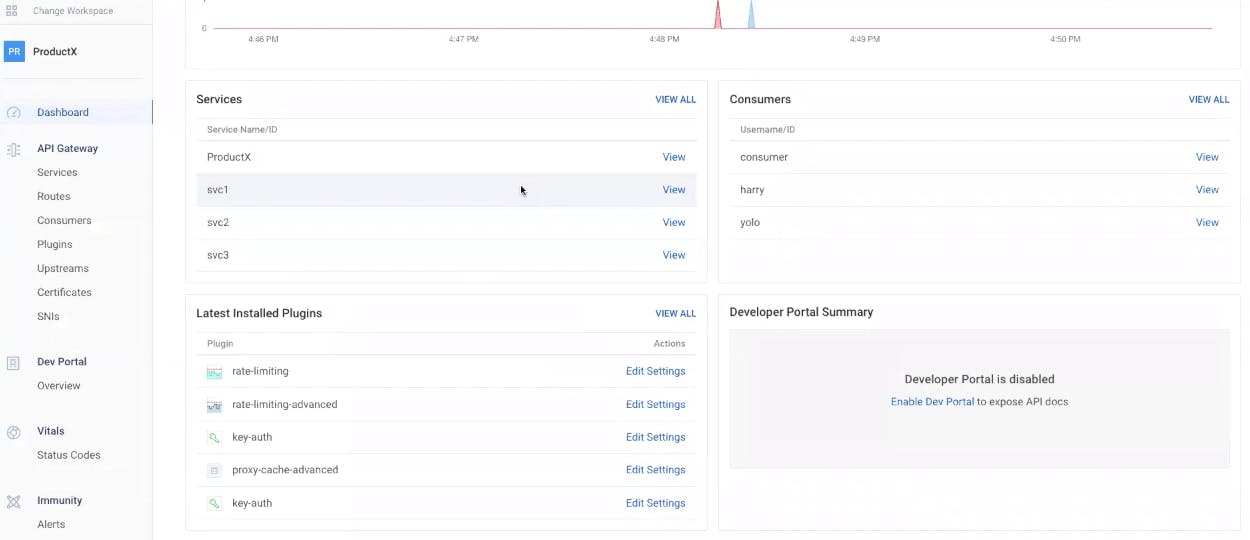

As a super administrator, you can see all the different workspaces in your environment. But as an app developer working on ProductX, you should only be able to see the workspace that applies to you. In this case, that would be the ProductX workspace.

With RBAC in Kong, you can restrict the ProductX developer to their own workspace (as seen below).
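As a rough sketch of what workspace scoping looks like from a script, the snippet below builds (but does not send) an Admin API request that is prefixed with the workspace name and carries an RBAC token in the `Kong-Admin-Token` header. The Admin API address and the token value are assumptions for illustration; substitute the ones from your own environment.

```python
import json
import urllib.request

ADMIN_URL = "http://localhost:8001"  # assumption: address of your Kong Admin API


def workspace_request(workspace, path, token, payload=None, method="GET"):
    """Build an Admin API request scoped to a single workspace."""
    url = f"{ADMIN_URL}/{workspace}/{path.lstrip('/')}"
    data = json.dumps(payload).encode() if payload is not None else None
    req = urllib.request.Request(url, data=data, method=method)
    # RBAC token of the calling user; Kong decides what this user may see or change.
    req.add_header("Kong-Admin-Token", token)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


# Hypothetical token for a ProductX developer.
req = workspace_request("ProductX", "services", token="productx-dev-token")
print(req.get_method(), req.full_url)
# To execute against a real gateway: urllib.request.urlopen(req)
```

With RBAC enabled, the same token used against a different workspace prefix should be rejected by Kong, which is what keeps each team inside its own lane.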

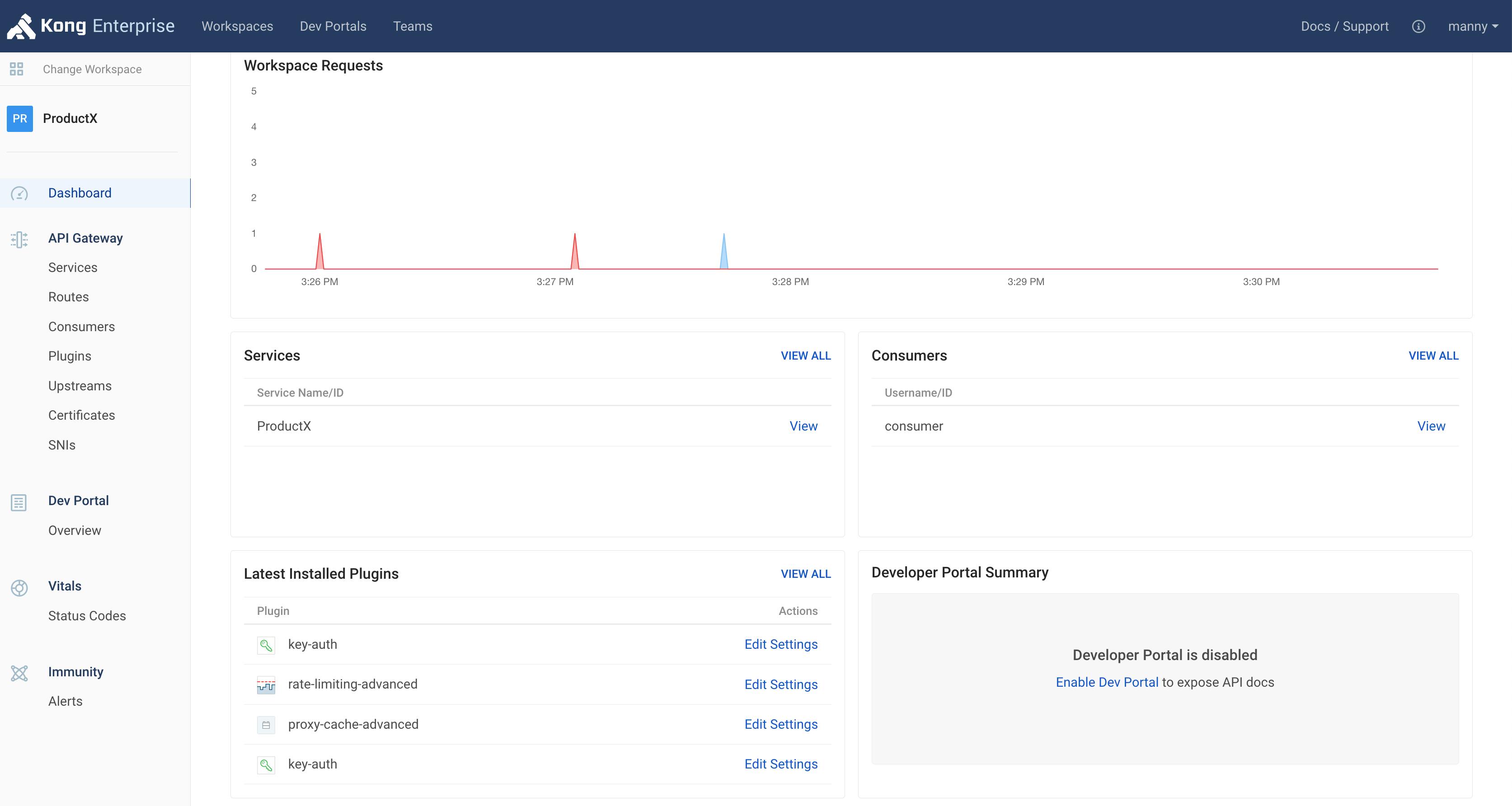

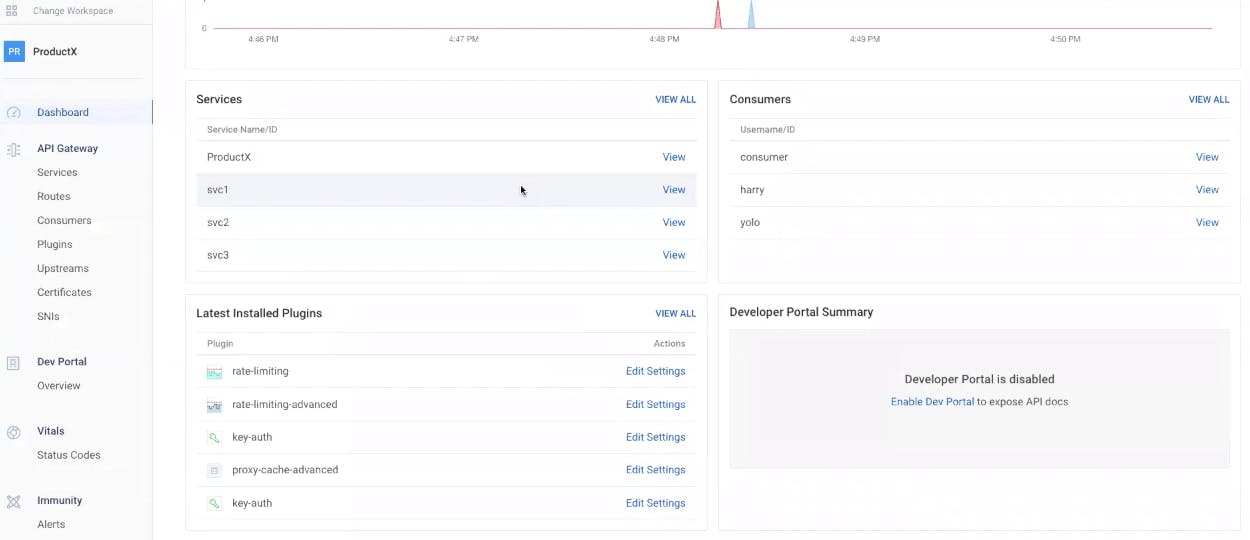

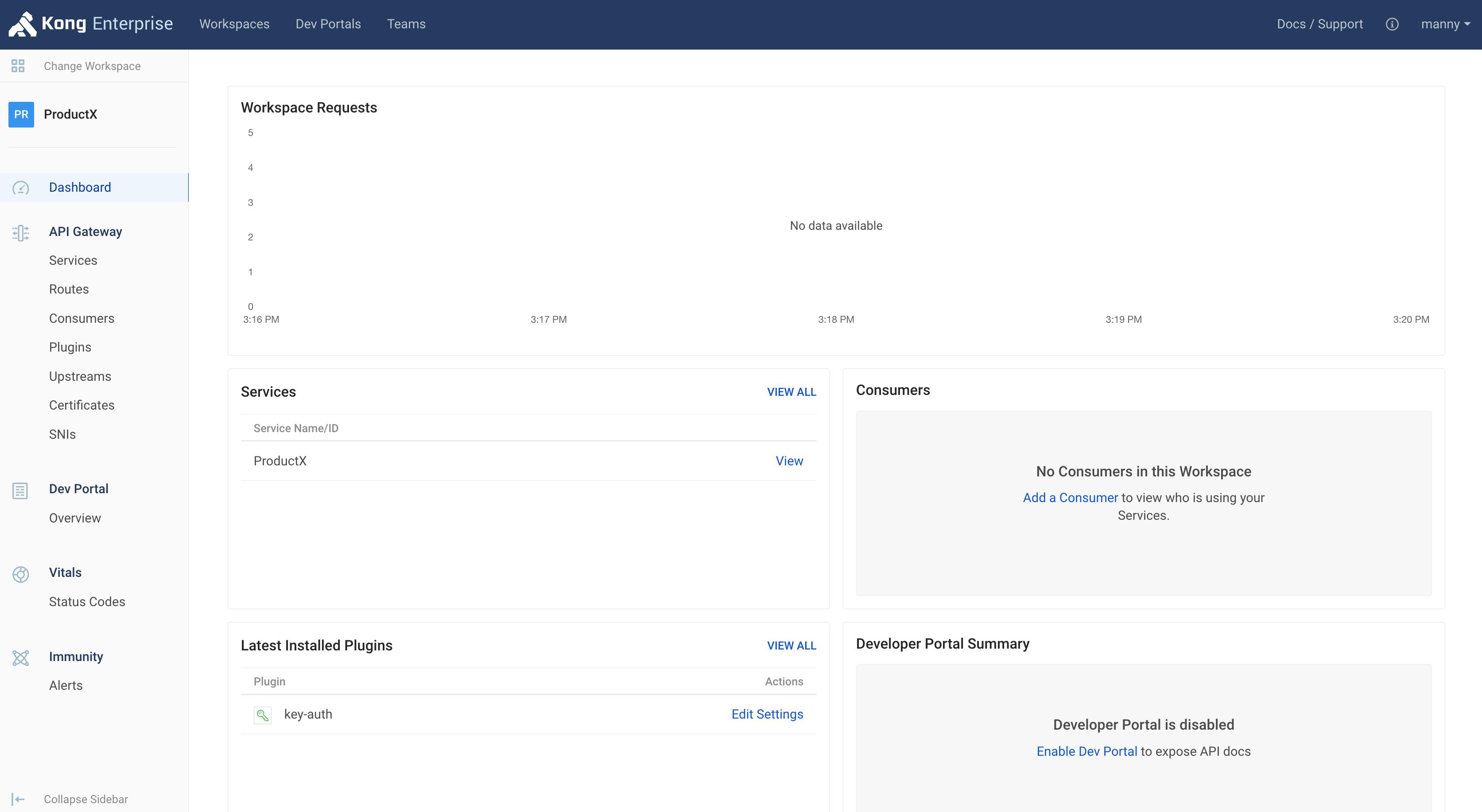

When the ProductX developer goes to the workspace dashboard, they can see all the frontends, backends and policies that Kong is handling for that workspace.

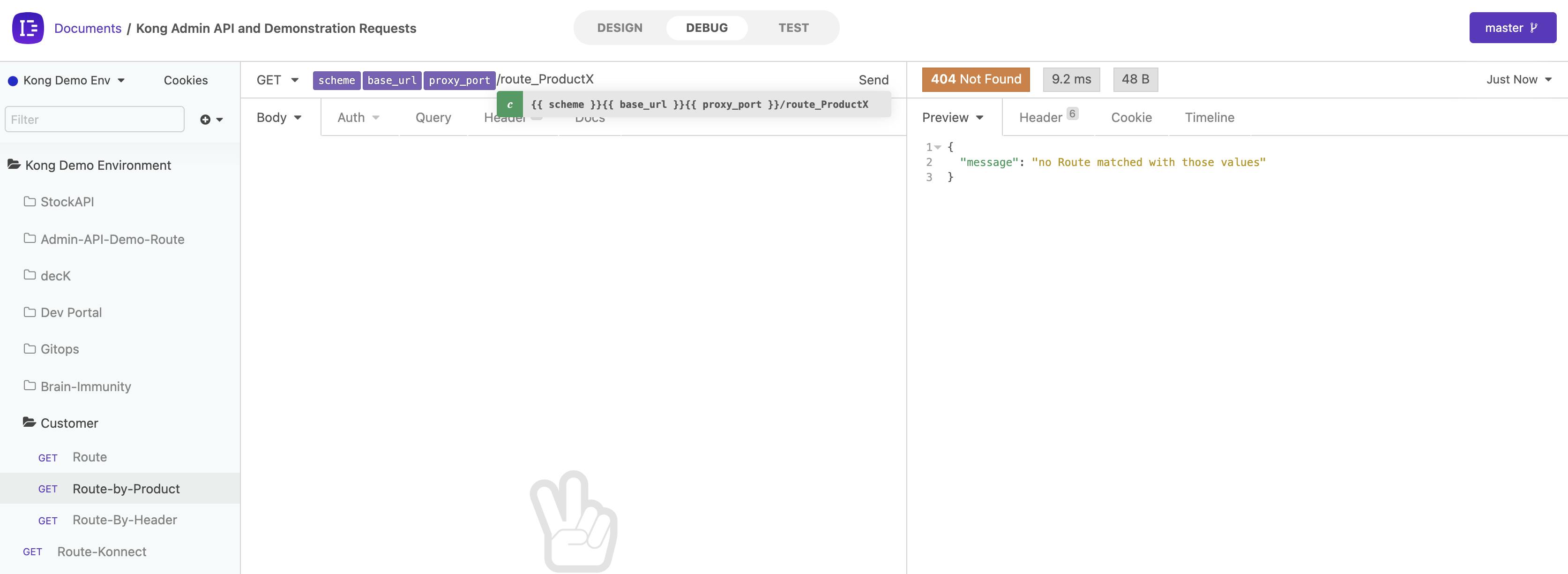

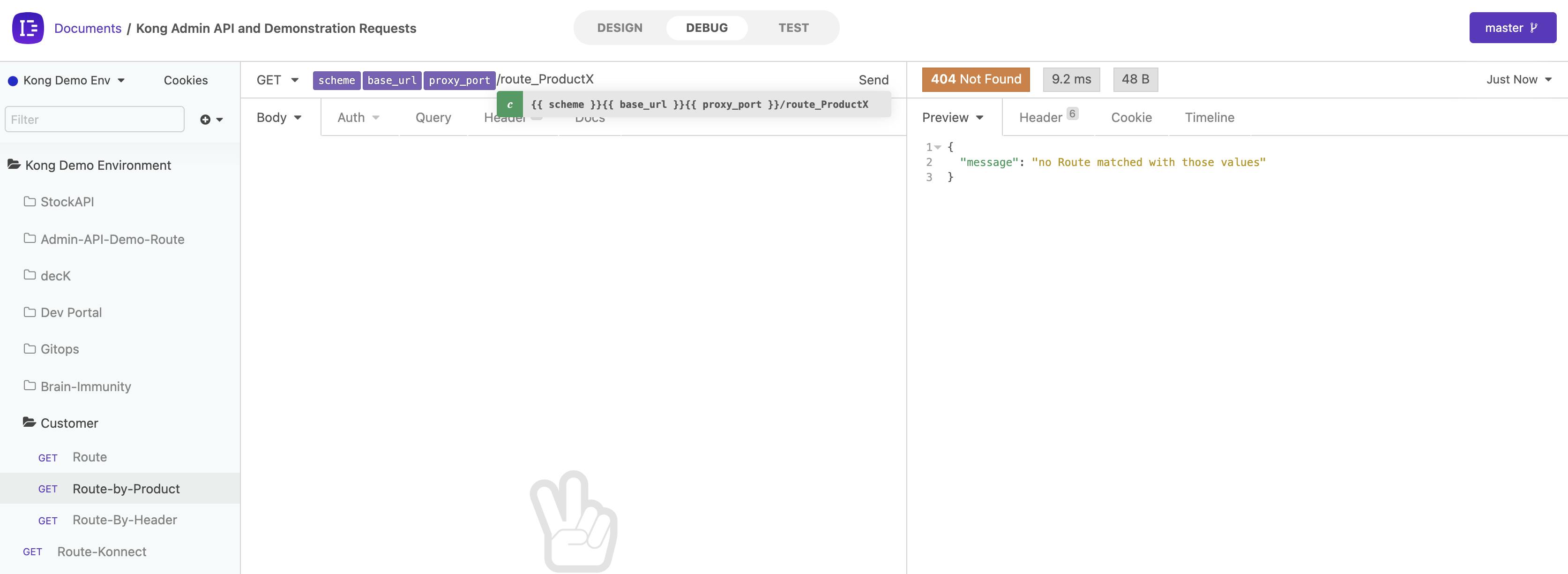

In my example, I have a route in my application called "route_productX" that I want to expose through Kong. Right now, that route isn't available and cannot be consumed, as shown below.

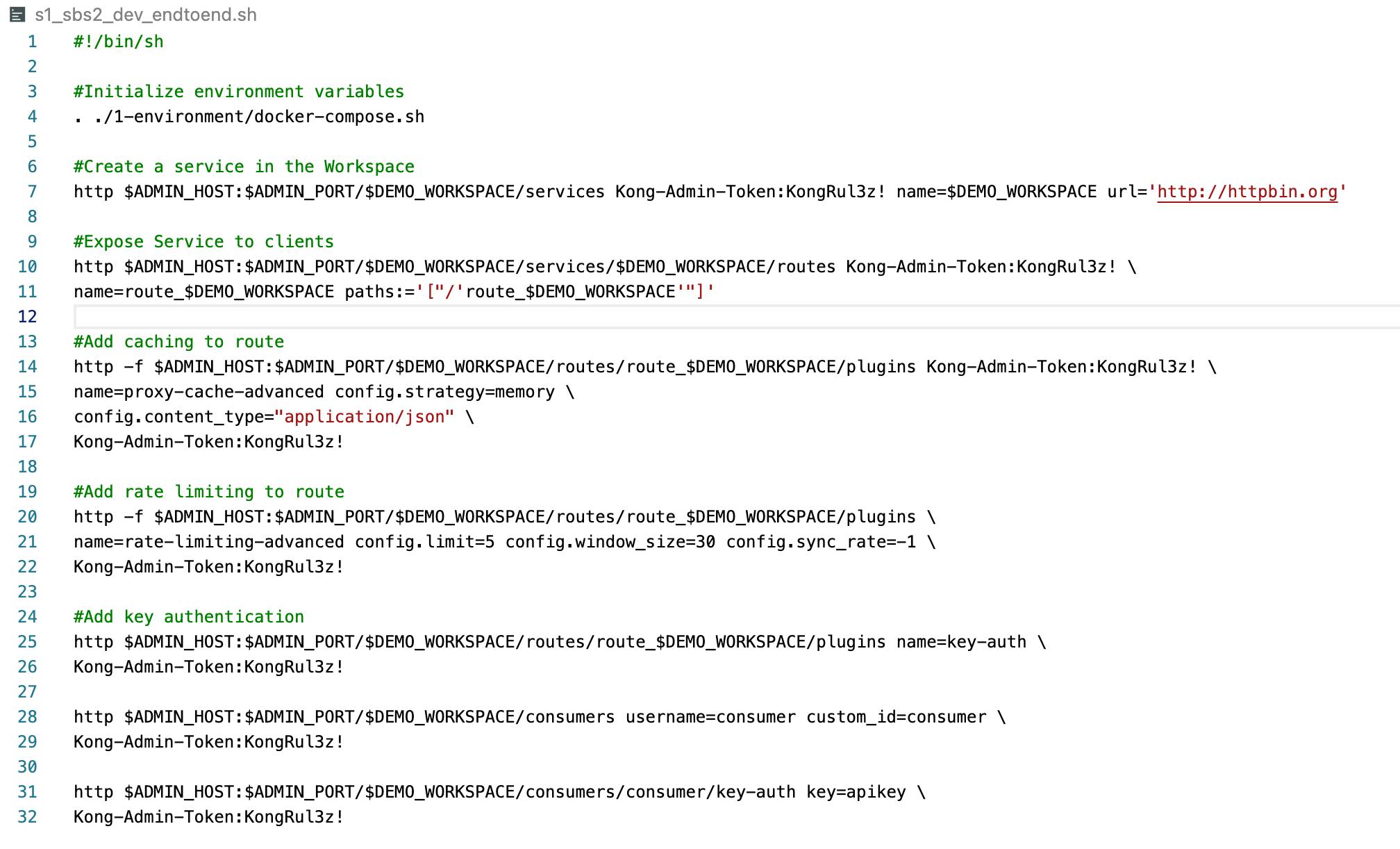

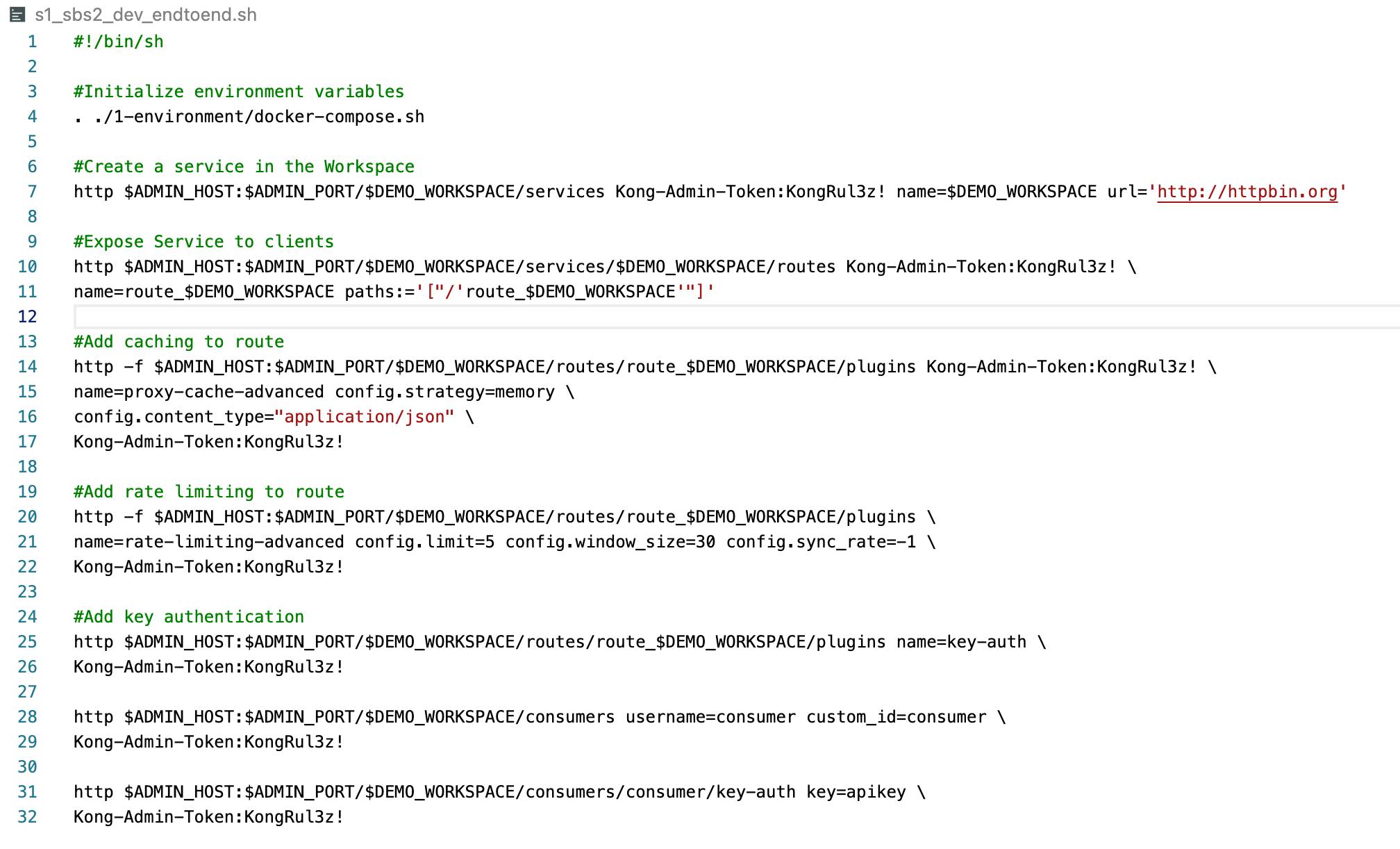

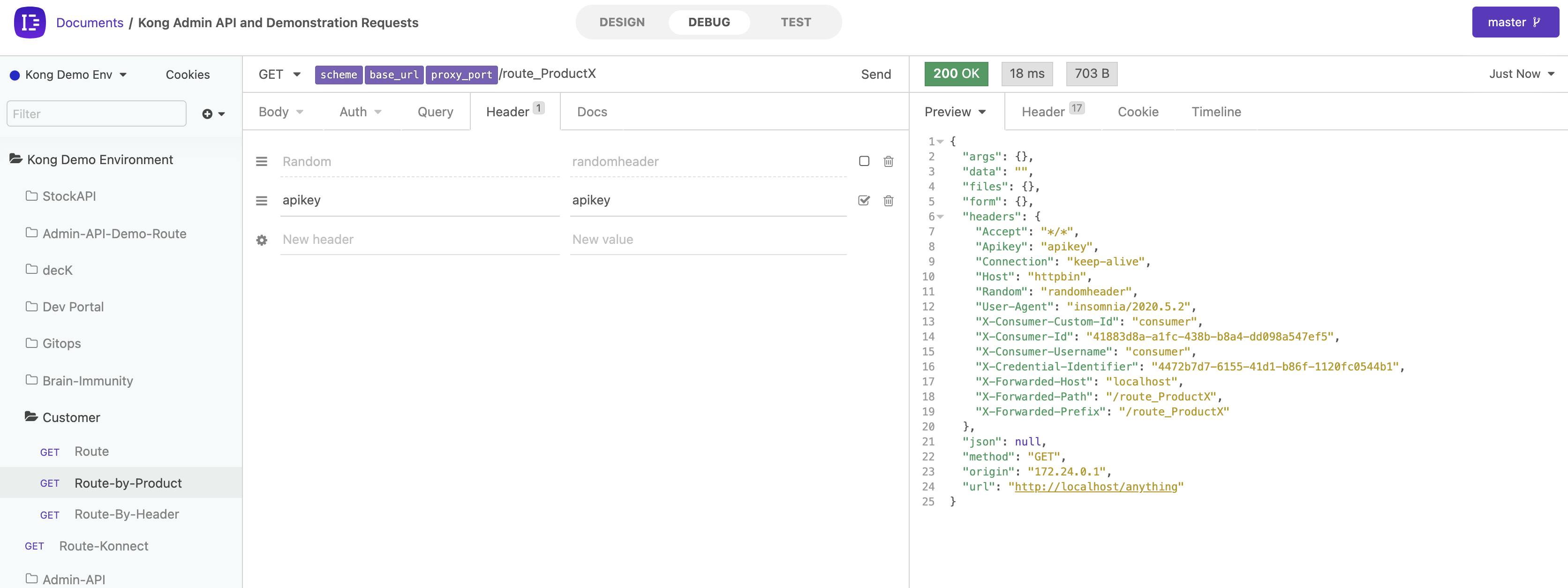

As a developer, I can quickly configure Kong programmatically to expose my service with the frontend route I choose and offload common functionality to Kong. Then I can build and test my service. I can push each iteration of my service code with GitOps and automation. Doing so will automatically configure Kong for me. For example, if I run the script below, I can configure my ProductX workspace to expose the route I need.

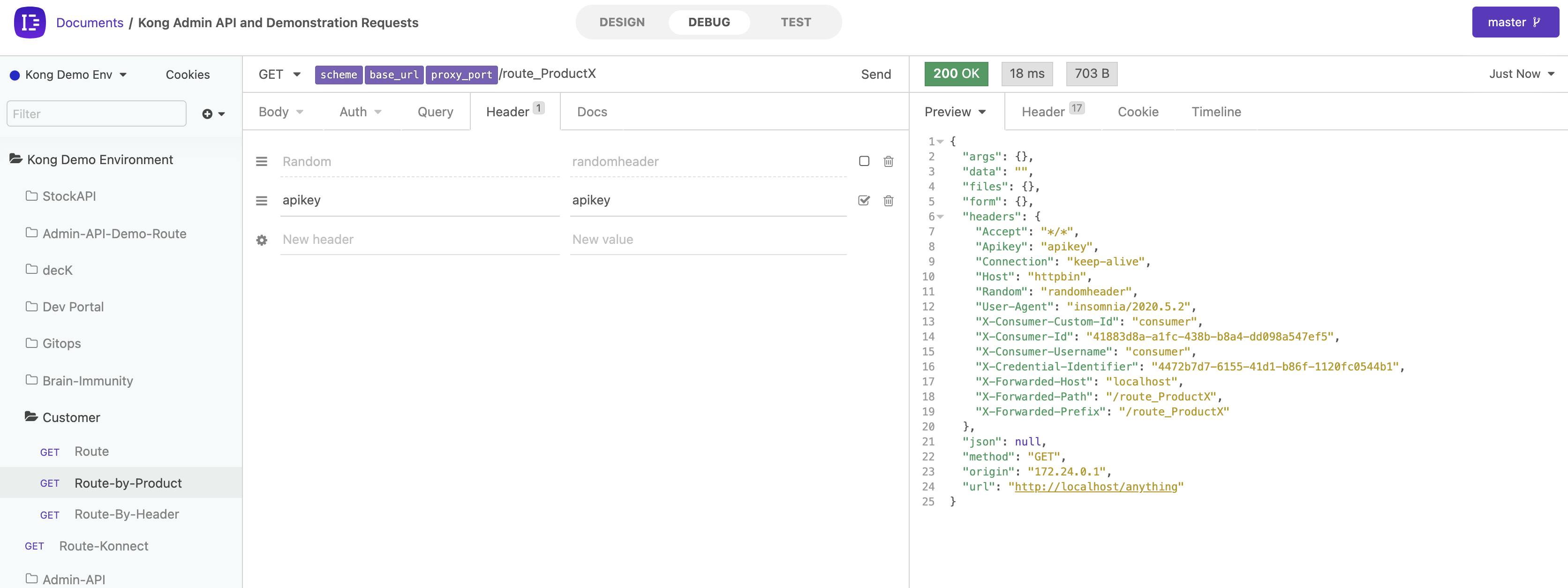
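The script in the screenshot is not reproduced here, but a comparable sketch in Python might look like the following. It builds three Admin API calls in the ProductX workspace: create a service, attach the `route_productX` route, and enable the `key-auth` plugin. The service name, upstream URL, Admin API address and token are hypothetical placeholders, and the requests are only built, not sent.

```python
import json
import urllib.request

ADMIN_URL = "http://localhost:8001"   # assumption: Admin API address
WORKSPACE = "ProductX"
SERVICE = "productx-service"          # hypothetical service name
UPSTREAM = "http://productx.internal:8080"  # hypothetical backend URL


def admin_post(path, payload, token):
    """Build a POST against the Admin API inside the ProductX workspace."""
    req = urllib.request.Request(
        f"{ADMIN_URL}/{WORKSPACE}/{path}",
        data=json.dumps(payload).encode(),
        method="POST",
    )
    req.add_header("Content-Type", "application/json")
    req.add_header("Kong-Admin-Token", token)
    return req


def build_expose_requests(token):
    """Return the calls that expose the service and protect it with API keys."""
    return [
        admin_post("services", {"name": SERVICE, "url": UPSTREAM}, token),
        admin_post(
            f"services/{SERVICE}/routes",
            {"name": "route_productX", "paths": ["/route_productX"]},
            token,
        ),
        admin_post(f"services/{SERVICE}/plugins", {"name": "key-auth"}, token),
    ]


requests_to_send = build_expose_requests(token="productx-dev-token")
for req in requests_to_send:
    print(req.get_method(), req.full_url)
    # To apply against a real gateway: urllib.request.urlopen(req)
```

Keeping the calls in a script like this is what lets a pipeline replay them on every push instead of relying on someone clicking through the GUI.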

If I test my API now, the message on the right changes to say that no API key was found in the request. Not only is Kong exposing the API, but it is also adding a layer of API security. When I add an API key to my request, it works.
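From the client side, the difference between the rejected and the accepted call is a single header. The sketch below assumes the key-auth plugin is using its default key name, `apikey`, and that the proxy listens on `localhost:8000`; both the proxy address and the key value are placeholders.

```python
import urllib.request

PROXY_URL = "http://localhost:8000/route_productX"  # assumption: Kong proxy address


def build_api_request(api_key=None):
    """Build a request to the exposed route, optionally carrying an API key."""
    req = urllib.request.Request(PROXY_URL)
    if api_key is not None:
        # Header name assumes key-auth's default key name.
        req.add_header("apikey", api_key)
    return req


anonymous = build_api_request()                      # expected to be rejected by key-auth
authenticated = build_api_request("my-secret-key")   # hypothetical key value
print(anonymous.header_items())
print(authenticated.header_items())
# To send either request: urllib.request.urlopen(authenticated)
```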

As you're iterating and building your application, Kong's automated configuration allows your API developers to move very fast. They will only touch their workspace, enforcing API gateway governance.

Declarative Configuration and GUI

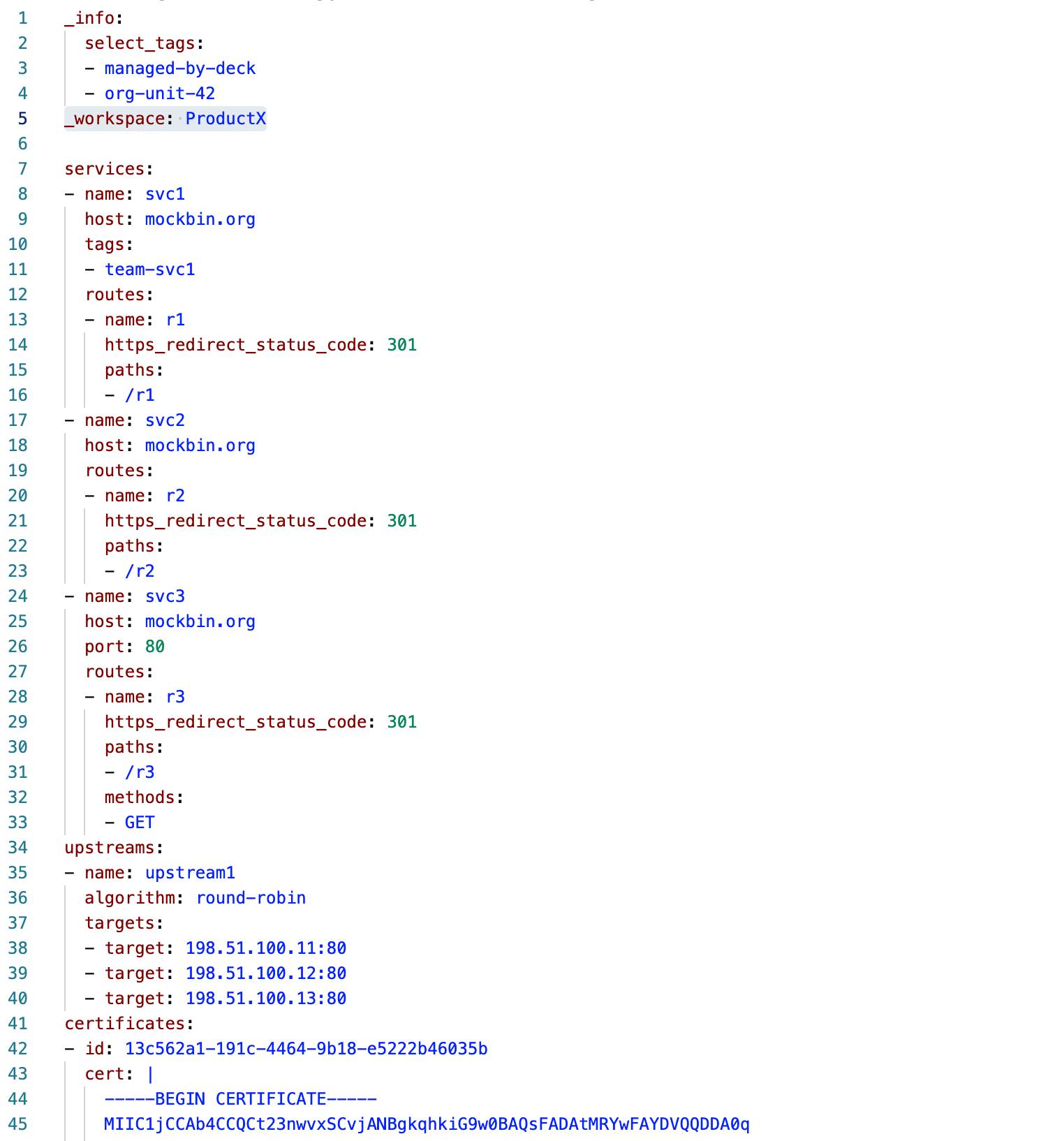

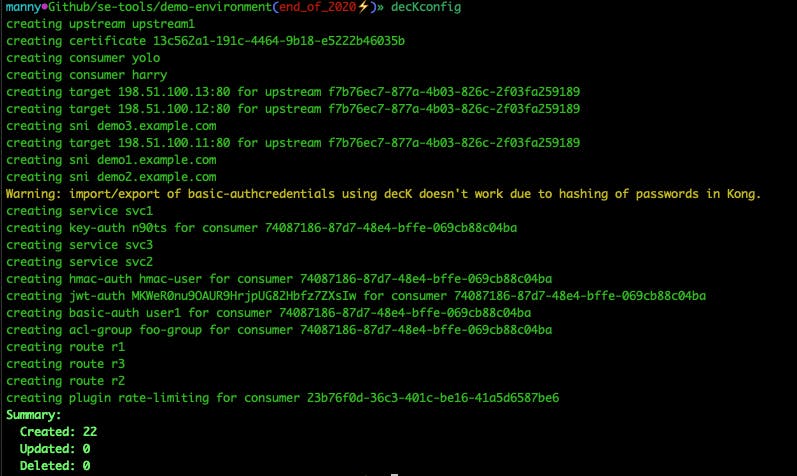

After developers have built their services in a development environment, those services typically move to a QA environment with more stringent uptime requirements. Kong enables this with declarative configuration, so a developer's workspace or an entire Kong cluster configuration can be delivered as code to promote changes between environments.

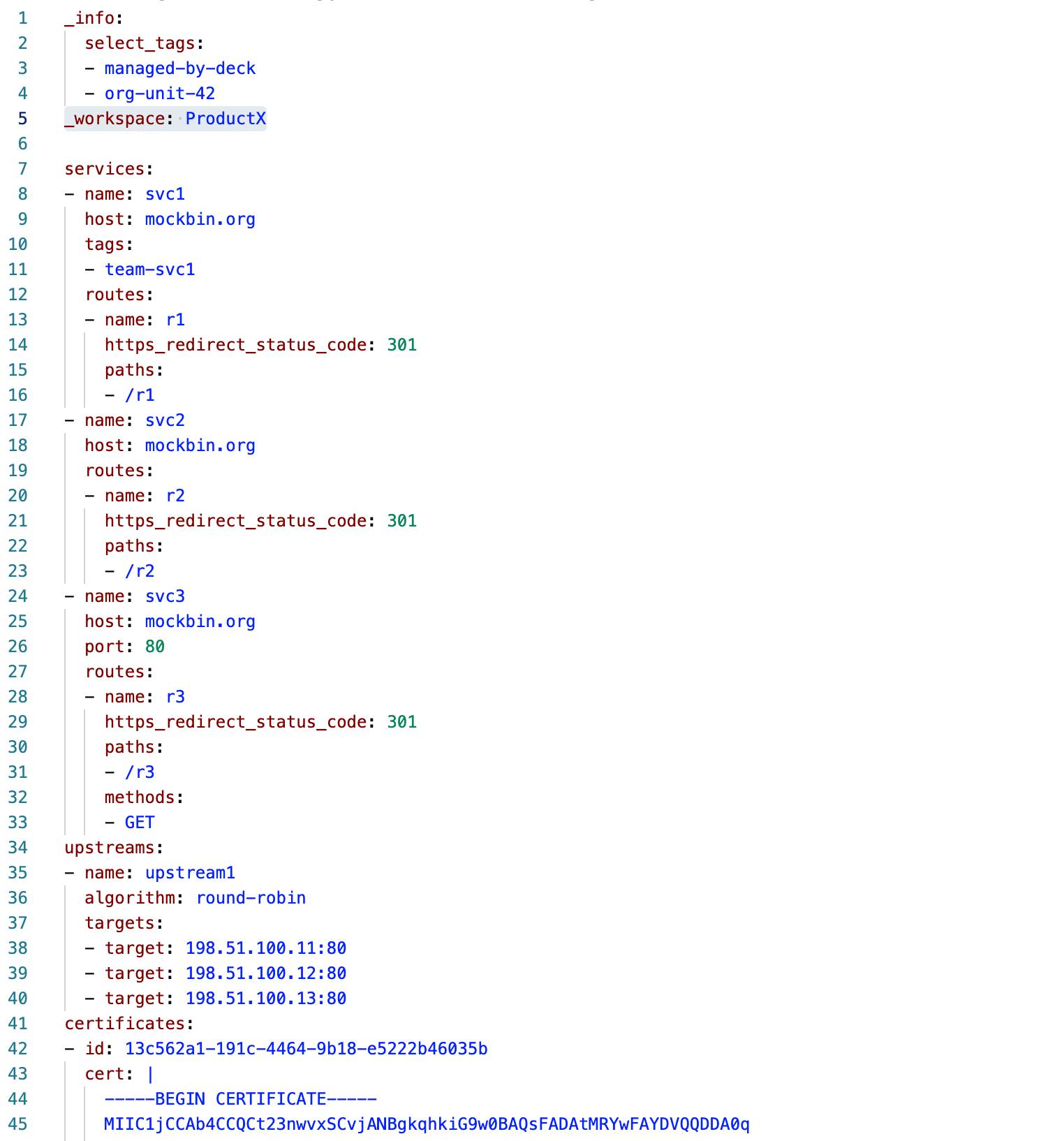

For example, the declarative file below will target a particular workspace and configure it with additional backend services and frontend routes and plugins. I’m going to target the ProductX workspace that I mentioned earlier.

For examples, go to our decK documentation.

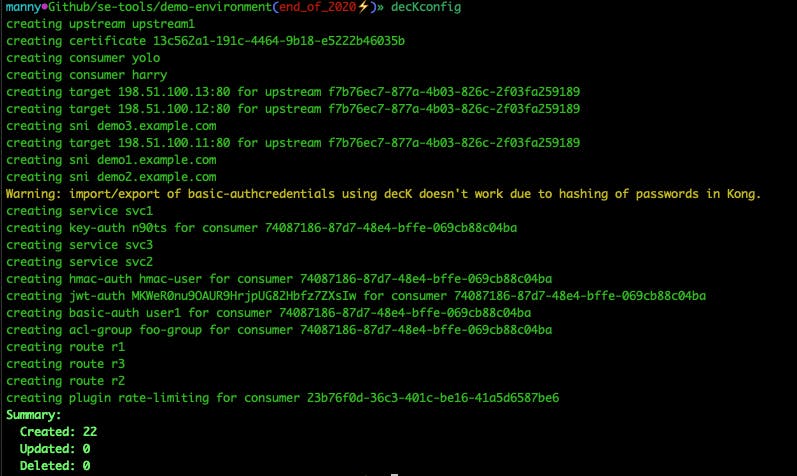
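The file in the screenshot is not reproduced here. As a stand-in, this sketch generates a small decK-style state document that targets the ProductX workspace through the `_workspace` key. The service name, upstream URL and `_format_version` value are assumptions; decK state files are normally written in YAML, and the JSON emitted here is used only to keep the example free of third-party dependencies.

```python
import json


def build_deck_state(workspace):
    """Return a minimal decK-style state for one workspace as a plain dict."""
    return {
        "_format_version": "3.0",   # assumption: matches your Kong/decK version
        "_workspace": workspace,    # limits the sync to this workspace
        "services": [
            {
                "name": "productx-service",               # hypothetical
                "url": "http://productx.internal:8080",   # hypothetical
                "routes": [
                    {"name": "route_productX", "paths": ["/route_productX"]}
                ],
                "plugins": [{"name": "key-auth"}],
            }
        ],
    }


state = build_deck_state("ProductX")
print(json.dumps(state, indent=2))
```

Because the state is plain data, it can live in the same repository as the service code and be reviewed like any other change.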

When I run decK's sync command, it uses my file to create 22 new objects within Kong.

You can see the new services and plugins added to my workspace in the GUI.

I've now effectively moved all the configurations from my API development environment to my testing environment. I was able to do this as code without touching anything in the administration console.

RBAC ensures that teams can only push changes to their own workspaces, which lets each team work autonomously and deliver functionality faster. The other benefit of declarative configuration is that it lets you detect configuration drift and roll back changes for each of your Kong clusters. All of these capabilities help your organization deliver a standard API experience and API governance. That way, you can provide APIs in a consumable manner that promotes wider API adoption and usage.
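To illustrate the idea behind drift detection, here is a conceptual sketch that compares the desired state from a declarative file with the live state of a gateway. This is not how decK is implemented; it only shows the comparison that a diff step performs. The entity names and configurations are hypothetical.

```python
def detect_drift(desired, live):
    """Compare two name -> config mappings and report what differs."""
    desired_names, live_names = set(desired), set(live)
    return {
        # Declared in the file but missing from the gateway.
        "create": sorted(desired_names - live_names),
        # Present in both places but configured differently.
        "update": sorted(
            name for name in desired_names & live_names
            if desired[name] != live[name]
        ),
        # Present on the gateway but not declared in the file.
        "delete": sorted(live_names - desired_names),
    }


desired = {
    "productx-service": {"url": "http://productx.internal:8080"},
    "orders-service": {"url": "http://orders.internal:8080"},
}
live = {
    "productx-service": {"url": "http://productx.internal:9090"},  # changed by hand
    "legacy-service": {"url": "http://legacy.internal:8080"},      # never declared
}

drift = detect_drift(desired, live)
print(drift)
```

An empty result in all three lists means the gateway matches the file; anything else is drift that can be reviewed and then reverted by re-applying the declared state.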

You can also configure Kong using the "API spec-first" or "API design-first" approach using Insomnia.

2) OpenID Connect for API Gateway Governance

When exposing these APIs to external API consumers and internal developers, Kong allows developers and organizations to apply a standard authentication and authorization framework. That way, you can control how clients authenticate and authorize themselves to each API endpoint in your application without your development teams building this functionality into each service endpoint. It also gives you the architectural freedom to run anywhere and use any technology you want since Kong can run alongside your application on any platform. That means you'll have a standards-based API governance model to protect application APIs.

When you have endpoints exposed through Kong, you can use a standards-based approach like OpenID Connect to secure those endpoints from human and non-human clients/users.

When I try to access the endpoint directly, the system redirects me to Keycloak. I have to authenticate with the correct credentials to access the API that Kong is protecting. If I enter the wrong password, the system will not let me access the endpoint. If I enter the correct password, Kong sends me back to the upstream service that it sits in front of, along with a token that the service can use for additional validation. This is known as the authorization code flow, which is intended for human users. Kong supports all common OIDC flows, including client credentials and password grants.
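The redirect at the start of the authorization code flow is just a URL with a handful of standard OpenID Connect parameters. The sketch below shows how such a URL is assembled; in practice Kong's OpenID Connect plugin builds it for you. The identity provider host, realm, client ID and redirect URI are hypothetical values.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def build_authorization_url(authorize_endpoint, client_id, redirect_uri, state):
    """Assemble the URL the browser is redirected to in the authorization code flow."""
    query = urlencode({
        "response_type": "code",   # ask for an authorization code
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": "openid",         # marks the request as OpenID Connect
        "state": state,            # random value echoed back to protect against CSRF
    })
    return f"{authorize_endpoint}?{query}"


url = build_authorization_url(
    # Hypothetical Keycloak realm endpoint.
    "https://keycloak.example.com/realms/demo/protocol/openid-connect/auth",
    client_id="kong",
    redirect_uri="https://api.example.com/route_productX",
    state=secrets.token_urlsafe(16),
)
print(url)
```

After the user logs in, the identity provider redirects back to `redirect_uri` with a short-lived code, which the gateway exchanges for tokens on the user's behalf.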

Suppose I log in as another user who is not authorized to access the endpoint. In that case, the system denies me access (shown below), even though I authenticated with the right username and password. The request is rejected because Kong checks the claims in the token to enforce authorization.
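A claims check of this kind can be sketched as follows. The example decodes the payload of a JWT and looks for a required role. It deliberately skips signature verification, which a real gateway must perform before trusting any claim, and the `roles` claim name, role value and demo tokens are assumptions made for illustration.

```python
import base64
import json


def decode_claims(token):
    """Decode the payload segment of a JWT. No signature check: illustration only."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)  # restore base64 padding
    return json.loads(base64.urlsafe_b64decode(padded))


def is_authorized(claims, required_role, claim_name="roles"):
    """Authenticated users are authorized only if the claim lists the role."""
    return required_role in claims.get(claim_name, [])


def make_demo_token(claims):
    """Build an unsigned token so the example runs without an identity provider."""
    def encode(obj):
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return f"{encode({'alg': 'none'})}.{encode(claims)}.unsigned"


alice = make_demo_token({"sub": "alice", "roles": ["productx-user"]})
bob = make_demo_token({"sub": "bob", "roles": []})

print("alice allowed:", is_authorized(decode_claims(alice), "productx-user"))
print("bob allowed:", is_authorized(decode_claims(bob), "productx-user"))
```

Both users authenticated successfully in this scenario; only the contents of the token decide who gets through.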

That example shows how Kong helps you layer on governance controls for runtime API requests going through the Kong API proxy. The benefit of the OpenID Connect plugin (and other authentication plugins) is that your organization can standardize endpoint security for APIs no matter what technology is used to build the API itself and where it’s running. You can deploy Kong as a central gateway, ingress gateway or level 2 gateway for fine-grained authorization.

Blog Post: How to Secure APIs and Services Using OpenID Connect

3) Zero-Trust Network for API Gateway Governance

As your applications become more decentralized, establishing secure communication is table stakes. A zero-trust network is an important building block to achieve this goal. It allows services to communicate using mutual TLS (mTLS) policies, ensuring services can only talk to other approved services. A zero-trust network provides developers with a reliable and secure network to build on, so they do not have to build circuit breaking, retries and certificate management into their code to manage secure communication with other services.

Enforce Mutual TLS

One of the common API governance challenges is the enforcement of zero-trust. The assumption to operate under is that your network may be compromised, so it's best not to trust other services by default. A service mesh gives the organization the means to build a zero-trust network using software-defined networking policies. This way, your developers don’t have to build mTLS and network reliability into their code.
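For a sense of what the mesh takes off a developer's plate, this is roughly the TLS setup a service would otherwise carry itself in order to demand a client certificate from every caller. It is a sketch using Python's standard `ssl` module, not anything specific to Kong Mesh, and the certificate file paths are hypothetical parameters that you would also have to issue, distribute and rotate.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Server-side TLS context that rejects clients without a valid certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Mutual TLS: the client must present a certificate, not just the server.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # CA that signed the certificates of approved calling services.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        # This service's own identity.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


# Built without files here so the sketch runs anywhere; a real service needs all three.
ctx = build_mtls_server_context()
print(ctx.verify_mode)
```

Multiply this by every service, language and framework in the organization, and the case for handling it once in the network layer becomes clear.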

Again, it comes down to standardization. Governing the service mesh network with a central policy layer is more efficient and maintainable than relying on each application team to build network reliability and security into their code using their preferred (and usually different) technology patterns.

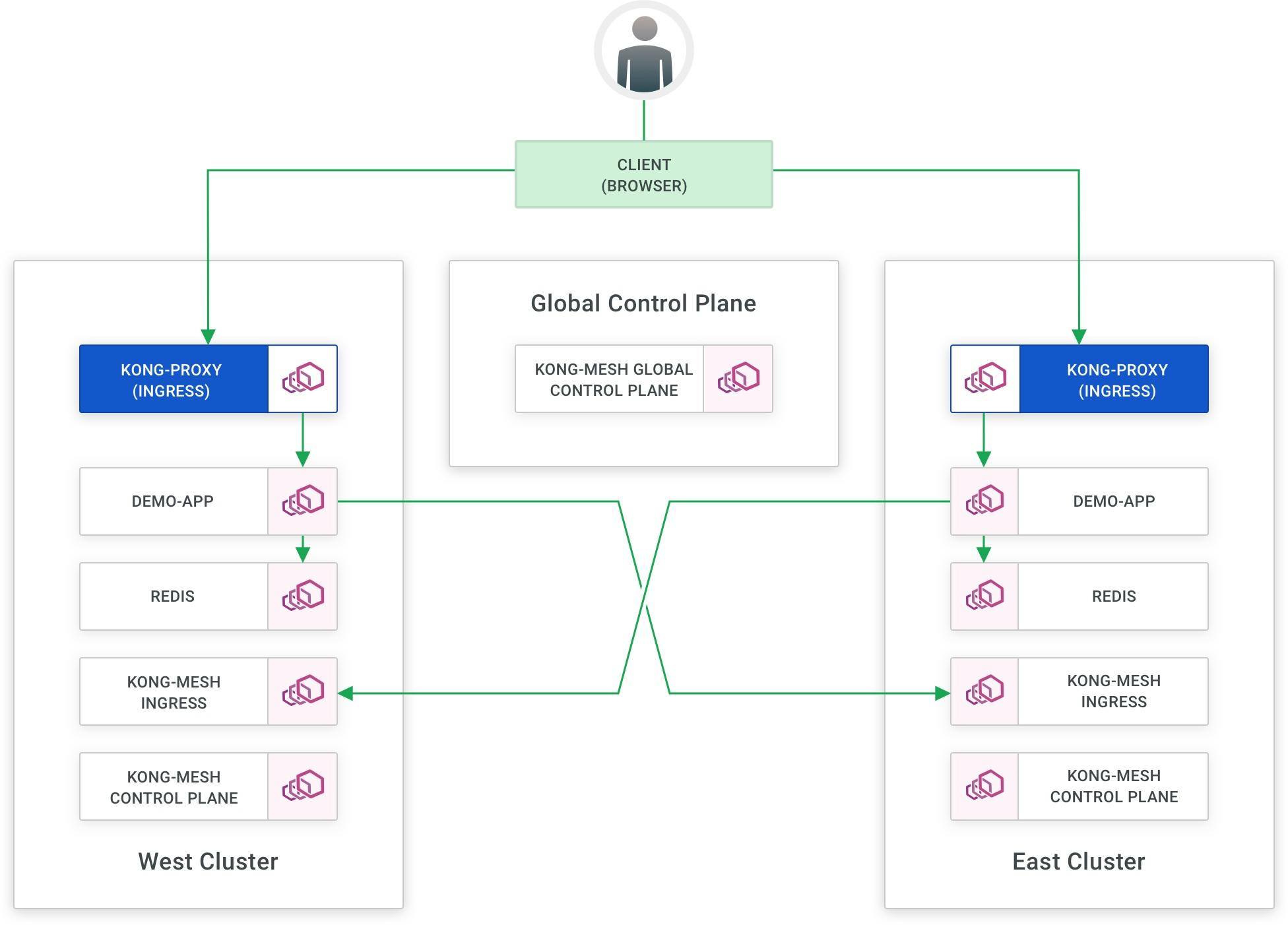

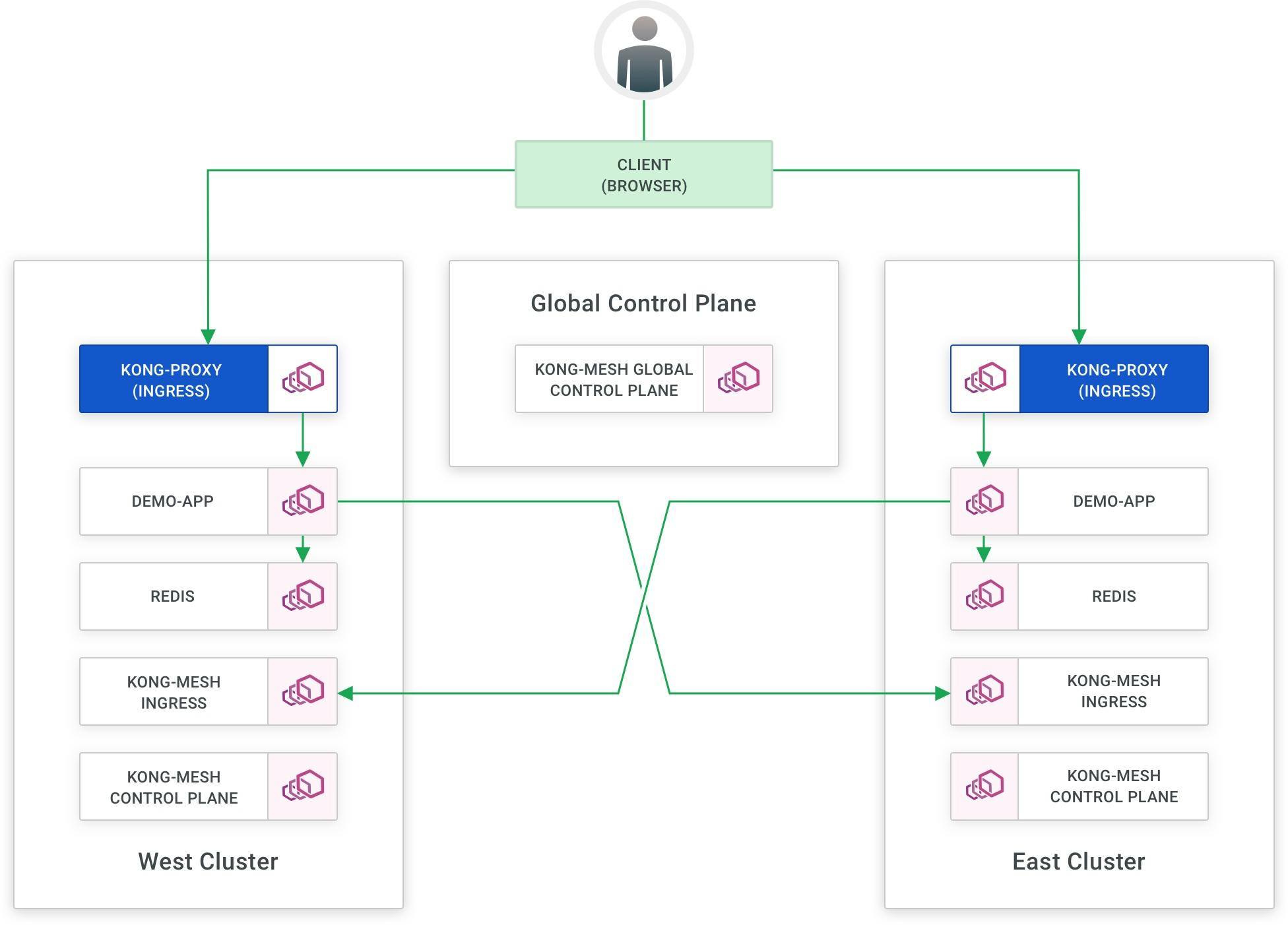

With Kong Mesh, you can enable zero-trust across multiple regional data centers and network zones. Below, you will see a counter application that I'm accessing through my browser.

When I press the increment button, everything works, and requests bounce back and forth between gke-east and gke-west, where I have both infrastructures running. If I hit “Auto Increment," requests keep alternating because I’ve told the mesh to load balance between these two data centers.

You can see below that once I delete my traffic permission policy, all communication stops on the mesh. That way, I know that each service can only communicate with the services that a policy explicitly allows.
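The deny-by-default behavior described here can be modeled in a few lines. This is a conceptual sketch of an allow-list, not Kong Mesh's policy format or API, and the service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficPermission:
    """One allow rule: source may call destination ('*' matches any service)."""
    source: str
    destination: str


def is_allowed(permissions, source, destination):
    """Deny by default: traffic flows only if some permission matches."""
    return any(
        p.source in (source, "*") and p.destination in (destination, "*")
        for p in permissions
    )


permissions = [TrafficPermission("counter-frontend", "counter-backend")]
print(is_allowed(permissions, "counter-frontend", "counter-backend"))

# Deleting the policy leaves an empty allow-list, so nothing can communicate.
permissions.clear()
print(is_allowed(permissions, "counter-frontend", "counter-backend"))
```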

The benefit is that you have a single place to control every one of these connections, so your developers don’t have to build mTLS into their code for API gateway governance.

As I have noted, with increasing automation and digitization of your organization comes a natural growth in the number of services, all of which will need API governance. We built Kong Konnect for exactly these purposes. Your services won’t govern themselves (yet).