What is a DMZ Network?

A DMZ (demilitarized zone) is a military term for an area between two adversaries, established as a buffer to reduce or eliminate the possibility of further conflict.

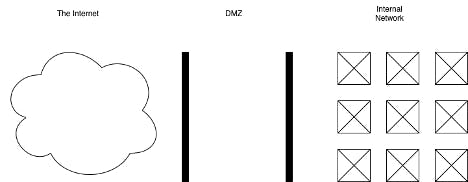

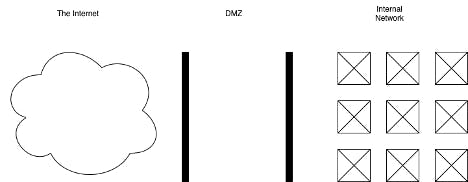

In networking, the term usually refers to an area that acts as a buffer between two segregated networks. Here is a simplified visualization.

Figure 1. Simplified diagram of a DMZ

The DMZ is the network zone between the two network firewalls, which are shown as the two vertical bars in the figure. The firewall on the left may be configured to allow some traffic from the internet into the DMZ. The firewall on the right may likewise be configured to allow traffic from the DMZ into the internal network. Traffic from the internet cannot reach the internal network without crossing the DMZ.
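The zone rules described above can be sketched as a small Python model. This is only an illustration: the zone names and the `ALLOWED_HOPS` table are assumptions made for the example, not the configuration format of any real firewall.

```python
# Hypothetical zone model: each pair is a hop that one of the two firewalls permits.
ALLOWED_HOPS = {
    ("internet", "dmz"),   # left firewall: some internet traffic may enter the DMZ
    ("dmz", "internal"),   # right firewall: some DMZ traffic may enter the internal network
}


def can_send(src_zone, dst_zone):
    """Return True if a firewall permits a direct hop from src_zone to dst_zone."""
    return (src_zone, dst_zone) in ALLOWED_HOPS


def path_allowed(path):
    """Return True if every consecutive hop along the path is permitted."""
    return all(can_send(a, b) for a, b in zip(path, path[1:]))
```

In this model, `path_allowed(["internet", "dmz", "internal"])` holds, while `can_send("internet", "internal")` does not, which mirrors the rule that internet traffic must cross the DMZ.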

DMZ Network and API Security

It seems hardly a week goes by without a serious breach at a high-profile organization. As organizations continue to digitize their offerings, they use more APIs to create and expose their digital assets. This presents a new attack surface. The question, then, is: how can APIs be exposed to external parties to meet digitization demands while reducing the risk of a breach? In this article we review how the Kong API Gateway accomplishes this in a variety of deployment patterns.

First, we assume the APIs are deployed on-premises in an internal network. They could also be deployed in the cloud or in a hybrid model, but we keep the use case simple in this example. The models we describe apply equally to on-premises and cloud deployments, which is an advantage of Kong’s flexibility.

Deploying API Gateway

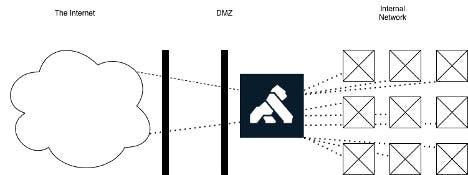

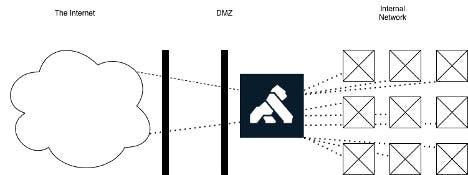

The first model deploys a Kong gateway in the DMZ.

Figure 2. An API Gateway in a DMZ

In this configuration, traffic originating from the internet is allowed to reach the Kong instance that is running in the DMZ via HTTP or HTTPS. Kong will then do a few useful things, possibly including:

- Ensure the traffic is confidential

- Identify the consumers

- Permit or reject consumers from doing certain things

- Log requests

- Cache responses to requests

- Put a limit on how fast requests may be consumed
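To make the list above concrete, here is a minimal Python sketch of a gateway applying such checks in order. It is not Kong's implementation: the `Gateway` and `Request` classes, the API-key lookup, the fixed-window counter, and the chosen status codes are all assumptions made for illustration, and caching is left out to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    scheme: str    # "http" or "https"
    api_key: str   # hypothetical credential presented by the caller
    path: str


@dataclass
class Gateway:
    consumers: dict          # api_key -> consumer name (hypothetical registry)
    permissions: dict        # consumer name -> set of allowed path prefixes
    limit_per_window: int    # maximum requests per consumer per time window
    counts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def handle(self, req, window):
        """Apply the policies in order and log the outcome of every request."""
        status = self._decide(req, window)
        self.log.append((req.path, status))
        return status

    def _decide(self, req, window):
        if req.scheme != "https":          # confidentiality: insist on TLS
            return 426                     # illustrative choice of status code
        consumer = self.consumers.get(req.api_key)
        if consumer is None:               # identify the consumer
            return 401
        allowed = self.permissions.get(consumer, set())
        if not any(req.path.startswith(p) for p in allowed):
            return 403                     # permit or reject
        key = (consumer, window)           # fixed-window rate limit
        self.counts[key] = self.counts.get(key, 0) + 1
        if self.counts[key] > self.limit_per_window:
            return 429
        return 200                         # would be proxied upstream
```

The `window` argument stands in for a clock (for example, the current minute), so the rate limit can be exercised without waiting for real time to pass.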

Upstream of Kong, there are two internal systems whose functionality or data is made available to external consumers.

This configuration is suitable for this purpose. Now we will make things more interesting…

The two teams that exposed APIs became highly successful. Their products and services are heavily used, and the organization is benefiting from this. More teams are encouraged to expose data in the same way and to digitally enable transactions. We initially had 2 systems; now we have 12. Is this still a suitable configuration? Could there be a better-fitting one? Do we want to manage 12, and an ever-increasing number of, configurations through the firewall? If another system in the DMZ is breached, that system could potentially reach any internal system exposed through the firewall on the right.

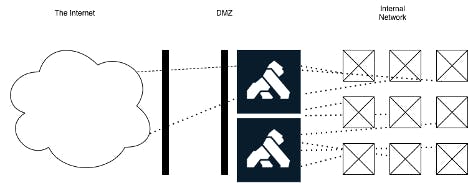

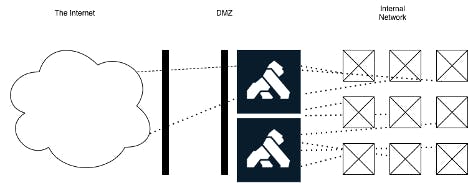

Figure 3. An API Gateway behind a DMZ

To mitigate this, we introduce an alternative to our first configuration. We move the gateway into the internal network, as seen in Figure 3. We then configure the left firewall to forward the traffic originally intended for Kong to the right firewall instead. The right firewall then passes this traffic to Kong. We, in effect, tunnel through the DMZ. The gateway carries on enforcing the same policies as before. We did not have to open more paths in the right firewall than we did in the previous configuration; only two ‘holes’, one for HTTP and one for HTTPS, remain open. The API consumers do not know, or mind, where the API gateway is running. But now things get more interesting again…
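The effect on the right firewall can be sketched by counting the openings each pattern needs. This is a rough Python model under stated assumptions: the host names, the upstream port 8080, and the gateway ports 80 and 443 are hypothetical, and a real firewall rule carries far more detail than a tuple.

```python
def right_firewall_rules_gateway_in_dmz(upstreams):
    """Gateway in the DMZ: one opening per internal system it must reach."""
    return [("dmz-gateway", host, port) for host, port in upstreams]


def right_firewall_rules_gateway_internal(gateway_host):
    """Gateway behind the DMZ: only HTTP and HTTPS to the gateway are open."""
    return [
        ("left-firewall", gateway_host, 80),    # HTTP (assumed port)
        ("left-firewall", gateway_host, 443),   # HTTPS (assumed port)
    ]


# Twelve hypothetical internal systems, as in the scenario above.
upstreams = [(f"system-{i}.internal.example", 8080) for i in range(1, 13)]

rules_in_dmz = right_firewall_rules_gateway_in_dmz(upstreams)
rules_internal = right_firewall_rules_gateway_internal("gateway.internal.example")
```

In this model the first pattern grows with the number of exposed systems, while the second stays at two openings no matter how many systems sit behind the gateway.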

APIs for Internal Applications

The internal applications now need to offer APIs to one another that differ from the APIs offered to external consumers. Should the same instance of Kong be the gateway for these internal applications and consumers? If so, does the lack of segregation between internal and external APIs not create an attack surface? In theory, if an external consumer somehow learns the path to an internal API, they could try to access it. We need to mitigate this. Fortunately, there are a handful of useful tools available to us.

- We can use the access control list plugin to restrict access to certain routes or services to internal consumers only.

- Instead of this, or in combination with it, we can use the IP restriction plugin to limit access to services or routes to IP addresses or ranges that we know, with certainty, are internal.

- We can run another instance of the gateway for internal traffic only, and this will be the instance the applications use to communicate with one another, as seen in Figure 4.

Figure 4. An internal and external API Gateway behind a DMZ

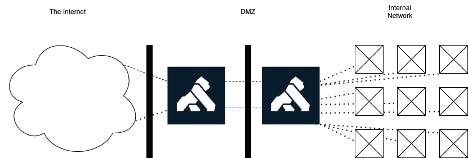

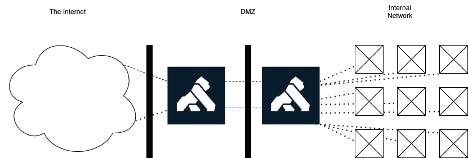

- We can run another instance of Kong in the DMZ, which filters traffic to the internal gateway, ensuring that only routes that are externally facing are allowed to continue, as seen in Figure 5.

Figure 5. Two gateways, in and behind a DMZ
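The first two options in the list above, an access control list and an IP restriction, can be sketched together in Python. This is an illustrative model rather than the behavior of Kong's plugins: the `10.0.0.0/8` range, the `/internal/` path prefix, and the `internal` group name are assumptions chosen for the example.

```python
import ipaddress

# Assumed internal address range; use the ranges you know with certainty are internal.
INTERNAL_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]

# Hypothetical convention: internal-only routes live under this prefix.
INTERNAL_ROUTE_PREFIXES = ("/internal/",)


def is_internal_ip(addr):
    """Return True if addr falls inside one of the known internal ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in INTERNAL_NETWORKS)


def allow(path, client_ip, consumer_groups):
    """Allow external routes for everyone; guard internal routes twice."""
    if not path.startswith(INTERNAL_ROUTE_PREFIXES):
        return True
    # Combine both checks: internal source address AND membership of the internal group.
    return is_internal_ip(client_ip) and "internal" in consumer_groups
```

Here the two checks are combined, so an external caller who learns an internal path is rejected even with valid internal credentials, and an internal caller outside the `internal` group is rejected as well. Using only one of the two checks would be a looser variant of the same idea.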

In both of the last two deployment patterns, we introduced a second gateway, which brings additional configuration and administrative overhead. They are also more complex deployments, as they have to be, because they solve a more complex use case.

We demonstrated in this progressively more complex environment that no single deployment model is suitable for all conditions. All deployments have their pros, cons, and overheads. The choice of deployment depends on which legitimate security concerns need to be mitigated. Fortunately, Kong’s flexibility in deployment and configuration allows it to adapt to such situations.

At Kong, we encourage you to exercise sound analysis of your potential security threats, and to apply a suitable approach to ensure the safety of your data and services. We hope this article assists you in this regard.