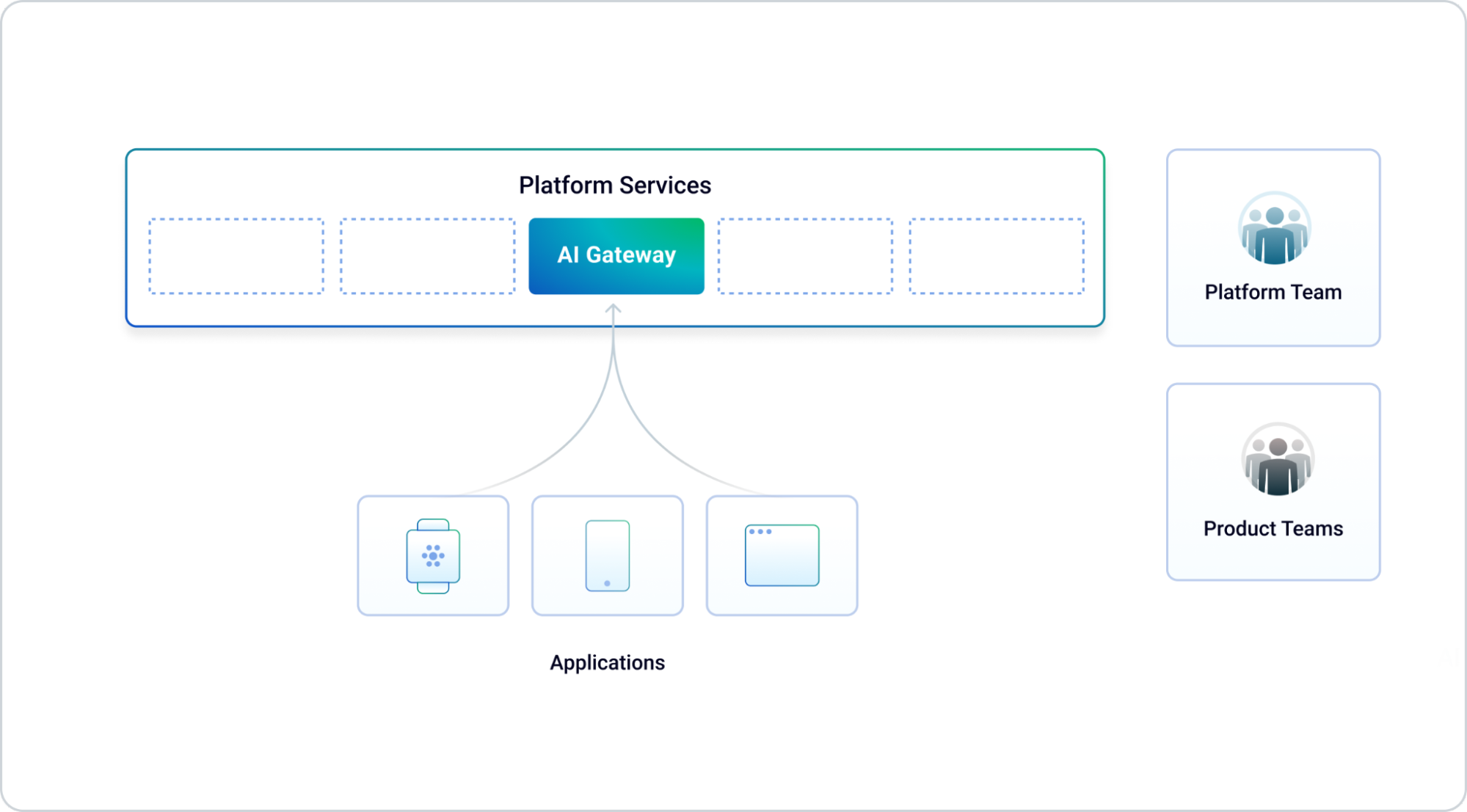

The AI gateway becomes a core platform service that every team can use.

Scaling & Governance: For Leaders Ready to Take Charge

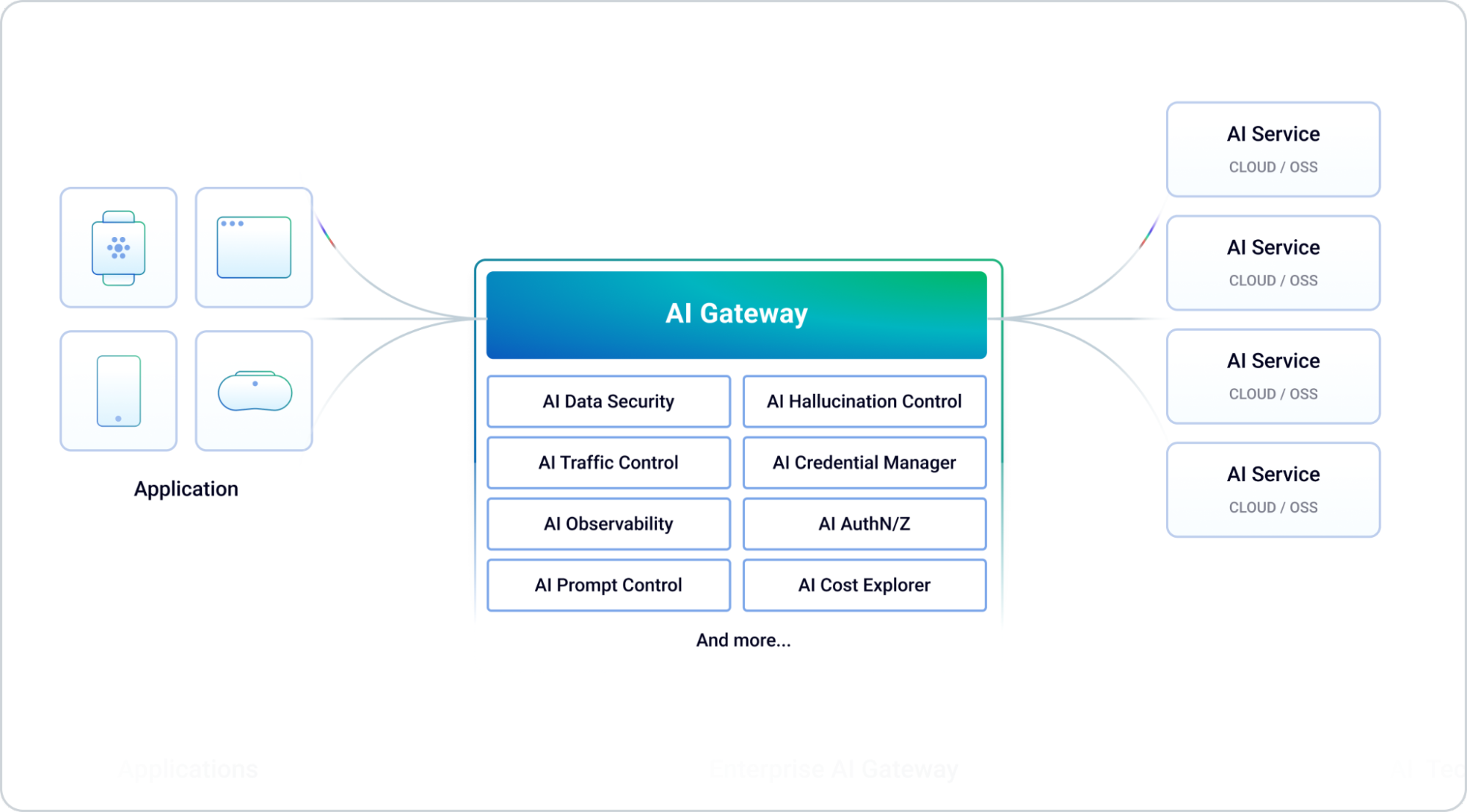

Deploying an AI Gateway is more than a technical exercise: it requires organizational alignment and robust governance. An AI gateway can support multiple AI backends (such as OpenAI, Mistral, LLaMA, and Anthropic) while still exposing a single API that developers use to access any model they need. The security credentials for every AI backend can be managed in one place, so our applications don't need to be updated whenever we rotate or revoke a credential for a third-party AI provider.
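Here is a minimal Python sketch of that idea. It assumes a gateway that accepts one request shape from clients and translates it for each provider. `ChatRequest`, `CredentialStore`, and `build_upstream_call` are hypothetical names. The payloads are simplified versions of the OpenAI Chat Completions and Anthropic Messages request formats, so check each provider's API reference before relying on them. The sketch only builds the upstream request and does not send it.

```python
from dataclasses import dataclass


@dataclass
class ChatRequest:
    """The one provider-agnostic request shape that client applications send."""
    model: str
    prompt: str
    max_tokens: int = 256


class CredentialStore:
    """Upstream API keys live only here; rotating one never touches client apps."""

    def __init__(self) -> None:
        self._keys: dict[str, str] = {}

    def set(self, provider: str, key: str) -> None:
        self._keys[provider] = key

    def get(self, provider: str) -> str:
        return self._keys[provider]


def _messages(req: ChatRequest) -> list:
    return [{"role": "user", "content": req.prompt}]


def to_openai(req: ChatRequest, key: str) -> dict:
    # Simplified shape of an OpenAI Chat Completions request.
    return {
        "url": "https://api.openai.com/v1/chat/completions",
        "headers": {"Authorization": f"Bearer {key}"},
        "json": {"model": req.model, "messages": _messages(req), "max_tokens": req.max_tokens},
    }


def to_anthropic(req: ChatRequest, key: str) -> dict:
    # Simplified shape of an Anthropic Messages API request.
    return {
        "url": "https://api.anthropic.com/v1/messages",
        "headers": {"x-api-key": key, "anthropic-version": "2023-06-01"},
        "json": {"model": req.model, "messages": _messages(req), "max_tokens": req.max_tokens},
    }


# Hypothetical adapter registry: provider name -> request translator.
ADAPTERS = {"openai": to_openai, "anthropic": to_anthropic}


def build_upstream_call(provider: str, req: ChatRequest, creds: CredentialStore) -> dict:
    """Translate the unified request for one provider and inject its key server-side."""
    return ADAPTERS[provider](req, creds.get(provider))
```

To rotate a key, the gateway makes a single `creds.set("openai", new_key)` call. Every client keeps sending the same `ChatRequest` and never sees the credential.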

The gateway can also implement prompt security, validation, and templating, so that prompts are managed from one control plane and can be changed without updating the client applications. Prompts are at the core of what we ask AI to do, and controlling which prompts our applications are allowed to generate is essential for responsible and compliant AI adoption. We wouldn't want developers to build an AI integration around restricted topics (political ones, for example), or to mistakenly set the wrong context in a prompt, which a malicious user could later exploit.
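Centrally managed templates and validation could look like the sketch below. It assumes clients send only a template ID and its variables, and the gateway renders and checks the final prompt. The template text, `render_prompt`, and the keyword deny-list are hypothetical. A keyword match is only a crude stand-in for the topic classifiers a production gateway would more likely use.

```python
import re
from string import Template

# Hypothetical templates stored in the gateway's control plane; editing them here
# changes behavior for every client without a client release.
PROMPT_TEMPLATES = {
    "summarize": Template(
        "You are a support assistant. Summarize the following ticket in three sentences:\n$ticket"
    ),
}

# Hypothetical deny-list for a restricted topic (politics, in this example).
RESTRICTED_PATTERNS = [
    re.compile(r"\b(election|political party|candidate)\b", re.IGNORECASE),
]


class PromptRejected(Exception):
    """Raised when a prompt cannot be rendered or violates policy."""


def render_prompt(template_id: str, variables: dict) -> str:
    """Render a centrally managed template, then validate the final prompt text."""
    if template_id not in PROMPT_TEMPLATES:
        raise PromptRejected(f"unknown template: {template_id}")
    # substitute() raises KeyError if a required variable is missing.
    prompt = PROMPT_TEMPLATES[template_id].substitute(variables)
    for pattern in RESTRICTED_PATTERNS:
        if pattern.search(prompt):
            raise PromptRejected("prompt touches a restricted topic")
    return prompt
```

The system context ("You are a support assistant...") is owned by the gateway in this design. A developer can't accidentally ship a prompt with the wrong context.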

Organizational Readiness & Team Structure

Because different teams will most likely use different AI services, the AI gateway can offer a standardized interface for consuming multiple models. This simplifies building on different models and even switching from one to another.
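One way to make switching cheap is to have the gateway expose stable model aliases. This sketch assumes that design: teams call an alias such as `chat-default`, and the gateway's routing table decides which provider and model serve it. The alias names, providers, and model names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    provider: str
    model: str


# Hypothetical gateway-side routing table: alias -> backend that currently serves it.
ROUTES = {
    "chat-default": Route("openai", "model-a"),
    "chat-private": Route("self-hosted", "model-b"),
}


def resolve(alias: str) -> Route:
    """Look up which backend serves an alias; unknown aliases are rejected."""
    try:
        return ROUTES[alias]
    except KeyError:
        raise ValueError(f"no route configured for alias {alias!r}") from None


def switch_backend(alias: str, provider: str, model: str) -> None:
    """Moving an alias to another model is a config change; clients keep the same alias."""
    ROUTES[alias] = Route(provider, model)
```

Clients never name a vendor model directly in this setup. Moving `chat-default` to a different provider is a single routing change on the gateway.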

Identify key stakeholders across IT, security, compliance, and business units. Establish a dedicated AI Gateway Ops team responsible for ongoing management, security, and compliance monitoring.

Establishing Governance Frameworks

The AI gateway can also apply security overlays such as authentication and authorization (AuthN/Z), rate limiting, and full API lifecycle governance to further control how teams access AI internally. At the end of the day, AI traffic is API traffic.
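Here is a sketch of two of those overlays. It combines a simple API-key lookup (authentication) with a per-team token-bucket rate limiter. The consumer registry, the rate of 1 request per second, and the burst size of 2 are hypothetical values. A real gateway would also authorize which models each consumer may call.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TokenBucket:
    """Refills `rate` tokens per second up to `capacity`; each request spends one token."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start full so short bursts are allowed
        self.last = time.monotonic()

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.last)  # ignore timestamps older than the last refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical consumer registry: API key -> consuming team.
CONSUMERS = {"key-team-a": "team-a"}
BUCKETS = {}


def check_request(api_key: str, now: Optional[float] = None) -> int:
    """Return an HTTP-style status: 401 unknown key, 429 rate-limited, 200 allowed."""
    team = CONSUMERS.get(api_key)
    if team is None:
        return 401
    if team not in BUCKETS:
        BUCKETS[team] = TokenBucket(rate=1.0, capacity=2.0)
    return 200 if BUCKETS[team].allow(now) else 429
```

The limit is keyed on the team rather than on the API key. Several keys issued to the same team therefore share one budget.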

Develop clear policies governing data handling, prompt engineering guidelines, logging, and auditing. Leverage existing frameworks such as Microsoft's AI Governance Framework or build bespoke policies tailored to your organizational needs.

Monitoring & Ongoing Compliance

AI observability can be managed from one place, and the data can even be sent to third-party log and metrics collectors. Because observability is configured in one place, we can easily capture all of the AI traffic being generated. That lets us verify that data handling is compliant and that there are no anomalies in usage.
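Here is a sketch of what capturing every AI call in one place might look like. The gateway emits one structured JSON record per request and passes it to a pluggable sink. The sink could write to stdout, a file, or an exporter for a third-party collector. The field names (`prompt_tokens`, `completion_tokens`, and so on) are assumptions modeled on common LLM usage fields, not a specific product's log schema.

```python
import json
import time
import uuid
from typing import Callable


def log_ai_call(team: str, provider: str, model: str, usage: dict, started: float,
                sink: Callable[[str], None] = print) -> dict:
    """Build one structured record for an AI call and hand it to `sink` as a JSON line.

    `started` is a time.monotonic() reading taken when the request arrived.
    """
    record = {
        "id": str(uuid.uuid4()),
        "ts": time.time(),  # wall-clock timestamp for the collector
        "team": team,
        "provider": provider,
        "model": model,
        "prompt_tokens": usage.get("prompt_tokens", 0),
        "completion_tokens": usage.get("completion_tokens", 0),
        "latency_ms": round((time.monotonic() - started) * 1000, 1),
    }
    sink(json.dumps(record))
    return record
```

Keeping the sink as a plain callable lets the same record go to local logs in development and to a metrics or SIEM pipeline in production.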

Implement real-time dashboards to monitor usage metrics, detect anomalies, and ensure compliance. Integrate gateway logs and alerts with Security Information and Event Management (SIEM) platforms to automate compliance workflows.
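Anomaly detection can start very simply. The sketch below flags a team's token usage for the current period when it sits more than three standard deviations above that team's own history (a z-score rule). The threshold and the choice of statistic are assumptions. A flagged result is what would be forwarded to the SIEM as an alert.

```python
from statistics import mean, pstdev


def is_anomalous(history: list, current: float, threshold: float = 3.0) -> bool:
    """Flag `current` when it is more than `threshold` standard deviations above the
    mean of `history` (for example, tokens per hour for one team)."""
    if len(history) < 2:
        return False  # too little history to judge
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        return current > mu  # flat history: any increase stands out
    return (current - mu) / sigma > threshold
```

A z-score is easy to explain on a dashboard but sensitive to seasonality. Hourly or weekly baselines are a natural next refinement.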

Last but not least, AI models are expensive to run. Because the AI gateway sees all AI usage, the organization can learn from that usage and implement cost-reduction initiatives and optimizations.
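The gateway sees token counts for every call, so it can attribute spend per team with basic arithmetic. When prices are quoted per million tokens, cost = (input tokens × input price + output tokens × output price) / 1,000,000. The model names and prices below are hypothetical placeholders, not real provider pricing.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens; real prices vary by provider and change often.
PRICES = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 3.00, "output": 15.00},
}


def cost_usd(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of one call, with input and output tokens priced separately."""
    p = PRICES[model]
    return (prompt_tokens * p["input"] + completion_tokens * p["output"]) / 1_000_000


def cost_by_team(records: list) -> dict:
    """Aggregate gateway log records (team, model, token counts) into spend per team."""
    totals = defaultdict(float)
    for r in records:
        totals[r["team"]] += cost_usd(r["model"], r["prompt_tokens"], r["completion_tokens"])
    return dict(totals)
```

For example, 1,000,000 input tokens on `model-a` at the hypothetical $0.50 per million cost $0.50. 100,000 output tokens on `model-b` at $15.00 per million cost $1.50.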

Conclusion

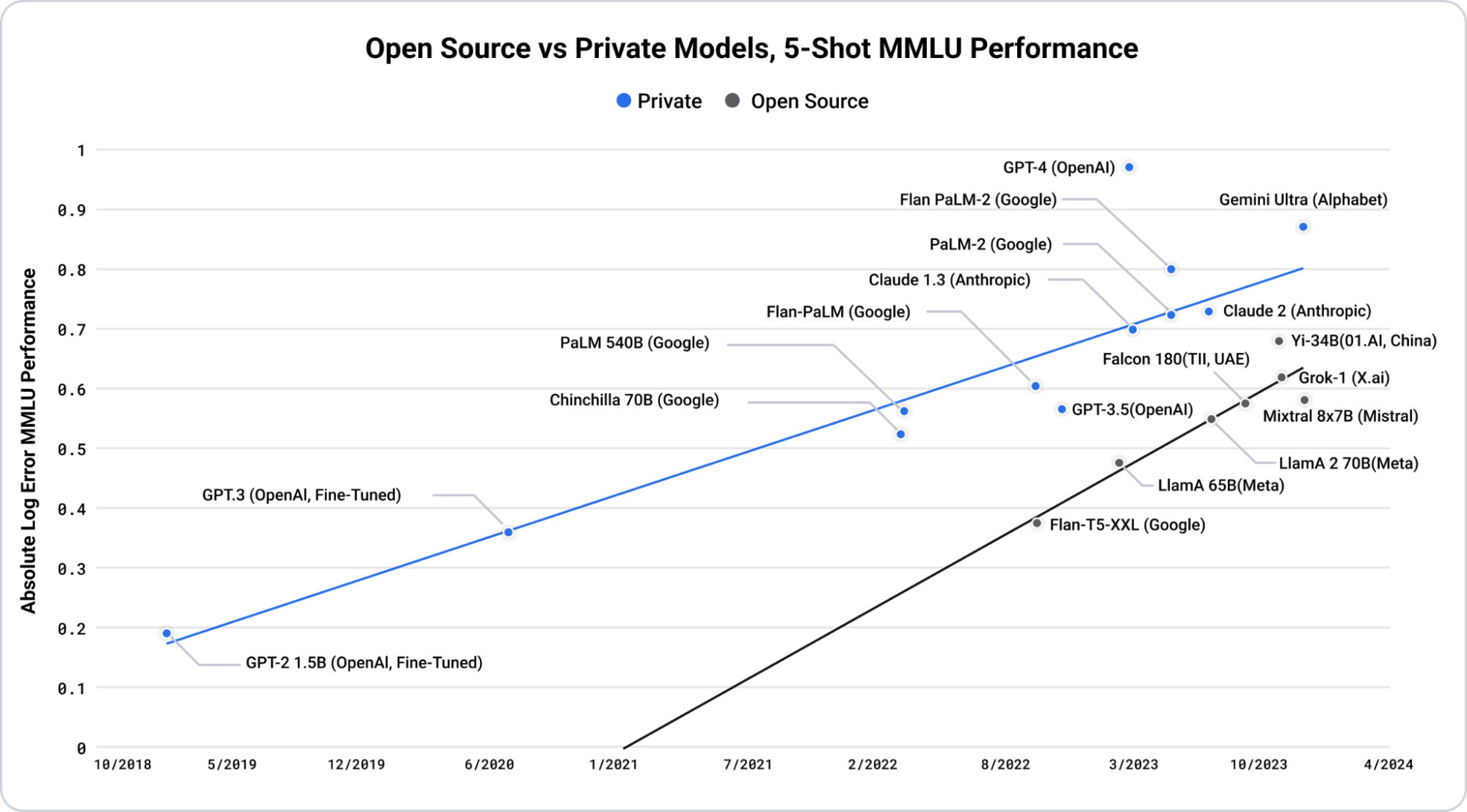

Artificial intelligence, driven by recent developments in GenAI and LLM technologies, is a once-in-a-decade technology revolution poised to disrupt the industry and forever change the application experiences that every organization builds for its customers.

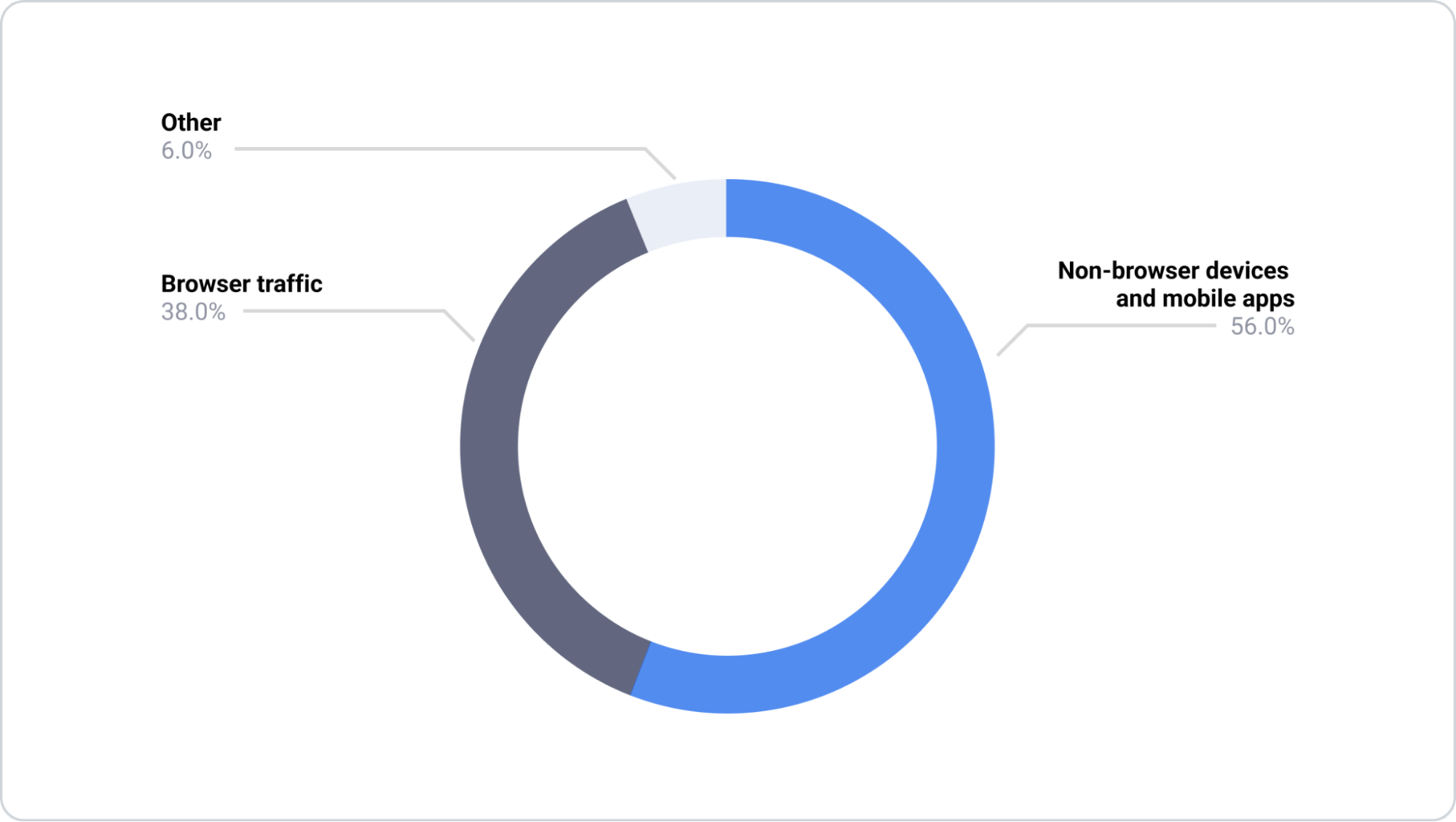

APIs have always been driven by new use cases (think of the mobile revolution, microservices, and so on), and AI further increases the amount of API traffic in the world. That includes both the APIs our applications use to communicate with AI models and the APIs that AI models use to interact with the world.

AI consumption is hard. There are serious data compliance ramifications when using models that may be shared across different applications, and perhaps even across different organizations (think of fully managed cloud models hosted in a shared environment). In addition, AI traffic needs to be secured, governed, and observed just as we already do for all other API traffic flowing into or out of the organization. It turns out that a great deal of infrastructure must be in place before AI can be used in production.

With an AI gateway, we can offer developers an out-of-the-box way to use AI quickly and in a self-service manner, without asking them to build AI infrastructure and all the cross-cutting capabilities it requires. By adopting an AI gateway, the organization gains peace of mind that it has full control over the AI traffic generated by every team and every application. It finally has the right abstraction in place to ensure that AI is used in a compliant and responsible way.

Marco Palladino, CTO at Kong

AI Gateway FAQs

What is an AI Gateway?

An AI Gateway is a specialized middleware layer that manages and secures interactions between your applications and AI models (like Large Language Models, or LLMs). It handles vital tasks such as authentication, authorization, routing, rate limiting, data masking, and prompt management—all under a single control plane.

How is an AI Gateway different from a traditional API Gateway?

While both serve as proxies to manage traffic, AI Gateways include advanced capabilities specific to AI-driven workloads. These capabilities include prompt engineering controls, detailed token analytics, and session-based conversational context, which are essential for the secure, efficient, and compliant delivery of AI services.

Why do organizations need an AI Gateway now?

Organizations face critical challenges in security, compliance, and cost management when adopting AI. An AI Gateway provides centralized governance, helps prevent data leaks or compliance violations, and enables cost controls by monitoring token usage and optimizing AI spend across various LLMs.

How does an AI Gateway improve AI security and compliance?

AI Gateways implement robust security policies like data masking, encryption, and access controls. They allow organizations to track and manage all AI traffic in one place, ensuring alignment with regulations (e.g., GDPR, HIPAA) and offering fine-grained control over how AI is accessed and utilized.
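Data masking, for instance, can be a redaction pass that the gateway applies to prompts before they leave the organization. The regular expressions below are illustrative only: they cover emails, US-style SSNs, and 13- to 16-digit card numbers, and they will miss many real-world formats. Production masking typically relies on dedicated PII detection.

```python
import re

# Illustrative patterns only; real PII detection needs broader, locale-aware rules.
MASKS = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<EMAIL>"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "<SSN>"),
    # 13-16 digits, optionally separated by single spaces or dashes; starts and ends on a digit.
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "<CARD>"),
]


def mask_pii(text: str) -> str:
    """Replace sensitive matches with placeholders before the prompt is sent upstream."""
    for pattern, placeholder in MASKS:
        text = pattern.sub(placeholder, text)
    return text
```

The patterns run in a fixed order, and the SSN rule runs before the card rule. The SSN pattern only matches nine digits, so the two rules do not compete for the same text.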

Can an AI Gateway help control high AI costs?

Yes. AI Gateways provide visibility into token usage and allow for optimized resource allocation. Cost-saving features like request throttling, caching, and usage analytics help organizations rein in runaway LLM expenditures, ensuring a more predictable AI budget.
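Caching is the easiest of these to illustrate. The sketch below is an exact-match cache with a time-to-live: an identical (model, prompt) pair reuses the earlier answer instead of spending tokens again. `ResponseCache` and its 300-second default TTL are hypothetical. Semantic caching, which matches similar prompts via embeddings, is a different and more involved technique.

```python
import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    """Exact-match response cache keyed on (model, prompt), with a time-to-live."""

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl = ttl_seconds
        self._store = {}  # key -> (stored_at, answer)

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # JSON-encode the pair so ("a", "bc") and ("ab", "c") never collide.
        return hashlib.sha256(json.dumps([model, prompt]).encode()).hexdigest()

    def get_or_call(self, model: str, prompt: str, call: Callable[[str, str], str]) -> str:
        """Return a fresh cached answer if present; otherwise call the model and cache it."""
        key = self._key(model, prompt)
        now = time.monotonic()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        answer = call(model, prompt)
        self._store[key] = (now, answer)
        return answer
```

The TTL bounds how stale a reused answer can be. Shorter TTLs trade savings for freshness.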

What role does an AI Gateway play in AI governance?

AI Gateways serve as a single control plane for managing how AI is consumed across an organization. They streamline oversight of model usage, enforce best practices like prompt validation, and offer centralized visibility into AI traffic—making it easier to establish and maintain governance policies.

How do AI Gateways facilitate multi-LLM adoption?

AI Gateways allow developers to connect to and switch between multiple AI providers or self-hosted LLMs through one unified interface. This simplifies integration, reduces development overhead, and ensures your teams can leverage the best AI model for each use case.
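Switching can also happen automatically. The following sketch tries an ordered list of providers and returns the first successful answer. It assumes each provider adapter raises a hypothetical `UpstreamError` on failure. A real deployment would typically add timeouts, retries, and health checks on top of this.

```python
from typing import Callable, Sequence, Tuple


class UpstreamError(Exception):
    """Raised by a provider adapter when its upstream call fails."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Callable[[str], str]]]
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return (name, answer) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except UpstreamError as exc:
            errors.append(f"{name}: {exc}")  # remember why this provider was skipped
    raise UpstreamError("all providers failed: " + "; ".join(errors))
```

Returning the provider name alongside the answer lets the gateway log which backend actually served each request.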

What are the potential risks of deploying an AI Gateway?

Common challenges include creating a single point of failure, risking vendor lock-in, or over-relying on prompt filtering to combat LLM hallucinations. Mitigate these risks by implementing redundancy, adopting open standards or multi-cloud strategies, and complementing gateway filters with model fine-tuning.

How does an AI Gateway address prompt engineering issues?

An AI Gateway inspects and validates prompts before they reach the model, helping to prevent prompt injection attacks or inappropriate content. Organizations can centrally manage and update prompts without having to modify individual client applications.

How can I get started with an AI Gateway from Kong?

Kong’s AI Gateway is designed for multi-LLM adoption, cost efficiency, and enterprise-grade governance. Visit the Kong AI Gateway product page to learn about its features, request a demo, or explore documentation to see how it can seamlessly integrate into your existing infrastructure.