Using Kong Kubernetes Ingress Controller as an API Gateway

In this first section, I'll provide a quick overview of the business case and the tools you can use to create a Kubernetes ingress API gateway. If you're already familiar with these, skip ahead to the tutorial section or watch the video at the bottom of this article.

Kubernetes Microservice Architecture

Digital transformation has led to a high velocity of data moving through APIs to applications and devices. Companies with legacy infrastructure are experiencing inconsistencies, failures, increased costs and, most importantly, dissatisfied customers.

All this has led to significant restructuring and modernization of API technologies, especially within IT. A primary strategy is to embrace Kubernetes and decouple monolithic systems. On top of that, IT leadership is tasking DevOps teams with finding solutions, such as an API gateway or Kubernetes ingress controller, that support API traffic growth while minimizing costs.

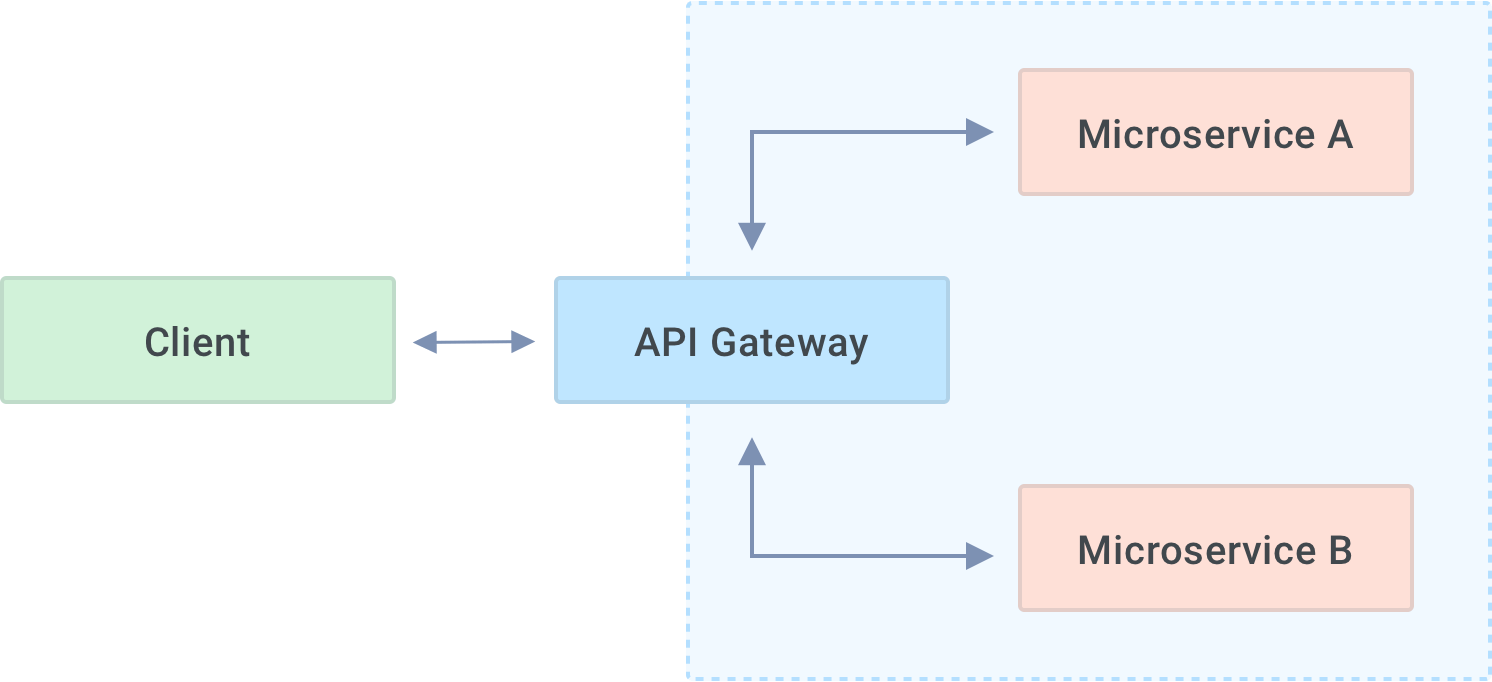

API gateways are crucial components of microservice architectures. The API gateway acts as a single entry point into a distributed system, providing a unified interface for clients, who don’t need to know (or care) that the system aggregates the response to their API call from multiple microservices.
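To make the aggregation idea concrete, here's a minimal Python sketch. It assumes two hypothetical backends, user_service and order_service, stubbed as local functions. A real gateway would call them over the network, but the shape is the same: the client makes one request, and several services answer it behind the scenes.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical backends; in a real system these would be HTTP calls
# to separate microservices.
def user_service(user_id: str) -> dict:
    return {"id": user_id, "name": "Ada"}


def order_service(user_id: str) -> dict:
    return {"orders": [101, 102]}


def aggregate(user_id: str, backends: Dict[str, Callable[[str], dict]]) -> dict:
    """Fan one client request out to several backends and merge the results."""
    with ThreadPoolExecutor() as pool:
        # Call all backends concurrently so the slowest one sets the latency.
        futures = {name: pool.submit(call, user_id) for name, call in backends.items()}
        # The client receives a single response and never sees the fan-out.
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(aggregate("42", {"user": user_service, "orders": order_service}))
```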

Some common use cases for API gateways include:

- Routing inbound requests to the appropriate microservice

- Presenting a unified interface to a distributed architecture by aggregating responses from multiple backend services

- Transforming microservice responses into the format required by the caller

- Implementing non-functional/policy concerns such as authentication, logging, monitoring and observability, API rate limiting, IP filtering, and attack mitigation

- Facilitating deployment strategies such as blue/green or canary releases

API gateways can simplify the development and maintenance of a Kubernetes architecture, freeing development teams to focus on the business logic of individual components.

Many companies select a Kubernetes API gateway at the beginning of, or partway through, their transition to multi-cloud. That makes it necessary to choose a solution that works with both on-premises services and the cloud.

What is Kubernetes?

Kubernetes is becoming the hosting platform of choice for distributed architectures. It offers auto-scaling, fault tolerance and zero-downtime deployments out of the box.

By providing a widely accepted, standard approach with a carefully designed API, Kubernetes has spawned a thriving ecosystem of products and tools that make it much easier to deploy and maintain complex systems.

Kong Kubernetes Ingress Controller

As a native Kubernetes application, Kong is installed and managed exactly like any other Kubernetes resource. It integrates well with other CNCF projects and automatically updates its configuration, with zero downtime, in response to cluster events such as pod deployments. There’s also a great plugin ecosystem and native gRPC support.

This tutorial walks through how easy it is to set up the open source Kong Ingress Controller as a Kubernetes API gateway on a cluster.


Use Case: Routing API Calls to Backend Services

To keep this article to a manageable size, I will only cover a single, straightforward use case.

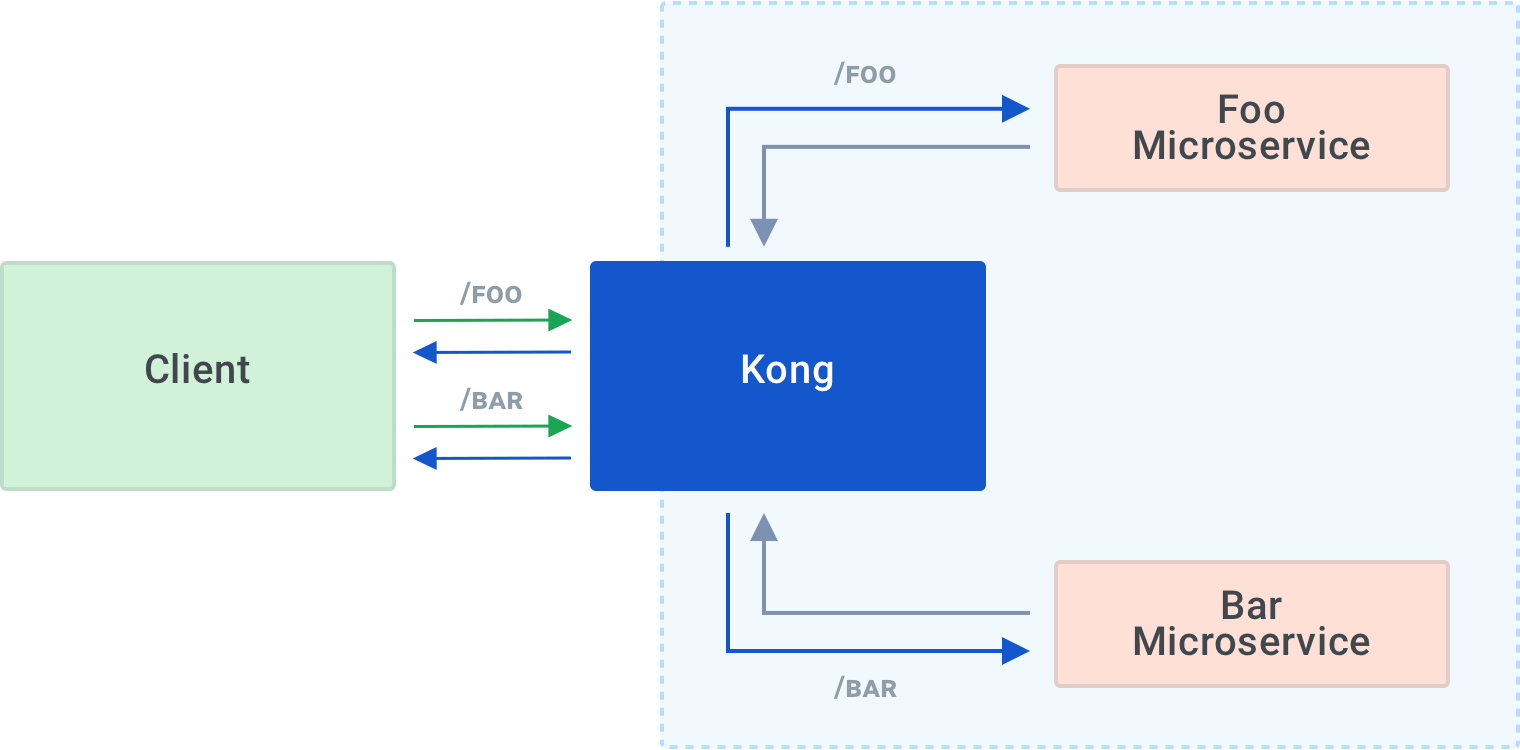

Kong foo/bar routing

I will create a Kubernetes cluster and deploy two dummy microservices, "foo" and "bar". Then I will install and configure Kong to route inbound calls to /foo to the foo microservice and calls to /bar to the bar microservice.
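To make the routing rule concrete, here's a rough Python model of how a path-prefix rule picks a backend. It follows the segment-by-segment semantics of a Kubernetes Ingress path with pathType Prefix, so /foo matches /foo/items but not /foobar. This is an illustration only, not Kong's actual router, and the ROUTES table is hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical routing table mirroring this tutorial: path prefix -> backend service.
ROUTES: Dict[str, str] = {"/foo": "foo", "/bar": "bar"}


def _segments(path: str) -> List[str]:
    # "/foo/items/" -> ["foo", "items"]; empty segments (extra slashes) are ignored.
    return [part for part in path.split("/") if part]


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the backend for a request path, or None if no rule matches.

    Prefixes are compared whole segment by whole segment, and the longest
    matching prefix wins."""
    request = _segments(path.split("?", 1)[0])  # ignore any query string
    best, best_len = None, -1
    for prefix, backend in routes.items():
        segs = _segments(prefix)
        if request[: len(segs)] == segs and len(segs) > best_len:
            best, best_len = backend, len(segs)
    return best


if __name__ == "__main__":
    for p in ("/foo", "/foo/items", "/bar?x=1", "/foobar"):
        print(p, "->", route(p))
```

A None result corresponds to the case where the gateway has no matching rule and rejects the request.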

The information in this post barely scratches the surface of what you can do with Kong, but it’s a good starting point.

Prerequisites

There are a few things you’ll need to work through this article.

In this tutorial, I'm going to create a "real" Kubernetes cluster on DigitalOcean because it’s quick and easy, and I like to keep things as close to real-world scenarios as possible. If you want to work locally, you can use minikube or KinD. You will need to fake a load balancer, though, either by using minikube tunnel or by setting up a port forward to the API gateway.

For DigitalOcean, you will need:

- A DigitalOcean account

- A DigitalOcean API token with read and write scopes

- The doctl command-line tool

To build and push Docker images representing our microservices, you will need:

- Docker

- An account on Docker Hub

Note: These are optional, because you can deploy the images I’ve already created.

You will also need kubectl to access the Kubernetes cluster.

Setting Up doctl

After installing doctl, you’ll need to authenticate using the DigitalOcean API token:

Create Kubernetes Cluster

Now that you have authenticated doctl, you can create your Kubernetes cluster with this command:

Note: The command spins up a Kubernetes cluster on DigitalOcean. Doing so will incur charges (approximately $0.01/hour, at the time of writing) as long as it is running. Please remember to destroy any resources you create when you finish with them.

The command creates a cluster with a single worker node of the smallest viable size in the New York data center. It's the smallest and simplest cluster (and also the cheapest to run). You can explore other options by running doctl kubernetes --help.

The command will take several minutes to complete, and you should see output like this:

As you can see, the command automatically adds cluster credentials and a context to the ~/.kube/config file, so you should be able to access your cluster using kubectl:

Create Dummy Microservices

To represent backend microservices, I’m going to use a trivial Python Flask application that returns a JSON string:

foo.py

This Dockerfile builds a Docker image you can deploy:

Dockerfile

The files for our "foo" and "bar" services are almost identical, so I’m only going to show the "foo" files here.
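If you want to try the idea without installing Flask, here's a hypothetical stand-in that uses only Python's standard library. It is not the author's foo.py. It answers every GET with a small JSON body on port 5000 (the port used in the port-forward step later on). The JSON payload and the SERVICE_NAME constant are assumptions.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical service name; change to "bar" for the second service.
SERVICE_NAME = "foo"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Hypothetical payload; the tutorial's Flask app returns its own JSON string.
        body = json.dumps({"msg": f"Hello from the {SERVICE_NAME} microservice"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def make_server(port: int = 5000) -> HTTPServer:
    # Bind to all interfaces so the container accepts traffic from outside itself.
    return HTTPServer(("0.0.0.0", port), Handler)


if __name__ == "__main__":
    make_server().serve_forever()
```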

This gist contains the files and a script to build Docker images for the foo and bar microservices and push them to Docker Hub as:

- digitalronin/foo-microservice:0.1

- digitalronin/bar-microservice:0.1

Note: You don’t have to build and push these images. You can just use the ones I’ve already created.

Deploy Dummy Microservices

You’ll need a manifest that defines a Deployment and a Service for each microservice. The manifest for "foo" (again, I’m only showing the "foo" example here, since the "bar" file is nearly identical) looks like this:

foo.yaml

This gist has manifests for both microservices, which you can download and deploy to your cluster like this:
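If you prefer to generate the manifests yourself, here's a hypothetical Python generator. It is not the gist's foo.yaml. It builds a Deployment and a ClusterIP Service for one microservice, using the image names listed above and port 5000 (an assumption taken from the port-forward step). The output is JSON, which kubectl apply -f also accepts, wrapped in a v1 List so one file carries both objects.

```python
import json


def microservice_manifests(name: str, image: str, port: int = 5000) -> dict:
    """Build a Deployment plus a Service for one dummy microservice."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": labels,  # must match the Pod template labels above
            "ports": [{"port": port, "targetPort": port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    manifest = microservice_manifests("foo", "digitalronin/foo-microservice:0.1")
    print(json.dumps(manifest, indent=2))
```

Saved as, say, make_manifests.py (a hypothetical name), it could be used as python make_manifests.py > foo.json followed by kubectl apply -f foo.json.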

Access the Services

You can check that the microservices are running correctly using a port forward:

Then, in a different terminal:

Do the same for bar, also using port 5000.

Install Kong for Kubernetes

Now that your two microservices are running in your Kubernetes cluster, let’s install Kong.

There are several ways to do this, which you will find in the Kong documentation. I’m going to apply the manifest directly, like this:

The last few lines of output should look like this:

Note: You may receive several API deprecation warnings at this point, which you can ignore. Kong's choice of API versions allows Kong Ingress Controller to support the broadest range of Kubernetes versions possible.

Installing Kong will create a DigitalOcean load balancer. This is the internet-facing endpoint to which you will make API calls to access your microservices.

Note: DigitalOcean load balancers incur charges, so please remember to delete your load balancer along with your cluster when you are finished.

Creating the load balancer will take a minute or two. You can monitor its progress like this:

Once the system creates the load balancer, the EXTERNAL-IP value will change from <pending> to a real IP address:

For convenience, let’s export that IP address as an environment variable:
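Rather than copying the address by hand, you could read it from the Service's status.loadBalancer.ingress field in the JSON output of kubectl get service ... -o json. Here's a minimal sketch. It assumes you pipe that JSON into the script, and it returns None while the address is still pending.

```python
import json
import sys
from typing import Optional


def external_address(service_json: str) -> Optional[str]:
    """Extract the load balancer address from `kubectl get service ... -o json` output.

    Returns None while the load balancer is still being provisioned."""
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None  # kubectl still shows EXTERNAL-IP as <pending>
    # Some providers publish a hostname rather than an IP address.
    return ingress[0].get("ip") or ingress[0].get("hostname")


if __name__ == "__main__":
    address = external_address(sys.stdin.read())
    print(address if address else "pending")
```

The service name and namespace depend on how you installed Kong. With the all-in-one manifest they are typically kong-proxy and kong, but check your own kubectl get output before relying on that.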

Now you can check that Kong is working:

Note: This is the expected response, because you haven’t yet told Kong what to do with any API calls it receives.

Configure Kong Gateway

You can use Ingress resources like this to configure Kong to route API calls to the microservices:

foo-ingress.yaml

This gist defines ingresses for both microservices. Download and apply them:

Now, Kong will route calls to /foo to the foo microservice and /bar to bar.
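For reference, here's a hypothetical Python generator for equivalent Ingress objects. It is not the gist's foo-ingress.yaml. It targets the networking.k8s.io/v1 Ingress API and assumes an IngressClass named kong exists in your cluster and that each Service listens on port 5000. Like the earlier manifests, it emits JSON that kubectl apply -f accepts.

```python
import json


def kong_ingress(name: str, path: str, service: str, port: int = 5000) -> dict:
    """Build a networking.k8s.io/v1 Ingress that sends `path` to `service` via Kong."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Assumes an IngressClass named "kong" is registered in the cluster.
            "ingressClassName": "kong",
            "rules": [
                {
                    "http": {
                        "paths": [
                            {
                                "path": path,
                                "pathType": "Prefix",  # /foo also matches /foo/...
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    items = [kong_ingress("foo", "/foo", "foo"), kong_ingress("bar", "/bar", "bar")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

If your backend only answers requests on /, you may also need Kong's konghq.com/strip-path annotation. Check the documentation for your controller version.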

You can check this using curl:
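If you'd rather script the check, here's a small sketch using only the Python standard library. It requests each path through the load balancer and reports the status code and JSON body. PROXY_IP is a hypothetical name for the environment variable holding the address. Because urlopen raises on 4xx and 5xx responses, the sketch catches HTTPError so that an unmatched path still reports its status. Kong typically answers such paths with a 404 and a short JSON message.

```python
import json
import os
import urllib.error
import urllib.request
from typing import Any, Optional, Tuple


def _decode(body: bytes) -> Optional[Any]:
    try:
        return json.loads(body)
    except ValueError:
        return None  # body was not JSON


def check_route(base_url: str, path: str, timeout: float = 5.0) -> Tuple[int, Optional[Any]]:
    """GET base_url + path and return (HTTP status, decoded JSON body or None)."""
    try:
        with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
            return resp.status, _decode(resp.read())
    except urllib.error.HTTPError as err:
        # Error responses still carry a status code and (usually) a body.
        return err.code, _decode(err.read())


if __name__ == "__main__":
    # PROXY_IP is a hypothetical variable name for the load balancer address.
    base = "http://" + os.environ["PROXY_IP"]
    for path in ("/foo", "/bar"):
        status, body = check_route(base, path)
        print(path, status, body)
```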

What Else Can You Do?

In this article, I have:

- Deployed a Kubernetes cluster on DigitalOcean

- Created Docker images for two dummy microservices, “foo” and “bar”

- Deployed the microservices to the Kubernetes cluster

- Installed the Kong Ingress Controller

- Configured Kong to route API calls to the appropriate backend microservice

I've demonstrated one simple use of Kong, but it’s only a starting point. Here are a few other things you can do with Kong for Kubernetes:

Authentication

By adding an authentication plugin to Kong, you can require your API callers to provide a valid JSON Web Token (JWT) and check each call against an Access Control List (ACL) to ensure callers are entitled to perform the relevant operations.

Certificate management

You can enable integration with cert-manager to provision and auto-renew SSL certificates for your API endpoints so that all your API traffic is encrypted as it travels over the public internet.

gRPC support

Kong natively supports gRPC, so it’s easy to add gRPC support to your API.

You can do a lot more with Kong, and I’d encourage you to look at the documentation and start to explore some of the other features.

The API gateway is a crucial part of a microservices architecture, and the Kong Ingress Controller is well suited for this role in a Kubernetes cluster. You can manage it in the same way as any other Kubernetes resource.

Cleanup

Don’t forget to destroy your Kubernetes cluster when you are finished with it so that you don’t incur unnecessary charges:

Note: If you delete the cluster first, the load balancer will be left behind. You can delete any leftover resources via the DigitalOcean web interface.
