Configuring a Kubernetes Application on Kong Konnect

Hello, everyone! Viktor Gamov here, a developer advocate at Kong. In this article, I would like to show you how to set up service connectivity using Kong Konnect and Kubernetes. I will deploy an application in Kubernetes, configure a runtime through Konnect and demonstrate some management capabilities like enabling plugins.

Let's dive right in!

Set Up Konnect, Kubernetes and Helm

As a prerequisite, I have already set up an account in Konnect. If you don't already have one, you can sign up for free and follow our getting started documentation, blog post or video.

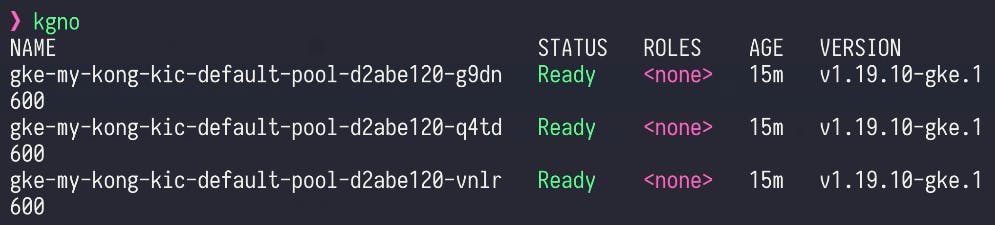

Also, I have prepared my three-node Kubernetes cluster in GCP.

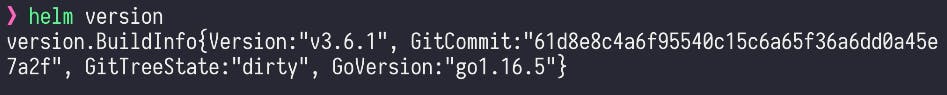

I also have Helm 3 installed on my computer.

Kong provides you with Helm Charts for Kong Gateway and Ingress Controller.

Follow this documentation to add the Kong repository to your computer.

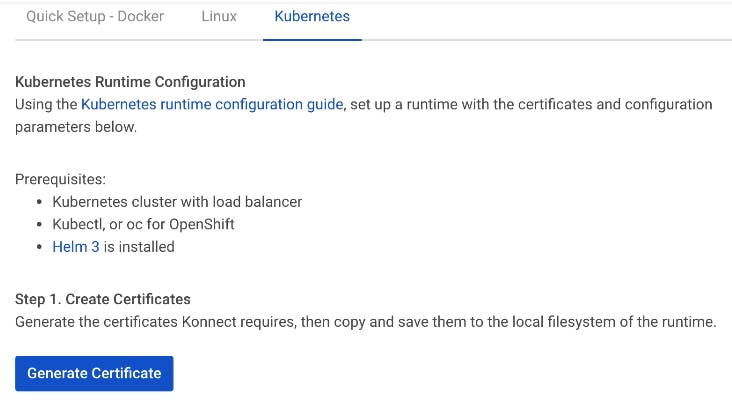

Next, we'll securely establish a connection between our control plane and our data plane. To do this, click Generate Certificate in the Runtimes section of Konnect.

You need to copy the certificate, root certificate and server private key to your file system.

We will deploy those to Kubernetes in a few steps.

Connect the Runtime in Kubernetes

Next, we should connect the runtime, which is our data plane, to the Konnect control plane. To do that, we need to create two secrets inside our Kubernetes cluster: one for the Kong cluster certificate and its private key, and the other for the root certificate. There's more detail on this in the Kong Konnect documentation.

Note: Make sure you've created the namespace.
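To make the shape of those secrets concrete, here is a minimal Python sketch that builds two Secret manifests as JSON, assuming one secret carries the certificate with its private key and the other carries the root certificate. The secret names `kong-cluster-cert` and `kong-cluster-ca`, the `kong` namespace, the `ca.crt` key and the placeholder PEM strings are all assumptions for illustration. Use the names from the Konnect documentation and the files you copied earlier.

```python
import base64
import json


def b64(text):
    """Kubernetes stores Secret data as base64-encoded strings."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def tls_secret(name, namespace, cert_pem, key_pem):
    """Build a kubernetes.io/tls Secret holding a certificate and its key."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "kubernetes.io/tls",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"tls.crt": b64(cert_pem), "tls.key": b64(key_pem)},
    }


def ca_secret(name, namespace, ca_pem):
    """Build a generic (Opaque) Secret holding the root certificate."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"ca.crt": b64(ca_pem)},  # key name is an assumption
    }


# Placeholder values; in practice, read the files saved from Konnect.
cluster_cert = tls_secret("kong-cluster-cert", "kong", "<certificate PEM>", "<private key PEM>")
cluster_ca = ca_secret("kong-cluster-ca", "kong", "<root certificate PEM>")

print(json.dumps(cluster_cert, indent=2))
print(json.dumps(cluster_ca, indent=2))
```

Because kubectl accepts JSON manifests as well as YAML, output like this could be saved to a file and applied to the cluster, although creating the secrets directly with kubectl is usually simpler.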

The next thing we'll need is the values.yaml file.

The values.yaml file is where we can put all our customizations for the Kong Helm Charts.

If you are interested in customizing this installation, take a look at a repository of examples. Your values.yaml might contain different links because your environment may use different URLs.

Apply the values.yaml file.

To access Kong, we need its external IP address. For example, when we create a service with a load balancer in Google Cloud, Google Cloud provides us with an external address. We need this address to communicate with our application service.

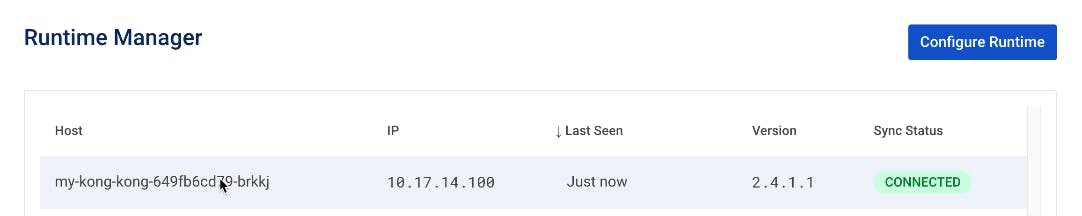

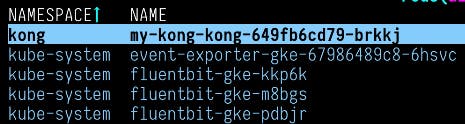

Next, let's make sure we have a connection to this runtime in Konnect and K9s.

It's connected in my Konnect Runtime Manager.

Here's my pod in K9s. It's connected to my control plane.

Now we have our data plane, our applications are running and our API gateway is running. Next, we need to manage this API gateway from the outside world.

Create the Mock Service in Konnect

We'll create a new service in Konnect ServiceHub called mock service. This service will proxy requests to Mockbin through my Kong Gateway.

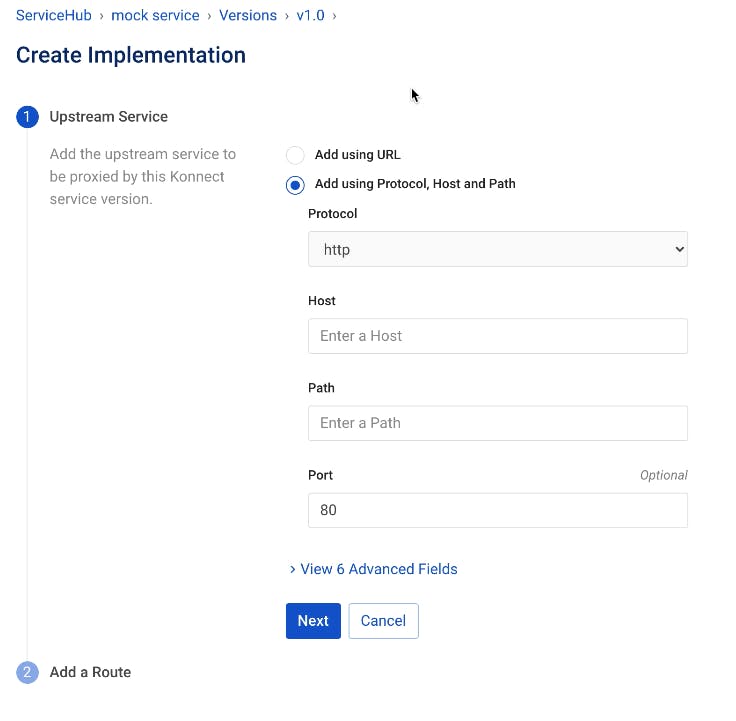

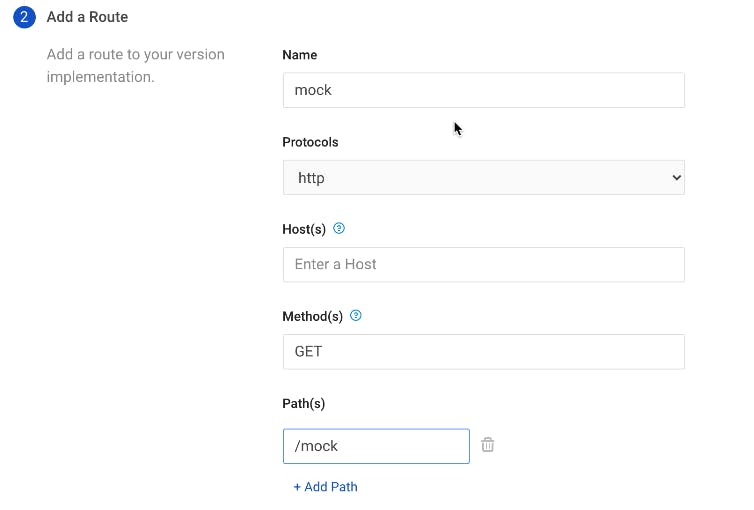

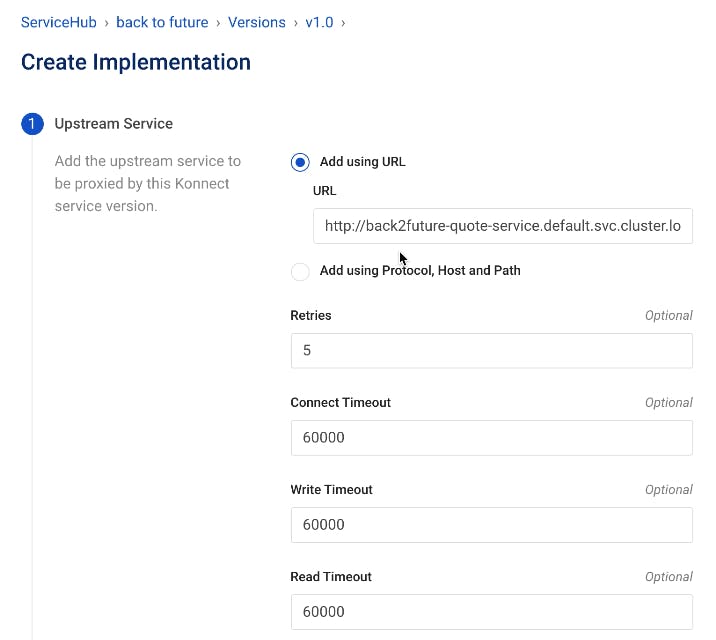

To create a new implementation, we'll go into our current version for the mock service and click Add New Implementation.

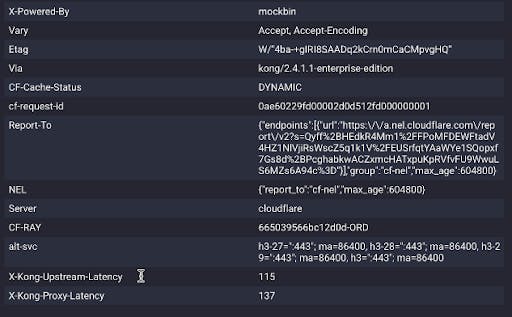

From Mockbin, we can try testing with foo and bar (http://mockbin.com/request?foo=bar&foo=baz), and I get the following response.
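The test URL repeats the `foo` parameter, so the request carries two values for the same key. As a small illustration of how such a query string is interpreted, this sketch parses the URL with Python's standard library. It runs locally and does not call Mockbin.

```python
from urllib.parse import parse_qs, urlsplit

url = "http://mockbin.com/request?foo=bar&foo=baz"

# parse_qs collects repeated keys into a list of values.
params = parse_qs(urlsplit(url).query)

print(params)  # {'foo': ['bar', 'baz']}
```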

If we try to hit the same URL through Kong, we'll see some extra headers.

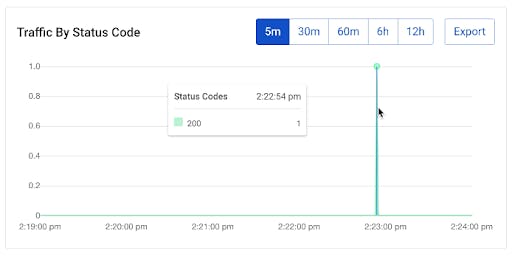

We should also be able to see this traffic in our Konnect Vitals data. I hit the service just once, so there's one spike.

So far, in the Konnect UI, we configured a mock service. That configuration propagated into our data plane, which is deployed in Kubernetes. We didn’t configure anything in Kubernetes, but suddenly our Kong Gateway service running inside Kubernetes started understanding the mock URL.

Configure the Service in Kubernetes

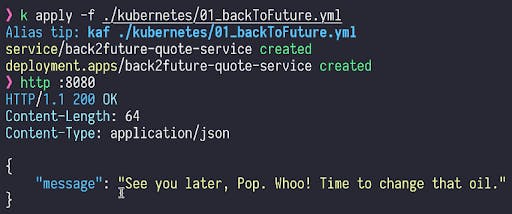

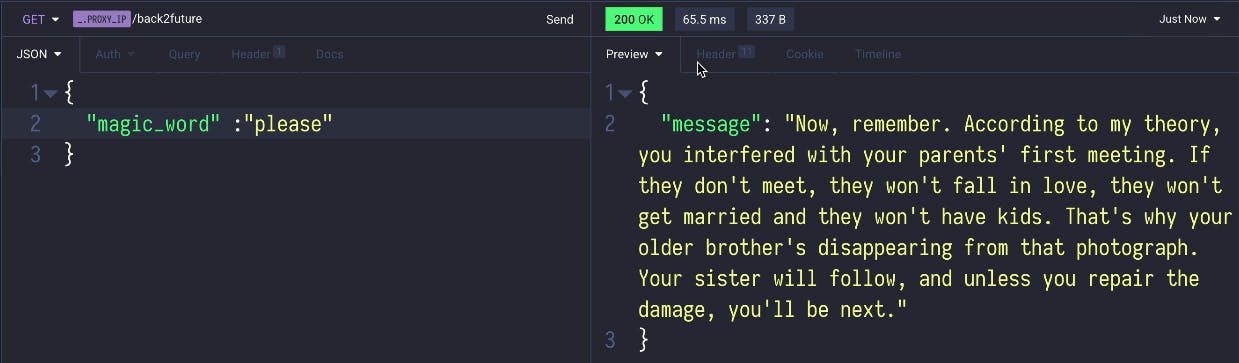

I wrote a small application called Quote Service that shows random quotes from Back to the Future. Once the application deploys, we’ll set up port forwarding. Then, once port forwarding is enabled, we’ll get responses from the service.

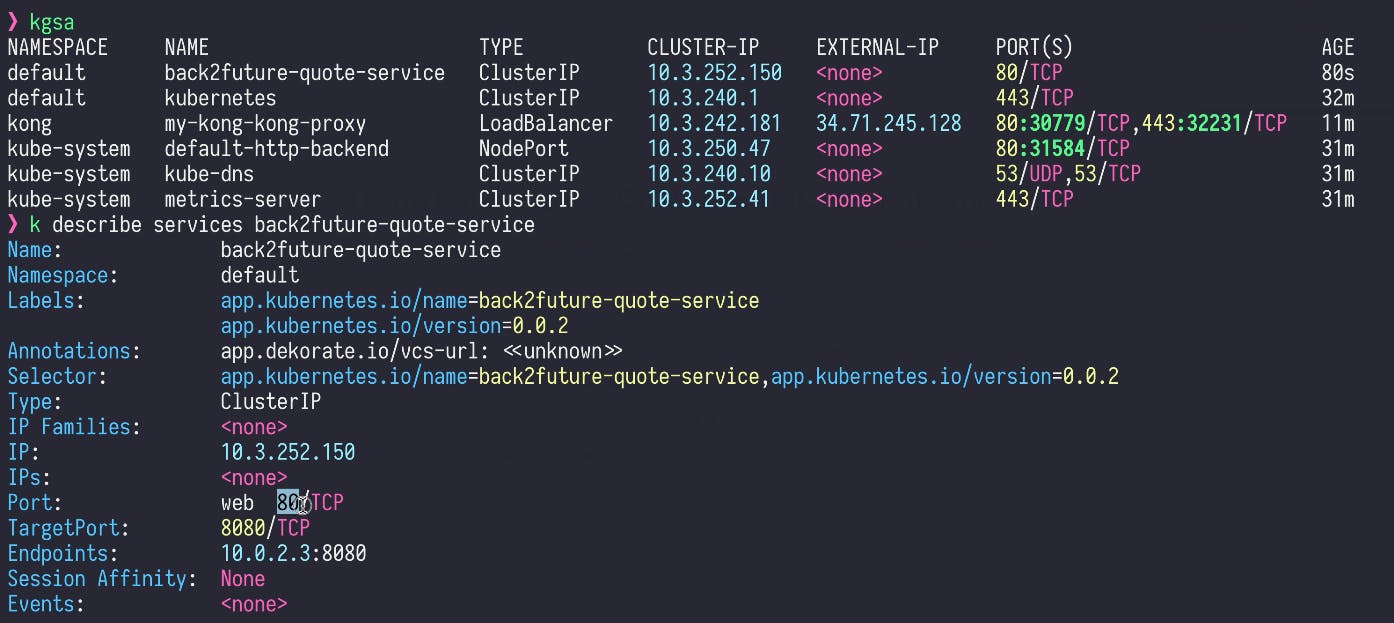

We'll hit this Kubernetes service through service discovery. So this Quote Service is now available on port 8080.

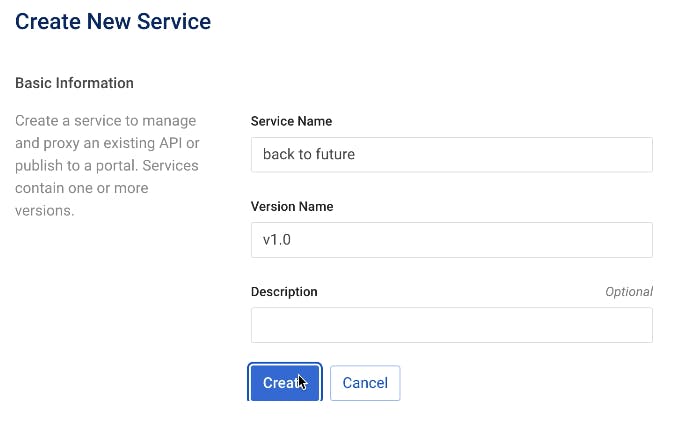

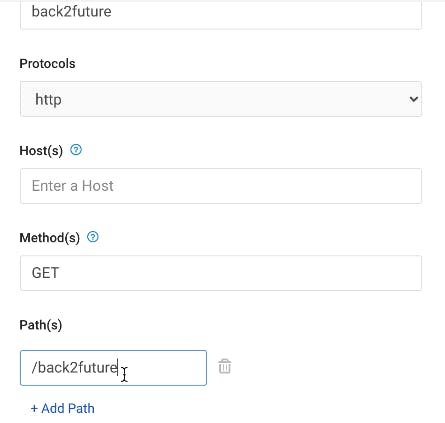

We'll go back to Konnect and create a new service and implementation again.

We'll add the route.

When we hit this now, it immediately goes through our Kong Ingress Controller. That's because the communication between the Konnect control plane and the data plane in Kubernetes is super fast.

Enable a Rate Limiting Policy

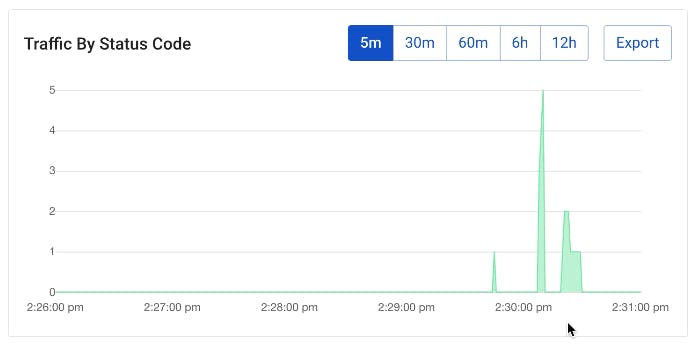

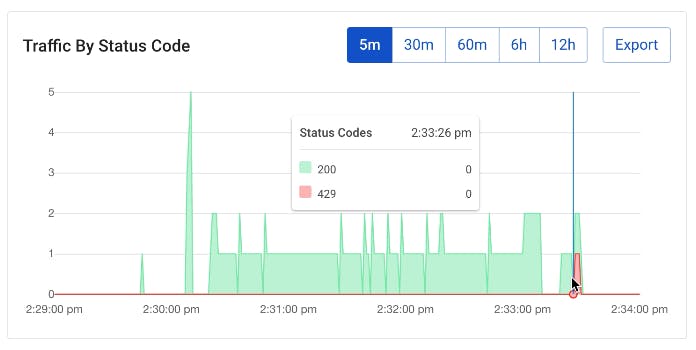

If we continue hitting this with requests on repeat, we should see that in the Konnect Vitals graph.

What should we do in real life to prevent this type of situation? That's where rate limiting policies come in.

We can quickly enable Kong's rate limiting plugin. Let's allow one request per second.

If we start getting too many requests, our mock service will push back with a 429.
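To illustrate the idea behind a limit of one request per second, here is a minimal fixed-window counter in Python. This is only a sketch of the general technique, not Kong's implementation. The class name `FixedWindowLimiter` and its method are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
import math


class FixedWindowLimiter:
    """Allow at most `limit` requests per fixed window of `window_seconds`."""

    def __init__(self, limit, window_seconds=1.0):
        self.limit = limit
        self.window_seconds = window_seconds
        self.current_window = None
        self.count = 0

    def status_for(self, now):
        """Return the HTTP status a request arriving at time `now` would get."""
        window = math.floor(now / self.window_seconds)
        if window != self.current_window:
            # A new window has started, so reset the counter.
            self.current_window = window
            self.count = 0
        self.count += 1
        return 200 if self.count <= self.limit else 429


limiter = FixedWindowLimiter(limit=1)
# Three requests inside the first second, then one in the next second.
statuses = [limiter.status_for(t) for t in (0.0, 0.4, 0.9, 1.1)]
print(statuses)  # [200, 429, 429, 200]
```

Only the first request in each one-second window gets through. The rest receive a 429 until the next window begins.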

And in Kong Vitals, we should be able to see errors in red.

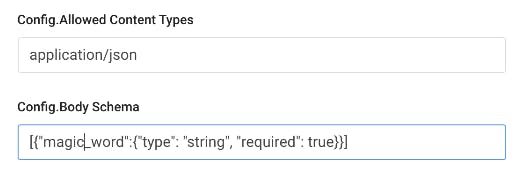

Set Up a Request Validator Policy

Another thing we could do is enable Kong's request validator plugin.

When our application starts getting bad requests, the gateway returns a 400 Bad Request response that says, “request body doesn’t conform to the schema.”

However, when I enter the magic_word, the application works as it should.
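The check itself can be pictured with a short Python sketch. It assumes a hypothetical schema with a single required string field named `magic_word`; the actual schema used in the demo is not shown here, and this is not how the Kong plugin is implemented internally.

```python
import json

# Hypothetical schema: one required string field.
SCHEMA = {"magic_word": {"type": str, "required": True}}

ERROR = "request body doesn't conform to the schema"


def validate_body(raw_body):
    """Return (status, message) for a raw JSON request body."""
    try:
        body = json.loads(raw_body)
    except json.JSONDecodeError:
        return 400, ERROR
    if not isinstance(body, dict):
        return 400, ERROR
    for field, rule in SCHEMA.items():
        if field not in body:
            if rule["required"]:
                return 400, ERROR
            continue
        if not isinstance(body[field], rule["type"]):
            return 400, ERROR
    return 200, "ok"


print(validate_body('{"magic_word": "please"}'))  # (200, 'ok')
print(validate_body('{"something_else": 1}'))
```

The value `"please"` is just a placeholder. This sketch only checks that the field is present and is a string.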

All of these plugins are on the runtime and don't require changing the application service. I think that’s pretty powerful.

Ready to Try Out Kong Konnect?

Start a free trial, or contact us if you have any questions as you're getting set up.

Once you've set up Kong Konnect and Kubernetes, you may find these other tutorials helpful: