Karpenter Installation

Our Karpenter deployment follows the instructions available on the official Karpenter site. To keep things simple, we are going to recreate the Cluster from scratch. First, delete the existing one with:

Create the Cluster

Get the following environment variables set:
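As a minimal sketch, the variables might look like the following. The cluster name is inferred from the KarpenterNodeRole-kong35-eks129-autoscaling Role referenced in this post. The region, namespace, and Karpenter version are hypothetical placeholders, so replace them with your own values.

```shell
# Assumed values: only the cluster name is inferred from the Role name used in this post.
export CLUSTER_NAME="kong35-eks129-autoscaling"
export K8S_VERSION="1.29"                 # assumption, suggested by "eks129" in the name
export AWS_PARTITION="aws"
export AWS_DEFAULT_REGION="us-west-2"     # hypothetical region
export KARPENTER_NAMESPACE="kube-system"  # hypothetical namespace
export KARPENTER_VERSION="0.35.0"         # hypothetical version, pick the one you need

# The node Role name follows the KarpenterNodeRole-<cluster name> pattern.
export KARPENTER_NODE_ROLE="KarpenterNodeRole-${CLUSTER_NAME}"

# With AWS credentials configured, the account ID can be looked up with:
#   export AWS_ACCOUNT_ID="$(aws sts get-caller-identity --query Account --output text)"

echo "${CLUSTER_NAME} ${KARPENTER_NODE_ROLE}"
```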

Karpenter leverages several AWS technologies to run, including Amazon EventBridge, Amazon Simple Queue Service (SQS) and IAM Roles. All these fundamental components are created with the following CloudFormation template.

You can check the AWS resources created by the template with:

After submitting the CloudFormation template, create the actual EKS Cluster with eksctl. Some comments regarding the declaration:

Unlike Cluster Autoscaler, Karpenter uses the new EKS Pod Identities mechanism to access the required AWS Services.

The iam section uses the podIdentityAssociations parameters to describe how Karpenter uses EKS Pod Identities to manage EC2 instances.

The iamIdentityMappings section manages the aws-auth ConfigMap to grant permission to the KarpenterNodeRole-kong35-eks129-autoscaling Role, created by the CloudFormation template, to access the Cluster.

We are deploying Karpenter in the kong NodeGroup again. The NodeGroup will run on a t3.large EC2 Instance.

The addons section asks eksctl to install the Pod Identity Agent.
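The sections described above can be sketched as an eksctl ClusterConfig modeled on the Karpenter getting-started guide. This is an illustration, not the exact declaration used for this post: the region, account ID, namespace, controller role and policy names, and NodeGroup sizes are assumptions.

```shell
# Hypothetical defaults; override them in your environment.
CLUSTER_NAME="${CLUSTER_NAME:-kong35-eks129-autoscaling}"
K8S_VERSION="${K8S_VERSION:-1.29}"
AWS_PARTITION="${AWS_PARTITION:-aws}"
AWS_DEFAULT_REGION="${AWS_DEFAULT_REGION:-us-west-2}"
AWS_ACCOUNT_ID="${AWS_ACCOUNT_ID:-111122223333}"   # placeholder account ID
KARPENTER_NAMESPACE="${KARPENTER_NAMESPACE:-kube-system}"

# Write the cluster declaration to a file (variables are expanded by the shell).
cat > cluster.yaml <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${CLUSTER_NAME}
  region: ${AWS_DEFAULT_REGION}
  version: "${K8S_VERSION}"
  tags:
    karpenter.sh/discovery: ${CLUSTER_NAME}

iam:
  withOIDC: true
  # Pod Identity association for the Karpenter controller service account
  podIdentityAssociations:
  - namespace: "${KARPENTER_NAMESPACE}"
    serviceAccountName: karpenter
    roleName: ${CLUSTER_NAME}-karpenter
    permissionPolicyARNs:
    - arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}

# Lets Nodes that use the Karpenter node Role join the Cluster (aws-auth ConfigMap)
iamIdentityMappings:
- arn: "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
  username: system:node:{{EC2PrivateDNSName}}
  groups:
  - system:bootstrappers
  - system:nodes

managedNodeGroups:
- name: kong
  instanceType: t3.large
  desiredCapacity: 1
  minSize: 1
  maxSize: 2
  labels:
    nodegroupname: kong

addons:
- name: eks-pod-identity-agent
EOF
```

With the file in place, the Cluster can be created with `eksctl create cluster -f cluster.yaml`.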

Check the main environment variables:

Install Karpenter with Helm Charts

Now we are ready to install Karpenter. By default, the Karpenter Helm Chart runs the controller with 2 replicas. For our simple exploration environment, we are changing that to 1.
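A minimal sketch of the installation, assuming a recent chart where the cluster settings live under `settings.*` (older charts use different keys). The interruption queue name assumes the CloudFormation template names the SQS queue after the cluster; the version and namespace are hypothetical.

```shell
# Hypothetical defaults; override them in your environment.
CLUSTER_NAME="${CLUSTER_NAME:-kong35-eks129-autoscaling}"
KARPENTER_NAMESPACE="${KARPENTER_NAMESPACE:-kube-system}"
KARPENTER_VERSION="${KARPENTER_VERSION:-0.35.0}"

# Helm values: a single replica instead of the chart default of 2.
cat > karpenter-values.yaml <<EOF
replicas: 1
settings:
  clusterName: ${CLUSTER_NAME}
  interruptionQueue: ${CLUSTER_NAME}
EOF

# Then install the chart (requires helm and access to the Cluster):
#   helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter \
#     --version "${KARPENTER_VERSION}" \
#     --namespace "${KARPENTER_NAMESPACE}" --create-namespace \
#     -f karpenter-values.yaml --wait
```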

You can check the Karpenter Pod's logs with:

Create NodePool and EC2NodeClass

With Karpenter installed we need to manage two constructs:

NodePool: sets constraints on the Nodes Karpenter is going to create. You can specify Taints and limit Node creation to certain zones, Instance Types, and CPU architectures such as AMD64 and ARM64.

EC2NodeClass: specific AWS settings for EC2 Instances. Each NodePool must reference an EC2NodeClass using the spec.template.spec.nodeClassRef setting.

Let's create both NodePool and EC2NodeClass based on the basic instructions provided via the Karpenter website.

NodePool

Note that we've added the nodegroupname=kong label to it. This is important to make sure the new Nodes will be available for the Konnect Data Plane Deployment. Moreover, the nodeClassRef setting refers to the default EC2NodeClass we create next. Please check the Karpenter documentation to learn more about NodePool configuration.
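As an illustration, a NodePool along these lines could look like the sketch below. It assumes the `karpenter.sh/v1beta1` API (newer Karpenter releases use `karpenter.sh/v1` with slightly different fields). The requirements, limits, and disruption settings follow the basic example from the Karpenter documentation and are not necessarily the ones used for this post.

```shell
# Write a NodePool manifest; the quoted heredoc keeps the content literal.
cat > nodepool.yaml <<'EOF'
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: default
spec:
  template:
    metadata:
      labels:
        nodegroupname: kong        # makes new Nodes eligible for the Data Plane
    spec:
      requirements:
      - key: kubernetes.io/arch
        operator: In
        values: ["amd64"]
      - key: kubernetes.io/os
        operator: In
        values: ["linux"]
      - key: karpenter.sh/capacity-type
        operator: In
        values: ["on-demand"]      # assumption; "spot" is also valid
      - key: karpenter.k8s.aws/instance-category
        operator: In
        values: ["c", "m", "r"]
      nodeClassRef:
        apiVersion: karpenter.k8s.aws/v1beta1
        kind: EC2NodeClass
        name: default
  limits:
    cpu: 1000                      # cap on total CPU this NodePool may provision
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: 720h
EOF
```

The manifest can then be applied with `kubectl apply -f nodepool.yaml`.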

EC2NodeClass

The EC2NodeClass declaration includes specific AWS settings to be used when creating a new Node, such as AMI Family, Instance Profile, Subnets, Security Groups, IAM Role, etc. Note that we are assigning the KarpenterNodeRole-kong35-eks129-autoscaling Role, created by the CloudFormation template, to the new Nodes.
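A matching EC2NodeClass might be sketched as follows, again assuming the `v1beta1` API. The selectors assume that subnets and security groups carry the `karpenter.sh/discovery` tag set to the cluster name, as in the Karpenter getting-started guide; the AMI family is also an assumption.

```shell
# Hypothetical default; override it in your environment.
CLUSTER_NAME="${CLUSTER_NAME:-kong35-eks129-autoscaling}"

cat > ec2nodeclass.yaml <<EOF
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2                              # Amazon Linux 2 (assumption)
  role: "KarpenterNodeRole-${CLUSTER_NAME}"   # Role created by the CloudFormation template
  subnetSelectorTerms:
  - tags:
      karpenter.sh/discovery: "${CLUSTER_NAME}"
  securityGroupSelectorTerms:
  - tags:
      karpenter.sh/discovery: "${CLUSTER_NAME}"
EOF
```

Apply it with `kubectl apply -f ec2nodeclass.yaml`. The `metadata.name` must match the name used in the NodePool's nodeClassRef.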

Konnect Data Plane Deployment and Consumption

Now that Karpenter is installed and configured, let's move on and install the Konnect Data Plane. Make sure you use the same declaration we used before and give it the same CPU and memory resources (cpu=1500m, memory=3Gi).

Since we are going to use HPA and Karpenter together, install the Metrics Server on your Cluster along with the HPA policy allowing 20 replicas to be created.
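For reference, an HPA policy with a 20-replica ceiling could be sketched like this. The HPA name, namespace, target Deployment name, and the 75% CPU target are hypothetical; use the values from your own Data Plane deployment.

```shell
# Metrics Server can be installed from its upstream manifest (requires cluster access):
#   kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

cat > kong-hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: kong-hpa              # hypothetical name
  namespace: kong             # hypothetical namespace
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: kong-kong           # hypothetical Data Plane Deployment name
  minReplicas: 1
  maxReplicas: 20             # allows up to 20 Data Plane replicas
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 75   # hypothetical threshold
EOF
```

Apply it with `kubectl apply -f kong-hpa.yaml`. As HPA adds replicas that no longer fit on the existing Nodes, the pending Pods are what prompt Karpenter to provision new Nodes.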

Finally, create the new Node for the Upstream and Load Generator as well as deploy the Upstream Service using the same declaration.

Start the same Fortio 60-minute-long load test with 5000 qps.

After some minutes we'll see both HPA and Karpenter in action. Here's one of the HPA results I got:

And here are the new Nodes Karpenter created:

Cluster Consolidation

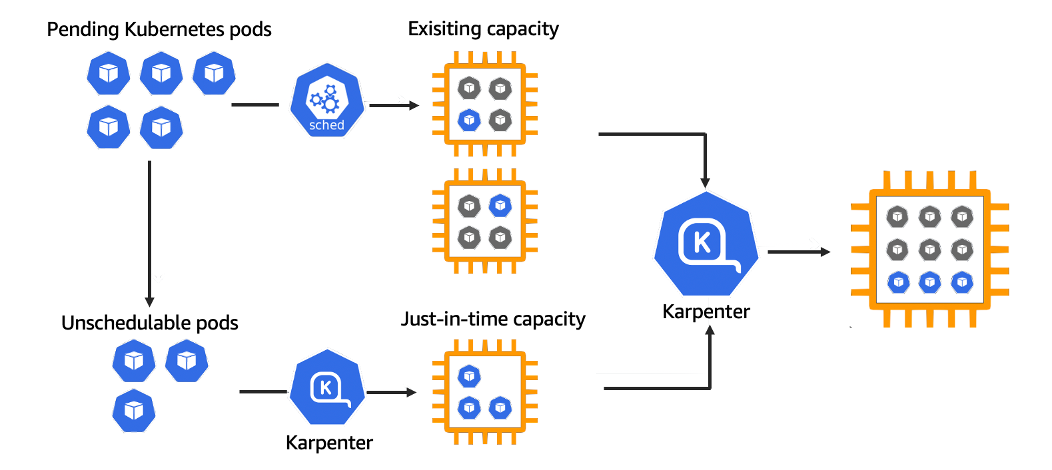

One of the most powerful Karpenter capabilities is Cluster Consolidation, that is, the ability to delete Nodes or replace them with a cheaper configuration.

You can see it in action if you leave the load test running a little longer. We'll see that Karpenter has consolidated the multiple Nodes into a single one:

From the API consumption perspective, here are the results I got. As you can see, the Data Plane layer with all its replicas was able to sustain the requested QPS with the expected latency.

The P99 latency: for example, # target 99% 0.0484703

The number of requests sent along with the QPS: All done 18000000 calls (plus 800 warmup) 98.065 ms avg, 4999.8 qps
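As a quick sanity check on the call count, 5000 qps sustained for 60 minutes should add up to the total Fortio reports. The variable names below are illustrative only.

```shell
QPS=5000
DURATION_SECONDS=$((60 * 60))                # 60-minute load test
EXPECTED_CALLS=$((QPS * DURATION_SECONDS))   # 5000 * 3600
echo "${EXPECTED_CALLS}"                     # prints 18000000
```

The result matches the 18000000 calls in the Fortio summary, and the measured 4999.8 qps is within a fraction of a percent of the target.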

As a fundamental principle of Elasticity, if we stop the load test by deleting the Fortio Pod, we should see HPA and Karpenter reducing the resources allocated to the Data Plane.

Conclusion

Kong takes performance and elasticity very seriously. When it comes to a Kubernetes deployment, it's important to support all available Elasticity technologies to give our customers flexibility and a lightweight, performant API gateway infrastructure.

This blog post series described how to deploy the Kong Konnect Data Plane to take advantage of the main Kubernetes-based Autoscaling technologies:

- VPA for vertical pod autoscaling

- HPA for horizontal pod autoscaling

- Cluster Autoscaler for node autoscaling based on EC2 ASG (Auto Scaling Groups)

- Karpenter for flexible, cost-effective node autoscaling

Kong Konnect simplifies API management and improves security for all services infrastructure. Try it for free today!