Modern API gateways must provide several key capabilities, including:

- Traffic Controls with features such as request routing, load balancing, and rate limiting

- Security Controls including authentication and authorization that conform to a number of common security standards and protocols

- Monitoring, logging, and analytics that support both open and proprietary standards

- Performance capabilities like caching and gateway-level request and response transformations

Critically, these functions abstract the requirements above away from your service developers, allowing them to focus on business needs.

Kong Gateway and GraphQL Plugins

Kong Gateway provides all of the above capabilities and more, including native GraphQL support for caching and rate limiting.

GraphQL Proxy Caching

In modern distributed architectures, performance optimization and rapid response times are pivotal. Proxy caching is a common technique for improving response times and reducing load on upstream services. With the Kong GraphQL Proxy Caching Advanced plugin, users get a simple mechanism to deploy a reverse proxy cache for GraphQL.

The caching plugin keys each cached element on the GraphQL query sent in the HTTP request body and, optionally, on a configurable set of request headers. It also supports a configurable time to live (TTL) so that admins can control the lifetime of a cached response.
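To make the keying idea concrete, here is a minimal Python sketch of a cache keyed on the request body plus selected headers, with a TTL. It is an illustration only and not the plugin's implementation: the names `cache_key` and `TTLCache`, the SHA-256 hashing, and the in-memory store are all assumptions made for this example.

```python
import hashlib
import time


def cache_key(body: bytes, headers: dict, vary_headers: list) -> str:
    """Hypothetical key: hash of the raw request body plus selected headers."""
    lowered = {k.lower(): v for k, v in headers.items()}
    digest = hashlib.sha256(body)
    # Only the configured headers take part in the key, in a stable order.
    for name in sorted(h.lower() for h in vary_headers):
        digest.update(f"\n{name}:{lowered.get(name, '')}".encode("utf-8"))
    return digest.hexdigest()


class TTLCache:
    """Toy in-memory cache; entries expire ttl_seconds after being stored."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            # The entry outlived its TTL, so treat it as a miss.
            del self._store[key]
            return None
        return value


# Usage: two requests with the same body and the same selected headers
# map to the same key, so the second one can be served from the cache.
body = b'{"query": "{ flights { number } }"}'
key = cache_key(body, {"Authorization": "Bearer abc"}, ["authorization"])
cache = TTLCache(ttl_seconds=300)
cache.set(key, b'{"data": {"flights": []}}')
```

Because this sketch hashes the raw bytes, two queries that differ only in whitespace would produce different keys. Whether the plugin normalizes queries before keying is not something this example tries to model.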

GraphQL Rate Limiting

Resource protection is critical to ensuring robust and available systems. Rate limiting is commonly used to mitigate denial-of-service attacks, helping to maintain system health and stability. GraphQL services are no different, and the Kong GraphQL Rate Limiting Advanced plugin provides a GraphQL-aware algorithm for calculating request cost.

GraphQL services typically respond to queries on a single HTTP path, and because clients compose GraphQL queries dynamically, ordinary HTTP path-based rate limiting is not sufficient. The same HTTP request to the same URL with the same method can vary greatly in cost depending on the semantics of the GraphQL operation in the body. See the documentation for more details on how request costs are calculated.

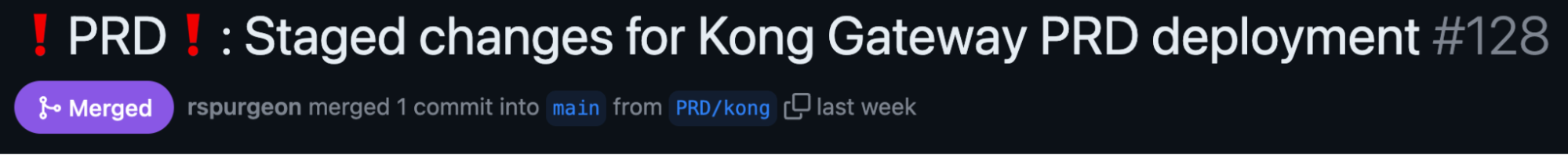
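A toy model shows why counting requests per path falls short. The Python sketch below assigns a cost to a query tree and charges it against a budget. It is not the plugin's cost algorithm: the `Field` type, the `count` multiplier, the cost formula, and the field names are all hypothetical and chosen only to illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class Field:
    """Hypothetical, simplified stand-in for a field in a parsed query."""
    name: str
    count: int = 1  # assumed number of items requested, e.g. a page size
    children: list = field(default_factory=list)


def query_cost(node: Field) -> int:
    """Toy cost: each field costs 1, multiplied by how many items it returns."""
    return node.count * (1 + sum(query_cost(c) for c in node.children))


class CostBudget:
    """Toy limiter that charges query cost, not request count, to a budget."""

    def __init__(self, budget: int):
        self.remaining = budget

    def allow(self, node: Field) -> bool:
        cost = query_cost(node)
        if cost > self.remaining:
            return False
        self.remaining -= cost
        return True


# Both queries would arrive as one POST to the same path.
cheap = Field("flight", children=[Field("number")])
expensive = Field(
    "flights",
    count=100,
    children=[
        Field("number"),
        Field("passengers", count=50, children=[Field("name")]),
    ],
)

print(query_cost(cheap))      # 2
print(query_cost(expensive))  # 10200
```

A per-path request counter would treat these two operations as identical, while a cost-based budget lets through thousands of the cheap query before a single expensive one exhausts it.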

Deploying GraphQL services and plugins with APIOps

In the previous post, we introduced the KongAir Experience API built with GraphQL. Today, we are going to look at deploying GraphQL plugins on Kong Gateway in front of our GraphQL service, protecting it and improving overall system performance.

KongAir follows an automated APIOps process using Kong’s decK tooling to deploy APIs onto Kong Konnect, a complete SaaS API management platform. All of the code we will look at in this post is available in the KongAir GitHub repository.

Services, routes and plugins are configured using a combination of OpenAPI specifications and decK declarative format. Let’s start by looking at the decK declarative definition that configures our GraphQL service: