Kong has supported Redis since its early versions. In fact, the integration between Kong Gateway and Redis is a powerful combination to enhance API management. We can summarize the integration points and use cases of Kong and Redis into three main groups:

- Kong Gateway: Kong integrates with Redis via plugins that enable it to leverage Redis’ capabilities for enhanced API functionality including Caching, Rate Limiting and Session Management.

- Kong AI Gateway: Starting with Kong Gateway 3.6, several new AI-based plugins leverage Redis Vector Databases to implement AI use cases, like Rate Limiting policies based on LLM tokens, Semantic Caching, Semantic Prompt Guards, and Semantic Routing.

- RAG and Agent Applications: Kong AI Gateway and Redis can collaborate for AI-based applications using frameworks like LangChain and LangGraph.

For all use cases, Kong supports multiple flavors of Redis deployments, including Redis Community Edition (optionally with Redis Cluster for horizontal scalability or Redis Sentinel for high availability), Redis Software (which provides enterprise capabilities often needed for production workloads), and Redis Cloud (available on AWS and GCP, and as Azure Managed Redis on Azure).

This blog post focuses on how Kong and Redis can be used to address Semantic Processing use cases like Similarity Search and Semantic Routing across multiple LLM environments.

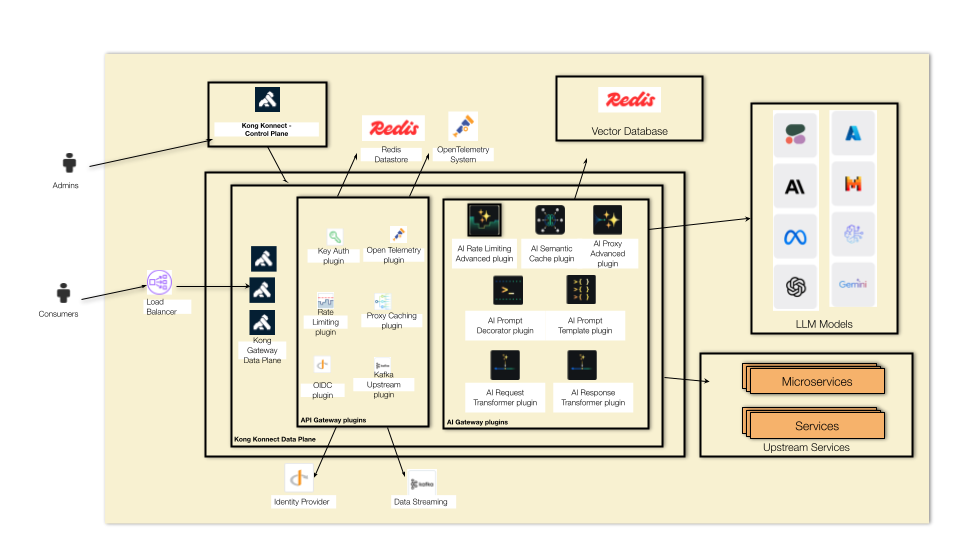

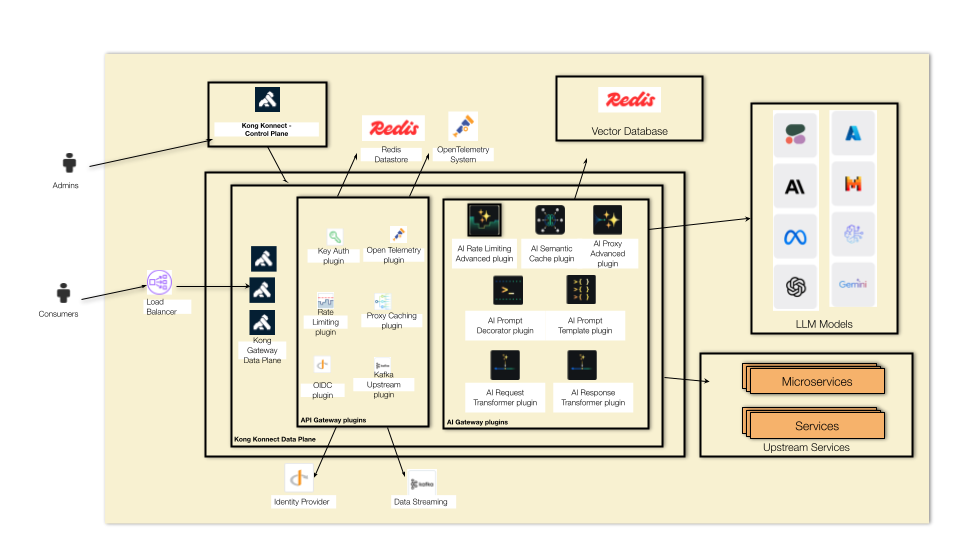

Kong AI Gateway Reference Architecture

To get started let's take a look at a high-level reference architecture of the Kong AI Gateway. As you can see, the Kong Gateway Data Plane, responsible for handling the incoming traffic, can be configured with two types of Kong Plugins:

Kong Gateway plugins

One of the main capabilities provided by Kong Gateway is extensibility. An extensive list of plugins allows you to implement specific policies to protect and control the APIs deployed in the Gateway. The plugins offload critical and complex processing usually implemented by backend services and applications. With the Gateway and its plugins in place, the backend services can focus on business logic only, leading to a faster application development process. Each plugin is responsible for specific functionality, including:

- Authentication/authorization: to implement security mechanisms such as Basic Authentication, LDAP, Mutual TLS (mTLS), API Key, OPA (Open Policy Agent) based access control policies, etc.

- Integration with Kafka-based Data/Event Streaming infrastructures.

- Log processing: to externalize all requests processed by the Gateway to third-party infrastructures.

- Analytics and monitoring: to provide metrics to external systems, including OpenTelemetry-based systems and Prometheus.

- Traffic control: to implement canary releases, mocking endpoints, routing policies based on request headers, etc.

- Transformations: to transform requests before routing them to the upstreams and to transform responses before returning to the consumers.

- For IoT projects, where MQTT over WebSockets connections are extensively used, Kong provides WebSockets Size Limit and WebSockets Validator plugins to control the events sent by the devices.

Kong Gateway also provides plugins that integrate with Redis for use cases such as caching, rate limiting, and session management.

Kong AI Gateway plugins

On the other hand, Kong AI Gateway leverages the existing Kong API Gateway extensibility model to provide specific AI-based plugins, more precisely to protect LLM infrastructures:

- AI Proxy and AI Proxy Advanced plugins: the Multi-LLM capability allows the AI Gateway to abstract and load balance multiple LLM models based on policies including latency, model usage, semantics, etc.

Prompt Engineering:

- AI Prompt Template plugin, responsible for pre-configuring AI prompts for users.

- AI Prompt Decorator plugin, which injects messages at the start or end of a caller's chat history.

- AI Prompt Guard plugin lets you configure a series of PCRE-compatible regular expressions to allow and block specific prompts, words, phrases, or otherwise and have more control over an LLM service.

- AI Semantic Prompt Guard plugin, for self-configurable semantic (or pattern-matching) prompt protection.

- AI Semantic Cache plugin, which caches responses based on a similarity threshold to improve performance (and therefore end-user experience) and reduce cost.

- AI Rate Limiting Advanced plugin, which lets you tailor per-user or per-model policies based on the tokens returned by the LLM provider, or craft a custom function to count the tokens for requests.

- AI Request Transformer and AI Response Transformer plugins seamlessly integrate with the LLM, enabling introspection and transformation of the request's body before proxying it to the Upstream Service and prior to forwarding the response to the client.

By leveraging the same underlying core of Kong Gateway, and combining both categories of plugins, we can implement powerful policies and reduce complexity in deploying the AI Gateway capabilities as well.

The first use case we are going to focus on is Semantic Cache, where the AI Gateway plugin integrates with Redis to perform Similarity Search. Then, we are going to explore how the AI Proxy Advanced Plugin can take advantage of Redis to implement Semantic Routing across multiple LLM models.

As a remark, AI Rate Limiting Advanced and AI Semantic Prompt Guard Plugins are two other examples where AI Gateway and Redis work together.

Before diving into the first use case, let's highlight and summarize the main concepts Kong AI Gateway and Redis rely on.

Embeddings

Embeddings (aka Vectors or even Vector Embeddings) are a numerical representation of unstructured data like text, images, etc. In an LLM context, the dimensionality of an Embedding refers to the number of characteristics captured in the vector representation of a given sentence. More dimensions can capture more nuance, at the cost of additional storage and compute.

There are multiple text representation and embedding methods used in NLP, like:

- One-hot

- word2vec

- TF-IDF (Term frequency-inverse document frequency)

- GloVe (Global Vectors for Word Representation)

- BERT (Bidirectional Encoder Representations from Transformers)

Here's an example of a Python script using “Sentence Transformers” module (aka SBERT, or Sentence-BERT, maintained by Hugging Face), using the “all-mpnet-base-v2” Embedding Model to encode a simple sentence into an embedding:

The “all-mpnet-base-v2” Embedding Model encodes sentences to a 768-dimensional Vector. As an experiment, we have truncated the vector to 3 dimensions only.
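The original script is not reproduced here, so the following is only a minimal sketch of what such a script could look like. The helper names `embed` and `truncate` are illustrative assumptions; `SentenceTransformer(...)` and `encode(...)` are the library's own calls.

```python
def truncate(vector, dims):
    """Keep only the first `dims` components of an embedding."""
    return [float(x) for x in vector[:dims]]


def embed(sentence, model_name="all-mpnet-base-v2"):
    """Encode one sentence into its full embedding (768 dimensions for this model)."""
    # Imported lazily so that truncate() can be used without the package installed.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name)  # downloads the model on first use
    return model.encode(sentence)
```

Calling `print(truncate(embed("Who is Joseph Conrad?"), 3))` prints three floating-point values; the exact numbers depend on the model version. Recent versions of the library also accept a `truncate_dim` argument on the `SentenceTransformer` constructor, which should achieve the same truncation.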

The output should be a three-element array of floating-point numbers.

Vector Database

A Vector Database stores and searches Vector Embeddings. They are essential for AI-based applications supporting images, texts, etc., providing Vector Stores, Vector Indexes, and more importantly, algorithms to implement Vector Similarity Searches.

Redis provides nice introductions to Vector Embeddings and Vector Databases. Redis Query Engine is a built-in Redis capability that provides vector search (as well as other types of search, such as full-text and numeric) and is known for industry-leading performance. Redis is built for speed: it delivers sub-millisecond latency by leveraging in-memory data structures and advanced optimizations to power real-time applications at scale. This is critical for gateway use cases, where the deployment sits in the “hot path” of LLM queries.

In addition, Redis can be deployed as enterprise software and/or as a cloud service, thereby adding several enterprise capabilities including:

- Scalability: Redis can scale horizontally, handle dynamic workloads, and manage massive datasets across distributed architectures.

- High availability and persistence: Redis supports high availability with built-in support for multi-AZ deployments, seamless failover, data persistence through backups and active-active architecture, enabling robust disaster recovery and consistent application performance.

- Flexibility: Redis natively supports multiple data structures such as JSON, Hash, Strings, Streams, and more to suit diverse application needs.

- Broad ecosystem: As one of the world’s most popular databases, Redis has a rich ecosystem of client libraries (such as redisvl for GenAI use cases), developer tools, and integrations.

Vector Similarity Search

With similarity search we can find, in a typically unstructured dataset, items similar (or dissimilar) to a certain presented item. For example, given a picture of a cell phone, try to find similar ones considering its shape, color, etc. Or, given two pictures, check the similarity score between them.

In our NLP context, we are interested in similar responses returned by the LLM when applications send prompts to it. For example, the following two sentences, “Who is Joseph Conrad?” and “Tell me more about Joseph Conrad”, should have a high similarity score, semantically speaking.

We can extend our Python script to try that out:
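The extended script is not reproduced here either. A minimal sketch could look like the following; the function name `pairwise_similarity` and the choice of the third sentence are assumptions made for illustration, while `encode` and `similarity` are methods of the `SentenceTransformer` class in recent versions of the library.

```python
SENTENCES = [
    "Who is Joseph Conrad?",
    "Tell me more about Joseph Conrad",
    "Living is easy with eyes closed",  # assumed third, unrelated sentence
]


def pairwise_similarity(sentences, model_name="all-mpnet-base-v2"):
    """Encode the sentences and cross-check the similarity of all embeddings."""
    # Imported lazily because the package and model download are heavy.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name)
    embeddings = model.encode(sentences)
    print(embeddings.shape)  # (number of sentences, embedding dimensions)
    # Returns a square matrix: entry [i][j] scores sentence i against sentence j.
    return model.similarity(embeddings, embeddings)
```

Calling `print(pairwise_similarity(SENTENCES))` prints the shape followed by the similarity matrix.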

The output should show the shape of the embeddings, followed by the pairwise similarity scores.

The “shape” is composed of 3 embeddings of 768 dimensions each. The code asks to cross-check the similarity of all embeddings. The more similar they are, the higher the score. Notice that the “1.0000” score is returned, as expected, when self-checking a given embedding.

The “similarity” method returns a “Tensor” object, which is implemented by PyTorch, the ML library used by Sentence Transformers.

There are several techniques for similarity calculation, based on the distance or the angle between the vectors. The most common methods are:

- Euclidean distance (L2)

- Inner product (IP)

- Cosine similarity
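To make the distance-versus-angle distinction concrete, here is a small pure-Python sketch of three widely used measures: inner product, Euclidean distance, and cosine similarity. The function names are illustrative; production code would normally rely on a numerical library instead.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a, b):
    """Straight-line (L2) distance: smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between the vectors: 1.0 means same direction."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def cosine_distance(a, b):
    """Distance form of cosine similarity: 0.0 means same direction."""
    return 1.0 - cosine_similarity(a, b)
```

Note that a similarity score grows as vectors get closer, while a distance shrinks. That difference matters when reading the scores returned by a vector database, which typically reports distances.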

In a Vector Database context, Vector Similarity Search (VSS) is the process of finding vectors in the vector database that are similar to a given query vector.

RediSearch and Redis VSS

Back in 2022, Redis launched RediSearch 2.4, a text search engine built on top of Redis data store, with RedisVSS (Vector Similarity Search).

To get a better understanding of how RedisVSS works, consider this Python script implementing a basic similarity search. Make sure you have set the “OPENAI_API_TYPE” environment variable to “openai” before running the script.

Initially, the script creates an index to receive the embeddings returned by OpenAI. We are using the “text-embedding-3-small” OpenAI model, which has 1536 dimensions, so the index has a VectorField defined to support those dimensions.
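As a rough illustration of such an index, the sketch below builds the arguments of an `FT.CREATE` command with a 1536-dimension vector field. The index name `index1` comes from the script; the key prefix, the field names, the HASH storage type, the FLAT algorithm, and the COSINE metric are assumptions made for the example.

```python
def ft_create_args(index="index1", prefix="doc:", field="embedding", dim=1536):
    """Build the FT.CREATE arguments for an index with one vector field."""
    return [
        "FT.CREATE", index,
        "ON", "HASH",            # assumed storage type for this sketch
        "PREFIX", "1", prefix,   # index only keys starting with the prefix
        "SCHEMA",
        "content", "TEXT",       # hypothetical field holding the original text
        field, "VECTOR", "FLAT", "6",  # 6 = number of attribute tokens that follow
        "TYPE", "FLOAT32",
        "DIM", str(dim),         # must match the embedding model's dimensions
        "DISTANCE_METRIC", "COSINE",
    ]
```

With a redis-py client, the arguments could be sent with `client.execute_command(*ft_create_args())`.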

Next, the script has two steps:

- Stores the embeddings of a reference text, generated by OpenAI Embedding Model.

- Performs Vector Range queries passing two new texts to check their similarity with the original one.

Here's a diagram representing the steps:

The code assumes you have a Redis environment available. Please check the Redis Products documentation to learn more about it. It also assumes you have two environment variables defined: OpenAI API Key and Load Balancer address where Redis is available.

The script was coded using two main libraries:

While executing the code, you can monitor Redis with, for example, redis-cli monitor. The code line res = client.ft("index1").search(query, query_params).docs should log a message like this one:

Let's examine the command. Implicitly, the .ft("index1") method call gives us support to Redis Search Commands, as the .search(query, query_params) call sends an actual search query using the FT.SEARCH Redis command.

The FT.SEARCH command receives the parameters defined in both query and query_params objects. The query parameter, defined using the Query object, specifies the actual command as well as the return fields and dialect.

We want to return the distance (similarity) score, so we must yield it via the $yield_distance_as attribute.

Query Dialects enable enhancements to the query API, allowing the introduction of new features while maintaining compatibility with existing applications. For Vector Queries like ours, the Query Dialect should be set to a value equal to or greater than 2. Please check the specific Query Dialect documentation page to learn more about it.

On the other hand, the query_params object defines extra parameters, including the radius and the embedding to be considered in the search.

The final FT.SEARCH also includes parameters to define offset and number of results. Check the documentation to learn more about it.
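To show how these pieces fit together, the following sketch assembles the arguments of a Vector Range `FT.SEARCH` command by hand. The field name `embedding`, the returned field name `score`, and the parameter names `radius` and `vec` are assumptions for the example; the little-endian FLOAT32 packing assumes a typical x86 or ARM host.

```python
import struct


def to_blob(vector):
    """Pack floats as little-endian FLOAT32 bytes for a FLOAT32 vector field."""
    return struct.pack(f"<{len(vector)}f", *vector)


def range_search_args(index, field, vector, radius=1, offset=0, num=10):
    """Build FT.SEARCH arguments for a Vector Range query that yields the distance."""
    query = f"@{field}:[VECTOR_RANGE $radius $vec]=>{{$YIELD_DISTANCE_AS: score}}"
    return [
        "FT.SEARCH", index, query,
        "RETURN", "1", "score",                  # return only the yielded distance
        "LIMIT", str(offset), str(num),          # offset and number of results
        "PARAMS", "4", "radius", str(radius), "vec", to_blob(vector),
        "DIALECT", "2",                          # vector queries need dialect >= 2
    ]
```

Here `$radius` and `$vec` are placeholders resolved from the `PARAMS` clause, which mirrors the split between the query and query_params objects described above.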

In fact, the FT.SEARCH command sent by the script is just an example of Vector Search supported by Redis. Basically, Redis supports two main types of searches:

- KNN Vector Search: this algorithm finds the top “k-nearest neighbors” to a query vector.

- Vector Range Query: the query type the script uses. It filters the index based on a radius parameter, which defines the maximum semantic distance allowed between the input query vector and an indexed vector.

Our script's intent is to examine the distance between the two vectors and not implement any filter. That’s the reason why it has set the Vector Range Query with "radius": 1.
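The difference between the two query types can be illustrated with a brute-force, in-memory sketch. This is not how Redis implements them; it only shows what each query returns, using cosine distance over a small dictionary of hypothetical keys and vectors.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity: 0.0 for identical directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def _scored(index, query):
    """All (distance, key) pairs, nearest first."""
    return sorted((cosine_distance(vec, query), key) for key, vec in index.items())


def knn(index, query, k):
    """KNN: always returns the k nearest entries, however far away they are."""
    return _scored(index, query)[:k]


def vector_range(index, query, radius):
    """Range query: returns every entry whose distance is within the radius."""
    return [(d, key) for d, key in _scored(index, query) if d <= radius]
```

With a radius large enough to admit every stored vector, a range query behaves like an unfiltered listing with distances attached, which is the intent behind the wide radius used in the script.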

After running the script, its output should be like:

That means that, as expected, the stored embedding, related to the reference text "Who is Joseph Conrad?", is closer to the first new text, “Tell me more about Joseph Conrad”, than to the second one “Living is easy with eyes closed”.

Now that we have an introductory perspective of how we can implement Vector Similarity Searches with Redis, let’s examine the Kong AI Gateway Semantic Cache Plugin which is responsible for implementing semantic caching. We'll see it performs similar searches to what we have done with the Python script.

Kong AI Semantic Cache Plugin

To get started, logically speaking, we can analyze the Caching flow from two different perspectives:

- Request #1: We don't have any data cached.

- Request #2: Kong AI Gateway has stored some data in the Redis Vector Database.

Here's a diagram illustrating the scenarios:

Konnect Data Plane deployment

Before exploring how the Kong AI Gateway Semantic Cache Plugin and Redis work together we have to deploy a Konnect Data Plane (based on Kong Gateway). Please, refer to the Konnect documentation to register and spin your first Data Plane up.

Kong Gateway Objects creation

Next, we need to create Kong Gateway objects (Kong Gateway Services, Kong Routes and Kong Plugins) to implement the use case. There are several ways to do that, including Konnect RESTful APIs, Konnect GUI, etc. With decK (declarations for Kong), we can manage Kong Konnect configuration and create Kong Objects in a declarative way. Please check the decK documentation to learn how to use it with Konnect.

AI Proxy and AI Semantic Cache Plugins

Here's the decK declaration we are going to submit to Konnect to implement the Semantic Cache use case:

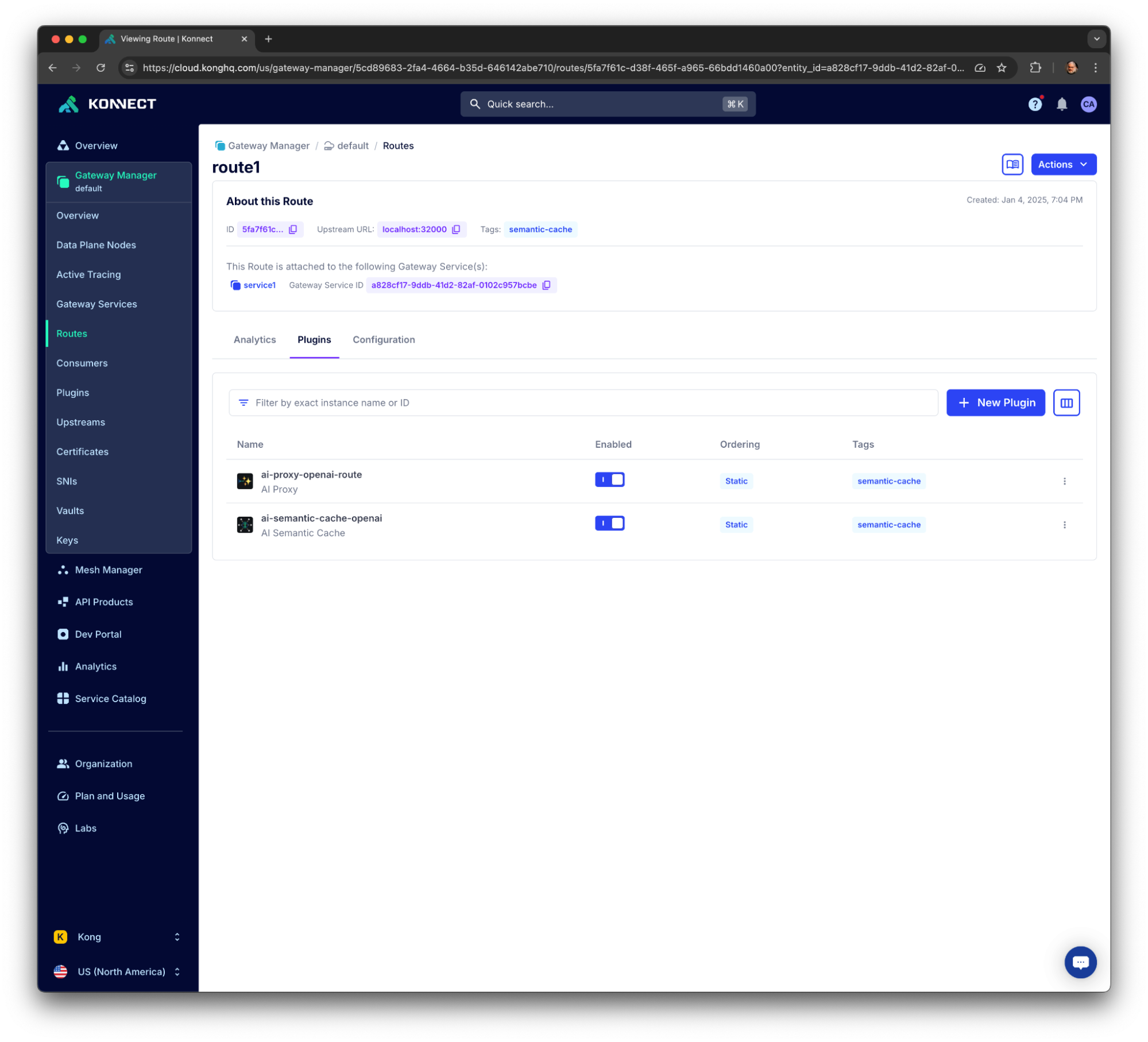

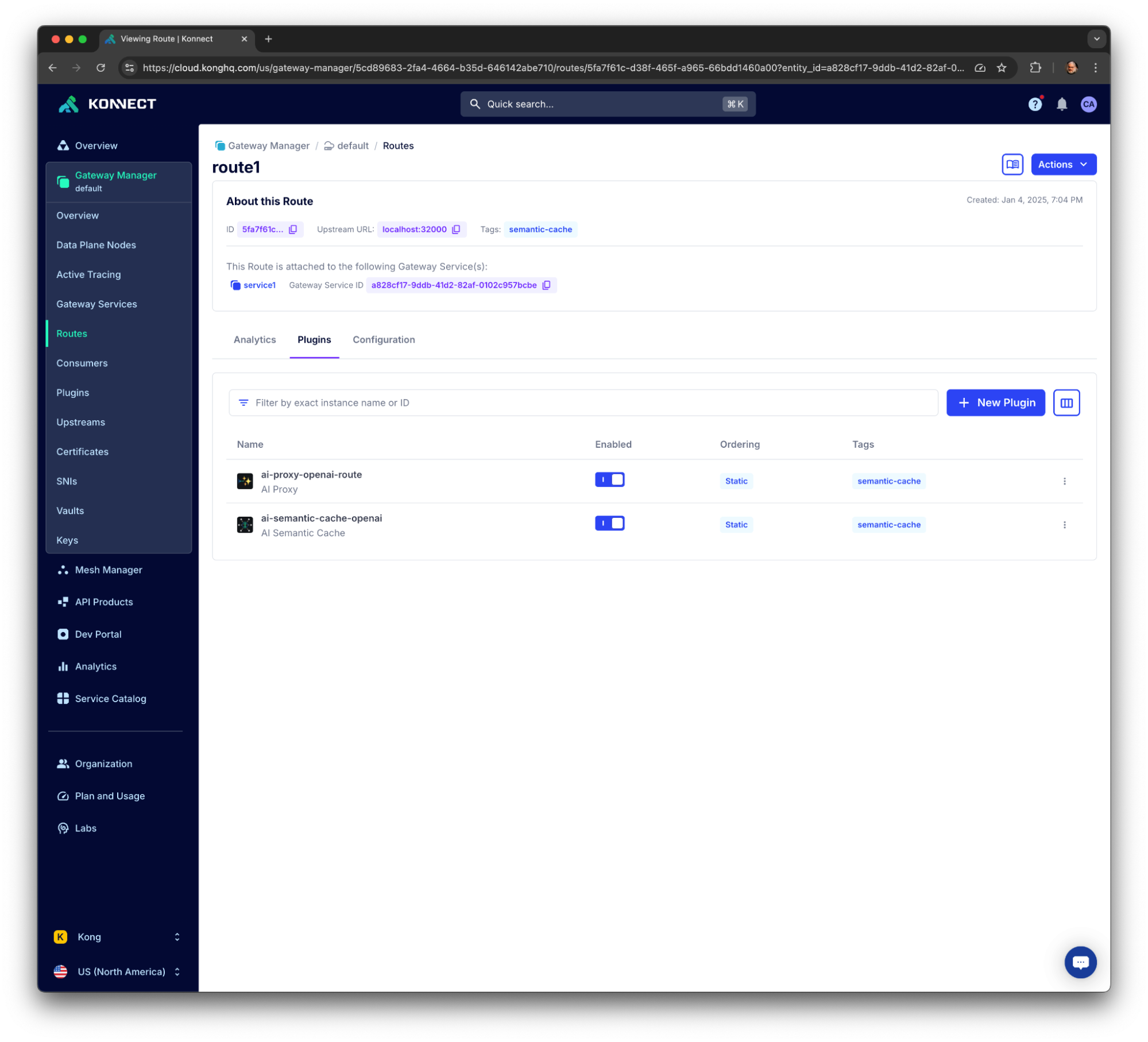

The declaration creates the following Kong Objects in the “default” Konnect Control Plane:

After submitting the decK declaration to Konnect, you should see the new Objects using the Konnect UI:

Request #1

With the new Kong Objects in place, the Kong Data Plane is refreshed with them, and we are ready to start sending requests to it. Here's the first one with the same content we used in the Python script:
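For readers who prefer Python, the sketch below builds an equivalent chat request with the standard library. The base URL and the route path are hypothetical placeholders, since they depend on your Data Plane address and on the Kong Route you configured; the payload uses the OpenAI-style chat format.

```python
import json
import urllib.request


def build_chat_request(base_url, route_path, prompt):
    """Build a POST request carrying an OpenAI-style chat payload."""
    payload = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + route_path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return (status, headers, parsed JSON body)."""
    with urllib.request.urlopen(request) as response:
        return response.status, dict(response.headers), json.loads(response.read())
```

For example, `send(build_chat_request("http://localhost:8000", "/openai-route", "Who is Joseph Conrad?"))` would return the status, the response headers (including the cache-related ones), and the body, assuming a Data Plane is listening at that hypothetical address.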

You should get a response like this, meaning the Gateway successfully routed the request to OpenAI, which returned an actual message to us. From a Semantic Caching and Similarity perspective, the most important headers are:

- X-Cache-Status: Miss, telling us the Gateway wasn't able to find any data in the cache to satisfy the request.

- X-Kong-Upstream-Latency and X-Kong-Proxy-Latency, showing the latency times.

Redis Introspection

Kong Gateway creates a new index. You can check it with redis-cli ft._list. The index should be named like: idx:vss_kong_semantic_cache:511efd84-117b-4c89-87cb-f92f9b74a6c0:openai-gpt-4

And redis-cli ft.search idx:vss_kong_semantic_cache:511efd84-117b-4c89-87cb-f92f9b74a6c0:openai-gpt-4 "*" return 1 - should return the ID of OpenAI's response. Something like:

The following json.get command should return the actual response received from OpenAI

More importantly, redis-cli monitor tells us all the commands the plugin sent to Redis to implement the cache. The main ones are:

1. "FT.INFO" to check if the index exists.

2. There's no index, so create it. The actual command is the following. Notice the index is similar to the one we used in the Python script.

3. Run a VSS to check if there's a key which satisfies the request. The command looks like this. Again, notice the command is the same as the one we used in our Python script. The “range” parameter reflects the “threshold” configuration used in the decK declaration.

4. Since the index has just been created, the VSS couldn't find any key so the plugin sends a "JSON.SET" command to add a new index key with the embeddings received from OpenAI.

5. "expire" command to set the expiration time of the key as, by default, 300 seconds.
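The overall flow can be summarized with an in-memory sketch. It is only an illustration of the miss-then-store and hit paths described above, not the plugin's implementation: the class and function names are hypothetical, a Python list stands in for Redis, and the injectable clock exists only to make expiration easy to demonstrate.

```python
import math
import time


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


class SemanticCache:
    def __init__(self, threshold, ttl=300, clock=time.monotonic):
        self.threshold = threshold  # maximum distance accepted as a match
        self.ttl = ttl              # seconds before an entry expires
        self.clock = clock
        self.entries = []           # (expires_at, embedding, response)

    def lookup(self, embedding):
        now = self.clock()
        self.entries = [e for e in self.entries if e[0] > now]  # drop expired
        best = min(self.entries,
                   key=lambda e: cosine_distance(e[1], embedding),
                   default=None)
        if best is not None and cosine_distance(best[1], embedding) <= self.threshold:
            return best[2]
        return None

    def store(self, embedding, response):
        self.entries.append((self.clock() + self.ttl, embedding, response))


def handle(cache, embedding, call_llm):
    """Return (cache status, response), calling the LLM only on a miss."""
    cached = cache.lookup(embedding)
    if cached is not None:
        return "Hit", cached
    response = call_llm()
    cache.store(embedding, response)
    return "Miss", response
```

The first request for a prompt results in a miss and one LLM call; a later request whose embedding falls within the threshold is served from the cache until the entry expires.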

You can check the new index key using the Redis dashboard:

Request #2

If we send another request with similar content, the Gateway should return the same response, since it takes it from the cache, as indicated by the X-Cache-Status: Hit header. In addition, the response has specific cache-related headers: X-Cache-Key and X-Cache-Ttl.

The response should be returned faster, since the Gateway didn't have to route the request to OpenAI.

If you send another request with non-similar content, the plugin creates a new index key. For example:

Check the index keys again with:

Kong AI Proxy Advanced Plugin and Semantic Routing

Kong AI Gateway provides several semantic-based capabilities besides caching. A powerful one is Semantic Routing. With such a feature, we can let the Gateway decide the best model to handle a given request. For example, you might have models trained on specific topics, like Mathematics or Classical Music, so it'd be interesting to route the requests depending on the presented content. By analyzing the content of the request, the plugin can match it to the most appropriate model that is known to perform better in similar contexts. This feature enhances the flexibility and efficiency of model selection, especially when dealing with a diverse range of AI providers and models.

In fact, Semantic Routing is one of the load balancing algorithms supported by the AI Proxy Advanced Plugin. The other supported algorithms are:

- Round-robin

- Weight-based

- Lowest-usage

- Lowest-latency

- Consistent-hashing (sticky-session on given header value)

For the purpose of this blog post we are going to explore the Semantic Routing algorithm.

The diagram below shows how the AI Proxy Advanced Plugin works:

- At configuration time, the plugin sends requests to an Embeddings Model based on the descriptions defined for each target. The embeddings returned are stored in the Redis Vector Database.

- At request processing time, the plugin gets the request content and sends a VSS query to the Redis Vector Database. Depending on the similarity score, the plugin routes the request to the best-matching LLM model sitting behind the Gateway.
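The two phases above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the plugin's code: `toy_embed` is a stand-in for a real Embeddings Model, the in-memory table stands in for the Redis Vector Database, and returning `None` when no target is within the threshold is a choice made for this sketch only.

```python
import math

VOCAB = ["music", "symphony", "math", "equation"]


def toy_embed(text):
    """Stand-in embedding: counts vocabulary words (offset avoids zero vectors)."""
    words = text.lower().split()
    return [float(words.count(w)) + 0.01 for w in VOCAB]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def build_route_table(targets, embed):
    """Configuration time: embed each target description once."""
    return [(embed(t["description"]), t["model"]) for t in targets]


def route(table, prompt, embed, threshold):
    """Request time: pick the target whose description is closest to the prompt."""
    query = embed(prompt)
    distance, model = min((cosine_distance(vec, query), model) for vec, model in table)
    return model if distance <= threshold else None


TARGETS = [
    {"model": "gpt-4o-mini", "description": "classical music symphony"},
    {"model": "gpt-4", "description": "math equation"},
]
```

With `table = build_route_table(TARGETS, toy_embed)`, a prompt such as "tell me about a symphony" is routed to the music target, while "solve this equation" goes to the mathematics target.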

Here's the new decK declaration:

The main configuration sections are:

- balancer with algorithm: semantic, telling the plugin that load balancing will be based on Semantic Routing.

- embeddings with the necessary settings to reach the Embedding Model. The same observations made previously regarding the API Key remain valid here.

- vectordb with the Redis host and index configurations, as well as the threshold to drive the VSS query.

- targets: each one of them represents an LLM model. Note the description parameter is used to configure the load balancing algorithm according to the topic the model has been trained for.

As you can see, for convenience's sake, the configuration uses OpenAI models for both embeddings and targets. Just for this exploration, the targets are the gpt-4 and gpt-4o-mini OpenAI models.

After submitting the decK declaration to the Konnect Control Plane, the Redis Vector Database should have a new index defined and a key created for each target. We can then start sending requests to the Gateway. The first two requests have content related to Classical Music, so the response should come from the related model, gpt-4o-mini-2024-07-18.

Now, the next request is related to Mathematics, therefore the response comes from the other model, gpt-4-0613.

Conclusion

Kong has historically supported Redis to implement a variety of critical policies and use cases. The most recent collaborations, implemented by the Kong AI Gateway, focus on Semantic Processing where Redis Vector Similarity Search capabilities play an important role.

This blog post explored two main semantic-based use cases: Semantic Caching and Semantic Routing. Check Kong's and Redis’ documentation pages to learn more about the extensive list of API and AI Gateway use cases you can implement using both technologies.