This article will dive into applying Twelve-Factor application development principles to achieve API automation using AWS and Kong Konnect Enterprise. Using this methodology, you can make scalable and resilient apps that you can continuously deploy with maximum agility. Twelve-Factor design also lets you decouple the components of your app so that you can replace or scale each component seamlessly. Because the Twelve-Factor design principles are independent of any programming language or software stack, you can apply them to various apps or APIs going through an API gateway.

Path to API Automation Using Kong and AWS

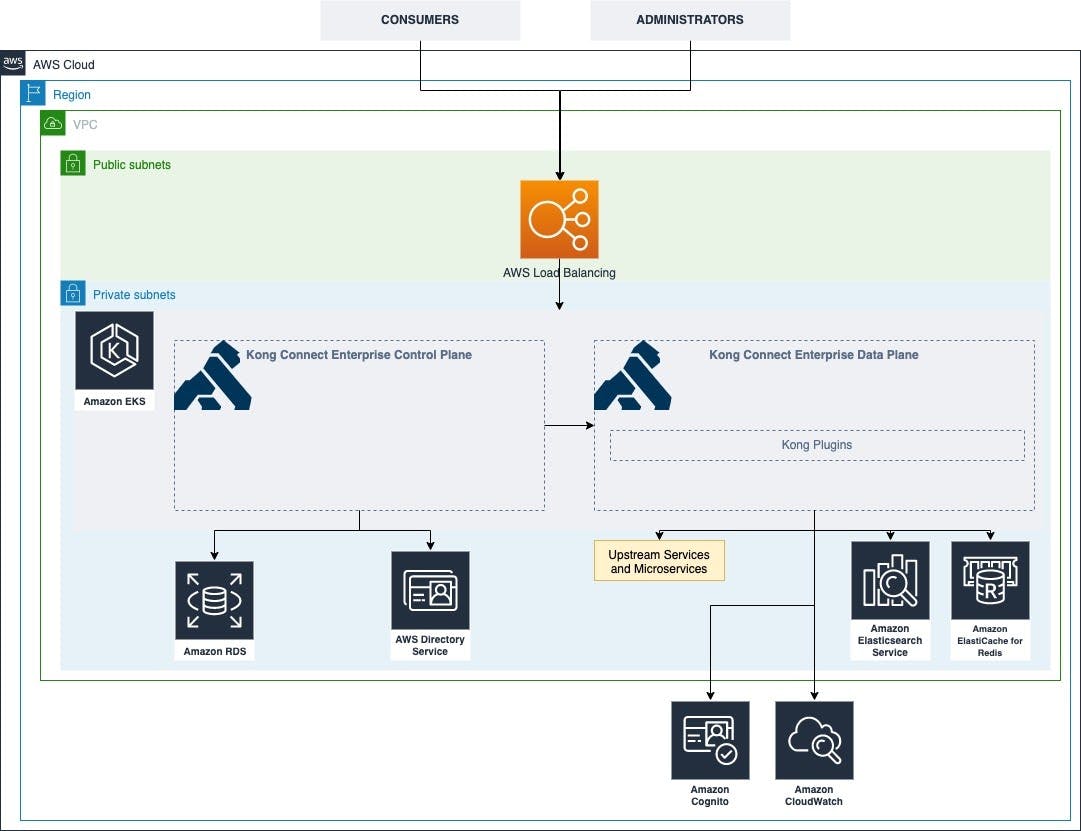

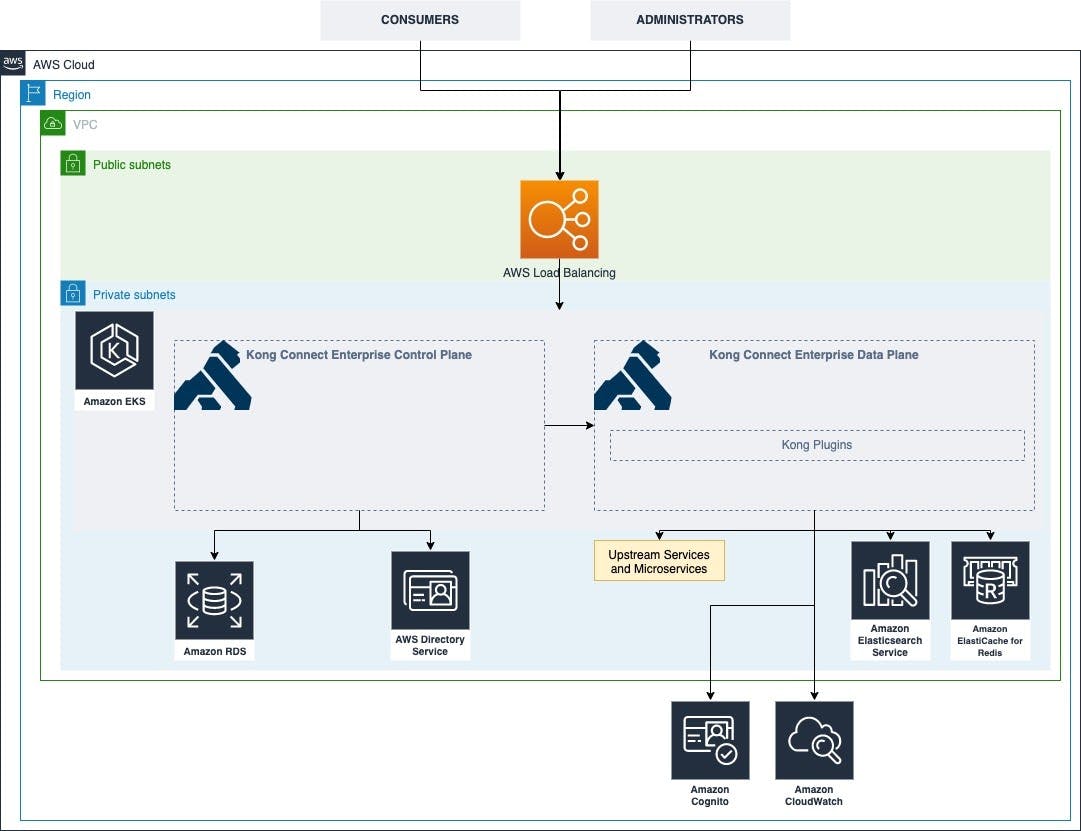

A typical installation of Kong Konnect Enterprise on Amazon Elastic Kubernetes Service (Amazon EKS) involves installing the Kong control plane and data plane on one or more clusters across multiple availability zones or regions. You can split these between on-premises infrastructure and AWS, depending on your specific use cases. Kong is a Lua application designed to load and execute Lua or Go modules (Kong plugins) on the data plane. Kong provides a set of standardized Lua modules bundled with Kong Gateway.

Plugins extend Kong Gateway with additional functionality, which lets you add features to your implementation without changing the gateway itself. Customers typically use Amazon services alongside Kong to get a managed, highly available solution out of the box. This lets Kong focus on API management while AWS handles the activities that are not specific to API management.

1. Codebase

You must manage all the code in a central source control system and use one codebase per application. This means that if your solution has two distinct applications, they should live in two separate code repositories in a version control system like Git. Storing the code in a version control system also helps your team work together by providing an audit trail of changes to the code, a systematic way of resolving conflicts, and the ability to roll back to a previous version.

Specifically for Kong, you can provision the entire infrastructure using an AWS infrastructure-as-code service. Moreover, Kong can import API specs directly from version control.

2. Dependencies

You should declare the application's dependencies explicitly. No dependency can be assumed to come from the execution environment. In a container-based application, you can handle this by using Dockerfiles.

A Dockerfile is a text-based list of instructions for building a container image. You can declare your application's dependencies in the manifest file of the programming language's packaging system and install them using the instructions in the Dockerfile.

Specifically for Kong, concerns that are not specific to any one application, like compliance monitoring and logging, authentication and authorization, and traffic control, can be offloaded to plugins. Plugins allow you to focus more on writing code for your specific APIs and the business logic you care about.

3. Configurations

The deployment environment should provide the specific configuration of your application through environment variables. The different stages of your deployment pipeline can then supply the environment-specific configuration. You can use services like AWS AppConfig, a capability of AWS Systems Manager, to create, manage, and quickly deploy application configuration. You can use AWS AppConfig with Amazon Elastic Compute Cloud (Amazon EC2) applications, AWS Lambda functions, containers, mobile applications, and even IoT devices.
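As a rough illustration of reading stage-specific settings from the environment, here is a minimal Python sketch. The variable names (`GATEWAY_ADMIN_URL`, `APP_LOG_LEVEL`, `APP_REQUEST_TIMEOUT_S`) and the `GatewayConfig` type are hypothetical and chosen only for this example; they are not defined by Kong or AWS.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class GatewayConfig:
    """Settings that are expected to differ between deployment stages."""
    admin_url: str
    log_level: str
    request_timeout_s: float


def load_config(env: Mapping[str, str] = os.environ) -> GatewayConfig:
    """Build the configuration from environment variables only."""
    # GATEWAY_ADMIN_URL (hypothetical name) has no default on purpose:
    # a missing value should fail at startup instead of silently
    # pointing at the wrong environment.
    try:
        admin_url = env["GATEWAY_ADMIN_URL"]
    except KeyError:
        raise RuntimeError("GATEWAY_ADMIN_URL must be set") from None
    return GatewayConfig(
        admin_url=admin_url,
        log_level=env.get("APP_LOG_LEVEL", "info"),
        request_timeout_s=float(env.get("APP_REQUEST_TIMEOUT_S", "5")),
    )
```

The same code can then run unchanged in every stage, for example `load_config({"GATEWAY_ADMIN_URL": "http://kong-admin.staging:8001"})` in a test, while the pipeline injects the real values at deploy time.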

Specifically for Kong infrastructure, use Helm charts and Kubernetes manifest files to install Kong Gateway and its plugins on Amazon EKS. Moreover, instead of configuring plugins through individual API calls, deploy the Kong plugins declaratively with Kubernetes manifest files.
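To make the manifest-based approach concrete, the sketch below generates a `KongPlugin` resource from Python. It assumes the Kong Ingress Controller and its `configuration.konghq.com/v1` custom resource are installed on the cluster, which the article does not state explicitly; the resource name and rate limit values are illustrative.

```python
import json


def kong_plugin_manifest(name: str, plugin: str, config: dict) -> dict:
    """Return a KongPlugin custom resource as a plain dict."""
    return {
        "apiVersion": "configuration.konghq.com/v1",
        "kind": "KongPlugin",
        "metadata": {"name": name},
        "plugin": plugin,
        "config": config,
    }


manifest = kong_plugin_manifest(
    name="rate-limit-5-per-minute",  # hypothetical resource name
    plugin="rate-limiting",          # plugin bundled with Kong Gateway
    config={"minute": 5, "policy": "local"},
)

# kubectl accepts JSON as well as YAML, so this output can be committed
# to version control and applied like any other manifest.
print(json.dumps(manifest, indent=2))
```

Because the plugin is described as data rather than created through imperative calls, it can be reviewed, versioned, and promoted through environments the same way as the rest of the Kubernetes configuration.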

4. Backing Services

Your application must treat any service that it uses to perform its business function as an attached resource. The attached resource semantics prescribe that these resources are made available to the application through configuration. Your application will be aware of the attached resource but not tightly coupled to it.
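One way to picture an attached resource is a cache that the application only knows by a URL handed to it. The following sketch is an assumption-laden example: the `CacheEndpoint` type, the host names, and the use of the `redis`/`rediss` URL schemes are illustrative choices, not something the article prescribes.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class CacheEndpoint:
    """Where the cache lives; the application knows nothing else about it."""
    host: str
    port: int
    use_tls: bool


def cache_endpoint_from_url(url: str) -> CacheEndpoint:
    """Parse a cache locator such as redis://host:6379 or rediss://host:6379."""
    parsed = urlparse(url)
    if parsed.scheme not in ("redis", "rediss"):
        raise ValueError(f"unsupported cache scheme: {parsed.scheme!r}")
    if parsed.hostname is None:
        raise ValueError("cache URL has no host")
    return CacheEndpoint(
        host=parsed.hostname,
        port=parsed.port or 6379,  # conventional Redis port as fallback
        use_tls=parsed.scheme == "rediss",
    )


# A local cache and a managed one differ only in the URL supplied by
# configuration; both host names here are placeholders.
local = cache_endpoint_from_url("redis://localhost:6379")
managed = cache_endpoint_from_url("rediss://cache.example.internal:6379")
```

Swapping the local cache for a managed one then becomes a configuration change rather than a code change, which is the loose coupling this factor asks for.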

For Kong, use managed services such as Amazon ElastiCache for caching routes, the ELK stack for discovering and visualizing data, and Amazon Cognito for authentication and authorization.

5. Build, Release and Run

AWS CodePipeline provides a mechanism to separate the deployment's build, release, and run stages, as the Twelve-Factor methodology recommends.

Specifically for Kong Gateway, fetch the API spec from version control, build it using AWS CodeBuild, and deploy it to the API gateway. You can orchestrate an end-to-end automation experience using AWS CodePipeline, or another CI/CD tool that calls Kong's Admin API.
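A pipeline step that talks to the Admin API could look roughly like the sketch below. It keeps planning separate from applying, so the planned requests can be produced and inspected in one stage and sent in a later one. The function names, the `-route` naming convention, and the example service are hypothetical; the sketch assumes the Admin API's `PUT /services/{name}` and `PUT /services/{name}/routes/{route}` endpoints, which create or update the named entity.

```python
import json
from urllib.request import Request, urlopen


def plan_requests(admin_url: str, service_name: str,
                  upstream_url: str, paths: list[str]) -> list[Request]:
    """Describe the Admin API calls for one service without sending them."""
    base = admin_url.rstrip("/")
    headers = {"Content-Type": "application/json"}
    service = Request(
        f"{base}/services/{service_name}",
        data=json.dumps({"url": upstream_url}).encode(),
        headers=headers,
        method="PUT",  # PUT keeps repeated pipeline runs idempotent
    )
    route = Request(
        f"{base}/services/{service_name}/routes/{service_name}-route",
        data=json.dumps({"paths": paths}).encode(),
        headers=headers,
        method="PUT",
    )
    return [service, route]


def apply_requests(requests: list[Request]) -> None:
    """Send the planned calls; intended to run only in the deploy stage."""
    for request in requests:
        with urlopen(request) as response:
            print(request.get_method(), request.full_url, response.status)


# Hypothetical values; a real pipeline would read them from the API spec
# and from environment-specific configuration.
planned = plan_requests(
    admin_url="http://localhost:8001",
    service_name="orders",
    upstream_url="http://orders.internal:8080",
    paths=["/orders"],
)
```

Calling `apply_requests(planned)` would then be the only step that touches a running gateway, which mirrors the separation between building a release and running it.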