I've just got off a call with one of the largest banks in Sweden, and my brain is racing with ideas. I need to get this down on paper. I want to drop everything I'm doing and spend the next week in that mental headspace where all you do is explore and live and breathe a topic, with occasional breaks for sleep, after which you race out of bed so you can go back to where you left off. You know what I mean.

What's got me so fired up?

Climate change. My eyes have been opened to the fact that, as technologists, we have a much greater and more direct responsibility to save our planet than I'd thought. We also have a greater opportunity than I'd thought.

This is good because it means there's more of a difference we can make. But this can't just be me. As vendors, as suppliers, as engineers and as architects, we have a duty.

We can be part of the movement. We need to be.

Why? It's pretty simple. We know we're consuming more resources than our planet has to offer. We're polluting it, warming it up, burning it, flooding it and ultimately killing everything on it.

We do this mainly by burning fossil fuels to generate the electricity we need to power the things we want. And IT is part of the problem.

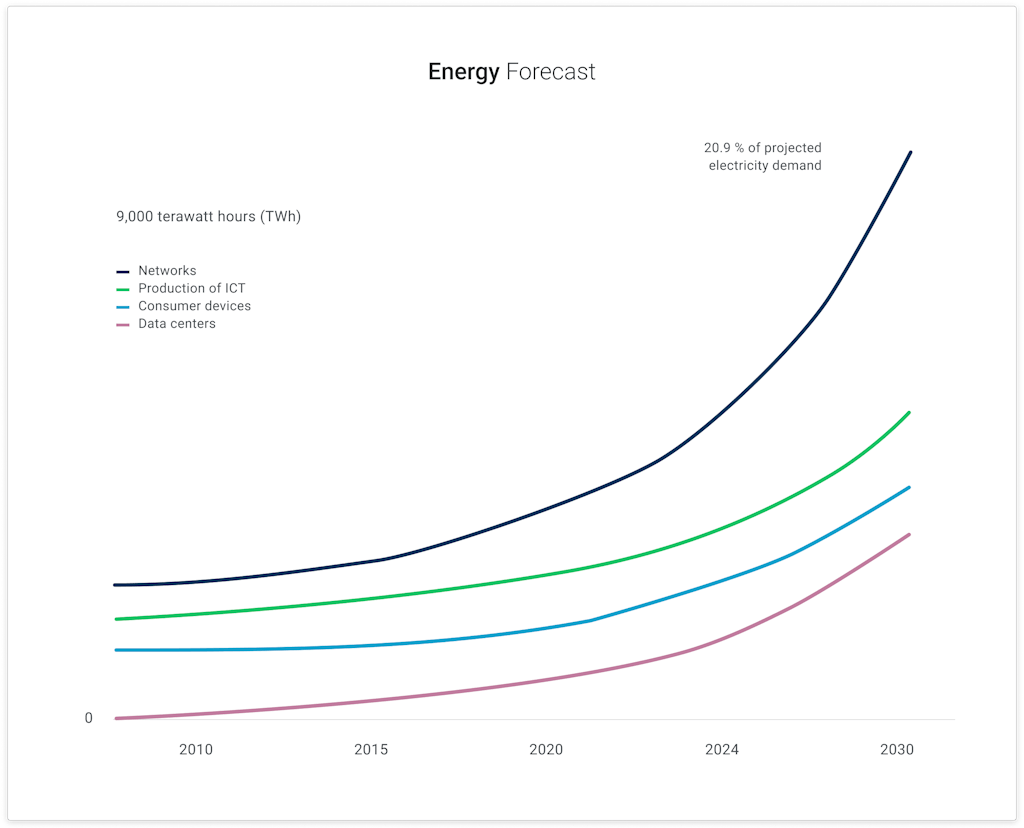

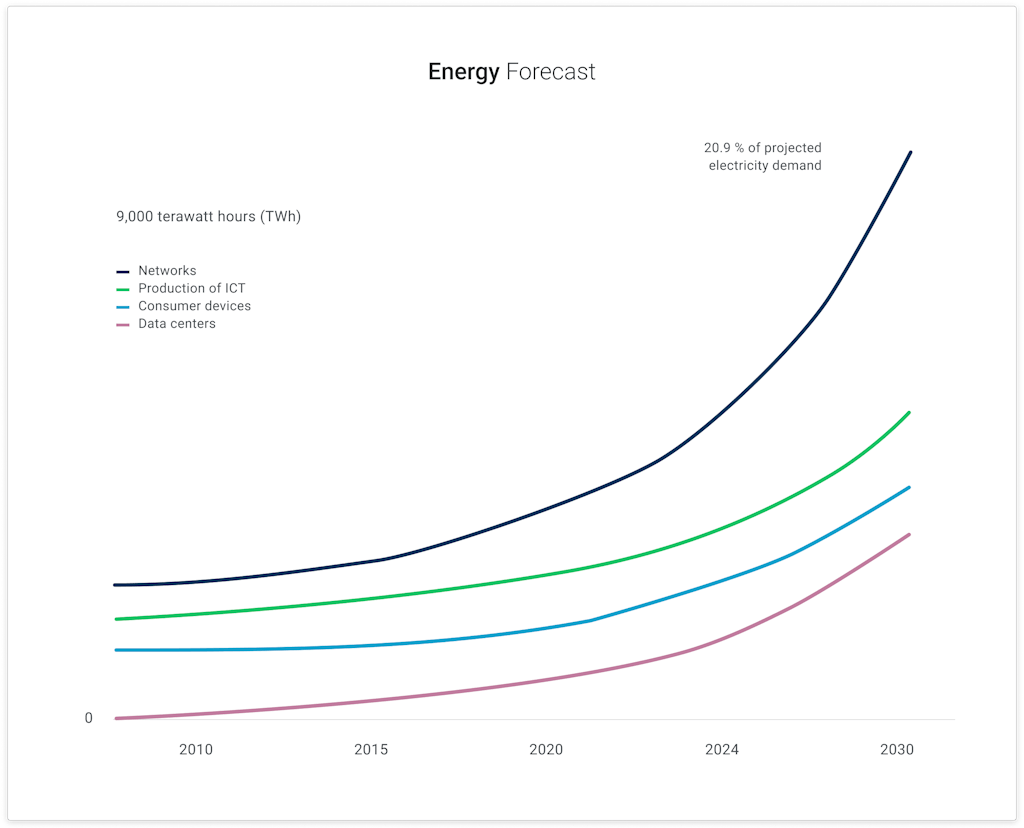

It's predicted that, within the next 10 years, IT will account for 21% of all the energy consumed in the world. Our mandates to digitally transform mean we're patting ourselves on the back celebrating new ways we delight our customers, fuelled by electricity guzzled from things our planet can't afford to give. Eighty percent of the world's electricity is still fossil-based: How much is your digital transformation costing future generations?

So what do we do? This isn't about the steps we take at home to be a good citizen: recycling, turning off appliances when not in use, or even divesting our investments. This isn't about the corporate ESG policies in our workplaces (although, if they have been done right, they should be influencing your work). This is about the way we architect our systems.

APIs' Role in Climate Change

I'm not going to claim that APIs prevent climate change. No single thing does (although switching away from fossil fuels would be a good start). What I am going to claim is that they have a role to play, and we need to be making technical and architectural decisions with this in mind.

Consider that Cisco estimates that global web traffic in 2021 exceeded 2.8 zettabytes. That equates to 21 trillion MP3 songs, or 2,658 songs for every single person on the planet. It's almost 3x the number of stars in the observable universe.

It's 560x the number of all words ever spoken by human beings, ever. And now consider that 83% of this traffic is through APIs! APIs have a VERY big role to play.

Building better APIs isn't just good for the planet and our consciences; it's good for our business too. The more we can architect to reduce energy consumption, the more we can reduce our costs as well as our impact.

And guess what? Eighty-five percent of consumers have in fact shifted their purchasing behavior to prioritize sustainability in the last five years. A third of consumers will actually pay more for products that are sustainable. Dr Andreas von der Gathen, co-CEO of Simon-Kucher, says "as expectations around sustainability climb, companies will face significant pressure to prove their sustainability credentials and continue to make it a central part of their value proposition."

In this three-part blog series, we will examine how we should use, build, deploy and adopt APIs to support sustainability goals. In the later posts, we'll examine ways to consume and innovate on top of APIs with examples from companies like Tomorrow.io, and start looking at architecture trends such as monolith to microservices through a sustainability lens. The remainder of this article is all about API production: what are the things we should have in mind as we're building our APIs?

Green and Sustainable APIs

To reduce the energy consumption of our APIs, we must ensure they are as efficient as possible. We need to eliminate unnecessary processing, minimize their infrastructure footprint, and monitor and govern their consumption so we aren't left with API sprawl leaking energy usage all over the place.

Here, we break down the key things to consider at each stage of the API lifecycle.

Designing Responsible APIs

Your APIs must be well-designed in the first place, not only to ensure they are consumable and therefore reused but also to ensure each API does what it needs to rather than what someone thinks it needs to. If you're building a Customer API, do consumers need all the data rather than a subset?

Sending 100 fields when most of the time consumers only use the top 10 means you're wasting resources: You're sending 90 unused and unhelpful bits of data every time that API is called.

Consider two versions of the API: a Simple Customer API and a Detailed Customer API. Consider building a parser to enable consumers to pass the name of the fields they want in the request (GET /customer/32944?fields=name,email). Consider building in GraphQL instead of REST so consumers can specify only the fields they need; but bear in mind consumers will need to be educated on how to make computationally efficient requests rather than always asking for everything.
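As a rough illustration of the field-selection idea, here is a minimal Python sketch that trims a record down to the fields named in the `fields` query parameter. The record contents and the `select_fields` helper are hypothetical, made up for this example; a real API would do this inside whatever framework it is built with.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical customer record; the field names are illustrative only.
CUSTOMER = {
    "id": 32944,
    "name": "Ada Lovelace",
    "email": "ada@example.com",
    "phone": "+46 8 123 456",
    "address": "1 Example Street",
}


def select_fields(record, url):
    """Return only the fields named in the ?fields= query parameter.

    If the parameter is absent, the full record is returned.
    Unknown field names are silently ignored.
    """
    query = parse_qs(urlparse(url).query)
    if "fields" not in query:
        return dict(record)
    wanted = [name.strip() for name in query["fields"][0].split(",") if name.strip()]
    return {name: record[name] for name in wanted if name in record}
```

Calling `select_fields(CUSTOMER, "/customer/32944?fields=name,email")` returns just the name and email, so the other fields never leave the server.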

Getting the design right is the first step, which is easier to do when you follow a design-first approach. Using a tool like Insomnia, you can optimize and perfect the design of your API so you can make sure what you build isn't wasteful or unnecessary.

Build and deploy

Where do your APIs live? What are they written in? What do they do? There are many architectural, design and deployment decisions we make that have an impact on the resources they use.

We need the code itself to be efficient, something that is fortunately already a priority, since a slow API makes for a bad experience. There are nuances to this, though, when we think about optimizing for energy consumption as well as performance: for example, an efficient service polling for updates every 10 seconds will be worse than an efficient service that just pushes updates when there are some.

And when there is an update, we just want the new data to be sent, not the full record. Consider the amount of traffic your APIs create, and for anything that isn't acted upon, is that traffic necessary at that time?
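To make "send just the new data" concrete, here is a small Python sketch that compares two versions of a record and produces only the difference. The `compute_delta` function and the shape of its output are assumptions made for illustration, not a standard format.

```python
_MISSING = object()  # sentinel so a stored None is not confused with "no such key"


def compute_delta(previous, current):
    """Return only what changed between two versions of a record."""
    changed = {
        key: value
        for key, value in current.items()
        if previous.get(key, _MISSING) != value
    }
    removed = [key for key in previous if key not in current]
    return {"changed": changed, "removed": removed}


def has_update(delta):
    """True only when there is something worth pushing to consumers."""
    return bool(delta["changed"] or delta["removed"])
```

A publisher could call `has_update` before pushing anything, so an unchanged record generates no traffic at all and a changed one sends only the fields that differ.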

Our deployment targets matter. Cloud vendors have significant R&D budgets to make their energy consumption as low as possible; budgets that no other company would be prepared to invest in their own data centers. However, with the annual electricity usage of the big five tech companies (Amazon, Google, Microsoft, Facebook and Apple) more or less the same as the entirety of New Zealand's, it's not as simple as moving to the cloud and the job being finished. How renewable are their energy sources? How much of their power comes from fossil fuels? The more cloud vendors see this being a factor in our evaluation of their services, the more we will compel them to prioritize sustainability as well as efficiency.

We must also consider the network traffic of our deployment topology. The more data we send, and the more data we send across networks, the more energy we use. We need to reduce any unnecessary network hops, even if the overall performance is good enough.

We must deploy our APIs near the systems they interact with, and we must deploy our gateways close to our APIs. Think how much traffic you're generating if every single API request and response has to be routed through a gateway running somewhere entirely different!

Kong's API gateway will help you in all these areas. The gateway itself is super small (think Raspberry Pi small), extremely fast and highly efficient. This means the overall footprint of your Kong instances will remain small, no matter what you throw at it: In our latest performance benchmarks, one node of Kong (8 cores) achieved a throughput of 54,250 TPS.

No dependency on a JVM, no scary scaling. Some companies have saved upwards of seven figures on their infrastructure costs just by migrating to Kong - and therefore greatly reduced their carbon footprint.

Kong is also completely deployment-agnostic. Run it where you like, when you like; distribute Kong Gateway instances in all your network zones and remove the unnecessary hops between your gateway and your APIs. Run everything in containers, or use serverless computing, or choose one of the newer, so far lesser-known "green" data centers. It doesn't matter.

Complementary to Kong Gateway for your APIs, Kong Mesh will reduce the network traffic of your microservices. By deploying proxies as a sidecar right next to each microservice, traffic only needs to travel the absolute minimum distance across each localhost rather than routing messages across network zones to a centrally deployed component.

Policies that come with Kong Mesh give you further traffic control, such as circuit breaking, which prevents unnecessary messages being sent to a service if it's not available. Built following the same philosophy as Kong Gateway, it's platform-agnostic, so you can run it across Kubernetes, VMs, bare metal and any other environment you have microservices running in, making it easy to realize these efficiency gains for all your microservices, anywhere.
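For readers unfamiliar with circuit breaking, the general pattern can be sketched in a few lines of Python. This is a simplified illustration of the idea, not how Kong Mesh implements it; the class name, thresholds and injectable clock are all assumptions chosen for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so the sketch is testable
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # The service is presumed down: do not send the request at all.
                raise CircuitOpenError("service marked unavailable; request not sent")
            # Cool-down elapsed: let one trial request through. A single
            # failure on this trial re-opens the circuit immediately.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result
```

While the circuit is open, callers fail fast locally and no message crosses the network, which is where the traffic saving comes from.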

Manage

To understand, and therefore minimize, our API traffic, we need to manage it in a gateway. Policies like rate limiting control how many requests a client can make in any given time period; why let someone make 100 requests in one minute when one would do? Why let everyone make as many requests as they like, generating an uncontrolled amount of network traffic, rather than limiting this benefit to your top-tier consumers?
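The idea of tiered rate limiting can be sketched as a simple fixed-window counter in Python. This is a generic illustration, not the implementation of any Kong plugin; the tier names and limits are hypothetical.

```python
import time
from collections import defaultdict


class FixedWindowRateLimiter:
    """Allow each client a fixed number of requests per one-minute window."""

    def __init__(self, limits_per_minute, clock=time.time):
        self.limits = limits_per_minute  # e.g. {"free": 1, "premium": 100}
        self.clock = clock               # injectable so the sketch is testable
        # (client_id, window_index) -> requests seen in that window.
        # Old windows are never purged here; a real limiter would expire them.
        self.counts = defaultdict(int)

    def allow(self, client_id, tier):
        window = int(self.clock() // 60)
        key = (client_id, window)
        if self.counts[key] >= self.limits[tier]:
            return False  # over the limit: reject without calling the API
        self.counts[key] += 1
        return True
```

With limits such as `{"free": 1, "premium": 100}`, a free-tier client gets one request per minute while top-tier consumers keep a higher allowance.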

We should also cache API responses, using Kong's proxy cache plugin for example. This prevents the API implementation code from executing anytime there's a cache hit - an immediate reduction in processing power.
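To show why a cache hit saves processing, here is a minimal time-to-live cache in Python. It is a generic sketch of response caching under assumed names (`TTLCache`, `get_or_compute`), not a description of how Kong's proxy cache plugin works internally.

```python
import time


class TTLCache:
    """Cache responses for a fixed number of seconds."""

    def __init__(self, ttl_seconds=300.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the sketch is testable
        self.store = {}     # key -> (time stored, value)
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self.store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]  # cache hit: the backend code never runs
        self.misses += 1
        value = compute()    # cache miss: call the real API implementation
        self.store[key] = (now, value)
        return value
```

A key such as `"GET /customer/32944"` could identify the request; the `hits` and `misses` counters give a rough sense of how much backend work the cache is avoiding.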

Policies give us visibility and control over every API request, so we know at all times how and if each API is used, where requests are coming from, performance and response times, and we can use this insight to optimize our API architecture.

Are there lots of requests for an API coming from a different continent to where it's hosted? Consider redeploying the API local to the demand to reduce network traffic. Are there unused APIs, sitting there idle? Consider decommissioning them to reduce your footprint. Is there a performance bottleneck? Investigate the cause and, if appropriate, consider refactoring the API implementation to be more efficient.
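As a sketch of how such questions might be answered from gateway data, the Python below scans a list of request records for unused APIs and for APIs whose traffic mostly originates outside their hosting region. The input shapes, region labels and the "more than half of requests" threshold are all assumptions for illustration.

```python
from collections import Counter, defaultdict


def review_api_usage(hosted_in, requests):
    """Flag idle APIs and APIs hosted far from most of their demand.

    hosted_in: dict mapping API name -> hosting region
    requests:  iterable of (api_name, client_region) pairs
    Returns (unused, relocate), where relocate maps API name -> busiest region.
    """
    by_api = defaultdict(Counter)
    for api, region in requests:
        by_api[api][region] += 1

    # APIs that are deployed but received no requests at all.
    unused = sorted(api for api in hosted_in if api not in by_api)

    relocate = {}
    for api, regions in by_api.items():
        if api not in hosted_in:
            continue
        top_region, top_count = regions.most_common(1)[0]
        # Assumed threshold: over half of all requests come from one other region.
        if top_region != hosted_in[api] and top_count > sum(regions.values()) / 2:
            relocate[api] = top_region
    return unused, relocate
```

The output is only a list of candidates; whether to decommission or redeploy an API still needs a human decision.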

API management is one of the necessary tools for reducing the carbon footprint of your API estate; its sole purpose is to give you visibility and control over your APIs and how they are consumed, which greatly impacts their overall energy consumption. When you use an API management platform that is itself efficient and reduces your infrastructure footprint, you are in a much better position to ensure your APIs are built and managed with sustainability in mind.

A final word

Sitting here behind our laptop screens, it's easy to feel quite far removed from the impact of climate change. We (and I put my hands up here) happily switch between Google Drive, iCloud, SaaS apps and the umpteen different applications we use day-to-day without thinking about their impact on the planet.

Thanks to privacy concerns, we have a growing awareness of how and where our data is transferred, stored and shared, but most of us do not have the same instinctive thought process when we think about carbon emissions rather than trust.

It's time to make this a default behavior. It's time to accept that, as technologists, there are better ways for us to build applications and connect systems than we've previously considered, and to brainstorm and challenge each other to find them. What I've shared here is just a starting point.

Right now, Big Oil has a (deservedly) bad rep when it comes to climate change. Unless we take action, it could be us next… as we digitize, we need to make sure we're not the next industry causing the problem.

Coming next in this series: